ML Operations

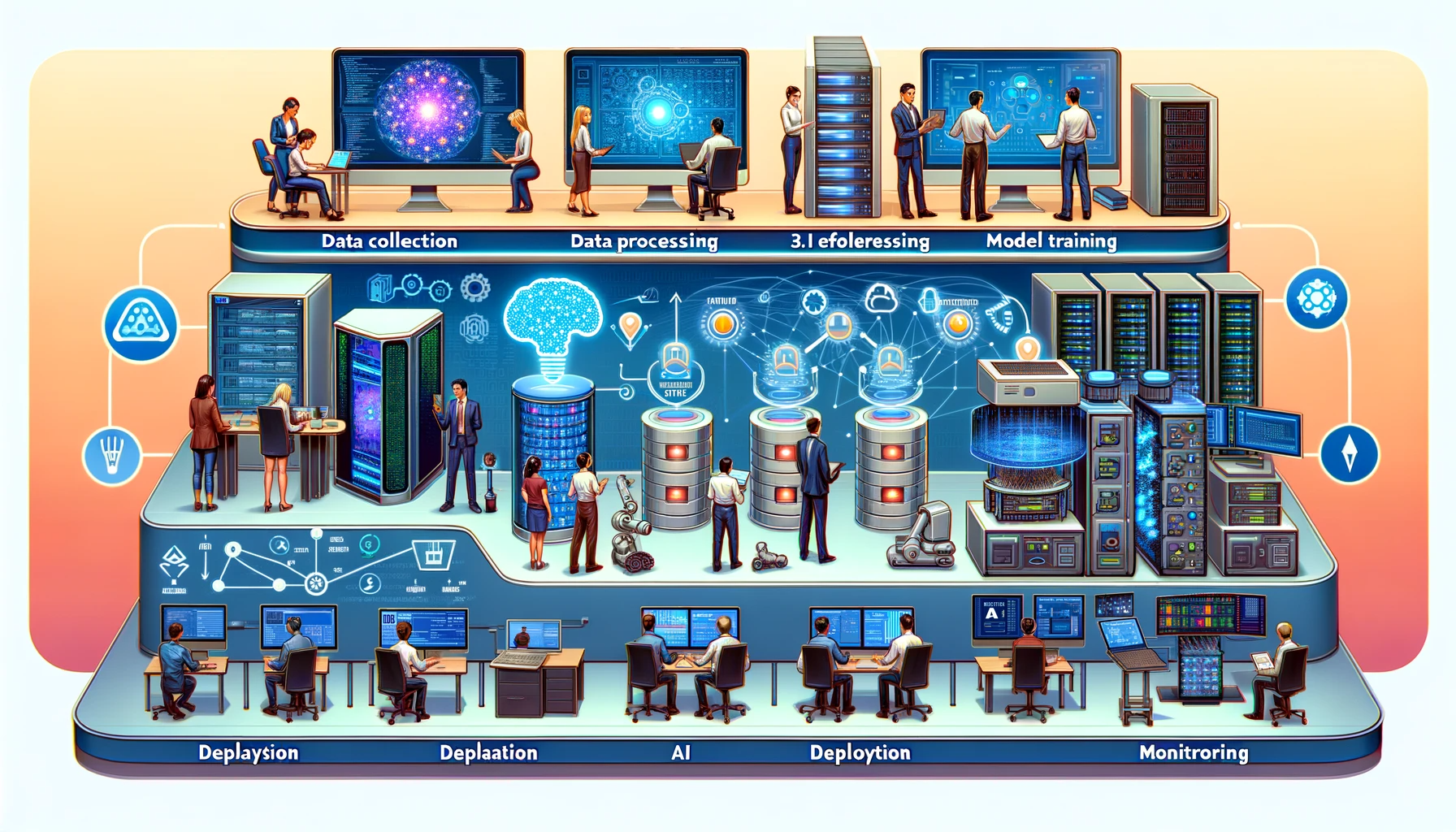

DALL·E 3 Prompt: Create a detailed, wide rectangular illustration of an AI workflow. The image should showcase the process across six stages, with a flow from left to right: 1. Data collection, with diverse individuals of different genders and descents using a variety of devices like laptops, smartphones, and sensors to gather data. 2. Data processing, displaying a data center with active servers and databases with glowing lights. 3. Model training, represented by a computer screen with code, neural network diagrams, and progress indicators. 4. Model evaluation, featuring people examining data analytics on large monitors. 5. Deployment, where the AI is integrated into robotics, mobile apps, and industrial equipment. 6. Monitoring, showing professionals tracking AI performance metrics on dashboards to check for accuracy and concept drift over time. Each stage should be distinctly marked and the style should be clean, sleek, and modern with a dynamic and informative color scheme.

Purpose

Why do machine learning prototypes that work perfectly in development often fail catastrophically when deployed to production environments?

The transition from prototype models to reliable production systems presents significant engineering challenges. Research models trained on clean datasets encounter production environments with shifting data distributions, evolving user behaviors, and unexpected system failures. Unlike traditional software that executes deterministic logic, machine learning systems exhibit probabilistic behavior that degrades silently as real-world conditions diverge from training assumptions. This instability requires operational practices that detect performance degradation before it affects users, automatically retrain models as data evolves, and maintain system reliability despite prediction uncertainty. Success demands engineering disciplines that bridge experimental validation and production reliability, enabling organizations to deploy models that remain effective throughout their operational lifespan.

Differentiate between traditional software failures and ML system silent failures to explain why MLOps emerged as a distinct engineering discipline

Analyze technical debt patterns (boundary erosion, correction cascades, data dependencies) in ML systems and propose systematic engineering solutions

Design CI/CD pipelines that address ML-specific challenges including model validation, data versioning, and automated retraining workflows

Evaluate monitoring strategies for production ML systems that detect both traditional system metrics and ML-specific indicators like data drift and prediction confidence

Implement deployment patterns for diverse environments including cloud services, edge devices, and federated learning systems

Assess organizational maturity levels for effective MLOps implementation

Compare MLOps adaptations across domains by analyzing how specialized requirements (healthcare, embedded systems) reshape operational frameworks

Create governance frameworks that ensure model reproducibility, auditability, and compliance in regulated environments

Introduction to Machine Learning Operations

Traditional software fails loudly with error messages and stack traces; machine learning systems fail silently. As introduced in Chapter 1: Introduction, the Silent Failure Problem is a defining characteristic of ML systems: performance degrades gradually as data distributions shift, user behaviors evolve, and model assumptions become outdated, all without raising any alarms. MLOps is the engineering discipline designed to make those silent failures visible and manageable. It provides the monitoring, automation, and governance required to ensure that data-driven systems remain reliable in production, even as the world around them changes.

Machine learning systems require more than algorithmic innovation; they need systematic engineering practices for reliable production deployment. While Chapter 14: On-Device Learning explored distributed learning under resource constraints and Chapter 16: Robust AI established fault tolerance methodologies, the security framework from Chapter 15: Security & Privacy becomes essential for production deployment. Machine Learning Operations (MLOps)1 provides the disciplinary framework that synthesizes these specialized capabilities into coherent production architectures. This operational discipline addresses the challenge of translating experimental success into sustainable system performance, integrating adaptive learning, security protocols, and resilience mechanisms within complex production ecosystems.

1 MLOps Emergence: While D. Sculley and colleagues at Google identified machine learning operations challenges in their influential 2015 paper “Hidden Technical Debt in Machine Learning Systems” (Sculley et al. 2021), the term “MLOps” itself was coined around 2018 as the discipline matured. The field emerged as organizations like Netflix, Uber, and Airbnb faced the “last mile” problem: according to industry surveys and anecdotal reports, approximately 90% of ML models never made it to production because of operational challenges.

MLOps (Section 1.2.2) systematically integrates machine learning methodologies, data science practices, and software engineering principles to enable automated, end-to-end lifecycle management. This operational paradigm bridges experimental validation and production deployment, ensuring that validated models maintain their performance characteristics while adapting to real-world operational environments.

Consider deploying a demand prediction system for ridesharing services. While controlled experimental validation may demonstrate superior accuracy and latency characteristics, production deployment introduces challenges that extend beyond algorithmic performance. Data streams exhibit varying quality, temporal patterns undergo seasonal variations, and prediction services must satisfy strict availability requirements while maintaining real-time response capabilities. MLOps provides the framework needed to address these operational complexities.

As an engineering discipline, MLOps establishes standardized protocols, tools, and workflows that facilitate the transition of validated models from experimental environments to production systems. The discipline promotes collaboration by formalizing interfaces and defining responsibilities across traditionally isolated domains, including data science, machine learning engineering, and systems operations2. This approach enables continuous integration and deployment practices adapted for machine learning contexts, supporting iterative model refinement, validation, and deployment while preserving system stability and operational reliability.

2 DevOps Origins: The “wall of confusion” between development and operations teams was so notorious that Patrick Debois called his 2009 conference “DevOpsDays” specifically to bridge this gap. The movement emerged from the frustrations of the “throw it over the wall” mentality where developers built software in isolation from operations teams who had to deploy and maintain it.

Building on these operational foundations, mature MLOps methodologies transform how organizations manage machine learning systems through automation and monitoring frameworks. These practices enable continuous model retraining as new data becomes available, evaluation of alternative architectures against production baselines, controlled deployment of experimental modifications through graduated rollout strategies, and real-time performance assessment without compromising operational continuity. This operational flexibility ensures sustained model relevance while maintaining system reliability standards.

Beyond operational efficiency, MLOps encompasses governance frameworks and accountability mechanisms that become critical as systems scale. MLOps standardizes the tracking of model versions, data lineage documentation, and configuration parameter management, establishing reproducible and auditable artifact trails. This rigor proves essential in regulated domains where model interpretability and operational provenance constitute compliance requirements.

The practical benefits of this methodological rigor become evident in organizational outcomes. Evidence demonstrates that organizations adopting mature MLOps methodologies achieve significant improvements in deployment reliability, accelerated time-to-market cycles, and enhanced system maintainability3. The disciplinary framework enables sustainable scaling of machine learning systems while preserving the performance characteristics validated during benchmarking phases, ensuring operational fidelity to experimental results.

3 MLOps Business Impact: Companies implementing mature MLOps practices report significant improvements in deployment speed (reducing time from months to weeks), substantial reductions in model debugging time, and improved model reliability. Organizations with mature MLOps practices consistently achieve higher model success rates moving from pilot to production compared to those using ad hoc approaches.

This methodology of machine learning operations provides the pathway for transforming theoretical innovations into sustainable production capabilities. This chapter establishes the engineering foundations needed to bridge the gap between experimentally validated systems and operationally reliable production deployments. The analysis focuses particularly on centralized cloud computing environments, where monitoring infrastructure and management capabilities enable the implementation of mature operational practices for large-scale machine learning systems.

While Chapter 10: Model Optimizations and Chapter 9: Efficient AI establish optimization foundations, this chapter extends these techniques to production contexts requiring continuous maintenance and monitoring. The empirical benchmarking approaches established in Chapter 12: Benchmarking AI provide the methodological foundation for production performance assessment, while system reliability patterns emerge as critical determinants of operational availability. MLOps integrates these diverse technical foundations into unified operational workflows, systematically addressing the fundamental challenge of transitioning from model development to sustainable production deployment.

This chapter examines the theoretical foundations and practical motivations underlying MLOps, traces its disciplinary evolution from DevOps methodologies, and identifies the principal challenges and established practices that inform its adoption in contemporary machine learning system architectures.

Historical Context

Understanding this evolution from DevOps to MLOps clarifies why traditional operational practices require adaptation for machine learning systems. The following examination of this historical development reveals the specific challenges that motivated MLOps as a distinct discipline.

MLOps has its roots in DevOps, a set of practices that combines software development (Dev) and IT operations (Ops) to shorten the development lifecycle and support the continuous delivery of high-quality software. DevOps and MLOps both emphasize automation, collaboration, and iterative improvement. However, while DevOps emerged to address challenges in software deployment and operational management, MLOps evolved in response to the unique complexities of machine learning workflows, especially those involving data-driven components (Breck et al. 2017). Understanding this evolution is important for appreciating the motivations and structure of modern ML systems.

DevOps

The term DevOps was coined in 2009 by Patrick Debois, a consultant and Agile practitioner who organized the first DevOpsDays conference in Ghent, Belgium. DevOps extended the principles of the Agile movement, which emphasized close collaboration among development teams and rapid, iterative releases, by bringing IT operations into the fold.

This innovation addressed a core problem in traditional software pipelines, where development and operations teams worked in silos, creating inefficiencies, delays, and misaligned priorities. DevOps emerged as a response, advocating shared ownership, infrastructure as code4, and automation to streamline deployment pipelines.

4 Infrastructure as Code: The concept emerged from the painful lessons of “snowflake servers”, unique, manually-configured systems that were impossible to reproduce. Luke Kanies created Puppet in 2005 after experiencing the nightmare of managing hundreds of custom-configured servers at various startups.

5 Jenkins Origins: Originally called “Hudson,” Jenkins was created by Kohsuke Kawaguchi at Sun Microsystems in 2004 to automate his own tedious testing processes. The name change to “Jenkins” came in 2011 after a trademark dispute with Oracle; the new name evokes the archetypal English butler.

6 Kubernetes Origins: Greek for “helmsman,” Kubernetes emerged from Google’s internal Borg system that managed billions of containers across their data centers. Google open-sourced it in 2014, realizing that their competitive advantage wasn’t the orchestration system itself, but how they used it to run services at planetary scale.

7 Containerization and Orchestration: Docker containers package applications with all their dependencies into standardized, portable units that run consistently across different computing environments, isolating software from infrastructure variations. Kubernetes orchestrates these containers at scale, automating deployment, load balancing, scaling, and recovery across clusters of machines. Together, they enable the reproducible, automated infrastructure management essential for modern MLOps, where models and their serving environments must be deployed consistently across development, staging, and production.

To support these principles, tools such as Jenkins5, Docker, and Kubernetes6,7 became foundational for implementing continuous integration and continuous delivery (CI/CD) practices.

Through automation and feedback loops, DevOps promotes collaboration while reducing time-to-release and improving software reliability. This success established the cultural and technical groundwork for extending similar principles to the ML domain.

MLOps

While DevOps achieved considerable success in traditional software deployment, machine learning systems introduced new challenges that required further adaptation. MLOps builds on the DevOps foundation but addresses the specific demands of ML system development and deployment. Where DevOps focuses on integrating and delivering deterministic software, MLOps must manage non-deterministic, data-dependent workflows. These workflows span data acquisition, preprocessing, model training, evaluation, deployment, and continuous monitoring (see Figure 1).

The operational complexity and business risk of deploying machine learning without systematic engineering practices become clear when examining real-world failures. Consider a retail company that deployed a recommendation model that initially boosted sales by 15%. However, due to a silent data drift issue, the model’s accuracy degraded over six months, eventually reducing sales by 5% compared to the original system. The problem went undetected because monitoring focused on system uptime rather than model performance metrics. The company lost an estimated $10 million in revenue before the issue was discovered during routine quarterly analysis. This scenario, common in early ML deployments, illustrates why MLOps, with its emphasis on continuous model monitoring and automated retraining, is not merely an engineering best practice but a business necessity for organizations that depend on machine learning systems for critical operations.

This adaptation was driven by several recurring challenges in operationalizing machine learning that distinguished it from traditional software deployment. Data drift8, where shifts in input data distributions over time degrade model accuracy, requires continuous monitoring and automated retraining procedures.

8 Data Drift Discovery: The concept was first formalized by researchers studying spam detection systems in the early 2000s, who noticed that spam patterns evolved so rapidly that models became obsolete within weeks. This led to the realization that ML systems face a different challenge than traditional software: their environment actively adapts to defeat them.
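To make drift monitoring concrete, the following is a minimal sketch of one common drift statistic, the Population Stability Index (PSI), computed between a reference sample (for example, training data) and a recent production sample of a single numeric feature. The function name `population_stability_index`, the bin edges, and the 0.25 alert threshold are illustrative assumptions rather than part of any particular monitoring tool; the threshold is a widely quoted rule of thumb, not a guarantee.

```python
import math


def population_stability_index(reference, current, bin_edges, eps=1e-6):
    """PSI between two samples of one numeric feature, using shared bins.

    bin_edges must be sorted ascending; values below the first edge and
    at or above the last edge fall into the two outer bins.
    """
    if not reference or not current:
        raise ValueError("both samples must be non-empty")

    def proportions(values):
        counts = [0] * (len(bin_edges) + 1)
        for v in values:
            # Number of edges that v has reached gives the bin index.
            counts[sum(v >= edge for edge in bin_edges)] += 1
        # Floor at eps so empty bins do not produce log(0).
        return [max(c / len(values), eps) for c in counts]

    ref_p = proportions(reference)
    cur_p = proportions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_p, cur_p))


# Hypothetical data: the production sample has shifted toward larger values.
reference = [i / 100 for i in range(100)]        # spread over [0, 1)
current = [0.5 + i / 200 for i in range(100)]    # concentrated in [0.5, 1)
edges = [0.25, 0.5, 0.75]

ALERT_THRESHOLD = 0.25  # assumed rule-of-thumb cutoff for "significant" drift
psi = population_stability_index(reference, current, edges)
if psi > ALERT_THRESHOLD:
    print(f"drift alert: PSI={psi:.2f}")
```

In a production setting, a check like this would typically run on a schedule for each monitored feature, with an alert feeding a retraining or investigation workflow.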

9 ML Reproducibility Crisis: A 2016 study by Collberg and Proebsting found that only 54% of computer systems research papers could be reproduced even when authors were available to assist (Collberg and Proebsting 2016). This reproducibility challenge is even more acute in ML research, though the situation has improved with initiatives like Papers with Code and requirements for code submission at major ML conferences.

Building on this data-centric challenge, reproducibility9 presents another issue. ML workflows lack standardized mechanisms to track code, datasets, configurations, and environments, making it difficult to reproduce past experiments (Schelter et al. 2018). The lack of explainability in complex models has driven demand for tools that increase model transparency and interpretability, particularly in regulated domains.
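One way to picture what a standardized tracking mechanism records is a run manifest that fingerprints every input to a training run. The sketch below is illustrative only: `fingerprint`, `build_manifest`, and the field names are hypothetical, and small in-memory rows stand in for dataset files whose contents a real system would hash instead.

```python
import hashlib
import json


def fingerprint(obj):
    """Stable SHA-256 hex digest of any JSON-serializable object."""
    payload = json.dumps(obj, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def build_manifest(code_version, dataset_rows, config, environment):
    """Collect the four ingredients needed to reproduce a training run."""
    manifest = {
        "code_version": code_version,            # e.g. a Git commit hash
        "data_sha256": fingerprint(dataset_rows),
        "config_sha256": fingerprint(config),
        "environment": dict(sorted(environment.items())),
    }
    # The run ID changes whenever any ingredient changes.
    manifest["run_id"] = fingerprint(manifest)[:12]
    return manifest


# Hypothetical run inputs.
rows = [{"x": 1.0, "y": 0}, {"x": 2.5, "y": 1}]
config = {"learning_rate": 0.01, "epochs": 5}
env = {"python": "3.11", "numpy": "1.26"}

original = build_manifest("abc123", rows, config, env)
rerun = build_manifest("abc123", rows, config, env)
edited = build_manifest("abc123", rows + [{"x": 9.9, "y": 1}], config, env)

print(original["run_id"] == rerun["run_id"])    # identical inputs, identical ID
print(original["run_id"] == edited["run_id"])   # silently updated data is detected
```

Storing such a manifest alongside each trained model makes it possible to detect later that the training data or configuration was quietly changed.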

Beyond these foundational challenges, organizations face additional operational complexities. Post-deployment monitoring of model performance proves difficult, especially in detecting silent failures or changes in user behavior. The manual overhead involved in retraining and redeploying models creates friction in experimentation and iteration. Configuring and maintaining ML infrastructure is complex and error-prone, highlighting the need for platforms that offer optimized, modular, and reusable infrastructure. Together, these challenges form the foundation for MLOps practices that focus on automation, collaboration, and lifecycle management.

In response to these distinct challenges, the field developed specialized tools and workflows tailored to the ML lifecycle. Building on DevOps foundations while addressing ML-specific requirements, MLOps coordinates a broader stakeholder ecosystem and introduces specialized practices such as data versioning10, model versioning, and model monitoring that extend beyond traditional DevOps scope. These practices are detailed in Table 1:

10 DVC Creation Story: Data Version Control was born from the frustration of Dmitry Petrov, who spent weeks trying to reproduce an experiment only to discover the training data had been quietly updated. He created DVC in 2017 to bring Git-like versioning to data science, solving what he called “the biggest unsolved problem in machine learning.”

| Aspect | DevOps | MLOps |
|---|---|---|
| Objective | Streamlining software development and operations processes | Optimizing the lifecycle of machine learning models |
| Methodology | Continuous Integration and Continuous Delivery (CI/CD) for software development | Similar to CI/CD but focuses on machine learning workflows |
| Primary Tools | Version control (Git), CI/CD tools (Jenkins, Travis CI), Configuration management (Ansible, Puppet) | Data versioning tools, Model training and deployment tools, CI/CD pipelines tailored for ML |
| Primary Concerns | Code integration, Testing, Release management, Automation, Infrastructure as code | Data management, Model versioning, Experiment tracking, Model deployment, Scalability of ML workflows |
| Typical Outcomes | Faster and more reliable software releases, Improved collaboration between development and operations teams | Efficient management and deployment of machine learning models, Enhanced collaboration between data scientists and engineers |

With these foundational distinctions established, we must first understand the unique operational challenges that motivate sophisticated MLOps practices before examining the infrastructure and practices designed to address them.

Technical Debt and System Complexity

While the DevOps foundation provides automation and collaboration principles, machine learning systems introduce unique forms of complexity that require engineering approaches to manage effectively. Unlike traditional software where broken code fails immediately, ML systems can degrade silently through data changes, model interactions, and evolving requirements. While federated learning systems face unique coordination challenges (Chapter 14: On-Device Learning) and robust systems require careful monitoring (Chapter 16: Robust AI), all deployment contexts must balance operational efficiency with security requirements. Understanding these operational challenges, collectively known as technical debt, is essential for motivating the engineering solutions and practices that follow.

As machine learning systems mature and scale, this complexity accumulates as technical debt: the long-term cost of expedient design decisions made during development. Originally proposed in software engineering in the 1990s11, this metaphor compares shortcuts in implementation to financial debt: they may enable short-term velocity, but they require ongoing interest payments in the form of maintenance, refactoring, and systemic risk.

11 Technical Debt Origins: Ward Cunningham coined the term in 1992, comparing rushed coding decisions to financial debt: “A little debt speeds development so long as it is paid back promptly with a rewrite.” He later regretted that the metaphor became an excuse for bad code rather than a tool for communicating tradeoffs.

These operational challenges manifest in several distinct patterns that teams encounter as their ML systems evolve. Rather than cataloging every debt pattern, we focus on representative examples that illustrate the engineering approaches MLOps provides. Each challenge emerges from unique characteristics of machine learning workflows: their reliance on data rather than deterministic logic, their statistical rather than exact behavior, and their tendency to create implicit dependencies through data flows rather than explicit interfaces.

The following technical debt patterns demonstrate why traditional DevOps practices require extension for ML systems, motivating the infrastructure solutions presented in subsequent sections.

Building on this systems perspective, we examine key categories of technical debt unique to ML systems (Figure 3). Each subsection highlights common sources, illustrative examples, and engineering solutions that address these challenges. While some forms of debt may be unavoidable during early development, understanding their causes and impact enables engineers to design robust and maintainable ML systems through disciplined architectural practices and appropriate tooling choices.

Boundary Erosion

In traditional software systems, modularity and abstraction provide clear boundaries between components, allowing changes to be isolated and behavior to remain predictable. Machine learning systems, in contrast, tend to blur these boundaries. The interactions between data pipelines, feature engineering, model training, and downstream consumption often lead to tightly coupled components with poorly defined interfaces.

This erosion of boundaries makes ML systems particularly vulnerable to cascading effects from even minor changes. A seemingly small update to a preprocessing step or feature transformation can propagate through the system in unexpected ways, breaking assumptions made elsewhere in the pipeline. This lack of encapsulation increases the risk of entanglement, where dependencies between components become so intertwined that local modifications require global understanding and coordination.

One manifestation of this problem is known as CACE (Changing Anything Changes Everything). When systems are built without strong boundaries, adjusting a feature encoding, model hyperparameter, or data selection criterion can affect downstream behavior in unpredictable ways. This inhibits iteration and makes testing and validation more complex. For example, changing the binning strategy of a numerical feature may cause a previously tuned model to underperform, triggering retraining and downstream evaluation changes.

To mitigate boundary erosion, teams should prioritize architectural practices that support modularity and encapsulation. Designing components with well-defined interfaces allows teams to isolate faults, reason about changes, and reduce the risk of system-wide regressions. For instance, clearly separating data ingestion from feature engineering, and feature engineering from modeling logic, introduces layers that can be independently validated, monitored, and maintained.
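A well-defined interface between feature engineering and modeling can be as simple as a declared schema that every batch is checked against before it crosses the boundary. The sketch below assumes a hypothetical `FeatureSpec` contract and a `validate_batch` helper; it is not the API of any specific validation library, only an illustration of the idea.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FeatureSpec:
    """Declared contract for one feature crossing a pipeline boundary."""
    name: str
    dtype: type
    min_value: float
    max_value: float


def validate_batch(rows, contract):
    """Return violation messages; an empty list means the batch conforms."""
    errors = []
    for i, row in enumerate(rows):
        for spec in contract:
            if spec.name not in row:
                errors.append(f"row {i}: missing feature '{spec.name}'")
                continue
            value = row[spec.name]
            if not isinstance(value, spec.dtype):
                errors.append(
                    f"row {i}: '{spec.name}' expected {spec.dtype.__name__}, "
                    f"got {type(value).__name__}"
                )
            elif not spec.min_value <= value <= spec.max_value:
                errors.append(f"row {i}: '{spec.name}'={value} outside declared range")
    return errors


# Hypothetical contract: the model was tuned on five age buckets (0 to 4).
CONTRACT = [
    FeatureSpec("age_bucket", int, 0, 4),
    FeatureSpec("income_scaled", float, 0.0, 1.0),
]

# An upstream change to the binning strategy now emits bucket 7.
batch = [
    {"age_bucket": 2, "income_scaled": 0.4},
    {"age_bucket": 7, "income_scaled": 0.9},
]
for problem in validate_batch(batch, CONTRACT):
    print(problem)
```

With a check like this at the boundary, a change to the binning strategy surfaces as an explicit contract violation at the interface instead of as an unexplained drop in model quality downstream.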

Boundary erosion is often invisible in early development but becomes a significant burden as systems scale or require adaptation. However, established software engineering practices can effectively prevent and mitigate this problem. Proactive design decisions that preserve abstraction and limit interdependencies, combined with systematic testing and interface documentation, provide practical solutions for managing complexity and avoiding long-term maintenance costs.

This challenge arises because ML systems operate with statistical rather than logical guarantees, making traditional software engineering boundaries harder to enforce. Understanding why boundary erosion occurs so frequently requires examining how machine learning workflows differ from conventional software development.

Boundary erosion in ML systems violates established software engineering principles, particularly the Law of Demeter (also known as the principle of least knowledge). While traditional software achieves modularity through explicit interfaces and information hiding, ML systems create implicit couplings through data flows that bypass these explicit boundaries.

The CACE phenomenon resembles a breakdown of the Liskov Substitution Principle, where component modifications violate behavioral contracts expected by dependent components. Unlike traditional software with compile-time guarantees, ML systems operate with statistical behavior that creates inherently different coupling patterns.

The challenge lies in reconciling traditional modularity concepts with the inherently interconnected nature of ML workflows, where statistical dependencies and data-driven behavior create coupling patterns that traditional software engineering frameworks were not designed to handle.

Correction Cascades

As machine learning systems evolve, they often undergo iterative refinement to address performance issues, accommodate new requirements, or adapt to environmental changes. In well-engineered systems, such updates are localized and managed through modular changes. However, in ML systems, even small adjustments can trigger correction cascades, a sequence of dependent fixes that propagate backward and forward through the workflow.

Figure 4 visualizes how these cascading effects emerge and propagate across the stages of the ML lifecycle, from problem definition and data collection to model development and deployment. Each arc represents a corrective action, and the colors indicate different sources of instability, including inadequate domain expertise, brittle real-world interfaces, misaligned incentives, and insufficient documentation. The red arrows represent cascading revisions, while the dotted arrow at the bottom highlights a full system restart, a drastic but sometimes necessary outcome. Understanding the structure of these cascades helps teams anticipate and mitigate their impact.

One common source of correction cascades is sequential model development: reusing or fine-tuning existing models to accelerate development for new tasks. While this strategy is often efficient, it can introduce hidden dependencies that are difficult to unwind later. Assumptions baked into earlier models become implicit constraints for future models, limiting flexibility and increasing the cost of downstream corrections.

Consider a scenario where a team fine-tunes a customer churn prediction model for a new product. The original model may embed product-specific behaviors or feature encodings that are not valid in the new setting. As performance issues emerge, teams may attempt to patch the model, only to discover that the true problem lies several layers upstream, perhaps in the original feature selection or labeling criteria.

To avoid or reduce the impact of correction cascades, teams must make careful tradeoffs between reuse and redesign. Several factors influence this decision. For small, static datasets, fine-tuning may be appropriate. For large or rapidly evolving datasets, retraining from scratch provides greater control and adaptability. Fine-tuning also requires fewer computational resources, making it attractive in constrained settings. However, modifying foundational components later becomes extremely costly due to these cascading effects.

Therefore, careful consideration should be given to introducing fresh model architectures, even if resource-intensive, to avoid correction cascades down the line. This approach may help mitigate the amplifying effects of issues downstream and reduce technical debt. However, there are still scenarios where sequential model building makes sense, necessitating a thoughtful balance between efficiency, flexibility, and long-term maintainability in the ML development process.

To understand why correction cascades occur so persistently in ML systems despite best practices, it helps to examine the underlying mechanisms that drive this phenomenon. The correction cascade pattern emerges from hidden feedback loops that violate system modularity principles established in software engineering. When model A’s outputs influence model B’s training data, this creates implicit dependencies that undermine modular design. These dependencies are particularly insidious because they operate through data flows rather than explicit code interfaces, making them invisible to traditional dependency analysis tools.

From a systems theory perspective, correction cascades represent instances of tight coupling between supposedly independent components. Cascade propagation is often highly nonlinear: small initial changes can trigger disproportionately large system-wide modifications. This phenomenon parallels the butterfly effect in complex systems, where minor perturbations amplify through nonlinear interactions.

Understanding these theoretical foundations helps engineers recognize that preventing correction cascades requires not just better tooling, but architectural decisions that preserve system modularity even in the presence of learning components. The challenge lies in designing ML systems that maintain loose coupling despite the inherently interconnected nature of data-driven workflows.

Interface and Dependency Challenges

Unlike traditional software where component interactions occur through explicit APIs, ML systems often develop implicit dependencies through data flows and shared outputs. Two critical patterns illustrate these challenges:

Undeclared Consumers: Model outputs frequently serve downstream components without formal tracking or interface contracts. When models evolve, these hidden dependencies can break silently. For example, a credit scoring model’s outputs might feed an eligibility engine, which influences future applicant pools and training data, creating untracked feedback loops that bias model behavior over time.

Data Dependency Debt: ML pipelines accumulate unstable and underutilized data dependencies that become difficult to trace or validate. Feature engineering scripts, data joins, and labeling conventions lack the dependency analysis tools available in traditional software development. When data sources change structure or distribution, downstream models can fail unexpectedly.

Engineering Solutions: These challenges require systematic approaches including strict access controls for model outputs, formal interface contracts with documented schemas, data versioning and lineage tracking systems, and comprehensive monitoring of prediction usage patterns. The MLOps infrastructure patterns presented in subsequent sections provide concrete implementations of these solutions.
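A formal interface contract can be as simple as a validated output type plus an explicit list of who consumes it. The following is a minimal sketch under stated assumptions: `CreditScoreOutput`, `CONSUMERS`, the consumer names, and the score range are all hypothetical and chosen only to mirror the credit scoring example above.

```python
from dataclasses import dataclass

# Hypothetical contract for a credit scoring model's output.
@dataclass(frozen=True)
class CreditScoreOutput:
    schema_version: str
    applicant_id: str
    score: float  # assumed here to be a probability in [0, 1]

    def __post_init__(self):
        # Reject records that violate the documented range.
        if not 0.0 <= self.score <= 1.0:
            raise ValueError(f"score out of range: {self.score}")

# Explicit registry of downstream consumers and the schema version each expects
# (names are illustrative, not taken from any real system).
CONSUMERS = {"eligibility_engine": "1.0", "risk_dashboard": "1.0"}

def consumers_broken_by(new_version: str) -> list[str]:
    """Return consumers that have not declared support for new_version."""
    return sorted(name for name, v in CONSUMERS.items() if v != new_version)
```

Calling `consumers_broken_by` before a release turns an otherwise silent break into a visible checklist of teams to notify.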

System Evolution Challenges

As ML systems mature, they face unique evolution challenges that differ fundamentally from traditional software:

Feedback Loops: Models influence their own future behavior through the data they generate. Recommendation systems exemplify this: suggested items shape user clicks, which become training data, potentially creating self-reinforcing biases. These loops undermine data independence assumptions and can mask performance degradation for months.

Pipeline and Configuration Debt: ML workflows often evolve into “pipeline jungles” of ad hoc scripts and fragmented configurations. Without modular interfaces, teams build duplicate pipelines rather than refactor brittle ones, leading to inconsistent processing and maintenance burden.

Early-Stage Shortcuts: Rapid prototyping encourages embedding business logic in training code and undocumented configuration changes. While necessary for innovation, these shortcuts become liabilities as systems scale across teams.

Engineering Solutions: Managing evolution requires architectural discipline including cohort-based monitoring for loop detection, modular pipeline design with workflow orchestration tools, and treating configuration as a first-class system component with versioning and validation.
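Treating configuration as a first-class component usually means giving it a type, a validation step, and a stable identifier that can be logged with every run. The sketch below assumes a hypothetical `TrainingConfig` with three illustrative fields; real systems will have their own fields and validation rules.

```python
import hashlib
import json
from dataclasses import asdict, dataclass

@dataclass(frozen=True)
class TrainingConfig:
    # Illustrative fields only; not drawn from any specific system.
    learning_rate: float
    batch_size: int
    feature_set: str

    def validate(self) -> None:
        """Fail fast on values that would silently produce a bad run."""
        if not 0.0 < self.learning_rate < 1.0:
            raise ValueError("learning_rate must be in (0, 1)")
        if self.batch_size <= 0:
            raise ValueError("batch_size must be positive")

    def fingerprint(self) -> str:
        """Stable short hash identifying this exact configuration."""
        payload = json.dumps(asdict(self), sort_keys=True).encode()
        return hashlib.sha256(payload).hexdigest()[:12]
```

Recording the fingerprint alongside each trained model makes an undocumented configuration change show up as a different identifier rather than an unexplained behavior shift.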

Real-World Technical Debt Examples

Hidden technical debt is not just theoretical; it has played a critical role in shaping the trajectory of real-world machine learning systems. These examples illustrate how unseen dependencies and misaligned assumptions can accumulate quietly, only to become major liabilities over time:

YouTube: Feedback Loop Debt

YouTube’s recommendation engine has faced repeated criticism for promoting sensational or polarizing content12. A large part of this stems from feedback loop debt: recommendations influence user behavior, which in turn becomes training data. Over time, this led to unintended content amplification. Mitigating this required substantial architectural overhauls, including cohort-based evaluation, delayed labeling, and more explicit disentanglement between engagement metrics and ranking logic.

12 YouTube Recommendation Impact: The recommendation system drives 70% of watch time on the platform (1+ billion hours daily), but algorithmic changes in 2016 increased average session time by 50% while inadvertently promoting conspiracy content. Fixing these feedback loops required 2+ years of engineering work and new evaluation frameworks.

Zillow: Correction Cascade Failure

Zillow’s home valuation model (Zestimate) faced significant correction cascades during its iBuying venture13. When initial valuation errors propagated into purchasing decisions, retroactive corrections triggered systemic instability that required data revalidation, model redesign, and eventually a full system rollback. The company shut down the iBuying arm in 2021, citing model unpredictability and data feedback effects as core challenges.

13 Zillow iBuying Failure: Zillow lost $881 million in a single quarter (Q3 2021) due to multiple factors including ML model failures, with the Zestimate algorithm reportedly overvaluing homes by an average of 5-7%. The company laid off 2,000+ employees and took a $569 million inventory write-down when shutting down Zillow Offers.

Tesla: Undeclared Consumer Debt

In early deployments, Tesla’s Autopilot made driving decisions based on models whose outputs were repurposed across subsystems without clear boundaries. Over-the-air updates occasionally introduced silent behavior changes that affected multiple subsystems (e.g., lane centering and braking) in unpredictable ways. This entanglement illustrates undeclared consumer debt and the risks of skipping strict interface governance in ML-enabled safety-critical systems.

Facebook: Configuration Debt

Facebook’s News Feed algorithm has undergone numerous iterations, often driven by rapid experimentation. However, the lack of consistent configuration management led to opaque settings that influenced content ranking without clear documentation. As a result, changes to the algorithm’s behavior were difficult to trace, and unintended consequences emerged from misaligned configurations. This situation highlights the importance of treating configuration as a first-class citizen in ML systems.

These real-world examples demonstrate the pervasive nature of technical debt in ML systems and why traditional DevOps practices require systematic extension. The infrastructure and production operations sections that follow present concrete engineering solutions designed to address these specific challenges: feature stores address data dependency debt, versioning systems enable reproducible configurations, monitoring frameworks detect feedback loops, and modular pipeline architectures prevent technical debt accumulation. This understanding of operational challenges provides the essential motivation for the specialized MLOps tools and practices we examine next.

Development Infrastructure and Automation

Building on the operational challenges established above, this section examines the infrastructure and development components that enable the specialized capabilities from preceding chapters while addressing systemic challenges. These foundational components must support federated learning coordination for edge devices (Chapter 14: On-Device Learning), implement secure model serving with privacy guarantees (Chapter 15: Security & Privacy), and maintain robustness monitoring for distribution shifts (Chapter 16: Robust AI). They form a layered architecture, as illustrated in Figure 5, that integrates these diverse requirements into a cohesive operational framework. Understanding how these components interact enables practitioners to design systems that simultaneously achieve edge efficiency, security compliance, and fault tolerance while maintaining operational sustainability.

Data Infrastructure and Preparation

Reliable machine learning systems depend on structured, scalable, and repeatable handling of data. From the moment data is ingested to the point where it informs predictions, each stage must preserve quality, consistency, and traceability. In operational settings, data infrastructure supports not only initial development but also continual retraining, auditing, and serving, requiring systems that formalize the transformation and versioning of data throughout the ML lifecycle.

Data Management

Building on the data engineering foundations from Chapter 6: Data Engineering, data collection, preprocessing, and feature transformation become formalized into systematic operational processes. Within MLOps, these tasks are scaled into repeatable, automated workflows that ensure data reliability, traceability, and operational efficiency. Data management, in this setting, extends beyond initial preparation to encompass the continuous handling of data artifacts throughout the lifecycle of a machine learning system.

Central to this operational foundation is dataset versioning, which enables reproducible model development by tracking data evolution (see Section 1.4.1.3 for implementation details). Tools such as DVC enable teams to version large datasets alongside code repositories managed by Git, ensuring that data lineage is preserved and that experiments are reproducible.

This versioning foundation enables more sophisticated data management capabilities. Supervised learning pipelines, for instance, require consistent and well-managed annotation workflows. Labeling tools such as Label Studio support scalable, team-based annotation with integrated audit trails and version histories. These capabilities are essential in production settings, where labeling conventions evolve over time or require refinement across multiple iterations of a project.

Beyond annotation workflows, operational environments require data storage that supports secure, scalable, and collaborative access. Cloud-based object storage systems such as Amazon S3 and Google Cloud Storage offer durability and fine-grained access control, making them well-suited for managing both raw and processed data artifacts. These systems frequently serve as the foundation for downstream analytics, model development, and deployment workflows.

Building on this storage foundation, MLOps teams construct automated data pipelines to transition from raw data to analysis- or inference-ready formats. These pipelines perform structured tasks such as data ingestion, schema validation, deduplication, transformation, and loading. Orchestration tools including Apache Airflow, Prefect, and dbt are commonly used to define and manage these workflows. When managed as code, pipelines support versioning, modularity, and integration with CI/CD systems.
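The structured tasks listed above can be sketched as small composable functions. This is a plain-Python illustration, not an Airflow, Prefect, or dbt definition; the field names in `REQUIRED_FIELDS` and the Celsius-to-Kelvin transformation are assumptions made for the example.

```python
# Assumed schema for incoming sensor rows (illustrative only).
REQUIRED_FIELDS = {"sensor_id": str, "timestamp": int, "value": float}

def validate_schema(rows):
    """Keep rows that have every required field with the expected type."""
    return [
        r for r in rows
        if all(isinstance(r.get(k), t) for k, t in REQUIRED_FIELDS.items())
    ]

def deduplicate(rows):
    """Drop repeated (sensor_id, timestamp) pairs, keeping the first seen."""
    seen, out = set(), []
    for r in rows:
        key = (r["sensor_id"], r["timestamp"])
        if key not in seen:
            seen.add(key)
            out.append(r)
    return out

def transform(rows):
    """Example transformation: convert Celsius readings to Kelvin."""
    return [{**r, "value": r["value"] + 273.15} for r in rows]

def run_pipeline(rows):
    """Apply each stage in order; an orchestrator would schedule these steps."""
    for step in (validate_schema, deduplicate, transform):
        rows = step(rows)
    return rows
```

Keeping each stage a pure function of its input rows is one design choice that makes the stages easy to test in isolation and to version as code.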

As these automated pipelines scale across organizations, they naturally encounter the challenge of feature management at scale. An increasingly important element of modern data infrastructure is the feature store, a concept pioneered by Uber’s Michelangelo platform team in 2017. They coined the term after realizing that feature engineering was being duplicated across hundreds of ML models. Their solution, a centralized “feature store”, became the template that inspired Feast, Tecton, and dozens of other platforms.

Feature stores centralize engineered features for reuse across models and teams (detailed in Section 1.4.1.2).

To illustrate these concepts in practice, consider a predictive maintenance application in an industrial setting. A continuous stream of sensor data is ingested and joined with historical maintenance logs through a scheduled pipeline managed in Airflow. The resulting features, including rolling averages and statistical aggregates, are stored in a feature store for both retraining and low-latency inference. This pipeline is versioned, monitored, and integrated with the model registry, enabling full traceability from data to deployed model predictions.
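As a small illustration of the rolling-average features mentioned above, the following sketch computes a windowed mean over a stream of readings. The function name and window size are hypothetical; a production pipeline would typically compute such aggregates inside its data processing framework.

```python
from collections import deque

def rolling_mean(values, window):
    """Yield the mean of the last `window` values after each new reading."""
    buf = deque(maxlen=window)
    total = 0.0
    for v in values:
        if len(buf) == window:
            total -= buf[0]  # oldest value is about to be evicted
        buf.append(v)
        total += v
        yield total / len(buf)

# Example: a window of 2 over four sensor readings.
features = list(rolling_mean([1, 2, 3, 4], window=2))
```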

This comprehensive approach to data management extends far beyond ensuring data quality, establishing the operational backbone that enables model reproducibility, auditability, and sustained deployment at scale. Without robust data management, the integrity of downstream training, evaluation, and serving processes cannot be maintained, making feature stores a critical component of the infrastructure.

Feature Stores

Feature stores14 provide an abstraction layer between data engineering and machine learning. Their primary purpose is to enable consistent, reliable access to engineered features across training and inference workflows. In conventional pipelines, feature engineering logic is often duplicated, manually reimplemented, or allowed to diverge across environments. This introduces risks of training-serving skew15 (where features differ between training and production), data leakage, and model drift.

14 Feature Store Scale: Uber’s Michelangelo feature store serves 10+ million features per second with P99 latency under 10ms using optimized, co-located serving infrastructure, storing 200+ petabytes of feature data. Airbnb’s feature store supports 1,000+ ML models with automated feature validation preventing 85% of potential training-serving skew issues.

15 Training-Serving Skew Impact: Studies show training-serving skew causes 5-15% accuracy degradation in production models. Google reported that fixing skew issues improved ad click prediction accuracy by 8%, translating to millions in additional revenue annually.

To address these challenges, feature stores manage both offline (batch) and online (real-time) feature access in a centralized repository. This becomes critical when deploying the optimized models discussed in Chapter 10: Model Optimizations, where feature consistency across environments is essential for maintaining model accuracy. During training, features are computed and stored in a batch environment, typically in conjunction with historical labels. At inference time, the same transformation logic is applied to fresh data in an online serving system. This architecture ensures that models consume identical features in both contexts, promoting consistency and improving reliability.
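The core idea, that training and serving share one definition of each feature, can be sketched without any feature store product. In the example below, `transaction_features` and its two features are hypothetical; the point is only that both the offline and online paths call the same function.

```python
import math

def transaction_features(amount: float, account_age_days: int) -> dict:
    """Single source of truth for feature logic (hypothetical features)."""
    return {
        "log_amount": math.log1p(amount),
        "is_new_account": int(account_age_days < 30),
    }

def build_training_rows(history):
    """Offline path: batch-compute features for historical records."""
    return [transaction_features(h["amount"], h["age"]) for h in history]

def serve_features(request):
    """Online path: compute features for one live request."""
    return transaction_features(request["amount"], request["age"])
```

Because both paths delegate to the same function, a change to the feature definition cannot reach training without also reaching serving, which is the property that guards against training-serving skew.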

Beyond consistency across training and serving environments, feature stores support versioning, metadata management, and feature reuse across teams. For example, a fraud detection model and a credit scoring model rely on overlapping transaction features, which can be centrally maintained, validated, and shared. This reduces engineering overhead and supports alignment across use cases.

Feature stores can be integrated with data pipelines and model registries, enabling lineage tracking and traceability. When a feature is updated or deprecated, dependent models are identified and retrained accordingly. This integration enhances the operational maturity of ML systems and supports auditing, debugging, and compliance workflows.
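Identifying dependent models when a feature changes amounts to a reverse lookup from features to the models that read them. The class below is a minimal in-memory sketch; `FeatureLineage` and the model and feature names used with it are assumptions, and real feature stores expose this through their own metadata APIs.

```python
from collections import defaultdict

class FeatureLineage:
    """Tracks which registered models read which features."""

    def __init__(self):
        self._models_by_feature = defaultdict(set)

    def register_model(self, model_name, features):
        """Record that `model_name` consumes each feature in `features`."""
        for f in features:
            self._models_by_feature[f].add(model_name)

    def impacted_models(self, feature):
        """Models to review or retrain if `feature` is updated or deprecated."""
        return sorted(self._models_by_feature.get(feature, set()))

lineage = FeatureLineage()
lineage.register_model("fraud_detector", ["txn_amount", "txn_count_7d"])
lineage.register_model("credit_scorer", ["txn_amount", "income"])
```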

Versioning and Lineage

Versioning is essential to reproducibility and traceability in machine learning systems. Unlike traditional software, ML models depend on multiple changing artifacts: training data, feature engineering logic, trained model parameters, and configuration settings. To manage this complexity, MLOps practices enforce tracking of versions across all pipeline components.

At the foundation of this tracking system, data versioning allows teams to snapshot datasets at specific points in time and associate them with particular model runs. This includes both raw data (e.g., input tables or log streams) and processed artifacts (e.g., cleaned datasets or feature sets). By maintaining a direct mapping between model checkpoints and the data used for training, teams can audit decisions, reproduce results, and investigate regressions.

Complementing data versioning, model versioning involves registering trained models as immutable artifacts, alongside metadata such as training parameters, evaluation metrics, and environment specifications. These records are maintained in a model registry, which provides a structured interface for promoting, deploying, and rolling back model versions. Some registries also support lineage visualization, which traces the full dependency graph from raw data to deployed prediction.

These complementary versioning practices together form the lineage layer of an ML system. This layer enables introspection, experimentation, and governance. When a deployed model underperforms, lineage tools help teams answer questions such as:

- Was the input distribution consistent with training data?

- Did the feature definitions change?

- Is the model version aligned with the serving infrastructure?

By elevating versioning and lineage to first-class citizens in the system design, MLOps enables teams to build and maintain reliable, auditable, and evolvable ML workflows at scale.
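One way to make the questions above answerable is to store a small lineage record with every model and compare records between versions. The sketch below is illustrative: the record fields, the commit string, and the helper names are assumptions rather than the schema of any particular registry.

```python
import hashlib
import json

def dataset_fingerprint(rows) -> str:
    """Content hash of a dataset snapshot (sensitive to row order)."""
    h = hashlib.sha256()
    for row in rows:
        h.update(json.dumps(row, sort_keys=True).encode())
    return h.hexdigest()

def lineage_record(model_version, rows, code_commit, params):
    """Bundle the identifiers needed to reproduce one training run."""
    return {
        "model_version": model_version,
        "data_sha256": dataset_fingerprint(rows),
        "code_commit": code_commit,
        "params": params,
    }

def changed_fields(old, new):
    """List which parts of the lineage differ between two model versions."""
    return sorted(k for k in old if old[k] != new.get(k))
```

When a new version underperforms, `changed_fields` narrows the investigation to what actually changed, for example the data snapshot but not the code or hyperparameters.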

Continuous Pipelines and Automation

Automation enables machine learning systems to evolve continuously in response to new data, shifting objectives, and operational constraints. Rather than treating development and deployment as isolated phases, automated pipelines allow for synchronized workflows that integrate data preprocessing, training, evaluation, and release. These pipelines underpin scalable experimentation and ensure the repeatability and reliability of model updates in production.

CI/CD Pipelines

Conventional software systems rely on continuous integration and continuous delivery (CI/CD) pipelines to ensure that code changes can be tested, validated, and deployed efficiently. Machine learning systems require significant adaptations: their CI/CD pipelines must also handle the complexities introduced by data dependencies, model training workflows, and artifact versioning. These pipelines provide a structured mechanism to transition ML models from development into production in a reproducible, scalable, and automated manner.

Building on these adapted foundations, a typical ML CI/CD pipeline consists of several coordinated stages, including: checking out updated code, preprocessing input data, training a candidate model, validating its performance, packaging the model, and deploying it to a serving environment. In some cases, pipelines also include triggers for automatic retraining based on data drift or performance degradation. By codifying these steps, CI/CD pipelines16 reduce manual intervention, enforce quality checks, and support continuous improvement of deployed systems.

16 Idempotency in ML Systems: The property that repeating an operation produces identical results, which is crucial for reliable MLOps pipelines. Unlike traditional software, where rerunning a deployment normally yields the same result, ML training introduces randomness through data shuffling, weight initialization, and hardware variations. Production MLOps approximates idempotency through fixed random seeds, deterministic data ordering, and consistent compute environments. Without it, debugging becomes far harder because pipeline reruns produce different model artifacts.

17 GitHub Actions for ML: Over 60% of ML teams now use GitHub Actions for CI/CD according to recent developer surveys, with typical ML pipelines taking 15-45 minutes to run (vs. 2-5 minutes for traditional software). Netflix runs 10,000+ ML pipeline executions weekly through GitHub Actions, with 95% success rate on first run.

18 Kubeflow Production Usage: Google’s internal Kubeflow deployment runs 500,000+ ML jobs monthly across 50+ clusters, with automatic resource scaling reducing training costs by 40%. Companies like Spotify use Kubeflow to orchestrate 1,000+ concurrent training jobs with fault tolerance.

To support these complex workflows, a wide range of tools is available for implementing ML-focused CI/CD workflows. General-purpose CI/CD orchestrators such as Jenkins, CircleCI, and GitHub Actions17 manage version control events and execution logic. These tools integrate with domain-specific platforms such as Kubeflow18, Metaflow, and Prefect, which offer higher-level abstractions for managing ML tasks and workflows.

Figure 6 illustrates a representative CI/CD pipeline for machine learning systems. The process begins with a dataset and feature repository, from which data is ingested and validated. Validated data is then transformed for model training. A retraining trigger, such as a scheduled job or performance threshold, initiates this process automatically. Once training and hyperparameter tuning are complete, the resulting model undergoes evaluation against predefined criteria. If the model satisfies the required thresholds, it is registered in a model repository along with metadata, performance metrics, and lineage information. Finally, the model is deployed back into the production system, closing the loop and enabling continuous delivery of updated models.
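The evaluation step that decides whether a model is registered can be expressed as an explicit gate. The following is a sketch under assumptions: the metric names, the threshold values, and the dictionary layout are hypothetical and would be set per project.

```python
def promotion_gate(candidate, baseline, min_auc=0.80, max_latency_ms=50.0):
    """Return (approved, reasons). Thresholds are illustrative assumptions."""
    reasons = []
    if candidate["auc"] < min_auc:
        reasons.append("auc below absolute threshold")
    if candidate["auc"] < baseline["auc"]:
        reasons.append("auc regressed versus baseline")
    if candidate["latency_ms"] > max_latency_ms:
        reasons.append("latency above budget")
    # Approved only when no check failed.
    return (not reasons, reasons)

# Example: a candidate that beats the baseline within the latency budget.
approved, why = promotion_gate(
    {"auc": 0.91, "latency_ms": 30.0}, {"auc": 0.89, "latency_ms": 28.0}
)
```

Returning the list of reasons, not just a boolean, gives the pipeline something concrete to log when a candidate is rejected.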

To illustrate these concepts in practice, consider an image classification model under active development. When a data scientist commits changes to a GitHub repository, a Jenkins pipeline is triggered. The pipeline fetches the latest data, performs preprocessing, and initiates model training. Experiments are tracked using MLflow, which logs metrics and stores model artifacts. After passing automated evaluation tests, the model is containerized and deployed to a staging environment using Kubernetes. If the model meets validation criteria in staging, the pipeline orchestrates controlled deployment strategies such as canary testing (detailed in Section 1.4.2.3), gradually routing production traffic to the new model while monitoring key metrics for anomalies. In case of performance regressions, the system can automatically revert to a previous model version.

Through these comprehensive automation capabilities, CI/CD pipelines play a central role in enabling scalable, repeatable, and safe deployment of machine learning models. By unifying the disparate stages of the ML workflow under continuous automation, these pipelines support faster iteration, improved reproducibility, and greater resilience in production systems. In mature MLOps environments, CI/CD is not an optional layer, but a foundational capability that transforms ad hoc experimentation into a structured and operationally sound development process.

Training Pipelines

Model training is a central phase in the machine learning lifecycle, where algorithms are optimized to learn patterns from data. Building on the distributed training concepts covered in Chapter 8: AI Training, we examine how training workflows are operationalized through systematic pipelines. Within an MLOps context, these activities are reframed as part of a reproducible, scalable, and automated pipeline that supports continual experimentation and reliable production deployment.

The foundation of operational training lies in modern machine learning frameworks such as TensorFlow, PyTorch, and Keras, which provide modular components for building and training models. The framework selection principles from Chapter 7: AI Frameworks become essential for production training pipelines requiring reliable scaling. These libraries include high-level abstractions for neural network components and training algorithms, enabling practitioners to prototype and iterate efficiently. When embedded into MLOps pipelines, these frameworks serve as the foundation for training processes that can be systematically scaled, tracked, and retrained.

Building on these framework foundations, reproducibility emerges as a key objective of MLOps. Training scripts and configurations are version-controlled using tools like Git and hosted on platforms such as GitHub. Interactive development environments, including Jupyter notebooks, encapsulate data ingestion, feature engineering, training routines, and evaluation logic in a unified format. These notebooks integrate into automated pipelines, allowing the same logic used for local experimentation to be reused for scheduled retraining in production systems.
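Version control covers code and configuration, but reproducible training also depends on controlling randomness. The toy example below uses only the standard library to show the principle; `train_toy_model` is a hypothetical stand-in for a real training loop, and real frameworks additionally require their own seed settings and deterministic execution options.

```python
import random

def train_toy_model(data, seed):
    """Toy 'training': seeded shuffle, seeded init, one pass of updates."""
    rng = random.Random(seed)    # local generator, avoids hidden global state
    order = list(data)
    rng.shuffle(order)           # seeded data ordering
    weight = rng.uniform(-1, 1)  # seeded "weight initialization"
    for x in order:
        weight = 0.9 * weight + 0.1 * x
    return weight

data = [1.0, 2.0, 3.0, 4.0, 5.0]
run_a = train_toy_model(data, seed=42)
run_b = train_toy_model(data, seed=42)  # same seed, same data: same result
```

Using a local `random.Random` instance instead of the module-level functions is a deliberate choice here, since it keeps one component's seeding from being disturbed by another's.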

Beyond ensuring reproducibility, automation further enhances model training by reducing manual effort and standardizing critical steps. MLOps workflows incorporate techniques such as hyperparameter tuning, neural architecture search, and automatic feature selection to explore the design space efficiently. These tasks are orchestrated using CI/CD pipelines, which automate data preprocessing, model training, evaluation, registration, and deployment. For instance, a Jenkins pipeline triggers a retraining job when new labeled data becomes available. The resulting model is evaluated against baseline metrics, and if performance thresholds are met, it is deployed automatically.
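The retraining trigger described above can be reduced to a small decision function that a CI/CD job evaluates on a schedule. This is a hedged sketch: `should_retrain`, its parameter names, and both default thresholds are assumptions for illustration.

```python
def should_retrain(new_labeled_rows, live_accuracy, baseline_accuracy,
                   min_new_rows=1000, max_accuracy_drop=0.02):
    """Decide whether to start a retraining job (thresholds are assumptions)."""
    # Trigger 1: enough fresh labeled data has accumulated.
    enough_data = new_labeled_rows >= min_new_rows
    # Trigger 2: live accuracy has fallen too far below the baseline.
    degraded = (baseline_accuracy - live_accuracy) > max_accuracy_drop
    return enough_data or degraded
```

For example, `should_retrain(1500, 0.90, 0.90)` fires on data volume alone, while `should_retrain(10, 0.85, 0.90)` fires on degradation even though little new data is available.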

Supporting these automated workflows, the increasing availability of cloud-based infrastructure has further expanded the reach of model training. This connects to the workflow orchestration patterns explored in Chapter 5: AI Workflow, which provide the foundation for managing complex, multi-stage training processes across distributed systems. Cloud providers offer managed services that provision high-performance computing resources, which include GPU and TPU accelerators, on demand19. Depending on the platform, teams construct their own training workflows or rely on fully managed services such as Vertex AI Fine Tuning, which support automated adaptation of foundation models to new tasks. Nonetheless, hardware availability, regional access restrictions, and cost constraints remain important considerations when designing cloud-based training systems.

19 Cloud ML Training Economics: Lambda Labs estimated the cost of training GPT-3 at approximately $4.6 million in cloud GPU compute; OpenAI did not disclose official training costs. Fine-tuning, by contrast, typically costs $100-$10,000. Google’s TPU v4 pods can reduce training costs by 2-5× compared to equivalent GPU clusters, with some organizations reporting 60-80% cost savings through spot instances and preemptible training.

To illustrate these integrated practices, consider a data scientist developing a neural network for image classification using a PyTorch notebook. The fastai library is used to simplify model construction and training. The notebook trains the model on a labeled dataset, computes performance metrics, and tunes model configuration parameters. Once validated, the training script is version-controlled and incorporated into a retraining pipeline that is periodically triggered based on data updates or model performance monitoring.

Through standardized workflows, versioned environments, and automated orchestration, MLOps enables the model training process to transition from ad hoc experimentation to a robust, repeatable, and scalable system. This not only accelerates development but also ensures that trained models meet production standards for reliability, traceability, and performance.

Model Validation

Before a machine learning model is deployed into production, it must undergo rigorous evaluation to ensure that it meets predefined performance, robustness, and reliability criteria. While earlier chapters discussed evaluation in the context of model development, MLOps reframes evaluation as a structured and repeatable process for validating operational readiness. It incorporates practices that support pre-deployment assessment, post-deployment monitoring, and automated regression testing.

The evaluation process begins with performance testing against a holdout test set, a dataset not used during training or validation. This dataset should be sampled from a distribution representative of production data so that it measures generalization. Core metrics such as accuracy, area under the curve (AUC), precision, recall, and F1 score are computed to quantify model performance. These metrics are not only computed at a single point in time but also tracked longitudinally to detect degradation, such as that caused by data drift, where shifts in input distributions can reduce model accuracy over time (see Figure 7).
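For reference, precision, recall, and F1 for a binary classifier follow directly from the counts of true positives, false positives, and false negatives. The sketch below assumes labels encoded as 0 and 1 and returns 0.0 when a denominator is zero, which is one common convention rather than the only one.

```python
def precision_recall_f1(y_true, y_pred):
    """Compute precision, recall, and F1 for binary labels encoded as 0/1."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return precision, recall, f1

# One true positive, one false positive, one false negative, one true negative.
metrics = precision_recall_f1([1, 1, 0, 0], [1, 0, 1, 0])
```

Logging these values per evaluation run, keyed by model version and date, is what allows them to be tracked over time rather than inspected once.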

Beyond static evaluation, MLOps encourages controlled deployment strategies that simulate production conditions while minimizing risk. One widely adopted method is canary testing, in which the new model is deployed to a small fraction of users or queries. During this limited rollout, live performance metrics are monitored to assess system stability and user impact. For instance, an e-commerce platform deploys a new recommendation model to 5% of web traffic and observes metrics such as click-through rate, latency, and prediction accuracy. Only after the model demonstrates consistent and reliable performance is it promoted to full production.
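A canary rollout needs two pieces: a stable way to assign a small fraction of traffic to the new model, and a rule for promoting or rolling back. The sketch below is one possible approach, assuming hash-based bucketing on a user identifier and a single click-through-rate comparison; the function names and the 5% thresholds are illustrative.

```python
import hashlib

def route_to_canary(user_id: str, canary_fraction: float = 0.05) -> bool:
    """Deterministically assign a stable fraction of users to the new model."""
    digest = hashlib.sha256(user_id.encode()).digest()
    # Map the first 4 bytes of the hash to a bucket in [0, 1).
    bucket = int.from_bytes(digest[:4], "big") / 2**32
    return bucket < canary_fraction

def canary_decision(canary_ctr, control_ctr, max_relative_drop=0.05):
    """Return 'promote' or 'rollback' from observed click-through rates."""
    if control_ctr > 0:
        relative_drop = (control_ctr - canary_ctr) / control_ctr
        if relative_drop > max_relative_drop:
            return "rollback"
    return "promote"
```

Hashing the user identifier, instead of sampling randomly per request, keeps each user on the same model for the duration of the test. A real rollout would also compare latency and error rates and would account for statistical noise before deciding.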

Cloud-based ML platforms further support model evaluation by enabling experiment logging, request replay, and synthetic test case generation. These capabilities allow teams to evaluate different models under identical conditions, facilitating comparisons and root-cause analysis. Tools such as Weights and Biases automate aspects of this process by capturing training artifacts, recording hyperparameter configurations, and visualizing performance metrics across experiments. These tools integrate directly into training and deployment pipelines, improving transparency and traceability.

While automation is central to MLOps evaluation practices, human oversight remains essential. Automated tests may fail to capture nuanced performance issues, such as poor generalization on rare subpopulations or shifts in user behavior. Therefore, teams combine quantitative evaluation with qualitative review, particularly for models deployed in high-stakes or regulated environments. This human-in-the-loop validation becomes especially critical for social impact applications, where model failures can have direct consequences on vulnerable populations.

This multi-stage evaluation process bridges offline testing and live system monitoring, ensuring that models not only meet technical benchmarks but also behave predictably and responsibly under real-world conditions. These evaluation practices reduce deployment risk and help maintain the reliability of machine learning systems over time, completing the development infrastructure foundation necessary for production deployment.

Infrastructure Integration Summary

The infrastructure and development components examined in this section establish the foundation for reliable machine learning operations. These systems transform ad hoc experimentation into structured workflows that support reproducibility, collaboration, and continuous improvement.

Data infrastructure provides the foundation through feature stores that enable feature reuse across projects, versioning systems that track data lineage and evolution, and validation frameworks that ensure data quality throughout the pipeline. Building on the data management foundations from Chapter 6: Data Engineering, these components extend basic capabilities to production contexts where multiple teams and models depend on shared data assets.

Continuous pipelines automate the ML lifecycle through CI/CD systems adapted for machine learning workflows. Unlike traditional software CI/CD that focuses solely on code, ML pipelines orchestrate data validation, feature transformation, model training, and evaluation in integrated workflows. Training pipelines specifically manage the computationally intensive process of model development, coordinating resource allocation, hyperparameter optimization, and experiment tracking. These automated workflows enable teams to iterate rapidly while maintaining reproducibility and quality standards.

Model validation bridges development and production through systematic evaluation that extends beyond offline metrics. Validation strategies combine performance benchmarking on held-out datasets with canary testing in production environments, allowing teams to detect issues before full deployment. This multi-stage validation recognizes that models must perform not just on static test sets but under dynamic real-world conditions where data distributions shift and user behavior evolves.

These infrastructure components directly address the operational challenges identified earlier through systematic engineering capabilities:

- Feature stores and data versioning solve data dependency debt by ensuring consistent, tracked feature access across training and serving

- CI/CD pipelines and model registries prevent correction cascades through controlled deployment and rollback mechanisms

- Automated workflows and lineage tracking eliminate undeclared consumer risks via explicit dependency management

- Modular pipeline architectures avoid pipeline debt through reusable, well-defined component interfaces

However, deploying a validated model represents only the beginning of the production journey. The infrastructure enables reliable model development, but production operations must address the dynamic challenges of maintaining system performance under real-world conditions: handling data drift, managing system failures, and adapting to evolving requirements without service disruption.

Production Operations

Building directly on the infrastructure foundation established above, production operations transform validated models into reliable services that maintain performance under real-world conditions. These operations must handle the diverse requirements established in preceding chapters: managing model updates across distributed edge devices without centralized visibility (Chapter 14: On-Device Learning), maintaining security controls during runtime inference and model updates (Chapter 15: Security & Privacy), and detecting performance degradation from adversarial attacks or distribution shifts (Chapter 16: Robust AI). This operational layer implements monitoring, governance, and deployment strategies that enable these specialized capabilities to function together reliably at scale.

This section covers the deployment patterns, serving infrastructure, monitoring systems, and governance frameworks on which that operational layer depends.

Production operations introduce challenges that extend beyond model development. Deployed systems must handle variable loads, maintain consistent latency under diverse conditions, recover gracefully from failures, and adapt to evolving data distributions without disrupting service. These requirements demand specialized infrastructure, monitoring capabilities, and operational practices that complement the development workflows established in the previous section.

Model Deployment and Serving

Once a model has been trained and validated, it must be integrated into a production environment where it can deliver predictions at scale. This process involves packaging the model with its dependencies, managing versions, and deploying it in a way that aligns with performance, reliability, and governance requirements. Deployment transforms a static artifact into a live system component. Serving ensures that the model is accessible, reliable, and efficient in responding to inference requests. Together, these components bridge model development and real-world impact.

Model Deployment

Teams need to properly package, test, and track ML models to reliably deploy them to production. MLOps introduces frameworks and procedures for actively versioning, deploying, monitoring, and updating models in sustainable ways.

One common approach to deployment is to package models in containers using technologies such as Docker20. Containerization makes models portable across environments, so deployments stay consistent and predictable.

20 Containerization and Orchestration: Docker containers package applications with all their dependencies into standardized, portable units that run consistently across different computing environments, isolating software from infrastructure variations. Kubernetes orchestrates these containers at scale, automating deployment, load balancing, scaling, and recovery across clusters of machines. Together, they enable the reproducible, automated infrastructure management essential for modern MLOps, where models and their serving environments must be deployed consistently across development, staging, and production.

Production deployment requires frameworks that handle model packaging, versioning, and integration with serving infrastructure. Tools like MLflow and model registries manage these deployment artifacts, while serving-specific frameworks (detailed in the Inference Serving section) handle the runtime optimization and scaling requirements.

Before full-scale rollout, teams deploy updated models to staging or QA environments21 to rigorously test performance.

21 TensorFlow Serving Origins: Born from Google’s internal serving system that handled billions of predictions per day for products like Gmail spam detection and YouTube recommendations. Google open-sourced it in 2016 when they realized that productionizing ML models was the bottleneck preventing widespread AI adoption.

22 Canary Deployment History: Named after the canaries miners used to detect toxic gases; if the bird died, miners knew to evacuate immediately. Netflix pioneered this technique for software in 2011, and it became essential for ML where model failures can be subtle and catastrophic.

23 Blue-Green Deployment: Zero-downtime deployment strategy maintaining two identical production environments. One serves traffic (blue) while the other receives updates (green). After validation, traffic switches instantly to green. For ML systems, this enables risk-free model updates since rollback takes <10 seconds vs. hours for model retraining. Spotify uses blue-green deployment for their recommendation models, serving 400+ million users with 99.95% uptime during model updates.

Techniques such as shadow deployments, canary testing22, and blue-green deployment23 are used to validate new models incrementally. As described in our evaluation frameworks, these controlled deployment strategies enable safe model validation in production. Robust rollback procedures are essential to handle unexpected issues, reverting systems to the previous stable model version to ensure minimal disruption.
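As a rough sketch of how canary routing and rollback might fit together, the router below sends a fixed percentage of requests to a candidate model and can revert to the stable version in one step. The class name, the hash-based bucketing scheme, and the default percentage are assumptions made for illustration; production systems usually delegate this to a load balancer or service mesh.

```python
import hashlib


class CanaryRouter:
    """Routes a fixed share of traffic to a candidate model version."""

    def __init__(self, stable: str, candidate: str, canary_percent: int = 5):
        self.stable = stable
        self.candidate = candidate
        self.canary_percent = canary_percent

    def _bucket(self, request_id: str) -> int:
        # Hash the request ID so the same ID always lands in the same bucket.
        digest = hashlib.sha256(request_id.encode("utf-8")).hexdigest()
        return int(digest, 16) % 100

    def route(self, request_id: str) -> str:
        """Return the model version that should serve this request."""
        if self._bucket(request_id) < self.canary_percent:
            return self.candidate
        return self.stable

    def rollback(self) -> None:
        """Stop sending traffic to the candidate; the stable version serves all."""
        self.canary_percent = 0

    def promote(self) -> None:
        """Make the candidate the new stable version."""
        self.stable = self.candidate
        self.canary_percent = 0
```

Hashing on a request or user identifier keeps assignment sticky, so a given user sees consistent behavior while the canary runs, and rollback only requires changing one number rather than redeploying.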

When canary deployments reveal problems at partial traffic levels (e.g., issues appearing at 30% traffic but not at 5%), teams need systematic debugging strategies. Effective diagnosis requires correlating multiple signals: performance metrics from Chapter 12: Benchmarking AI, data distribution analysis to detect drift, and feature importance shifts that might explain degradation. Teams maintain debug toolkits including A/B test24 analysis frameworks, feature attribution tools, and data slice analyzers that identify which subpopulations are experiencing degraded performance. Rollback decisions must balance the severity of degradation against business impact: a 2% accuracy drop might be acceptable during feature launches but unacceptable for safety-critical applications.

24 A/B Testing for ML: Statistical method to compare model performance by splitting traffic between model versions. Netflix runs 1,000+ A/B tests annually on recommendation algorithms, while Uber tests ride pricing models on millions of trips daily to optimize both user experience and revenue.
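The data slice analysis mentioned above can be illustrated with a small sketch that compares per-slice accuracy between baseline and canary traffic. The record layout (dictionaries with `prediction` and `label` keys plus a slicing field) and the 5-point drop threshold are hypothetical.

```python
from collections import defaultdict


def accuracy_by_slice(records, slice_key):
    """Compute accuracy separately for each value of slice_key."""
    totals = defaultdict(int)
    correct = defaultdict(int)
    for record in records:
        slice_value = record[slice_key]
        totals[slice_value] += 1
        correct[slice_value] += int(record["prediction"] == record["label"])
    return {s: correct[s] / totals[s] for s in totals}


def degraded_slices(baseline_records, canary_records, slice_key, min_drop=0.05):
    """Return slices whose accuracy fell by at least min_drop, worst first."""
    baseline = accuracy_by_slice(baseline_records, slice_key)
    canary = accuracy_by_slice(canary_records, slice_key)
    drops = {}
    for slice_value, base_acc in baseline.items():
        # Only compare slices that appear in both traffic samples.
        if slice_value in canary and base_acc - canary[slice_value] >= min_drop:
            drops[slice_value] = base_acc - canary[slice_value]
    return dict(sorted(drops.items(), key=lambda item: item[1], reverse=True))
```

An aggregate metric can hide a regression that affects only one subpopulation; ranking slices by accuracy drop points the investigation at the affected group first.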

Integration with CI/CD pipelines further automates the deployment and rollback process, enabling efficient iteration cycles.

Model registries, such as Vertex AI’s model registry, act as centralized repositories for storing and managing trained models. These registries facilitate version comparisons and often include access to base models, which may be open source, proprietary, or a hybrid (e.g., Llama). Deploying a model from the registry to an inference endpoint is streamlined: the platform handles resource provisioning, model weight downloads, and hosting.
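To make the registry concept concrete, here is a minimal in-memory sketch of versioning, promotion, and comparison. It is not the API of Vertex AI or any other product; the class names, stage labels, and method signatures are invented for illustration.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class ModelVersion:
    version: int
    artifact_uri: str
    metrics: Dict[str, float]
    stage: str = "staging"  # one of "staging", "production", "archived"


class ModelRegistry:
    """Toy registry that tracks versions of named models in memory."""

    def __init__(self) -> None:
        self._versions: Dict[str, List[ModelVersion]] = {}

    def register(self, name: str, artifact_uri: str,
                 metrics: Dict[str, float]) -> ModelVersion:
        """Store a new version; version numbers start at 1 and increase."""
        versions = self._versions.setdefault(name, [])
        entry = ModelVersion(len(versions) + 1, artifact_uri, dict(metrics))
        versions.append(entry)
        return entry

    def _get(self, name: str, version: int) -> ModelVersion:
        for entry in self._versions.get(name, []):
            if entry.version == version:
                return entry
        raise KeyError(f"{name} v{version} is not registered")

    def promote(self, name: str, version: int) -> ModelVersion:
        """Move a version to production and archive the previous one."""
        target = self._get(name, version)
        for entry in self._versions[name]:
            if entry.stage == "production":
                entry.stage = "archived"
        target.stage = "production"
        return target

    def production(self, name: str) -> Optional[ModelVersion]:
        """Return the version currently in production, if any."""
        for entry in self._versions.get(name, []):
            if entry.stage == "production":
                return entry
        return None

    def compare(self, name: str, old: int, new: int, metric: str) -> float:
        """Return the change in a metric from version old to version new."""
        return self._get(name, new).metrics[metric] - self._get(name, old).metrics[metric]
```

In this sketch a rollback is simply `promote` called with the earlier version number, which is one reason registries keep archived versions rather than deleting them.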

Inference endpoints typically expose the deployed model via REST APIs for real-time predictions. Depending on performance requirements, teams can configure resources, such as GPU accelerators, to meet latency and throughput targets. Some providers also offer flexible options like serverless25 or batch inference, eliminating the need for persistent endpoints and enabling cost-efficient, scalable deployments. For example, AWS SageMaker Inference supports such configurations.

25 Serverless Computing for ML: Infrastructure that automatically scales from zero to thousands of instances based on demand, with sub-second cold start times. AWS Lambda can handle 10,000+ concurrent ML inference requests, while Google Cloud Functions supports models up to 32 GB, charging only for actual compute time used.

26 MLflow’s Creation: Built by the team at Databricks who were frustrated watching their customers struggle with ML experiment tracking. They noticed that data scientists were keeping model results in spreadsheets and could never reproduce their best experiments, a problem that inspired MLflow’s “model registry” concept.

To maintain lineage and auditability, teams track model artifacts, including scripts, weights, logs, and metrics, using tools like MLflow26.
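The idea behind lineage tracking can be sketched with the standard library alone: fingerprint each artifact and store the fingerprints alongside parameters and metrics. This is a stand-in for illustration and does not use or mirror MLflow's API; the function names and record fields are hypothetical.

```python
import hashlib
import json


def fingerprint(data: bytes) -> str:
    """Return a SHA-256 hex digest identifying the exact artifact contents."""
    return hashlib.sha256(data).hexdigest()


def lineage_record(model_name: str, weights: bytes, training_script: bytes,
                   params: dict, metrics: dict) -> dict:
    """Build an auditable record linking a model to what produced it."""
    record = {
        "model_name": model_name,
        "weights_sha256": fingerprint(weights),
        "script_sha256": fingerprint(training_script),
        "params": params,
        "metrics": metrics,
    }
    # Hash the canonical JSON form so identical inputs yield an identical ID.
    canonical = json.dumps(record, sort_keys=True).encode("utf-8")
    record["record_id"] = fingerprint(canonical)[:16]
    return record
```

Because the record ID is derived from content hashes, any change to the weights, script, parameters, or metrics produces a different ID, which makes silent substitutions detectable during an audit.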

By leveraging these tools and practices, teams can deploy ML models resiliently, ensuring smooth transitions between versions, maintaining production stability, and optimizing performance across diverse use cases.

Inference Serving

Once a model has been deployed, the final stage in operationalizing machine learning is to make it accessible to downstream applications or end-users. Serving infrastructure provides the interface between trained models and real-world systems, enabling predictions to be delivered reliably and efficiently. In large-scale settings, such as social media platforms or e-commerce services, serving systems may process tens of trillions of inference queries per day (Wu et al. 2019). The measurement frameworks established in Chapter 12: Benchmarking AI become essential for validating performance claims and establishing production baselines. Meeting such demand requires careful design to balance latency, scalability, and robustness.

27 TensorFlow Serving: Google’s production-grade ML serving system handles over 100,000 queries per second per machine for lightweight models on high-end hardware with <10 ms latency for most models. Originally built to serve YouTube’s recommendation system, processing over 1 billion hours of video watched daily.

28 NVIDIA Triton Inference Server: Can achieve up to 40,000 inferences per second on a single A100 GPU for BERT models, with dynamic batching reducing latency by up to 10\(\times\) compared to naive serving approaches. Supports concurrent execution of up to 100 different model types.

29 KServe (formerly KFServing): Kubernetes-native serving framework that can autoscale from zero to thousands of replicas in under 30 seconds. Used by companies like Bloomberg to serve over 10,000 models simultaneously with 99.9% uptime SLA.

To address these challenges, production-grade serving frameworks have emerged. Tools such as TensorFlow Serving27, NVIDIA Triton Inference Server28, and KServe29 provide standardized mechanisms for deploying, versioning, and scaling machine learning models across heterogeneous infrastructure. These frameworks abstract many of the lower-level concerns, allowing teams to focus on system behavior, integration, and performance targets.

Model serving architectures are typically designed around three broad paradigms:

- Online Serving, which provides low-latency, real-time predictions for interactive systems such as recommendation engines or fraud detection.

- Offline Serving, which processes large batches of data asynchronously, typically in scheduled jobs used for reporting or model retraining.

- Near-Online (Semi-Synchronous) Serving, which offers a balance between latency and throughput, appropriate for scenarios like chatbots or semi-interactive analytics.

Each of these approaches introduces different constraints in terms of availability, responsiveness, and throughput. The efficiency techniques from Chapter 9: Efficient AI become crucial for meeting these performance requirements, particularly when serving models at scale. Serving systems are therefore constructed to meet specific Service Level Agreements (SLAs)30 and Service Level Objectives (SLOs)31, which quantify acceptable performance boundaries along dimensions such as latency, error rates, and uptime. Achieving these goals requires a range of optimizations in request handling, scheduling, and resource allocation.

30 Service Level Agreements (SLAs): Production ML systems typically target 99.9% uptime (8.77 hours downtime/year) for critical services, with penalties of 10-25% monthly service credits for each 0.1% below target. Google’s Cloud AI Platform promises 99.95% uptime with automatic failover in <30 seconds.

31 Service Level Objectives (SLOs): Real-world ML serving SLOs often specify P95 latency <100 ms for online inference, P99 <500 ms, and error rates <0.1%. Netflix’s recommendation system maintains P99 latency under 150 ms while serving 200+ million users, processing 3+ billion hours of content monthly.
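The targets quoted above (P95 under 100 ms, P99 under 500 ms, error rate under 0.1%, 99.9% uptime) can be checked with a few lines of arithmetic. The sketch below uses the nearest-rank percentile definition, which is one of several common conventions; the function names and the default thresholds are illustrative assumptions.

```python
import math


def percentile(samples, p):
    """Nearest-rank percentile: smallest value with at least p% of samples at or below it."""
    if not samples:
        raise ValueError("percentile requires at least one sample")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def downtime_budget_hours(availability, period_hours=365.25 * 24):
    """Hours of allowed downtime per period for a given availability target."""
    return (1 - availability) * period_hours


def check_slo(latencies_ms, errors, requests,
              p95_target_ms=100.0, p99_target_ms=500.0, max_error_rate=0.001):
    """Summarize observed latency and error rate against SLO targets."""
    report = {
        "p95_ms": percentile(latencies_ms, 95),
        "p99_ms": percentile(latencies_ms, 99),
        "error_rate": errors / requests,
    }
    report["meets_slo"] = (
        report["p95_ms"] <= p95_target_ms
        and report["p99_ms"] <= p99_target_ms
        and report["error_rate"] <= max_error_rate
    )
    return report
```

For a 99.9% availability target, `downtime_budget_hours(0.999)` evaluates to roughly 8.77 hours per year, matching the figure cited in the SLA footnote.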

A number of serving system design strategies are commonly employed to meet these requirements. Request scheduling and batching aggregate inference requests to improve throughput and hardware utilization. For instance, Clipper (Crankshaw et al. 2017) applies batching and caching to reduce response times in online settings. Model instance selection and routing dynamically assign requests to model variants based on system load or user-defined constraints; INFaaS (Romero et al. 2021) illustrates this approach by optimizing accuracy-latency trade-offs across variant models.

- Request scheduling and batching: Efficiently manages incoming ML inference requests, optimizing performance through smart queuing and grouping strategies. Systems like Clipper (Crankshaw et al. 2017) introduce low-latency online prediction serving with caching and batching techniques.

- Model instance selection and routing: Intelligent algorithms direct requests to appropriate model versions or instances. INFaaS (Romero et al. 2021) explores this by generating model-variants and efficiently exploring the trade-off space based on performance and accuracy requirements.

- Load balancing: Distributes workloads evenly across multiple serving instances. MArk (Model Ark) (C. Zhang et al. 2019) demonstrates effective load balancing techniques for ML serving systems.

- Model instance autoscaling: Dynamically adjusts capacity based on demand. Both INFaaS (Romero et al. 2021) and MArk (C. Zhang et al. 2019) incorporate autoscaling capabilities to handle workload fluctuations efficiently.

- Model orchestration: Manages model execution, enabling parallel processing and strategic resource allocation. AlpaServe (Li et al. 2023) demonstrates advanced techniques for handling large models and complex serving scenarios.

- Execution time prediction: Systems like Clockwork (Gujarati et al. 2020) focus on high-performance serving by predicting execution times of individual inferences and efficiently using hardware accelerators.
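Of the strategies listed above, request batching is the simplest to illustrate. The sketch below groups requests by arrival time, closing a batch when it is full or when the first request in it has waited long enough. It is a simplified model of dynamic batching, not the algorithm used by Clipper or any specific framework; the function name and default limits are assumptions, and arrival times are assumed to be sorted.

```python
from typing import List


def form_batches(arrivals_ms: List[float], max_batch_size: int = 8,
                 max_wait_ms: float = 5.0) -> List[List[int]]:
    """Group request indices into batches.

    A batch closes when it reaches max_batch_size, or when the next request
    arrives after the first request in the batch has waited max_wait_ms.
    """
    batches: List[List[int]] = []
    current: List[int] = []
    deadline = 0.0
    for index, arrival in enumerate(arrivals_ms):
        # The open batch timed out before this request arrived: flush it.
        if current and arrival > deadline:
            batches.append(current)
            current = []
        # The first request in a batch sets that batch's deadline.
        if not current:
            deadline = arrival + max_wait_ms
        current.append(index)
        if len(current) == max_batch_size:
            batches.append(current)
            current = []
    if current:
        batches.append(current)
    return batches
```

The two limits expose the core trade-off: a larger `max_batch_size` improves hardware utilization, while a smaller `max_wait_ms` bounds the latency added to the earliest request in each batch.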

In more complex inference scenarios, model orchestration coordinates the execution of multi-stage models or distributed components. AlpaServe (Li et al. 2023) exemplifies this by enabling efficient serving of large foundation models through coordinated resource allocation. Finally, execution time prediction enables systems to anticipate latency for individual requests. Clockwork (Gujarati et al. 2020) uses this capability to reduce tail latency and improve scheduling efficiency under high load.
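Execution time prediction can be approximated with a simple sketch: estimate each model's latency from recent observations, then admit a request only if it is predicted to finish before its deadline. This is a deliberately simplified illustration and not Clockwork's actual scheduler; the moving-average predictor, the class and function names, and the default latency are all hypothetical.

```python
from collections import defaultdict, deque


class LatencyPredictor:
    """Predicts per-model execution time from a sliding window of observations."""

    def __init__(self, window: int = 10):
        self._history = defaultdict(lambda: deque(maxlen=window))

    def observe(self, model: str, latency_ms: float) -> None:
        """Record a measured execution time for a model."""
        self._history[model].append(latency_ms)

    def predict(self, model: str, default_ms: float = 50.0) -> float:
        """Return the mean of recent observations, or a default if none exist."""
        history = self._history.get(model)
        if not history:
            return default_ms
        return sum(history) / len(history)


def admit(predictor: LatencyPredictor, model: str,
          queue_depth: int, deadline_ms: float) -> bool:
    """Admit a request only if it is predicted to finish within its deadline.

    Assumes queued requests for the model run one at a time, so the new
    request finishes after (queue_depth + 1) predicted executions.
    """
    predicted_finish_ms = (queue_depth + 1) * predictor.predict(model)
    return predicted_finish_ms <= deadline_ms
```

Rejecting or rerouting a request that is predicted to miss its deadline avoids spending accelerator time on work whose result would arrive too late, which is how prediction helps control tail latency.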

While these systems differ in implementation, they collectively illustrate the critical techniques that underpin scalable and responsive ML-as-a-Service infrastructure. Table 2 summarizes these strategies and highlights representative systems that implement them.

| Technique | Description | Example System |

|---|---|---|

| Request Scheduling & Batching | Groups inference requests to improve throughput and reduce overhead | Clipper |

| Instance Selection & Routing | Dynamically assigns requests to model variants based on constraints | INFaaS |