Benchmarking AI

DALL·E 3 Prompt: Photo of a podium set against a tech-themed backdrop. On each tier of the podium, there are AI chips with intricate designs. The top chip has a gold medal hanging from it, the second one has a silver medal, and the third has a bronze medal. Banners with ‘AI Olympics’ are displayed prominently in the background.

Purpose

Why does systematic measurement form the foundation of engineering progress in machine learning systems, and how does standardized benchmarking enable scientific advancement in this emerging field?

Engineering disciplines advance through measurement and comparison, establishing benchmarking as essential to machine learning systems development. Without systematic evaluation frameworks, optimization claims lack scientific rigor, hardware investments proceed without evidence, and system improvements cannot be verified or reproduced. Benchmarking transforms subjective impressions into objective data, enabling engineers to distinguish genuine advances from implementation artifacts. This measurement discipline is essential because ML systems involve complex interactions between algorithms, hardware, and data that defy intuitive performance prediction. Standardized benchmarks establish shared baselines that allow meaningful comparison across research groups, enable cumulative progress through reproducible results, and provide the empirical foundations necessary for engineering decision-making. Understanding benchmarking principles enables systematic evaluation that drives continuous improvement and establishes machine learning systems engineering as a rigorous scientific discipline.

Analyze the evolution of ML benchmarking and explain how benchmark gaming lessons inform current design

Distinguish between the three dimensions of ML benchmarking (algorithmic, systems, and data) and evaluate how each dimension contributes to comprehensive system assessment

Compare training and inference benchmarking methodologies, identifying specific metrics and evaluation protocols appropriate for each phase of the ML lifecycle

Apply MLPerf benchmarking standards to evaluate solutions and guide optimization decisions

Design statistically rigorous experimental protocols that account for ML system variability, including appropriate sample sizes and confidence interval reporting

Critique existing benchmark results for common fallacies and pitfalls, distinguishing between benchmark performance and real-world deployment effectiveness

Implement production monitoring strategies that extend benchmarking principles to operational environments, including A/B testing and continuous model validation

Evaluate performance trade-offs across accuracy, latency, energy, and fairness for deployment optimization

Machine Learning Benchmarking Framework

The systematic evaluation of machine learning systems presents a critical methodological challenge within the broader discipline of performance engineering. While previous chapters have established comprehensive optimization frameworks, particularly hardware acceleration strategies (Chapter 11: AI Acceleration), the validation of these approaches requires rigorous measurement methodologies that extend beyond traditional computational benchmarking.

Consider the challenge facing engineers evaluating competing AI hardware solutions. A vendor might demonstrate impressive performance gains on carefully selected benchmarks, yet fail to deliver similar improvements in production workloads. Without comprehensive evaluation frameworks, distinguishing genuine advances from implementation artifacts becomes nearly impossible. This challenge illustrates why systematic measurement forms the foundation of engineering progress in machine learning systems.

This chapter examines benchmarking as an essential empirical discipline that enables quantitative assessment of machine learning system performance across diverse operational contexts. Benchmarking establishes the methodological foundation for evidence-based engineering decisions, providing systematic evaluation frameworks that allow practitioners to compare competing approaches, validate optimization strategies, and ensure reproducible performance claims in both research and production environments.

Machine learning benchmarking presents unique challenges that distinguish it from conventional systems evaluation. The probabilistic nature of machine learning algorithms introduces inherent performance variability that traditional deterministic benchmarks cannot adequately characterize. ML system performance exhibits complex dependencies on data characteristics, model architectures, and computational resources, creating multidimensional evaluation spaces that require specialized measurement approaches.

Contemporary machine learning systems demand evaluation frameworks that accommodate multiple, often competing, performance objectives. Beyond computational efficiency, these systems must be assessed across dimensions including predictive accuracy, convergence properties, energy consumption, fairness, and robustness. This multi-objective evaluation paradigm necessitates sophisticated benchmarking methodologies that can characterize trade-offs and guide system design decisions within specific operational constraints.

The field has evolved to address these challenges through comprehensive evaluation approaches that operate across three core dimensions: algorithms, systems, and data.

This chapter provides a systematic examination of machine learning benchmarking methodologies, beginning with the historical evolution of computational evaluation frameworks and their adaptation to address the unique requirements of probabilistic systems. We analyze standardized evaluation frameworks such as MLPerf that establish comparative baselines across diverse hardware architectures and implementation strategies. The discussion subsequently examines the essential distinctions between training and inference evaluation, exploring the specialized metrics and methodologies required to characterize their distinct computational profiles and operational requirements.

The analysis extends to specialized evaluation contexts, including resource-constrained mobile and edge deployment scenarios that present unique measurement challenges. We conclude by investigating production monitoring methodologies that extend benchmarking principles beyond controlled experimental environments into dynamic operational contexts. This comprehensive treatment demonstrates how rigorous measurement validates the performance improvements achieved through the optimization techniques and hardware acceleration strategies examined in preceding chapters, while establishing the empirical foundation essential for the deployment strategies explored in Part IV.

Historical Context

The evolution from simple performance metrics to comprehensive ML benchmarking reveals three critical methodological shifts, each addressing failures of previous evaluation paradigms that directly inform our current approach.

Performance Benchmarks

The evolution from synthetic operations to representative workloads emerged when early benchmark gaming undermined evaluation validity. Mainframe benchmarks like Whetstone (1972) and LINPACK (1979) measured isolated operations, enabling vendors to optimize for narrow tests rather than practical performance. SPEC CPU (1989) pioneered using real application workloads to ensure evaluation reflects actual deployment scenarios. This lesson directly shapes ML benchmarking, as optimization claims from Chapter 10: Model Optimizations require validation on representative tasks. MLPerf’s inclusion of real models like ResNet-50 and BERT ensures benchmarks capture deployment complexity rather than idealized test cases.

As deployment contexts diversified, benchmarks evolved from single-dimension to multi-objective evaluation. Graphics benchmarks measured quality alongside speed; mobile benchmarks evaluated battery life with performance. The multi-objective challenges from Chapter 9: Efficient AI, balancing accuracy, latency, and energy, manifest directly in modern ML evaluation where no single metric captures deployment viability.

The shift from isolated components to integrated systems occurred when distributed computing revealed that component optimization fails to predict system performance. ML training depends not just on accelerator compute (Chapter 11: AI Acceleration) but on data pipelines, gradient synchronization, and storage throughput. MLPerf evaluates complete workflows, recognizing that performance emerges from component interactions.

These lessons culminate in MLPerf (2018), which synthesizes representative workloads, multi-objective evaluation, and integrated measurement while addressing ML-specific challenges (Ranganathan and Hölzle 2024).

Energy Benchmarks

The multi-objective evaluation paradigm naturally extended to energy efficiency as computing diversified beyond mainframes with unlimited power budgets. Mobile devices demanded battery life optimization, while warehouse-scale systems faced energy costs rivaling hardware expenses. This shift established energy as a first-class metric alongside performance, spawning benchmarks like SPEC Power[1] for servers, Green500[2] for supercomputers, and ENERGY STAR[3] for consumer systems.

1 SPEC Power: Introduced in 2007 to address the growing importance of energy efficiency in server design, SPEC Power measures performance per watt across 10 different load levels from 10% to 100%. Results show that modern servers achieve 8-12 SPECpower_ssj2008 scores per watt, compared to 1-3 for systems from the mid-2000s, representing approximately 3-4x efficiency improvement.

2 Green500: Started in 2007 as a counterpart to the Top500 supercomputer list, Green500 ranks systems by FLOPS per watt rather than raw performance. The most efficient systems achieve over 60 gigaFLOPS per watt compared to less than 1 gigaFLOPS per watt for early 2000s supercomputers, a more than 60-fold improvement in computational efficiency.

3 ENERGY STAR: Launched by the EPA in 1992, this voluntary program has prevented over 4 billion tons of greenhouse gas emissions and saved consumers $450 billion on energy bills. Computing equipment must meet strict efficiency requirements: ENERGY STAR computers typically consume 30-65% less energy than standard models during operation and sleep modes.

Despite these advances, power benchmarking faces ongoing challenges in accounting for diverse workload patterns and system configurations across computing environments. Recent advancements, such as the MLPerf Power benchmark, have introduced specialized methodologies for measuring the energy impact of machine learning workloads, directly addressing the growing importance of energy efficiency in AI-driven computing.

Energy benchmarking extends beyond hardware energy measurement alone. Algorithmic energy optimization represents an equally critical dimension of modern AI benchmarking, where energy-efficient algorithms achieve performance improvements through computational reduction rather than purely hardware enhancement. Neural network pruning reduces energy consumption by eliminating unnecessary computations: pruned BERT models can achieve 90% of original task accuracy with 10x fewer parameters, delivering 4-8x inference speedup and 8-12x energy reduction depending on pruning method and hardware (Han, Mao, and Dally 2015). Quantization techniques achieve similar gains by reducing precision requirements: INT8 quantization typically provides 4x inference speedup with 4x energy reduction while maintaining 99%+ accuracy preservation (Jacob et al. 2018).

Knowledge distillation offers another algorithmic energy optimization pathway, where smaller “student” models learn from larger “teacher” models. Efficient architecture design is a complementary route: MobileNet architectures achieve a 10x energy reduction versus ResNet while maintaining similar accuracy through depthwise separable convolutions and width multipliers (Howard et al. 2017). Model compression techniques collectively enable deployment of sophisticated AI capabilities within severe energy constraints, making these techniques essential for mobile and edge computing scenarios.

Energy-aware benchmarking must evaluate not just hardware power consumption, but also algorithmic efficiency metrics including FLOP reduction through sparsity, memory access reduction through compression, and computational energy benefits from quantization. These algorithmic optimizations often achieve greater energy savings than hardware improvements alone, providing a critical dimension for energy benchmarking frameworks.

As artificial intelligence and edge computing evolve, power benchmarking will drive energy-efficient hardware and software innovations. This connects directly to sustainable AI practices discussed in Chapter 18: Sustainable AI, where energy-aware design principles guide environmentally responsible AI development.

Domain-Specific Benchmarks

Computing diversification necessitated specialized benchmarks tailored to domain-specific requirements that generic metrics cannot capture. Domain-specific benchmarks address three categories of specialization:

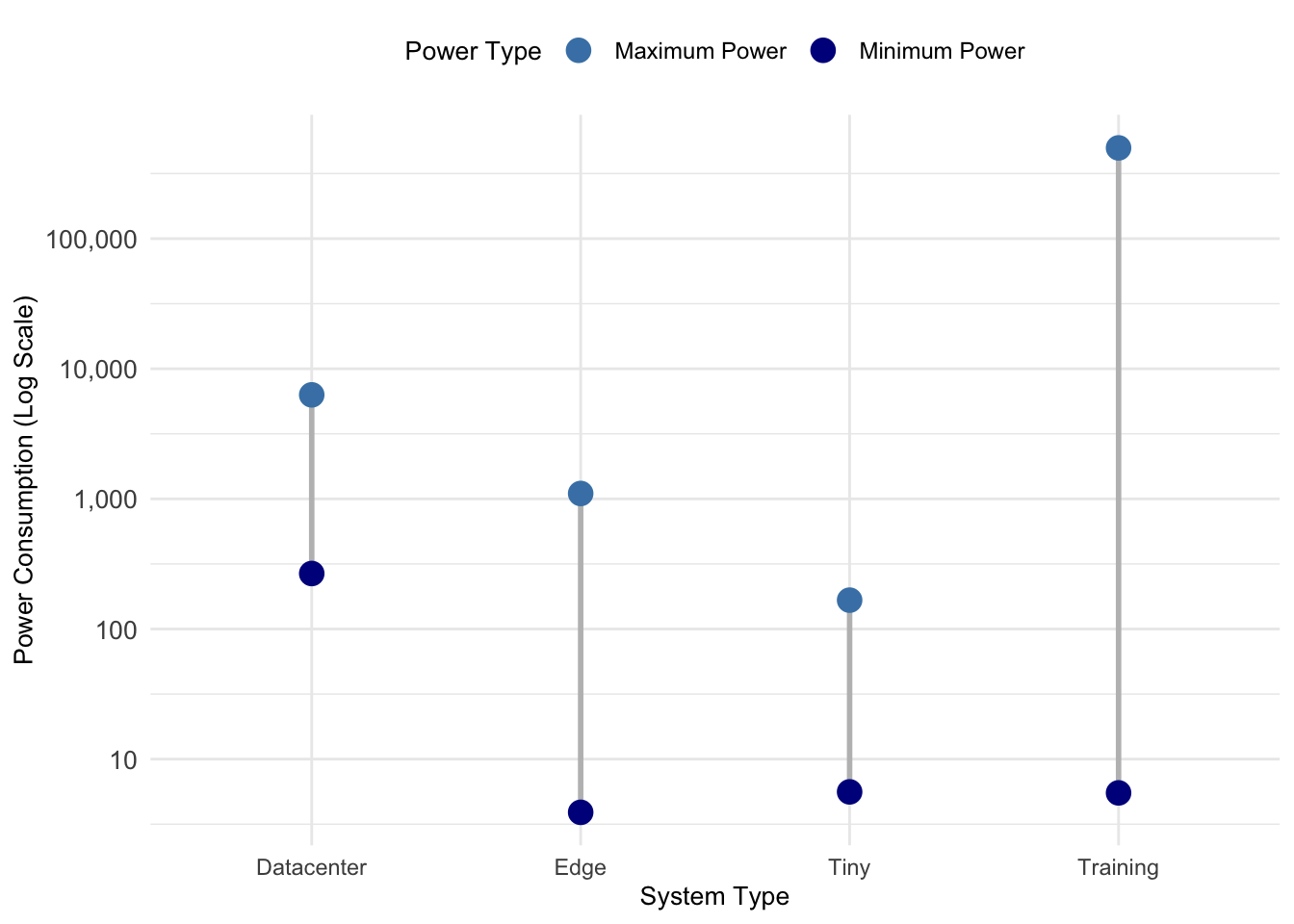

Deployment constraints shape core metric priorities. Datacenter workloads optimize for throughput with kilowatt-scale power budgets, while mobile AI operates within 2-5W thermal envelopes, and IoT devices require milliwatt-scale operation. These constraints, rooted in efficiency principles from Chapter 9: Efficient AI, determine whether benchmarks prioritize total throughput or energy per operation.

Application requirements impose functional and regulatory constraints beyond performance. Healthcare AI demands interpretability metrics alongside accuracy; financial systems require microsecond latency with audit compliance; autonomous vehicles need safety-critical reliability (ASIL-D: <10^-8 failure/hour). These requirements, connecting to responsible AI principles in Chapter 17: Responsible AI, extend evaluation beyond traditional performance metrics.

Operational conditions determine real-world viability. Autonomous vehicles face -40°C to +85°C temperatures and degraded sensor inputs; datacenters handle millions of concurrent requests with network partitions; industrial IoT endures years-long deployment without maintenance. Hardware capabilities from Chapter 11: AI Acceleration only deliver value when validated under these conditions.

Machine learning presents a prominent example of this transition toward domain-specific evaluation. Traditional CPU and GPU benchmarks prove insufficient for assessing ML workloads, which involve complex interactions between computation, memory bandwidth, and data movement patterns. MLPerf has standardized performance measurement for machine learning models across these three categories: MLPerf Training addresses datacenter deployment constraints with multi-node scaling benchmarks, MLPerf Inference evaluates latency-critical application requirements across server to edge deployments, and MLPerf Tiny assesses ultra-constrained operational conditions for microcontroller deployments. This tiered structure reflects the systematic application of our three-category framework to ML-specific evaluation needs.

The strength of domain-specific benchmarks lies in their ability to capture these specialized requirements that general benchmarks overlook. By systematically addressing deployment constraints, application requirements, and operational conditions, these benchmarks provide insights that drive targeted optimizations in both hardware and software while ensuring that improvements translate to real-world deployment success rather than merely optimizing for narrow laboratory conditions.

This historical progression from general computing benchmarks through energy-aware measurement to domain-specific evaluation frameworks provides the foundation for understanding contemporary ML benchmarking challenges. The lessons learned (representative workloads over synthetic tests, multi-objective over single metrics, and integrated systems over isolated components) directly shape how we approach AI system evaluation today.

Machine Learning Benchmarks

The historical evolution culminates in machine learning benchmarking, where the complexity exceeds all previous computing domains. Unlike traditional workloads with deterministic behavior, ML systems introduce inherent uncertainty through their probabilistic nature. A CPU benchmark produces identical results given the same inputs; an ML model’s performance varies with training data, initialization, and even the order of operations. This inherent variability, combined with the lessons from decades of benchmark evolution, necessitates our three-dimensional evaluation framework.

Building on the framework and optimization techniques from previous chapters, ML benchmarks must evaluate not just computational efficiency but the intricate interplay between algorithms, hardware, and data. Early machine learning benchmarks focused primarily on algorithmic performance, measuring how well models could perform specific tasks (LeCun et al. 1998). However, as machine learning applications scaled dramatically and computational demands grew exponentially, the focus expanded to include system performance and hardware efficiency (Jouppi et al. 2017). More recently, data quality has emerged as the third dimension of evaluation (Gebru et al. 2021).

AI benchmarks differ from traditional performance metrics through their inherent variability, which introduces accuracy as a new evaluation dimension alongside deterministic characteristics like computational speed or energy consumption. The probabilistic nature of machine learning models means the same system can produce different results depending on the data it encounters, making accuracy a defining factor in performance assessment. This distinction adds complexity: benchmarking AI systems requires measuring not only raw computational efficiency but also understanding trade-offs between accuracy, generalization, and resource constraints.

Energy efficiency emerges as a cross-cutting concern that influences all three dimensions of our framework: algorithmic choices affect computational complexity and power requirements, hardware capabilities determine energy-performance trade-offs, and dataset characteristics influence training energy costs. This multifaceted evaluation approach represents a departure from earlier benchmarks that focused on isolated aspects like computational speed or energy efficiency (Hernandez and Brown 2020).

This evolution in benchmark complexity directly mirrors the field’s evolving understanding of what truly drives machine learning system success. While algorithmic innovations initially dominated progress metrics throughout the research phase, the practical challenges of deploying models at scale revealed the critical importance of hardware efficiency (Jouppi et al. 2021). Subsequently, high-profile failures of machine learning systems in real-world deployments highlighted how data quality and representation directly determine system reliability and fairness (Bender et al. 2021). Understanding how these dimensions interact has become necessary for accurately assessing machine learning system performance, informing development decisions, and measuring technological progress in the field.

ML Measurement Challenges

The unique characteristics of ML systems create measurement challenges that traditional benchmarks never faced. Unlike deterministic algorithms that produce identical outputs given the same inputs, ML systems exhibit inherent variability from multiple sources: algorithmic randomness from weight initialization and data shuffling, hardware thermal states affecting clock speeds, system load variations from concurrent processes, and environmental factors including network conditions and power management. This variability requires rigorous statistical methodology to distinguish genuine performance improvements from measurement noise.

To address this variability, effective benchmark protocols require multiple experimental runs with different random seeds. Running each benchmark 5-10 times and reporting statistical measures beyond simple means (including standard deviations or 95% confidence intervals) quantifies result stability and allows practitioners to distinguish genuine performance improvements from measurement noise.
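The reporting protocol above can be sketched in a few lines. This is a minimal illustration using only the Python standard library, assuming 5 to 10 runs and a Student's t interval; the function name `summarize_runs` and the accuracy values are hypothetical, chosen only to show the calculation.

```python
import math
import statistics

# Two-sided 95% critical values of Student's t for df = n - 1 (n = 5..10 runs).
T_CRIT_95 = {4: 2.776, 5: 2.571, 6: 2.447, 7: 2.365, 8: 2.306, 9: 2.262}


def summarize_runs(samples):
    """Return (mean, sample std, 95% CI half-width) for 5 to 10 repeated runs."""
    n = len(samples)
    df = n - 1
    if df not in T_CRIT_95:
        raise ValueError("this sketch only tabulates t-values for 5 to 10 runs")
    mean = statistics.mean(samples)
    std = statistics.stdev(samples)  # sample standard deviation (n - 1 denominator)
    half_width = T_CRIT_95[df] * std / math.sqrt(n)
    return mean, std, half_width


# Hypothetical top-1 accuracies (%) from five training runs with different seeds.
accuracies = [76.1, 75.8, 76.4, 75.9, 76.3]
mean, std, hw = summarize_runs(accuracies)
print(f"accuracy = {mean:.2f}% +/- {hw:.2f} (95% CI), std = {std:.2f}, n = {len(accuracies)}")
```

Reporting the interval together with the number of runs lets a reader judge whether a claimed gain is larger than the seed-to-seed spread.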

Recent studies have highlighted how inadequate statistical rigor can lead to misleading conclusions. Many reinforcement learning papers report improvements that fall within statistical noise (Henderson et al. 2018), while GAN comparisons often lack proper experimental protocols, leading to inconsistent rankings across different random seeds (Lucic et al. 2018). These findings underscore the importance of establishing comprehensive measurement protocols that account for ML’s probabilistic nature.

Representative workload selection critically determines benchmark validity. Synthetic microbenchmarks often fail to capture the complexity of real ML workloads where data movement, memory allocation, and dynamic batching create performance patterns not visible in simplified tests. Comprehensive benchmarking requires workloads that reflect actual deployment patterns: variable sequence lengths in language models, mixed precision training regimes, and realistic data loading patterns that include preprocessing overhead. The distinction between statistical significance and practical significance also requires careful interpretation. A small performance improvement might achieve statistical significance across hundreds of trials but prove operationally irrelevant if the gain is too small to matter in deployment or if the cost of adopting it exceeds the benefit.
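The difference between statistical and practical significance can be made concrete with a short sketch. It assumes two large, independent latency samples and a normal-approximation confidence interval; the function name `assess_improvement`, the synthetic latencies, and the 1 ms usefulness threshold are all hypothetical.

```python
import math
import statistics


def assess_improvement(baseline_ms, candidate_ms, min_useful_gain_ms):
    """Compare two latency samples using a normal-approximation 95% CI.

    Returns (mean_gain, ci_low, ci_high, statistically_significant,
    practically_significant). Assumes large, independent samples.
    """
    gain = statistics.mean(baseline_ms) - statistics.mean(candidate_ms)
    # Standard error of the difference between two independent sample means.
    se = math.sqrt(
        statistics.variance(baseline_ms) / len(baseline_ms)
        + statistics.variance(candidate_ms) / len(candidate_ms)
    )
    z = statistics.NormalDist().inv_cdf(0.975)  # about 1.96
    ci_low, ci_high = gain - z * se, gain + z * se
    statistically_significant = ci_low > 0 or ci_high < 0
    # Practical significance: even the pessimistic end of the CI clears the bar.
    practically_significant = ci_low >= min_useful_gain_ms
    return gain, ci_low, ci_high, statistically_significant, practically_significant


# Synthetic, deterministic data: 400 trials each, candidate is 0.3 ms faster.
baseline = [50.0 + 0.5 * ((i % 5) - 2) for i in range(400)]
candidate = [x - 0.3 for x in baseline]

gain, lo, hi, stat_sig, practical = assess_improvement(baseline, candidate, min_useful_gain_ms=1.0)
print(f"gain = {gain:.2f} ms, 95% CI = [{lo:.2f}, {hi:.2f}]")
print(f"statistically significant: {stat_sig}, practically significant: {practical}")
```

With this data the interval excludes zero, so the gain is statistically detectable, yet it stays well below the assumed 1 ms threshold. The threshold itself is a deployment decision, not a statistical one.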

Achieving representative evaluation requires careful benchmark design that prioritizes realistic workloads over synthetic tests. Effective system evaluation relies on end-to-end application benchmarks like MLPerf that incorporate data preprocessing and reflect realistic deployment patterns. When developing custom evaluation frameworks, profiling production workloads helps identify the representative data distributions, batch sizes, and computational patterns essential for meaningful assessment.

Current benchmarking paradigms often fall short by measuring narrow task performance while missing characteristics that determine real-world system effectiveness. Most existing benchmarks evaluate supervised learning performance on static datasets, primarily testing pattern recognition capabilities rather than the adaptability and resilience required for production deployment. This limitation becomes apparent when models achieve excellent benchmark performance yet fail when deployed in slightly different conditions or domains. To address these shortcomings, comprehensive system evaluation must measure learning efficiency, continual learning capability, and out-of-distribution generalization alongside traditional metrics.

Algorithmic Benchmarks

Algorithmic benchmarks focus specifically on the first dimension of our framework: measuring model performance, accuracy, and efficiency. While hardware systems and training data quality certainly influence results, algorithmic benchmarks deliberately isolate model capabilities to enable clear understanding of the trade-offs between accuracy, computational complexity, and generalization.

AI algorithms face the complex challenge of balancing multiple performance objectives simultaneously, including accuracy, speed, resource efficiency, and generalization capability. As machine learning applications continue to span diverse domains, including computer vision, natural language processing, speech recognition, and reinforcement learning, evaluating these competing objectives requires carefully standardized methodologies tailored to each domain’s unique challenges. Algorithmic benchmarks, such as ImageNet[4] (Deng et al. 2009), establish these evaluation frameworks, providing a consistent basis for comparing different machine learning approaches.

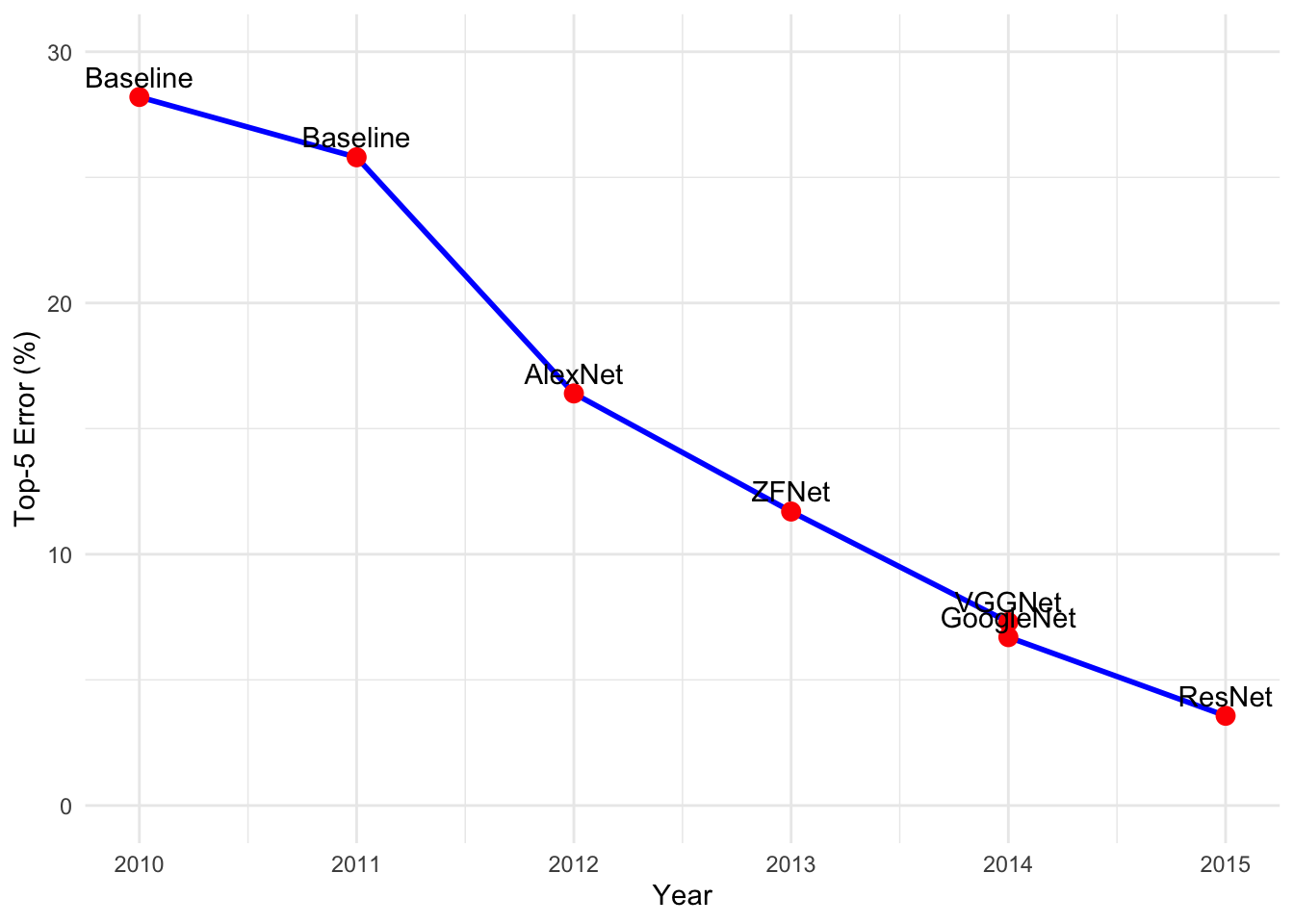

4 ImageNet: Created by Fei-Fei Li at Stanford starting in 2007, this dataset contains 14 million images across 20,000 categories, with 1.2 million images used for the annual classification challenge (ILSVRC). ImageNet’s impact is profound: it sparked the deep learning revolution when AlexNet achieved 15.3% top-5 error in 2012, compared to 25.8% for traditional methods, the largest single-year improvement in computer vision.

Algorithmic benchmarks advance AI through several functions. They establish clear performance baselines, enabling objective comparisons between competing approaches. By systematically evaluating trade-offs between model complexity, computational requirements, and task performance, they help researchers and practitioners identify optimal design choices. They track technological progress by documenting improvements over time, guiding the development of new techniques while exposing limitations in existing methodologies.

The graph in Figure 1 illustrates the reduction in top-5 error rates on the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) classification task over the years. Starting from the baseline models in 2010 and 2011, the introduction of AlexNet[5] in 2012 marked a step change, reducing the error rate from 25.8% to 16.4% (15.3% for the variant pre-trained on additional ImageNet data). Subsequent models like ZFNet, VGGNet, GoogLeNet, and ResNet[6] continued this trend, with ResNet achieving an error rate of 3.57% by 2015 (Russakovsky et al. 2015). This progression highlights how algorithmic benchmarks both measure current capabilities and drive advancements in AI performance.

5 AlexNet: Developed by Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton at the University of Toronto, this 8-layer neural network revolutionized computer vision in 2012. With 60 million parameters trained on two GTX 580 GPUs, AlexNet introduced key innovations in neural network design that became standard techniques in modern AI.

6 ResNet: Microsoft’s Residual Networks, introduced in 2015 by Kaiming He and colleagues, mitigated the vanishing gradient problem with skip connections, enabling networks with 152+ layers. ResNet-50 became the de facto standard for transfer learning, while an ensemble of ResNets achieved 3.57% top-5 error on ImageNet, below the estimated 5% human error rate.

System Benchmarks

Moving to the second dimension of our framework, we address hardware performance: how efficiently different computational systems execute machine learning workloads. System benchmarks measure the computational foundation that enables algorithmic capabilities, systematically examining how hardware architectures, memory systems, and interconnects affect overall performance. Understanding these hardware limitations and capabilities proves necessary for optimizing the algorithm-system interaction.

AI computations place significant demands on computational resources, far exceeding traditional computing workloads. The underlying hardware infrastructure, encompassing general-purpose CPUs, graphics processing units (GPUs), tensor processing units (TPUs)[7], and application-specific integrated circuits (ASICs)[8], determines the speed, efficiency, and scalability of AI solutions. System benchmarks establish standardized methodologies for evaluating hardware performance across AI workloads, measuring metrics including computational throughput, memory bandwidth, power efficiency, and scaling characteristics (Reddi et al. 2019; Mattson et al. 2020).

7 Tensor Processing Unit (TPU): Google’s custom ASIC designed specifically for neural network workloads, first deployed secretly in 2015 and announced in 2016. The first-generation TPU ran inference 15-30x faster than contemporary CPUs and GPUs, with 30-80x better performance per watt, while TPU v4 pods deliver 1.1 exaFLOPS of BF16 computing power (full pod configuration), demonstrating the capabilities of specialized AI hardware.

8 Application-Specific Integrated Circuit (ASIC): Custom chips designed for specific computational tasks, offering superior performance and energy efficiency compared to general-purpose processors. ASICs such as Google’s TPUs, Tesla’s FSD chips, and Bitcoin mining chips can achieve 100-1000x better efficiency than CPUs for their target applications, but lack the flexibility for other workloads.

These system benchmarks perform two critical functions in the AI ecosystem. First, they enable developers and organizations to make informed decisions when selecting hardware platforms for their AI applications by providing comparative performance data across system configurations. Evaluation factors include training speed, inference latency, energy efficiency, and cost-effectiveness. Second, hardware manufacturers rely on these benchmarks to quantify generational improvements and guide the development of specialized AI accelerators, driving advancement in computational capabilities.

However, effective benchmark interpretation requires deep understanding of the performance characteristics inherent to target hardware. In particular, knowing whether a specific AI workload is compute-bound or memory-bound provides essential insight for optimization decisions. Computational intensity, measured as FLOPS[9] per byte of data movement, determines performance limits. Consider an NVIDIA A100 GPU with 312 TFLOPS of tensor performance and 1.6 TB/s memory bandwidth, yielding an arithmetic intensity threshold of 195 FLOPS/byte: workloads below this threshold are limited by memory bandwidth, while workloads above it are limited by compute. The architectural foundations for understanding these hardware characteristics are established in Chapter 11: AI Acceleration, which provides context for interpreting system benchmark results.

9 FLOPS: Floating-Point Operations Per Second, a measure of computational performance indicating how many floating-point calculations a processor can execute in one second. Modern AI accelerators achieve high FLOPS ratings: NVIDIA A100 delivers 312 TFLOPS (trillion FLOPS) for tensor operations, while high-end CPUs achieve 1-10 TFLOPS. FLOPS measurements help compare hardware capabilities and determine computational bottlenecks in ML workloads.

High-intensity operations such as dense matrix multiplication (typically >200 FLOPS/byte) achieve near-peak computational throughput on the A100. For example, a ResNet-50 forward pass on large batch sizes (256+) achieves arithmetic intensity of ~300 FLOPS/byte, enabling 85-90% of peak tensor performance (approximately 280 TFLOPS achieved vs 312 TFLOPS theoretical) (Choquette et al. 2021). Conversely, low-intensity operations such as activation functions and other lightweight operations (<10 FLOPS/byte) become memory bandwidth limited, utilizing only a fraction of the GPU’s computational capacity. A BERT inference with batch size 1 achieves only 8 FLOPS/byte arithmetic intensity, limiting performance to 12.8 TFLOPS (1.6 TB/s × 8 FLOPS/byte), representing just 4% of peak computational capability.
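The arithmetic in these examples follows a simple rule: attainable throughput is the smaller of the compute peak and the memory bandwidth multiplied by arithmetic intensity. A minimal sketch, using the A100 figures quoted in the text; the function and constant names are illustrative, not part of any library.

```python
PEAK_TFLOPS = 312.0     # A100 tensor peak, as quoted in the text
BANDWIDTH_TBPS = 1.6    # memory bandwidth in TB/s, as quoted in the text


def roofline_tflops(intensity_flops_per_byte):
    """Upper bound on throughput for a given arithmetic intensity."""
    # TB/s * FLOPs/byte = TFLOPS
    memory_roof = BANDWIDTH_TBPS * intensity_flops_per_byte
    return min(PEAK_TFLOPS, memory_roof)


# Intensity at which the memory roof meets the compute roof (about 195 FLOPS/byte).
ridge_point = PEAK_TFLOPS / BANDWIDTH_TBPS

for name, intensity in [("BERT, batch 1", 8.0), ("ResNet-50, batch 256", 300.0)]:
    bound = roofline_tflops(intensity)
    regime = "memory-bound" if intensity < ridge_point else "compute-bound"
    print(f"{name}: bound {bound:.1f} TFLOPS ({bound / PEAK_TFLOPS:.0%} of peak), {regime}")
```

The result is an upper bound, not a prediction. The roughly 280 TFLOPS measured for ResNet-50 sits below the 312 TFLOPS roof because real kernels also pay for scheduling, synchronization, and imperfect overlap of compute with data movement.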

This quantitative analysis, formalized in roofline models[10], provides a systematic framework that guides both algorithm design and hardware selection by clearly identifying the dominant performance constraints for specific workloads. Understanding these quantitative relationships allows engineers to predict performance bottlenecks and optimize both model architectures and deployment strategies accordingly. For instance, increasing batch size from 1 to 32 for transformer inference can raise arithmetic intensity from 8 FLOPS/byte to 150 FLOPS/byte, moving operations from deeply memory-bound toward the 195 FLOPS/byte threshold at which compute becomes the limit, and improving GPU utilization from 4% to 65% (Pope et al. 2022).
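Batching raises arithmetic intensity because each weight is read from memory once and reused for every input in the batch. The sketch below models a single dense layer under simplifying assumptions (FP16 values, one read of the weights and inputs, one write of the outputs, no caching effects). The function name and layer sizes are hypothetical, and the absolute values are not expected to match the full-model figures cited above; only the trend is the point.

```python
def dense_layer_intensity(rows, d_in, d_out, bytes_per_value=2):
    """Arithmetic intensity (FLOPs per byte) of y = x @ W for `rows` input vectors.

    Counts one read of W, one read of x, and one write of y, and ignores caching.
    """
    flops = 2 * rows * d_in * d_out                  # one multiply and one add per weight per row
    weight_bytes = d_in * d_out * bytes_per_value    # read once, shared by every row
    activation_bytes = rows * (d_in + d_out) * bytes_per_value
    return flops / (weight_bytes + activation_bytes)


# `rows` stands for the number of input vectors that share one pass over the weights.
for rows in (1, 8, 32, 256):
    print(f"rows = {rows:>3}: {dense_layer_intensity(rows, 4096, 4096):.1f} FLOPs/byte")
```

Intensity grows almost linearly with the number of rows until activation traffic becomes comparable to weight traffic, which is why larger batches move a workload toward the compute-bound side of the roofline.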

10 Roofline Model: A visual performance model developed at UC Berkeley that plots computational intensity (FLOPS/byte) against performance (FLOPS/second) to identify whether algorithms are compute-bound or memory-bound. The “roofline” represents theoretical peak performance limits, with the sloped section indicating memory bandwidth constraints and the flat section showing the compute capacity limit. This model helps optimize both algorithms and hardware selection by revealing performance bottlenecks.

System benchmarks evaluate performance across scales, ranging from single-chip configurations to large distributed systems, and AI workloads including both training and inference tasks. This evaluation approach ensures that benchmarks accurately reflect real-world deployment scenarios and deliver insights that inform both hardware selection decisions and system architecture design. Figure 2 illustrates the correlation between ImageNet classification error rates and GPU adoption from 2010 to 2014. These results highlight how improved hardware capabilities, combined with algorithmic advances, drove progress in computer vision performance.

The ImageNet example above demonstrates how hardware advances enable algorithmic breakthroughs, but effective system benchmarking requires understanding the nuanced relationship between workload characteristics and hardware utilization. Modern AI systems rarely achieve theoretical peak performance due to complex interactions between computational patterns, memory hierarchies, and system architectures. This reality gap between theoretical and achieved performance shapes how we design meaningful system benchmarks.

Understanding realistic hardware utilization patterns becomes essential for actionable benchmark design. Different AI workloads interact with hardware architectures in distinctly different ways, creating utilization patterns that vary dramatically based on model architecture, batch size, and precision choices. GPU utilization varies from 85% for well-optimized ResNet-50 training with batch size 64 to only 15% with batch size 1 (You et al. 2019) due to insufficient parallelism. Memory bandwidth utilization ranges from 20% for parameter-heavy transformer models to 90% for activation-heavy convolutional networks, directly impacting achievable performance across different precision levels.

Energy efficiency adds another dimension to system benchmarking. Performance per watt varies by three orders of magnitude across computing platforms, so production deployments need to measure it directly rather than infer it from raw speed. Utilization significantly impacts efficiency: underutilized GPUs consume disproportionate power while delivering minimal performance, creating substantial efficiency penalties that affect operational costs and environmental impact.

Distributed system performance introduces additional complexity that system benchmarks must capture. Traditional roofline models extend to multi-GPU and multi-node scenarios, but distributed training introduces communication bottlenecks that often dominate performance. Inter-node bandwidth limitations, NUMA topology effects, and network congestion create performance variations that single-node benchmarks cannot reveal.

Production distributed systems face challenges that require specialized benchmarking methodologies addressing real-world deployment scenarios. Network partitions during multi-node training affect gradient synchronization and model consistency, requiring fault tolerance evaluation under partial connectivity conditions. Clock synchronization becomes critical for accurate distributed performance measurement across geographically distributed nodes, where timestamp drift can invalidate benchmark results.

Scaling efficiency measurement reveals critical distributed systems bottlenecks in production ML workloads. Linear scaling efficiency degrades significantly beyond 64-128 nodes for most models due to communication overhead: ResNet-50 training achieves 90% scaling efficiency up to 32 nodes but only 60% efficiency at 128 nodes. Gradient aggregation latency increases quadratically with cluster size in traditional parameter server architectures, while all-reduce communication patterns achieve better scaling but require high-bandwidth interconnects.
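Scaling efficiency, as used above, is the measured multi-node throughput divided by the throughput that perfect linear scaling would deliver. The following sketch shows the calculation; the throughput values are hypothetical and were chosen only to reproduce the 90% and 60% efficiencies quoted in the text.

```python
def scaling_efficiency(throughput_n, throughput_1, num_nodes):
    """Fraction of ideal linear speedup achieved when running on num_nodes."""
    if num_nodes < 1 or throughput_1 <= 0:
        raise ValueError("need at least one node and a positive baseline")
    ideal = num_nodes * throughput_1
    return throughput_n / ideal


# Hypothetical measurements in images/second (not from a real run).
single_node = 1000.0
measured = {32: 28800.0, 128: 76800.0}

for nodes, throughput in measured.items():
    efficiency = scaling_efficiency(throughput, single_node, nodes)
    speedup = throughput / single_node
    print(f"{nodes} nodes: {speedup:.1f}x speedup, {efficiency:.0%} efficiency")
```

Reporting both speedup and efficiency is useful because a cluster can still get faster in absolute terms while wasting a growing share of its hardware.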

Consensus mechanisms for benchmark completion across distributed nodes introduce coordination challenges absent from single-node evaluation. Determining benchmark completion requires distributed agreement on convergence criteria, handling node failures during benchmark execution, and ensuring consistent state across all participating nodes. Byzantine fault tolerance becomes necessary for benchmarks spanning multiple administrative domains or cloud providers.

Network topology effects significantly impact distributed training performance in production environments. InfiniBand interconnects achieve 200 Gbps per link with microsecond latency, enabling near-linear scaling for communication-intensive workloads. Ethernet-based clusters with 100 Gbps links experience 10-100x higher latency, limiting scaling efficiency for gradient-heavy models. NUMA topology within nodes creates memory bandwidth contention that affects local gradient computation before network communication.

Dynamic resource allocation in production distributed systems requires benchmarking frameworks that account for resource heterogeneity and temporal variations. Cloud instances with different memory capacities, CPU speeds, and network bandwidth create load imbalance that degrades overall training performance. Spot instance availability fluctuations require fault-tolerant benchmarking that measures recovery time from node failures and resource scaling responsiveness.

These distributed systems considerations highlight the gap between idealized single-node benchmarks and production deployment realities. Effective distributed ML benchmarking must therefore evaluate communication patterns, fault tolerance, resource heterogeneity, and coordination overhead to guide real-world system design decisions.

These hardware utilization insights directly inform benchmark design principles. Effective system benchmarks must evaluate performance across realistic utilization scenarios rather than focusing solely on peak theoretical capabilities. This approach ensures that benchmark results translate to practical deployment guidance, enabling engineers to make informed decisions about hardware selection, system configuration, and optimization strategies.

This transition from computational infrastructure evaluation naturally leads us to the third and equally critical dimension of comprehensive ML system benchmarking: data quality assessment.

Data Benchmarks

The third dimension of our framework systematically examines data quality, representativeness, and bias in machine learning evaluation. Data benchmarks assess how dataset characteristics affect model performance and reveal critical limitations that may not be apparent from algorithmic or system metrics alone. This dimension is particularly critical because data quality constraints often determine real-world deployment success regardless of algorithmic sophistication or hardware capability.

Data quality, scale, and diversity shape machine learning system performance, directly influencing how effectively algorithms learn and generalize to new situations. To address this dependency, data benchmarks establish standardized datasets and evaluation methodologies that enable consistent comparison of different approaches. These frameworks assess critical aspects of data quality, including domain coverage, potential biases, and resilience to real-world variations in input data (Gebru et al. 2021). The data engineering practices necessary for creating reliable benchmarks are detailed in Chapter 6: Data Engineering, while fairness considerations in benchmark design connect to broader responsible AI principles covered in Chapter 17: Responsible AI.

Data benchmarks serve an essential function in understanding AI system behavior under diverse data conditions. Through systematic evaluation, they help identify common failure modes, expose critical gaps in data coverage, and reveal underlying biases that could significantly impact model behavior in deployment. By providing common frameworks for data evaluation, these benchmarks enable the AI community to systematically improve data quality and address potential issues before deploying systems in production environments. This proactive approach to data quality assessment has become increasingly critical as AI systems take on more complex and consequential tasks across different domains.

Community-Driven Standardization

Building on our three-dimensional framework, we face a critical challenge created by the proliferation of benchmarks spanning performance, energy efficiency, and domain-specific applications: establishing industry-wide standards. While early computing benchmarks primarily measured simple metrics like processor speed and memory bandwidth, modern benchmarks must evaluate sophisticated aspects of system performance, from complex power consumption profiles to highly specialized application-specific capabilities. This evolution in scope and complexity necessitates comprehensive validation and consensus from the computing community, particularly in rapidly evolving fields like machine learning where performance must be evaluated across multiple interdependent dimensions.

The lasting impact of any benchmark depends critically on its acceptance by the broader research community, where technical excellence alone is insufficient for adoption. Benchmarks developed without broad community input often fail to gain meaningful traction, frequently missing critical metrics that leading research groups consider essential. Successful benchmarks emerge through collaborative development involving academic institutions, industry partners, and domain experts. This inclusive approach ensures benchmarks evaluate capabilities most crucial for advancing the field, while balancing theoretical and practical considerations.

Benchmarks developed through extensive collaboration among respected institutions carry the authority necessary to drive widespread adoption, while those perceived as advancing particular corporate interests face skepticism and limited acceptance. The remarkable success of ImageNet demonstrates how sustained community engagement through workshops and challenges establishes long-term viability and lasting impact. This community-driven development creates a foundation for formal standardization, where organizations like IEEE and ISO transform these benchmarks into official standards.

The standardization process provides crucial infrastructure for benchmark formalization and adoption. IEEE working groups transform community-developed benchmarking methodologies into formal industry standards, establishing precise specifications for measurement and reporting. The IEEE 2416-2019 standard for system power modeling exemplifies this process, codifying best practices developed through community consensus. Similarly, ISO/IEC technical committees develop international standards for benchmark validation and certification, ensuring consistent evaluation across global research and industry communities. These organizations bridge the gap between community-driven innovation and formal standardization, providing frameworks that enable reliable comparison of results across different institutions and geographic regions.

Successful community benchmarks establish clear governance structures for managing their evolution. Through rigorous version control systems and detailed change documentation, benchmarks maintain backward compatibility while incorporating new advances. This governance includes formal processes for proposing, reviewing, and implementing changes, ensuring that benchmarks remain relevant while maintaining stability. Modern benchmarks increasingly emphasize reproducibility requirements, incorporating automated verification systems and standardized evaluation environments.

Open access accelerates benchmark adoption and ensures consistent implementation. Projects that provide open-source reference implementations, comprehensive documentation, validation suites, and containerized evaluation environments reduce barriers to entry. This standardization enables research groups to evaluate solutions using uniform methods and metrics. Without such coordinated implementation frameworks, organizations might interpret benchmarks inconsistently, compromising result reproducibility and meaningful comparison across studies.

The most successful benchmarks strike a careful balance between academic rigor and industry practicality. Academic involvement ensures theoretical soundness and comprehensive evaluation methodology, while industry participation grounds benchmarks in practical constraints and real-world applications. This balance proves particularly crucial in machine learning benchmarks, where theoretical advances must translate to practical improvements in deployed systems (Patterson et al. 2021). These evaluation methodology principles guide both training and inference benchmark design throughout this chapter.

Community consensus establishes enduring benchmark relevance, while fragmentation impedes scientific progress. Through collaborative development and transparent operation, benchmarks evolve into authoritative standards for measuring advancement. The most successful benchmarks in energy efficiency and domain-specific applications share this foundation of community development and governance, demonstrating how collective expertise and shared purpose create lasting impact in rapidly advancing fields.

Benchmarking Granularity

The three-dimensional framework and measurement foundations established above provide the conceptual structure for benchmarking. However, implementing these principles requires choosing the appropriate level of detail for evaluation, from individual tensor operations to complete ML applications. Just as the optimization techniques from Chapter 10: Model Optimizations operate at different granularities, benchmarks must adapt their evaluation scope to match specific optimization goals. This hierarchical perspective allows practitioners to isolate performance bottlenecks at the micro level or assess system-wide behavior at the macro level.

System-level benchmarking provides a structured and systematic approach to assessing an ML system’s performance across various dimensions. Given the complexity of ML systems, we can dissect their performance at different levels of granularity to obtain a comprehensive view of the system’s efficiency, identify potential bottlenecks, and pinpoint areas for improvement. To this end, various types of benchmarks have evolved over the years and remain in use.

Figure 3 shows the different layers of granularity of an ML system. At the application level, end-to-end benchmarks assess the overall system performance, considering factors like data preprocessing, model training, and inference. At the model layer, benchmarks focus on assessing the efficiency and accuracy of specific models. This includes evaluating how well models generalize to new data and their computational efficiency during training and inference. Benchmarking can also extend to hardware and software infrastructure, examining the performance of individual components like GPUs or TPUs.

Micro Benchmarks

Micro-benchmarks are specialized evaluation tools that assess distinct components or specific operations within a broader machine learning process. These benchmarks isolate individual tasks to provide detailed insights into the computational demands of particular system elements, from neural network layers to optimization techniques to activation functions. For example, micro-benchmarks might measure the time required to execute a convolutional layer in a deep learning model or evaluate the speed of data preprocessing operations that prepare training data.

A key area of micro-benchmarking focuses on tensor operations11, which are the computational core of deep learning. Libraries like cuDNN12 by NVIDIA provide benchmarks for measuring fundamental computations such as convolutions and matrix multiplications across different hardware configurations. These measurements help developers understand how their hardware handles the core mathematical operations that dominate ML workloads.

11 Tensor Operations: Multi-dimensional array computations that form the backbone of neural networks, including matrix multiplication (GEMM), convolution, and element-wise operations. Modern AI accelerators optimize these primitives: NVIDIA’s Tensor Cores can achieve 312 TFLOPS for mixed-precision matrix multiplications (BF16), compared to 15-20 TFLOPS for traditional FP32 computations, representing approximately 15-20x speedup.

12 cuDNN: CUDA Deep Neural Network library, NVIDIA’s GPU-accelerated library of primitives for deep neural networks. Released in 2014, cuDNN provides highly optimized implementations for convolutions, pooling, normalization, and activation layers, delivering up to 10x performance improvements over naive implementations and becoming the de facto standard for GPU-accelerated deep learning.

13 Sigmoid Function: A mathematical activation function S(x) = 1/(1+e^(-x)) that maps any real number to a value between 0 and 1, historically important in early neural networks. Despite being computationally expensive due to exponential operations and suffering from vanishing gradient problems, sigmoid functions remain relevant for binary classification output layers and gates in LSTM cells.

14 Tanh Function: Hyperbolic tangent activation function tanh(x) = (e^x - e^(-x))/(e^x + e^(-x)) that maps inputs to values between -1 and 1, providing zero-centered outputs unlike sigmoid. While computationally intensive and still subject to vanishing gradients, tanh often performs better than sigmoid in hidden layers due to stronger gradients and symmetric output range.

15 LSTM (Long Short-Term Memory): A type of recurrent neural network architecture introduced by Hochreiter and Schmidhuber in 1997, designed to solve the vanishing gradient problem in traditional RNNs. LSTMs use gates (forget, input, output) to control information flow, enabling them to learn dependencies over hundreds of time steps, making them crucial for sequence modeling before the Transformer era.

Micro-benchmarks also examine activation functions and neural network layers in isolation. This includes measuring the performance of various activation functions like ReLU, Sigmoid13, and Tanh14 under controlled conditions, as well as evaluating the computational efficiency of distinct neural network components such as LSTM15 cells or Transformer blocks when processing standardized inputs.

DeepBench, developed by Baidu, was one of the first to demonstrate the value of comprehensive micro-benchmarking. It evaluates these fundamental operations across different hardware platforms, providing detailed performance data that helps developers optimize their deep learning implementations. By isolating and measuring individual operations, DeepBench enables precise comparison of hardware platforms and identification of potential performance bottlenecks.
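The structure of a micro-benchmark (warm up, repeat the isolated operation, report a robust statistic) can be sketched in a few lines. The example below times three activation functions in pure Python using only the standard library. It is an illustration of the measurement pattern, not a stand-in for DeepBench or cuDNN, and interpreter-level timings say nothing about GPU kernel performance. The helper `time_op` and its parameters are assumptions made for this sketch.

```python
import math
import statistics
import time


def relu(x):
    return x if x > 0.0 else 0.0


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def time_op(fn, inputs, warmup=3, repeats=10):
    """Median wall-clock seconds needed to apply fn to every input."""
    for _ in range(warmup):            # warm-up runs are discarded
        for x in inputs:
            fn(x)
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        for x in inputs:
            fn(x)
        samples.append(time.perf_counter() - start)
    return statistics.median(samples)  # median resists outlier runs


# Standardized inputs: 10,000 values spanning [-5, 5).
inputs = [(i - 5000) / 1000.0 for i in range(10000)]
operations = [("relu", relu), ("sigmoid", sigmoid), ("tanh", math.tanh)]
results = {name: time_op(fn, inputs) for name, fn in operations}

for name, seconds in results.items():
    print(f"{name}: {seconds / len(inputs) * 1e9:.1f} ns per element")
```

Using the same fixed inputs for every operation mirrors the "standardized inputs" requirement described above, so that differences in timing reflect the operation rather than the data.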

Macro Benchmarks

While micro-benchmarks examine individual operations like tensor computations and layer performance, macro-benchmarks evaluate complete machine learning models. This shift from component-level to model-level assessment provides insights into how architectural choices and component interactions affect overall model behavior. For instance, while micro-benchmarks might show optimal performance for individual convolutional layers, macro-benchmarks reveal how these layers work together within a complete convolutional neural network.

Macro-benchmarks measure multiple performance dimensions that emerge only at the model level. These include prediction accuracy, which shows how well the model generalizes to new data; memory consumption patterns across different batch sizes and sequence lengths; throughput under varying computational loads; and latency across different hardware configurations. Understanding these metrics helps developers make informed decisions about model architecture, optimization strategies, and deployment configurations.
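Latency and throughput at the model level are usually reported as summary statistics over many runs rather than as single numbers. The sketch below shows one way to compute median latency, tail latency, and throughput from per-batch timings. The nearest-rank percentile rule and the sample latencies are assumptions chosen for illustration.

```python
import math


def percentile(sorted_values, pct):
    """Nearest-rank percentile of an already sorted, non-empty list."""
    rank = max(1, math.ceil(pct * len(sorted_values) / 100))
    return sorted_values[rank - 1]


def summarize(latencies_s, batch_size):
    """Median and tail latency (ms) plus throughput (samples/second)."""
    ordered = sorted(latencies_s)
    total_time = sum(ordered)
    return {
        "p50_ms": percentile(ordered, 50) * 1e3,
        "p99_ms": percentile(ordered, 99) * 1e3,
        "throughput_per_s": batch_size * len(ordered) / total_time,
    }


# Hypothetical per-batch latencies in seconds: mostly fast, with two slow outliers.
latencies = [0.010] * 98 + [0.020, 0.050]
summary = summarize(latencies, batch_size=8)
print(summary)
```

Reporting a tail percentile alongside the median matters because a mean can hide the slow requests that dominate user-visible behavior.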

The assessment of complete models occurs under standardized conditions using established datasets and tasks. For example, computer vision models might be evaluated on ImageNet, measuring both computational efficiency and prediction accuracy. Natural language processing models might be assessed on translation tasks, examining how they balance quality and speed across different language pairs.

Several industry-standard benchmarks enable consistent model evaluation across platforms. MLPerf Inference provides comprehensive testing suites adapted for different computational environments (Reddi et al. 2019). MLPerf Mobile focuses on mobile device constraints (Janapa Reddi et al. 2022), while MLPerf Tiny addresses microcontroller deployments (Banbury et al. 2021). For embedded systems, EEMBC’s MLMark emphasizes both performance and power efficiency. The AI-Benchmark suite specializes in mobile platforms, evaluating models across diverse tasks from image recognition to face parsing.

End-to-End Benchmarks

End-to-end benchmarks provide an all-inclusive evaluation that extends beyond the boundaries of the ML model itself. Rather than focusing solely on a machine learning model’s computational efficiency or accuracy, these benchmarks encompass the entire pipeline of an AI system. This includes initial ETL (Extract-Transform-Load) or ELT (Extract-Load-Transform) data processing, the core model’s performance, post-processing of results, and critical infrastructure components like storage and network systems.

Data processing is the foundation of all AI systems, transforming raw data into a format suitable for model training or inference. In ETL pipelines, data undergoes extraction from source systems, transformation through cleaning and feature engineering, and loading into model-ready formats. The efficiency, scalability, and accuracy of these preprocessing steps significantly impact overall system performance. End-to-end benchmarks must assess standardized datasets through these pipelines to ensure data preparation doesn’t become a bottleneck.

The post-processing phase plays an equally important role. This involves interpreting the model’s raw outputs, converting scores into meaningful categories, filtering results based on predefined tasks, or integrating with other systems. For instance, a computer vision system might need to post-process detection boundaries, apply confidence thresholds, and format results for downstream applications. In real-world deployments, this phase proves crucial for delivering actionable insights.
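As a concrete illustration of the post-processing step described above, the sketch below filters raw detections by a confidence threshold and converts class indices into labeled records for a downstream consumer. The record layout (`class_id`, `score`, `box`), the label list, and the 0.5 threshold are hypothetical choices for this example.

```python
def postprocess(detections, labels, min_confidence=0.5):
    """Drop low-confidence detections and format the rest, best first."""
    kept = [d for d in detections if d["score"] >= min_confidence]
    kept.sort(key=lambda d: d["score"], reverse=True)
    return [
        {
            "label": labels[d["class_id"]],       # index -> readable category
            "confidence": round(d["score"], 2),
            "box": d["box"],                      # (x_min, y_min, x_max, y_max)
        }
        for d in kept
    ]


# Hypothetical raw model outputs.
labels = ["person", "vehicle"]
raw = [
    {"class_id": 1, "score": 0.65, "box": (40, 40, 90, 80)},
    {"class_id": 0, "score": 0.91, "box": (0, 0, 10, 10)},
    {"class_id": 1, "score": 0.30, "box": (5, 5, 20, 20)},
]
for record in postprocess(raw, labels):
    print(record)
```

In an end-to-end benchmark this step would be timed along with the model, since its cost grows with the number of candidate detections.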

Beyond core AI operations, infrastructure components heavily influence overall performance and user experience. Storage solutions, whether cloud-based, on-premises, or hybrid, can significantly impact data retrieval and storage times, especially with vast AI datasets. Network interactions, vital for distributed systems, can become performance bottlenecks if not optimized. End-to-end benchmarks must evaluate these components under specified environmental conditions to ensure reproducible measurements of the entire system.

To date, there are no public, end-to-end benchmarks that fully account for data storage, network, and compute performance. While MLPerf Training and Inference approach end-to-end evaluation, they primarily focus on model performance rather than real-world deployment scenarios. Nonetheless, they provide valuable baseline metrics for assessing AI system capabilities.

Given the inherent specificity of end-to-end benchmarking, organizations typically perform these evaluations internally by instrumenting production deployments. This allows engineers to develop result interpretation guidelines based on realistic workloads, but given the sensitivity and specificity of the information, these benchmarks rarely appear in public settings.

Granularity Trade-offs and Selection Criteria

As shown in Table 1, different challenges emerge at different stages of an AI system’s lifecycle. Each benchmarking approach provides unique insights: micro-benchmarks help engineers optimize specific components like GPU kernel implementations or data loading operations, macro-benchmarks guide model architecture decisions and algorithm selection, while end-to-end benchmarks reveal system-level bottlenecks in production environments.

| Component | Micro Benchmarks | Macro Benchmarks | End-to-End Benchmarks |
|---|---|---|---|
| Focus | Individual operations | Complete models | Full system pipeline |
| Scope | Tensor ops, layers, activations | Model architecture, training, inference | ETL, model, infrastructure |
| Example | Conv layer performance on cuDNN | ResNet-50 on ImageNet | Production recommendation system |
| Advantages | Precise bottleneck identification, Component optimization | Model architecture comparison, Standardized evaluation | Realistic performance assessment, System-wide insights |
| Challenges | May miss interaction effects | Limited infrastructure insights | Complex to standardize, Often proprietary |
| Typical Use | Hardware selection, Operation optimization | Model selection, Research comparison | Production system evaluation |

Figure 4 visualizes the core trade-off between diagnostic power and real-world representativeness across benchmark granularity levels. This relationship illustrates why comprehensive ML system evaluation requires multiple benchmark types: micro-benchmarks provide precise optimization guidance for isolated components, while end-to-end benchmarks capture the complex interactions that emerge in production systems. The optimal benchmarking strategy combines insights from all three levels to balance detailed component analysis with realistic system-wide assessment.

Component interaction often produces unexpected behaviors. For example, while micro-benchmarks might show excellent performance for individual convolutional layers, and macro-benchmarks might demonstrate strong accuracy for the complete model, end-to-end evaluation could reveal that data preprocessing creates unexpected bottlenecks during high-traffic periods. These system-level insights often remain hidden when components undergo isolated testing.
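One way organizations surface such hidden bottlenecks is to instrument each pipeline stage and compare where time accumulates. The sketch below is a minimal version of that idea using a context manager. The `StageTimer` class, the stage names, and the toy `handle_request` pipeline are all hypothetical, with a simple average standing in for a real model call.

```python
import time
from collections import defaultdict
from contextlib import contextmanager


class StageTimer:
    """Accumulates wall-clock time per named pipeline stage."""

    def __init__(self, clock=time.perf_counter):
        self._clock = clock               # injectable for deterministic tests
        self.totals = defaultdict(float)

    @contextmanager
    def stage(self, name):
        start = self._clock()
        try:
            yield
        finally:
            self.totals[name] += self._clock() - start

    def bottleneck(self):
        """Name of the stage with the largest accumulated time."""
        return max(self.totals, key=self.totals.get)

    def shares(self):
        """Fraction of total measured time spent in each stage."""
        total = sum(self.totals.values())
        return {name: t / total for name, t in self.totals.items()}


def handle_request(timer, raw):
    with timer.stage("preprocess"):
        features = [float(x) / 255.0 for x in raw]
    with timer.stage("inference"):
        score = sum(features) / len(features)   # stand-in for a model call
    with timer.stage("postprocess"):
        return "anomalous" if score > 0.5 else "normal"


timer = StageTimer()
for _ in range(100):
    handle_request(timer, list(range(256)))
print("bottleneck:", timer.bottleneck())
print({name: f"{share:.0%}" for name, share in timer.shares().items()})
```

Accumulating totals across many requests, rather than timing a single one, is what lets load-dependent effects such as preprocessing slowdowns show up in the stage shares.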

Having established which level of evaluation serves which optimization goals, we now examine the concrete components that constitute benchmark implementations at any granularity level.

Benchmark Components

Using our established framework, we now examine the practical components that constitute any benchmark implementation. These components provide the concrete structure for measuring performance across all three dimensions simultaneously. Whether evaluating model accuracy (algorithmic dimension), measuring inference latency (system dimension), or assessing dataset quality (data dimension), benchmarks share common structural elements that ensure systematic and reproducible evaluation.

The granularity level established in the previous section directly shapes how these components are instantiated. Micro-benchmarks measuring tensor operations require synthetic inputs that isolate specific computational patterns, enabling precise performance characterization of individual kernels as discussed in Chapter 11: AI Acceleration. Macro-benchmarks evaluating complete models demand representative datasets like ImageNet that capture realistic task complexity while enabling standardized comparison across architectures. End-to-end benchmarks assessing production systems must incorporate real-world data characteristics including distribution shift, noise, and edge cases absent from curated evaluation sets. Similarly, evaluation metrics shift focus across granularity levels: micro-benchmarks emphasize FLOPS and memory bandwidth utilization, macro-benchmarks balance accuracy and inference speed, while end-to-end benchmarks prioritize system reliability and operational efficiency under load. Understanding this systematic variation ensures that component choices align with evaluation objectives rather than applying uniform approaches across different benchmarking scales.

Having established how benchmark granularity shapes evaluation scope (from micro-benchmarks isolating tensor operations to end-to-end assessments of complete systems), we now examine how these conceptual levels translate into concrete benchmark implementations. The components discussed abstractly above must be instantiated through specific choices about tasks, datasets, models, and metrics. This implementation process follows a systematic workflow that ensures reproducible and meaningful evaluation regardless of the chosen granularity level.

An AI benchmark provides a structured framework for systematically evaluating artificial intelligence systems. While individual benchmarks vary significantly in their specific focus and granularity, they share common implementation components that enable consistent evaluation and comparison across different approaches.

Figure 5 illustrates this structured workflow, showcasing how the essential components (task definition, dataset selection, model selection, and evaluation metrics) interconnect to form a complete evaluation pipeline. Each component builds upon the previous one, creating a systematic progression from problem specification through deployment assessment.

Effective benchmark design must account for the optimization techniques established in preceding chapters. Quantization and pruning affect model accuracy-efficiency trade-offs, requiring benchmarks that measure both speedup and accuracy preservation simultaneously. Hardware acceleration techniques influence arithmetic intensity and memory bandwidth utilization, necessitating roofline model analysis to interpret results correctly. Understanding these optimization foundations enables benchmark selection that validates claimed improvements rather than measuring artificial scenarios.
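The requirement to measure speedup and accuracy preservation together can be made explicit in code. The sketch below accepts an optimization only if it is faster than the baseline and stays within an accuracy budget. The function name, the one-percentage-point budget, and the sample measurements are assumptions for illustration, not values from any benchmark specification.

```python
def evaluate_optimization(base_latency_ms, opt_latency_ms,
                          base_accuracy, opt_accuracy,
                          max_accuracy_drop=0.01):
    """Report speedup and accuracy loss, and whether both are acceptable."""
    speedup = base_latency_ms / opt_latency_ms
    accuracy_drop = base_accuracy - opt_accuracy
    return {
        "speedup": speedup,
        "accuracy_drop": accuracy_drop,
        "accepted": speedup > 1.0 and accuracy_drop <= max_accuracy_drop,
    }


# Hypothetical results for two quantized variants of the same model.
mild = evaluate_optimization(12.0, 4.0, base_accuracy=0.761, opt_accuracy=0.755)
aggressive = evaluate_optimization(12.0, 2.0, base_accuracy=0.761, opt_accuracy=0.700)
print("mild:", mild)
print("aggressive:", aggressive)
```

The second variant is faster but is rejected, which is the situation a speed-only benchmark would misreport as an improvement.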

Problem Definition

As illustrated in Figure 5, a benchmark implementation begins with a formal specification of the machine learning task and its evaluation criteria. In machine learning, tasks represent well-defined problems that AI systems must solve. Consider an anomaly detection system that processes audio signals to identify deviations from normal operation patterns, as shown in Figure 5. This industrial monitoring application exemplifies how formal task specifications translate into practical implementations.

The formal definition of any benchmark task encompasses both the computational problem and its evaluation framework. While the specific tasks vary significantly by domain, well-established categories have emerged across major fields of AI research. Natural language processing tasks, for example, include machine translation, question answering (Hirschberg and Manning 2015), and text classification. Computer vision similarly employs standardized tasks such as object detection, image segmentation, and facial recognition (Everingham et al. 2009).

Every benchmark task specification must define three essential elements. The input specification determines what data the system processes. In Figure 5, this consists of audio waveform data. The output specification describes the required system response, such as the binary classification of normal versus anomalous patterns. The performance specification establishes quantitative requirements for accuracy, processing speed, and resource utilization.
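The three elements of a task specification map naturally onto a small data structure. The sketch below encodes them for the audio anomaly detection example. The `TaskSpec` class and every threshold value are hypothetical, chosen only to show how a performance specification becomes a checkable condition.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TaskSpec:
    """Input, output, and performance specification for a benchmark task."""
    name: str
    input_spec: str          # what data the system processes
    output_spec: str         # what response the system must produce
    min_accuracy: float      # performance specification: quality
    max_latency_ms: float    # performance specification: speed
    max_memory_mb: float     # performance specification: resources

    def is_met(self, accuracy, latency_ms, memory_mb):
        """True when a measured result satisfies every requirement."""
        return (accuracy >= self.min_accuracy
                and latency_ms <= self.max_latency_ms
                and memory_mb <= self.max_memory_mb)


# Hypothetical thresholds for illustration only.
anomaly_task = TaskSpec(
    name="audio-anomaly-detection",
    input_spec="audio waveform",
    output_spec="binary label: normal or anomalous",
    min_accuracy=0.85,
    max_latency_ms=10.0,
    max_memory_mb=0.5,
)

print(anomaly_task.is_met(accuracy=0.90, latency_ms=8.2, memory_mb=0.3))
print(anomaly_task.is_met(accuracy=0.90, latency_ms=14.0, memory_mb=0.3))
```

Making the specification immutable (`frozen=True`) reflects the idea that the task definition is fixed before any submission is evaluated.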

Task design directly impacts the benchmark’s ability to evaluate AI systems effectively. The audio anomaly detection example clearly illustrates this relationship through its specific requirements: processing continuous signal data, adapting to varying noise conditions, and operating within strict time constraints. These practical constraints create a detailed framework for assessing model performance, ensuring evaluations reflect real-world operational demands.

The implementation of a benchmark proceeds systematically from this foundational task definition. Each subsequent phase, from dataset selection through deployment, builds directly upon these initial specifications, ensuring that evaluations maintain consistency while addressing the defined requirements across different approaches and implementations.

Standardized Datasets

Building directly upon the problem definition established in the previous phase, standardized datasets provide the essential foundation for training and evaluating models. These carefully curated collections ensure all models undergo testing under identical conditions, enabling direct comparisons across different approaches and architectures. Figure 5 demonstrates this through an audio anomaly detection example, where waveform data serves as the standardized input for evaluating detection performance.

In computer vision, datasets such as ImageNet (Deng et al. 2009), COCO (Lin et al. 2014), and CIFAR-1016 (Krizhevsky, Hinton, et al. 2009) serve as reference standards. For natural language processing, collections such as SQuAD17 (Rajpurkar et al. 2016), GLUE18 (Wang et al. 2018), and WikiText (Merity et al. 2016) fulfill similar functions. These datasets encompass a range of complexities and edge cases to thoroughly evaluate machine learning systems.

16 CIFAR-10: A dataset of 60,000 32×32 color images across 10 classes (airplane, automobile, bird, cat, deer, dog, frog, horse, ship, truck), collected by Alex Krizhevsky and Geoffrey Hinton at the University of Toronto in 2009. Despite its small image size, CIFAR-10 became fundamental for comparing deep learning architectures, with top-1 error rates improving from 18.5% with traditional methods to 2.6% with modern deep networks.

17 SQuAD: Stanford Question Answering Dataset, introduced in 2016, containing 100,000+ question-answer pairs based on Wikipedia articles. SQuAD became the gold standard for evaluating reading comprehension, with human performance at 87.4% F1 score and leading AI systems achieving over 90% by 2018, marking the first time machines exceeded human performance on this benchmark.

18 GLUE: General Language Understanding Evaluation, a collection of nine English sentence understanding tasks including sentiment analysis, textual entailment, and similarity. Introduced in 2018, GLUE provided standardized evaluation with a human baseline of 87.1%. BERT reached 80.5% in 2018, and within about a year models surpassed the human baseline, saturating the benchmark and leading to the more challenging SuperGLUE benchmark.

The strategic selection of datasets, shown early in the workflow of Figure 5, shapes all subsequent implementation steps and ultimately determines the benchmark’s effectiveness. In the audio anomaly detection example, the dataset must include representative waveform samples of normal operation alongside comprehensive examples of various anomalous conditions. Notable audio datasets include ToyADMOS for industrial manufacturing anomalies and Google Speech Commands for keyword spotting. Regardless of the specific dataset chosen, the data volume must suffice for both model training and validation, while incorporating real-world signal characteristics and noise patterns that reflect deployment conditions.

The selection of benchmark datasets directly shapes experimental outcomes and model evaluation. Effective datasets must balance two key requirements: accurately representing real-world challenges while maintaining sufficient complexity to differentiate model performance meaningfully. While research often utilizes simplified datasets like ToyADMOS19 (Koizumi et al. 2019), these controlled environments, though valuable for methodological development, may not fully capture real-world deployment complexities.

19 ToyADMOS: A dataset for anomaly detection in machine operating sounds, released in 2019 by researchers at NTT Media Intelligence Laboratories, containing audio recordings of toy car and toy conveyor belt operations. The dataset includes 1,000+ normal samples and 300+ anomalous samples per machine type, designed to standardize acoustic anomaly detection research with reproducible experimental conditions.

Model Selection

Following dataset specification, the benchmark process advances systematically to model architecture selection and implementation. This critical phase establishes performance baselines and determines the optimal modeling approach for the specific task at hand. The selection process directly builds upon the architectural foundations established in Chapter 4: DNN Architectures and must account for the framework-specific considerations discussed in Chapter 7: AI Frameworks. Figure 5 illustrates this progression through the model selection stage and subsequent training code development.

Baseline models serve as the reference points for evaluating novel approaches. These span from basic implementations, including linear regression for continuous predictions and logistic regression for classification tasks, to advanced architectures with proven success in comparable domains. The choice of baseline depends critically on the deployment framework: a PyTorch implementation may exhibit different performance characteristics than its TensorFlow equivalent due to framework-specific optimizations and operator implementations. In natural language processing applications, advanced language models like BERT20 have emerged as standard baselines for comparative analysis. The architectural details of transformers and their performance characteristics are thoroughly covered in Chapter 4: DNN Architectures.

20 BERT: Bidirectional Encoder Representations from Transformers, introduced by Google in 2018, revolutionized natural language processing by pre-training on vast text corpora using masked language modeling. BERT-Large contains 340 million parameters and achieved state-of-the-art results on 11 NLP tasks, helping establish the pre-training and fine-tuning paradigm that underlies modern language models.

Selecting the right baseline model requires careful evaluation of architectures against benchmark requirements. This selection process directly informs the development of training code, which is the cornerstone of benchmark reproducibility. The training implementation must thoroughly document all aspects of the model pipeline, from data preprocessing through training procedures, enabling precise replication of model behavior across research teams.

With model architecture selected, model development follows two primary optimization paths: training and inference. During training optimization, efforts concentrate on achieving target accuracy metrics while operating within computational constraints. The training implementation must demonstrate consistent achievement of performance thresholds under specified conditions.

In parallel, the inference optimization path addresses deployment considerations, particularly the critical transition from development to production environments. A key example is quantization, which reduces numerical precision by moving from high-precision representations (for example, 32-bit floating point) to lower-precision ones (for example, 8-bit integers) to enhance deployment efficiency. This process demands careful calibration to maintain model accuracy while reducing resource requirements. The benchmark must detail both the quantization methodology and verification procedures that confirm preserved performance.
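The precision-reduction step and its verification can be illustrated with a small sketch. It assumes symmetric per-tensor quantization to 8-bit integers, which is only one of several possible schemes, and it uses randomly generated weights rather than a real model. The function names are hypothetical.

```python
import random


def quantize_int8(values):
    """Symmetric per-tensor quantization: floats -> integers in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize(q, scale):
    """Map quantized integers back to approximate float values."""
    return [qi * scale for qi in q]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


# Synthetic stand-ins for one layer's weights and one input vector.
random.seed(0)
weights = [random.gauss(0.0, 0.1) for _ in range(256)]
inputs = [random.gauss(0.0, 1.0) for _ in range(256)]

q_weights, scale = quantize_int8(weights)
restored = dequantize(q_weights, scale)

# Verification: compare the quantized result against the full-precision one.
max_weight_error = max(abs(w - r) for w, r in zip(weights, restored))
abs_output_error = abs(dot(inputs, weights) - dot(inputs, restored))
```

Rounding to the nearest integer bounds each weight's error by half the scale, so the output error is bounded by half the scale times the sum of absolute input values. A real benchmark would verify task-level accuracy on a held-out dataset rather than a single dot product.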

The intersection of these two optimization paths with real-world constraints shapes overall deployment strategy. Comprehensive benchmarks must therefore specify requirements for both training and inference scenarios, ensuring models maintain consistent performance from development through deployment. This crucial connection between development and production metrics naturally leads to the establishment of evaluation criteria.

The optimization process must balance four key objectives: model accuracy, computational speed, memory utilization, and energy efficiency. Following our three-dimensional benchmarking framework, this complex optimization landscape necessitates robust evaluation metrics that can effectively quantify performance across algorithmic, system, and data dimensions. As models transition from development to deployment, these metrics serve as critical tools for guiding optimization decisions and validating performance enhancements.

Evaluation Metrics

Building upon the optimization framework established through model selection, evaluation metrics provide the quantitative measures needed to assess machine learning model performance. These metrics establish objective standards for comparing different approaches, allowing researchers and practitioners to gauge solution effectiveness. The selection of appropriate metrics represents a critical aspect of benchmark design, as they must align with task objectives while providing meaningful insights into model behavior across both training and deployment scenarios. Importantly, metric computation can vary between frameworks: as the training methodologies from Chapter 8: AI Training show, frameworks differ in how they handle loss computation and gradient accumulation, which affects reported metrics.

Task-specific metrics quantify a model’s performance on its intended function. For example, classification tasks employ metrics including accuracy (overall correct predictions), precision (positive prediction accuracy), recall (positive case detection rate), and F1 score (precision-recall harmonic mean) (Sokolova and Lapalme 2009). Regression problems utilize error measurements like Mean Squared Error (MSE) and Mean Absolute Error (MAE) to assess prediction accuracy. Domain-specific applications often require specialized metrics; for example, machine translation uses the BLEU score21 to measure n-gram overlap between machine-generated and human reference translations (Papineni et al. 2001).
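The classification metrics named above follow directly from the four confusion-matrix counts. The sketch below computes them for a made-up, imbalanced anomaly detection result. The labels and predictions are illustrative data, not measurements from any benchmark, and the function name is hypothetical.

```python
def classification_metrics(y_true, y_pred):
    """Compute accuracy, precision, recall, and F1 for binary labels (1 = positive)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)

    accuracy = (tp + tn) / len(y_true)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Illustrative data: 10 anomalies among 100 samples.
# The model finds only 2 anomalies and raises 1 false alarm.
y_true = [1] * 10 + [0] * 90
y_pred = [1] * 2 + [0] * 8 + [1] * 1 + [0] * 89

metrics = classification_metrics(y_true, y_pred)
```

On this data the accuracy is 0.91 even though recall is only 0.2, which shows why accuracy alone can mislead when classes are imbalanced.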

21 BLEU Score: Bilingual Evaluation Understudy, introduced by IBM in 2002, measures machine translation quality by comparing n-gram overlap between machine and human reference translations. BLEU scores range from 0-100, with scores above 30 considered useful, above 50 good, and above 60 high quality. Google Translate achieved BLEU scores of 40+ on major language pairs by 2016.

However, as models transition from research to production deployment, implementation metrics become equally important. Model size, measured in parameters or memory footprint, directly affects deployment feasibility across different hardware platforms. Processing latency, typically measured in milliseconds per inference, determines whether the model meets real-time requirements. Energy consumption, measured in joules per inference (or as average power draw in watts), indicates operational efficiency. These practical considerations reflect the growing need for solutions that balance accuracy with computational efficiency. The operational challenges of maintaining these metrics in production environments are explored in deployment strategies (Chapter 13: ML Operations).
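These implementation metrics are related by simple arithmetic, sketched below. The parameter count and latency are the values Figure 5 reports for the anomaly detection example. The average power draw is a hypothetical number chosen only to make the calculation concrete, and the helper names are illustrative.

```python
# Bytes needed to store one parameter at each numeric precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def model_footprint_bytes(num_params, precision):
    """Memory needed for the weights alone (ignores activations and runtime)."""
    return num_params * BYTES_PER_PARAM[precision]


def energy_per_inference_mj(avg_power_mw, latency_ms):
    """Energy = power x time. mW x ms gives microjoules; /1000 gives millijoules."""
    return avg_power_mw * latency_ms / 1000.0


num_params = 270_000       # from the Figure 5 example
latency_ms = 10.4          # from the Figure 5 example
assumed_power_mw = 50.0    # hypothetical average power draw during inference

footprint_fp32 = model_footprint_bytes(num_params, "fp32")
footprint_int8 = model_footprint_bytes(num_params, "int8")
energy_mj = energy_per_inference_mj(assumed_power_mw, latency_ms)
```

Under these assumptions the weights occupy about 1.08 MB at 32-bit precision and 270 KB at 8-bit precision, and each inference consumes about 0.52 mJ.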

Consequently, the selection of appropriate metrics requires careful consideration of both task requirements and deployment constraints. A single metric rarely captures all relevant aspects of performance in real-world scenarios. For instance, in anomaly detection systems, high accuracy alone may not indicate good performance if the model generates frequent false alarms. Similarly, a fast model with poor accuracy fails to provide practical value.

Figure 5 demonstrates this multi-metric evaluation approach. The anomaly detection system reports performance across multiple dimensions: model size (270K parameters), processing speed (10.4 ms/inference), and detection accuracy (0.86 AUC22). This combination of metrics ensures the model meets both technical and operational requirements in real-world deployment scenarios.

22 AUC (Area Under the Curve): A performance metric for binary classification that measures the area under the Receiver Operating Characteristic (ROC) curve, representing the trade-off between true positive and false positive rates. AUC values range from 0 to 1, where 0.5 indicates random performance, 0.7-0.8 is acceptable, 0.8-0.9 is excellent, and above 0.9 is outstanding discrimination ability.
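AUC can also be read as the probability that a randomly chosen positive example receives a higher score than a randomly chosen negative one. The sketch below computes it that way by comparing every positive-negative pair, which is simple but quadratic in the number of samples. The scores are made-up anomaly scores and the function name is hypothetical.

```python
def roc_auc(labels, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly.

    Ties between a positive and a negative score count as half a win.
    """
    pos = [s for l, s in zip(labels, scores) if l == 1]
    neg = [s for l, s in zip(labels, scores) if l == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative sample")

    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Illustrative anomaly scores: higher means "more likely anomalous".
labels = [0, 0, 0, 0, 1, 1, 1]
scores = [0.1, 0.3, 0.35, 0.8, 0.4, 0.7, 0.9]

auc = roc_auc(labels, scores)
```

Here 10 of the 12 positive-negative pairs are ordered correctly, giving an AUC of about 0.83. Production code would normally use a sort-based implementation from an established library.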

Benchmark Harness

While evaluation metrics provide the measurement framework, a benchmark harness implements the systematic infrastructure for evaluating model performance under controlled conditions. This critical component ensures reproducible testing by managing how inputs are delivered to the system under test and how measurements are collected, effectively transforming theoretical metrics into quantifiable measurements.

The harness design should align with the intended deployment scenario and usage patterns. For server deployments, the harness implements request patterns that simulate real-world traffic, typically generating inputs using a Poisson distribution23 to model random but statistically consistent server workloads. The harness manages concurrent requests and varying load intensities to evaluate system behavior under different operational conditions.

23 Poisson Distribution: A mathematical model that describes the number of events occurring independently at a constant average rate, named after French mathematician Siméon Denis Poisson, who published it in 1837. In server workloads, Poisson distributions are commonly used to model request arrivals with average rates of 10-1000 requests per second, where the probability of exactly k requests in a time interval t at average rate λ follows P(k) = (λt)^k * e^(-λt) / k!.
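A harness can produce Poisson-distributed arrivals by drawing the gaps between consecutive requests from an exponential distribution with the same rate. The following is a minimal sketch of that idea using only the Python standard library. The function name, rate, and duration are illustrative assumptions.

```python
import random


def poisson_arrival_times(rate_per_s, duration_s, seed=0):
    """Generate request timestamps for a Poisson process.

    Inter-arrival gaps are exponentially distributed with mean 1 / rate_per_s,
    which makes the number of arrivals in any window Poisson-distributed.
    """
    rng = random.Random(seed)  # fixed seed keeps the schedule reproducible
    t = 0.0
    arrivals = []
    while True:
        t += rng.expovariate(rate_per_s)
        if t >= duration_s:
            break
        arrivals.append(t)
    return arrivals


# Illustrative load: an average of 100 requests per second for 10 seconds.
arrivals = poisson_arrival_times(rate_per_s=100.0, duration_s=10.0)
observed_rate = len(arrivals) / 10.0
```

Fixing the seed lets every system under test receive the identical request schedule, while the schedule itself still exhibits the bursts and lulls of random traffic.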

For embedded and mobile applications, the harness generates input patterns that reflect actual deployment conditions. This might involve sequential image injection for mobile vision applications or synchronized multi-sensor streams for autonomous systems. Such precise input generation and timing control ensures the system experiences realistic operational patterns, revealing performance characteristics that would emerge in actual device deployment.

The harness must also accommodate different throughput models. Batch processing scenarios require the ability to evaluate system performance on large volumes of parallel inputs, while real-time applications need precise timing control for sequential processing. Figure 5 illustrates this in the embedded implementation phase, where the harness must support precise measurement of inference time and energy consumption per operation.
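The two throughput models call for different measurements: per-sample latency for sequential processing and samples per second for batch processing. The sketch below shows both against a stand-in workload. `fake_model`, `run_sequential`, and `run_batched` are hypothetical names, and the workload is a placeholder rather than a real inference call.

```python
import time


def fake_model(batch):
    """Stand-in for the system under test (hypothetical workload)."""
    return [sum(i * i for i in range(200)) + x for x in batch]


def run_sequential(model, samples):
    """Feed one sample at a time and record each call's latency."""
    latencies_ms = []
    for s in samples:
        start = time.perf_counter()
        model([s])
        latencies_ms.append((time.perf_counter() - start) * 1000.0)
    latencies_ms.sort()
    n = len(latencies_ms)
    return {"median_ms": latencies_ms[n // 2],
            "p90_ms": latencies_ms[min(n - 1, int(0.9 * n))]}


def run_batched(model, samples, batch_size):
    """Feed samples in batches and report overall throughput."""
    start = time.perf_counter()
    processed = 0
    for i in range(0, len(samples), batch_size):
        processed += len(model(samples[i:i + batch_size]))
    elapsed = time.perf_counter() - start
    return {"samples": processed, "throughput_per_s": processed / elapsed}


samples = list(range(256))
sequential = run_sequential(fake_model, samples)
batched = run_batched(fake_model, samples, batch_size=32)
```

Reporting a tail percentile alongside the median matters for real-time scenarios, where occasional slow inferences can violate timing constraints even when the typical latency looks acceptable. Energy measurement would require external instrumentation and is not covered by this sketch.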