Sustainable AI

DALL·E 3 Prompt: 3D illustration on a light background of a sustainable AI network interconnected with a myriad of eco-friendly energy sources. The AI actively manages and optimizes its energy from sources like solar arrays, wind turbines, and hydro dams, emphasizing power efficiency and performance. Deep neural networks spread throughout, receiving energy from these sustainable resources.

Purpose

How do environmental considerations influence the design and implementation of machine learning systems, and what principles emerge from examining AI through an ecological perspective?

Machine learning systems inherently require significant computational resources, raising critical concerns about their environmental impact. Addressing these concerns requires a deep understanding of how architectural decisions affect energy consumption, resource utilization, and ecological sustainability across a system’s components. A systematic exploration of these relationships helps identify key architectural principles and design strategies that harmonize performance objectives with ecological stewardship.

Learning Objectives

- Define the key environmental impacts of AI systems.

- Identify the ethical considerations surrounding sustainable AI.

- Analyze strategies for reducing AI’s carbon footprint.

- Describe the role of energy-efficient design in sustainable AI.

- Discuss the importance of policy and regulation for sustainable AI.

- Recognize key challenges in the AI hardware and software lifecycle.

Overview

Machine learning has become an essential driver of technological progress, powering advancements across industries and scientific domains. However, as AI models grow in complexity and scale, the computational demands required to train and deploy them have increased significantly, raising critical concerns about sustainability. The environmental impact of AI extends beyond energy consumption, encompassing carbon emissions, resource extraction, and electronic waste. As a result, it is imperative to examine AI systems through the lens of sustainability and assess the trade-offs between performance and ecological responsibility.

Developing large-scale AI models, such as state-of-the-art language and vision models, requires substantial computational power. Training a single large model can consume more than a thousand megawatt-hours of electricity, comparable to the monthly consumption of over a thousand households. Much of this energy is consumed in data centers, which rely heavily on nonrenewable energy sources, contributing to global carbon emissions. Estimates indicate that AI-related emissions are comparable to those of entire industrial sectors, highlighting the urgency of transitioning to more energy-efficient models and renewable-powered infrastructure.

Beyond energy consumption, AI systems also impact the environment through hardware manufacturing and resource utilization. Training and inference workloads depend on specialized processors, such as GPUs and TPUs, which require rare earth metals whose extraction and processing generate significant pollution. Additionally, the growing demand for AI applications accelerates electronic waste production, as hardware rapidly becomes obsolete. Even small-scale AI systems, such as those deployed on edge devices, contribute to sustainability challenges, necessitating careful consideration of their lifecycle impact.

This chapter examines the sustainability challenges associated with AI systems and explores emerging solutions to mitigate their environmental footprint. It discusses strategies for improving algorithmic efficiency, optimizing training infrastructure, and designing energy-efficient hardware. Additionally, it considers the role of renewable energy sources, regulatory frameworks, and industry best practices in promoting sustainable AI development. By addressing these challenges, the field can advance toward more ecologically responsible AI systems while maintaining technological progress.

Ethical Responsibility

Long-Term Viability

The long-term sustainability of AI is increasingly challenged by the exponential growth of computational demands required to train and deploy machine learning models. Over the past decade, AI systems have scaled at an unprecedented rate, with compute requirements increasing 350,000× from 2012 to 2019 (Schwartz et al. 2020). This trend shows no signs of slowing down, as advancements in deep learning continue to prioritize larger models with more parameters, larger training datasets, and higher computational complexity. However, maintaining this trajectory poses significant environmental challenges, particularly as the efficiency gains from hardware improvements fail to keep pace with the rising demands of AI workloads.

Historically, computational efficiency improved with advances in semiconductor technology. Moore’s Law, which predicted that the number of transistors on a chip would double approximately every two years, led to continuous improvements in processing power and energy efficiency. However, Moore’s Law is now reaching fundamental physical limits, making further transistor scaling increasingly difficult and costly. Dennard scaling, which once ensured that smaller transistors would operate at lower power levels, has also ended, leading to stagnation in energy efficiency improvements per transistor. As a result, while AI models continue to scale in size and capability, the hardware running these models is no longer improving at the same exponential rate. This growing divergence between computational demand and hardware efficiency creates an unsustainable trajectory in which AI consumes ever-increasing amounts of energy.

The training of complex AI systems like large deep learning models demands startlingly high levels of computing power with profound energy implications. Consider OpenAI’s state-of-the-art language model GPT-3 as a prime example. This system pushes the frontiers of text generation through algorithms trained on massive datasets, with training estimated to require 1,300 megawatt-hours (MWh) of electricity—roughly equivalent to the monthly energy consumption of 1,450 average U.S. households (Maslej et al. 2023). In recent years, these generative AI models have gained increasing popularity, leading to more models being trained with ever-growing parameter counts.
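The household comparison above can be sanity-checked with simple arithmetic. The following is a minimal sketch that uses only the two figures quoted in the text; the function name is illustrative and not taken from any cited source.

```python
# Figures quoted in the text above (Maslej et al. 2023).
TRAINING_ENERGY_MWH = 1_300   # estimated GPT-3 training energy
HOUSEHOLD_MONTHS = 1_450      # equivalent number of household-months


def implied_household_kwh_per_month(training_mwh: float, households: float) -> float:
    """Monthly consumption per household implied by the comparison."""
    return training_mwh * 1_000 / households  # 1 MWh = 1,000 kWh


per_household = implied_household_kwh_per_month(TRAINING_ENERGY_MWH, HOUSEHOLD_MONTHS)
print(f"Implied household usage: {per_household:.0f} kWh/month")  # about 897
```

The implied value of roughly 900 kWh per household per month shows the scale at which the two quoted figures are consistent with each other.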

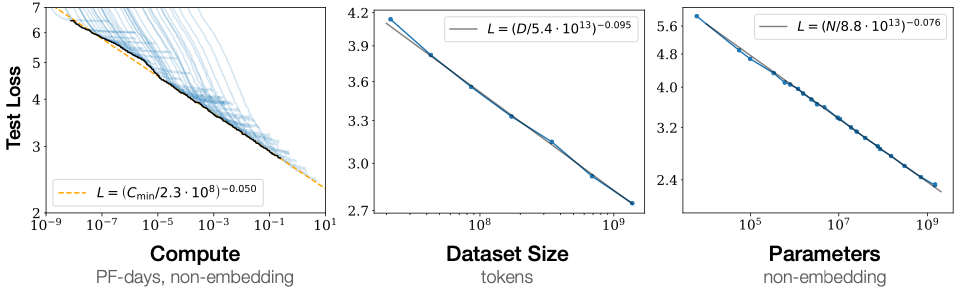

Research shows that increasing model size, dataset size, and compute used for training improves performance smoothly with no signs of saturation (Kaplan et al. 2020), as evidenced in Figure 1 where test loss decreases as each of these three factors increases. Beyond training, AI-powered applications such as large-scale recommender systems and generative models require continuous inference at scale, consuming significant energy even after training completes. As AI adoption grows across industries from finance to healthcare to entertainment, the cumulative energy burden of AI workloads continues to rise, raising concerns about the environmental impact of widespread deployment.

Beyond electricity consumption, the sustainability challenges of AI extend to hardware resource demands. High-performance computing (HPC) clusters and AI accelerators rely on specialized hardware, including GPUs, TPUs, and FPGAs, all of which require rare earth metals and complex manufacturing processes. The production of AI chips is energy-intensive, involving multiple fabrication steps that contribute to Scope 3 emissions, which account for the majority of the carbon footprint in semiconductor manufacturing. As model sizes continue to grow, the demand for AI hardware increases, further exacerbating the environmental impact of semiconductor production and disposal.

The long-term sustainability of AI requires a shift in how machine learning systems are designed, optimized, and deployed. As compute demands outpace efficiency improvements, addressing AI’s environmental impact will require rethinking system architecture, energy-aware computing, and lifecycle management. Without intervention, the unchecked growth of AI models will continue to place unsustainable pressures on energy grids, data centers, and natural resources, underscoring the need for a more systematic approach to sustainable AI development.

The environmental impact of AI is not just a technical issue but also an ethical and social one. As AI becomes more integrated into our lives and industries, its sustainability becomes increasingly critical.

Ethical Issues

The environmental impact of AI raises fundamental ethical questions regarding the responsibility of developers, organizations, and policymakers to mitigate its carbon footprint. As AI systems continue to scale, their energy consumption and resource demands have far-reaching implications, necessitating a proactive approach to sustainability. Developers and companies that build and deploy AI systems must consider not only performance and efficiency but also the broader environmental consequences of their design choices.

A key ethical challenge lies in balancing technological progress with ecological responsibility. The pursuit of increasingly large models often prioritizes accuracy and capability over energy efficiency, leading to substantial environmental costs. While optimizing for sustainability may introduce trade-offs, including increased development time or minor reductions in accuracy, it is an ethical imperative to integrate environmental considerations into AI system design. This requires shifting industry norms toward sustainable computing practices, such as energy-aware training techniques, low-power hardware designs, and carbon-conscious deployment strategies (Patterson et al. 2021).

Beyond sustainability, AI development also raises broader ethical concerns related to transparency, fairness, and accountability. Figure 2 illustrates the ethical challenges associated with AI development, linking different types of concerns, including inscrutable evidence, unfair outcomes, and traceability, to issues like opacity, bias, and automation bias. These concerns extend to sustainability, as the environmental trade-offs of AI development are often opaque and difficult to quantify. The lack of traceability in energy consumption and carbon emissions can lead to unjustified actions, where companies prioritize performance gains without fully understanding or disclosing the environmental costs.

Addressing these concerns also demands greater transparency and accountability from AI companies. Large technology firms operate extensive cloud infrastructures that power modern AI applications, yet their environmental impact is often opaque. Organizations must take active steps to measure, report, and reduce their carbon footprint across the entire AI lifecycle, from hardware manufacturing to model training and inference. Voluntary self-regulation is an important first step, but policy interventions and industry-wide standards may be necessary to ensure long-term sustainability. Reported metrics such as energy consumption, carbon emissions, and efficiency benchmarks could serve as mechanisms to hold organizations accountable.

Furthermore, ethical AI development must encourage open discourse on environmental trade-offs. Researchers should be empowered to advocate for sustainability within their institutions and organizations, ensuring that environmental concerns are factored into AI development priorities. The broader AI community has already begun addressing these issues, as exemplified by the open letter advocating a pause on large-scale AI experiments, which highlights concerns about unchecked expansion. By fostering a culture of transparency and ethical responsibility, the AI industry can work toward aligning technological advancement with ecological sustainability.

AI has the potential to reshape industries and societies, but its long-term viability depends on how responsibly it is developed. Ethical AI development is not only about preventing harm to individuals and communities but also about ensuring that AI-driven innovation does not come at the cost of environmental degradation. As stewards of these powerful technologies, developers and organizations have a profound duty to integrate sustainability into AI’s future trajectory.

Case Study: DeepMind’s Energy Efficiency

Google’s data centers form the backbone of services such as Search, Gmail, and YouTube, handling billions of queries daily. These data centers operate at massive scales, consuming vast amounts of electricity, particularly for cooling infrastructure that ensures optimal server performance. Improving the energy efficiency of data centers has long been a priority, but conventional engineering approaches faced diminishing returns due to the complexity of the cooling systems and the highly dynamic nature of environmental conditions. To address these challenges, Google collaborated with DeepMind to develop a machine learning-driven optimization system that could automate and enhance energy management at scale.

Building on more than a decade of efforts to optimize data center design, energy-efficient hardware, and renewable energy integration, DeepMind’s AI approach targeted one of the most energy-intensive aspects of data centers: cooling systems. Traditional cooling relies on manually set heuristics that account for factors such as server heat output, external weather conditions, and architectural constraints. However, these systems exhibit nonlinear interactions, meaning that simple rule-based optimizations often fail to capture the full complexity of their operations. The result was suboptimal cooling efficiency, leading to unnecessary energy waste.

DeepMind’s team trained a neural network model using Google’s historical sensor data, which included real-time temperature readings, power consumption levels, cooling pump activity, and other operational parameters. The model learned the intricate relationships between these factors and could dynamically predict the most efficient cooling configurations. Unlike traditional approaches, which relied on human engineers periodically adjusting system settings, the AI model continuously adapted in real time to changing environmental and workload conditions.

The resulting efficiency gains were substantial. When deployed in live data center environments, DeepMind’s AI-driven cooling system reduced cooling energy consumption by 40%, which translated into a 15% reduction in overall Power Usage Effectiveness (PUE) overhead. PUE is a key metric for data center energy efficiency that measures the ratio of total facility energy consumption to the energy used purely for computing tasks (Barroso, Hölzle, and Ranganathan 2019). Notably, these improvements were achieved without any additional hardware modifications, demonstrating the potential of software-driven optimizations to significantly reduce AI’s carbon footprint.
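To make the PUE definition concrete, the sketch below computes PUE before and after a 40% cut in cooling power. The facility loads are hypothetical values chosen purely for illustration and are not Google's measurements. One plausible way to read a PUE gain is as a reduction in overhead, meaning the portion of PUE above the ideal value of 1.0, and that is the reading assumed here.

```python
def pue(it_kw: float, cooling_kw: float, other_overhead_kw: float) -> float:
    """Power Usage Effectiveness: total facility power divided by IT power."""
    total_kw = it_kw + cooling_kw + other_overhead_kw
    return total_kw / it_kw


# HYPOTHETICAL facility loads in kW (illustrative only, not measured data).
IT_KW, COOLING_KW, OTHER_KW = 1_000.0, 45.0, 75.0

before = pue(IT_KW, COOLING_KW, OTHER_KW)
after = pue(IT_KW, COOLING_KW * (1 - 0.40), OTHER_KW)  # 40% less cooling power

# Overhead is the part of PUE above the ideal value of 1.0.
overhead_cut = ((before - 1) - (after - 1)) / (before - 1)
print(f"PUE {before:.3f} -> {after:.3f}, overhead reduced by {overhead_cut:.0%}")
```

With these assumed loads, PUE itself moves only slightly while the overhead shrinks by a much larger fraction, which is why it matters whether a reported gain refers to PUE or to PUE overhead.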

Beyond a single data center, DeepMind’s AI model provided a generalizable framework that could be adapted to different facility designs and climate conditions, offering a scalable solution for optimizing power consumption across global data center networks. This case study exemplifies how AI can be leveraged not just as a consumer of computational resources but as a tool for sustainability, driving substantial efficiency improvements in the infrastructure that supports machine learning itself.

The integration of data-driven decision-making, real-time adaptation, and scalable AI models demonstrates the growing role of intelligent resource management in sustainable AI system design. This breakthrough exemplifies how machine learning can be applied to optimize the very infrastructure that powers it, ensuring a more energy-efficient future for large-scale AI deployments.

AI Carbon Footprint

The carbon footprint of artificial intelligence is a critical aspect of its overall environmental impact. As AI adoption continues to expand, so does its energy consumption and associated greenhouse gas emissions. Training and deploying AI models require vast computational resources, often powered by energy-intensive data centers that contribute significantly to global carbon emissions. However, the carbon footprint of AI extends beyond electricity usage, encompassing hardware manufacturing, data storage, and end-user interactions—all of which contribute to emissions across an AI system’s lifecycle.

Quantifying the carbon impact of AI is complex, as it depends on multiple factors, including the size of the model, the duration of training, the hardware used, and the energy sources powering data centers. Training large-scale AI models, such as GPT-3, requires more than a thousand megawatt-hours (MWh) of electricity, equivalent to the energy consumption of entire communities. The energy required for inference, the phase during which trained models produce outputs, is also substantial, particularly for widely deployed AI services such as real-time translation, image generation, and personalized recommendations. Unlike traditional software, which has a relatively static energy footprint, AI models consume energy continuously, leading to an ongoing sustainability challenge.

Beyond direct energy use, the carbon footprint of AI must also account for indirect emissions from hardware production and supply chains. Manufacturing AI accelerators such as GPUs, TPUs, and custom chips involves energy-intensive fabrication processes that rely on rare earth metals and complex supply chains. The full life cycle emissions of AI systems, which encompass data centers, hardware manufacturing, and global AI deployments, must be considered to develop more sustainable AI practices.

Understanding AI’s carbon footprint requires breaking down where emissions come from, how they are measured, and what strategies can be employed to mitigate them. We explore the following:

- Carbon emissions and energy consumption trends in AI, which quantify AI’s energy demand and provide real-world comparisons.

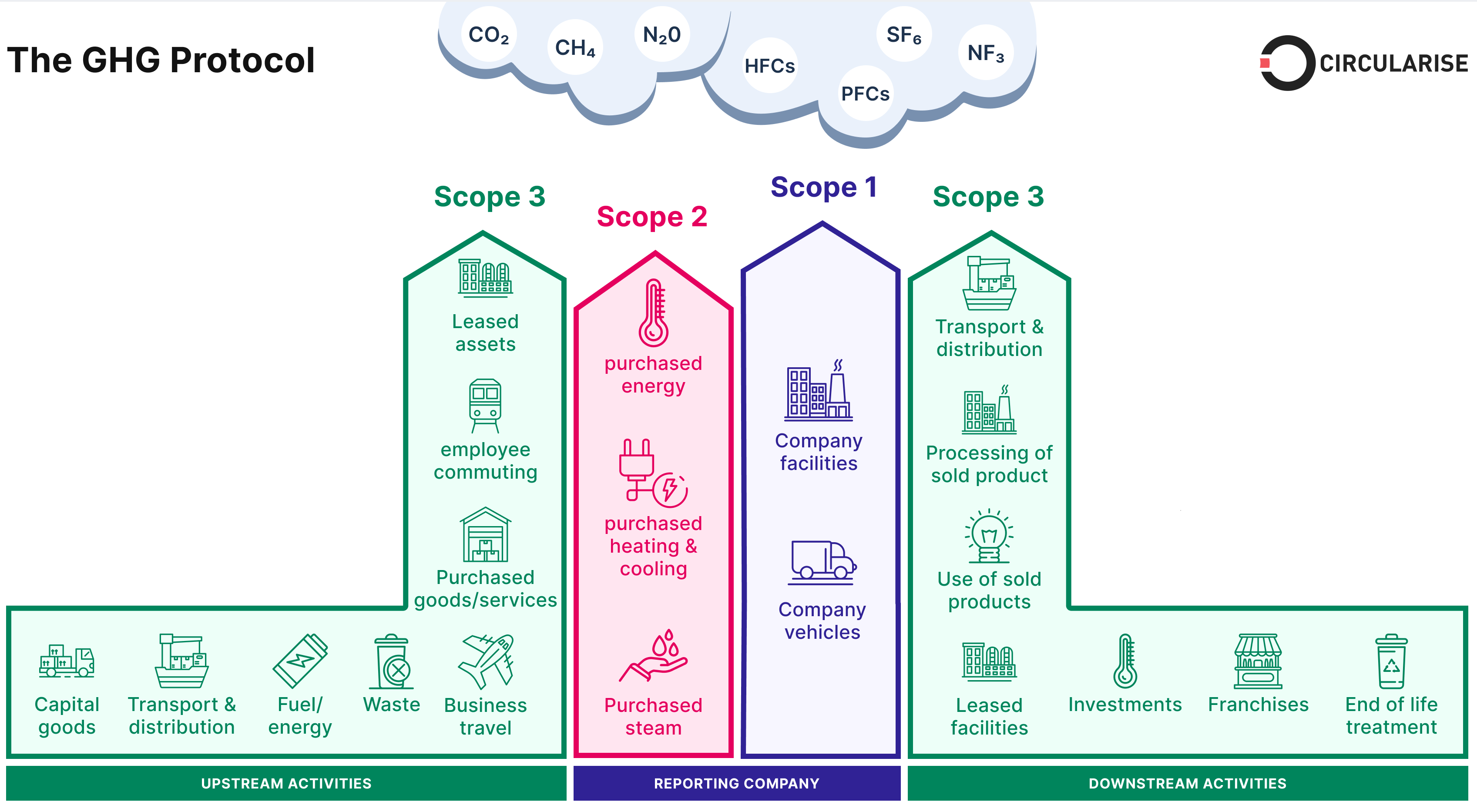

- Scopes of carbon emissions (Scope 1, 2, and 3)—differentiating between direct, indirect, and supply chain-related emissions.

- The energy cost of training vs. inference—analyzing how different phases of AI impact sustainability.

By dissecting these components, we can better assess the true environmental impact of AI systems and identify opportunities to reduce their footprint through more efficient design, energy-conscious deployment, and sustainable infrastructure choices.

Emissions & Consumption

Artificial intelligence systems require vast computational resources, making them one of the most energy-intensive workloads in modern computing. The energy consumed by AI systems extends beyond the training of large models to include ongoing inference workloads, data storage, and communication across distributed computing infrastructure. As AI adoption scales across industries, understanding its energy consumption patterns and carbon emissions is critical for designing more sustainable machine learning infrastructure.

Data centers play a central role in AI’s energy demands, consuming vast amounts of electricity to power compute servers, storage, and cooling systems. Without access to renewable energy, these facilities rely heavily on nonrenewable sources such as coal and natural gas, contributing significantly to global carbon emissions. Current estimates suggest that data centers produce up to 2% of total global CO₂ emissions—a figure that is closing in on the airline industry’s footprint (Liu et al. 2020). The energy burden of AI is expected to grow exponentially due to three key factors: increasing data center capacity, rising AI training workloads, and surging inference demands (Patterson, Gonzalez, Holzle, et al. 2022). Without intervention, these trends risk making AI’s environmental footprint unsustainably large (Thompson, Spanuth, and Matthews 2023).

Energy Demands in Data Centers

AI workloads are among the most compute-intensive operations in modern data centers. Companies such as Meta operate hyperscale data centers spanning multiple football fields in size, housing hundreds of thousands of AI-optimized servers. Training GPT-4, a large language model (LLM), reportedly required over 25,000 NVIDIA A100 GPUs running continuously for 90 to 100 days (Choi and Yoon 2024), consuming thousands of megawatt-hours (MWh) of electricity. These facilities rely on high-performance AI systems like NVIDIA DGX H100 units, each of which can draw up to 10.2 kW at peak power (Choquette 2023).
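A back-of-the-envelope estimate shows how the GPU count and training duration above translate into energy. This is a rough sketch under stated assumptions: every GPU is assumed to draw 400 W (the rated board power of an A100 SXM module) for the entire run, and cooling, CPUs, and networking are ignored unless a PUE factor is supplied. Actual consumption is not reported in the text.

```python
def training_energy_mwh(num_gpus: int, gpu_kw: float, days: float, pue: float = 1.0) -> float:
    """Energy = devices x power per device x hours x facility overhead (PUE)."""
    hours = days * 24
    return num_gpus * gpu_kw * hours * pue / 1_000  # kWh -> MWh


NUM_GPUS = 25_000  # GPU count quoted in the text
GPU_KW = 0.4       # ASSUMPTION: 400 W per GPU, sustained for the whole run

for days in (90, 100):
    mwh = training_energy_mwh(NUM_GPUS, GPU_KW, days)
    print(f"{days} days: {mwh:,.0f} MWh (GPUs only)")
```

Under these assumptions the GPUs alone account for roughly 21,600 to 24,000 MWh, so the estimate is sensitive mainly to the assumed per-GPU power and utilization.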

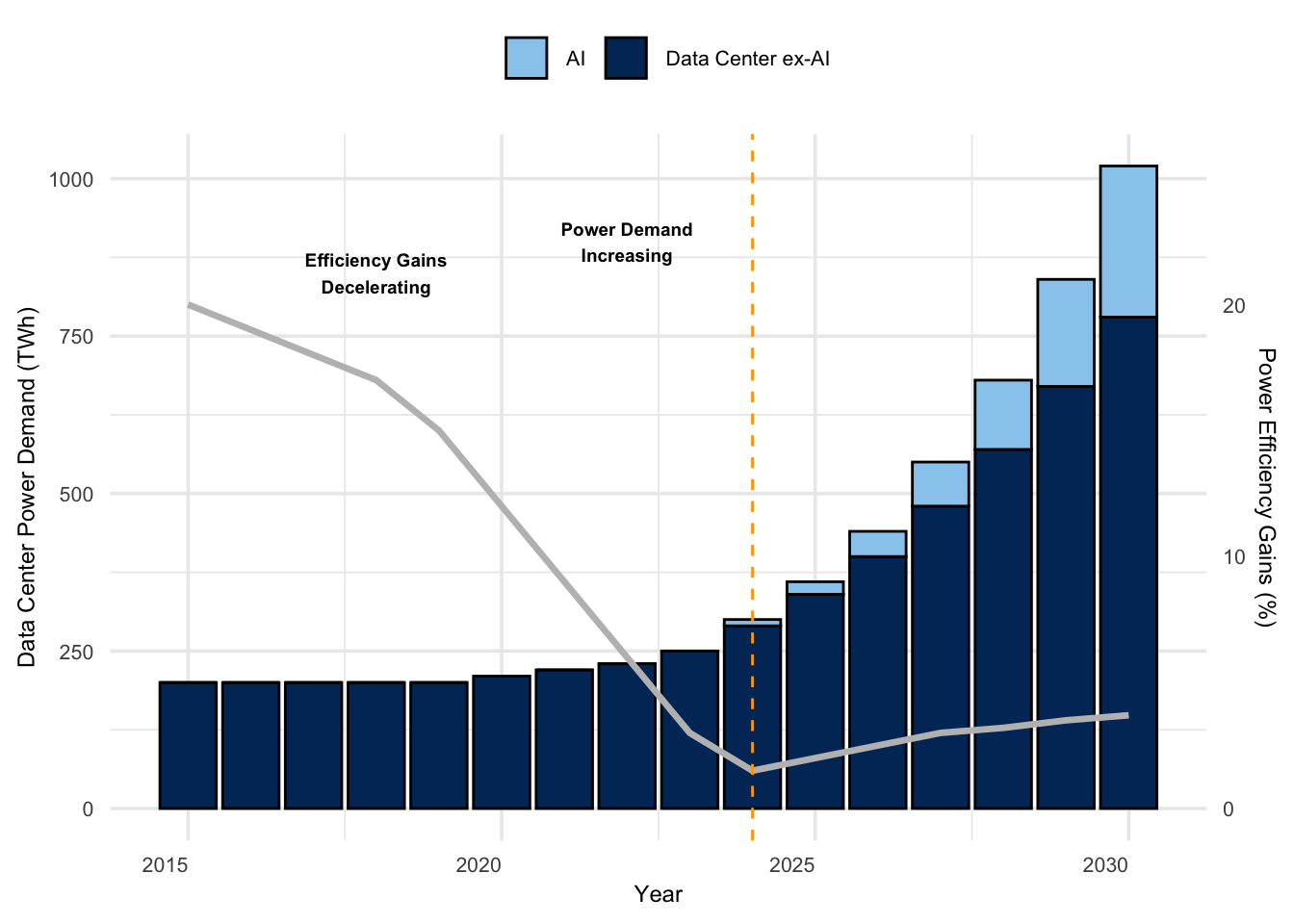

AI’s rapid adoption is driving a significant increase in data center energy consumption. As shown in Figure 3, the energy demand of AI workloads is projected to substantially increase total data center energy use, especially after 2024. While efficiency gains have historically offset rising power needs, these gains are decelerating, amplifying AI’s environmental impact.

Cooling is another major factor in AI’s energy footprint. Large-scale AI training and inference workloads generate massive amounts of heat, necessitating advanced cooling solutions to prevent hardware failures. Estimates indicate that 30-40% of a data center’s total electricity usage goes into cooling alone (Dayarathna, Wen, and Fan 2016). Companies have begun adopting alternative cooling methods to reduce this demand. For example, Microsoft’s data center in Ireland leverages a nearby fjord, using over half a million gallons of seawater daily to dissipate heat. However, as AI models scale in complexity, cooling demands continue to grow, making sustainable AI infrastructure design a pressing challenge.

AI vs. Other Industries

The environmental impact of AI workloads has emerged as a significant concern, with carbon emissions approaching levels comparable to established carbon-intensive sectors. Research demonstrates that training a single large AI model generates carbon emissions equivalent to multiple passenger vehicles over their complete lifecycle (Strubell, Ganesh, and McCallum 2019b). To contextualize AI’s environmental footprint, Figure 4 compares the carbon emissions of large-scale machine learning tasks to transcontinental flights, illustrating the substantial energy demands of training and inference workloads. It shows a comparison from lowest to highest carbon footprints, starting with a roundtrip flight between NY and SF, human life average per year, American life average per year, US car including fuel over a lifetime, and a Transformer model with neural architecture search, which has the highest footprint. These comparisons underscore the need for more sustainable AI practices to mitigate the industry’s carbon impact.

The training phase of large natural language processing models produces carbon dioxide emissions comparable to hundreds of transcontinental flights. When examining the broader industry impact, AI’s aggregate computational carbon footprint is approaching parity with the commercial aviation sector. Furthermore, as AI applications scale to serve billions of users globally, the cumulative emissions from continuous inference operations may ultimately exceed those generated during training.

Figure 5 provides a detailed analysis of carbon emissions across various large-scale machine learning tasks at Meta, illustrating the substantial environmental impact of different AI applications and architectures. This quantitative assessment of AI’s carbon footprint underscores the pressing need to develop more sustainable approaches to machine learning development and deployment. Understanding these environmental costs is crucial for implementing effective mitigation strategies and advancing the field responsibly.

Updated Analysis

AI’s impact also extends beyond energy consumption during operation. The full lifecycle emissions of AI include hardware manufacturing, supply chain emissions, and end-of-life disposal, making AI a significant contributor to environmental degradation. AI models not only require electricity for training and inference, but they also depend on a complex infrastructure of semiconductor fabrication, rare earth metal mining [1], and electronic waste disposal. The next section breaks down AI’s carbon emissions into Scope 1 (direct emissions), Scope 2 (indirect emissions from electricity), and Scope 3 (supply chain and lifecycle emissions) to provide a more detailed view of its environmental impact.

[1] The production of AI chips requires rare earth elements such as neodymium and dysprosium, the extraction of which has significant environmental consequences.

Carbon Emission Scopes

AI is expected to see an annual growth rate of 37.3% between 2023 and 2030. Compounded over those seven years, that rate by itself corresponds to roughly a ninefold increase. Yet operational computing demand could grow far faster, multiplying annual AI energy needs by up to 1,000 times by 2030. So, while model optimization tackles one facet, responsible innovation must also consider total lifecycle costs at global deployment scales that were unfathomable just years ago but now pose infrastructure and sustainability challenges.
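The compounding arithmetic behind growth projections like these can be checked directly. The sketch below computes what a 37.3% annual rate compounds to over 2023 to 2030, and what annual rate a 1,000-fold increase over the same period would require. The function names are illustrative.

```python
def growth_factor(annual_rate: float, years: int) -> float:
    """Total multiplier after compounding annual_rate for the given years."""
    return (1 + annual_rate) ** years


def required_annual_rate(target_factor: float, years: int) -> float:
    """Annual rate needed to reach target_factor after the given years."""
    return target_factor ** (1 / years) - 1


YEARS = 2030 - 2023  # seven compounding periods

print(f"37.3%/yr over {YEARS} years: {growth_factor(0.373, YEARS):.1f}x")
print(f"Rate needed for 1,000x: {required_annual_rate(1_000, YEARS):.0%} per year")
```

A 37.3% annual rate compounds to about 9.2 times over seven years, while a 1,000-fold increase would require growth of roughly 168% per year, so the two figures describe very different trajectories.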

Scope 1

Scope 1 emissions refer to direct greenhouse gas emissions produced by AI data centers and computing facilities. These emissions result primarily from on-site power generation, including backup diesel generators used to ensure reliability in large cloud environments, as well as facility cooling systems. Although many AI data centers predominantly rely on grid electricity, those with their own power plants or fossil-fuel-dependent backup systems contribute significantly to direct emissions, especially in regions where renewable energy sources are less prevalent (Masanet et al. 2020a).

Scope 2

Scope 2 emissions encompass indirect emissions from electricity purchased to power AI infrastructure. The majority of AI’s operational energy consumption falls under Scope 2, as cloud providers and enterprise computing facilities require massive electrical inputs for GPUs, TPUs, and high-density servers. The carbon intensity associated with Scope 2 emissions varies geographically based on regional energy mixes. Regions dominated by coal and natural gas electricity generation create significantly higher AI-related emissions compared to regions utilizing renewable sources such as wind, hydro, or solar. This geographic variability motivates companies to strategically position data centers in areas with cleaner energy sources and adopt carbon-aware scheduling strategies to reduce emissions (Alvim et al. 2022).
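The geographic dependence of Scope 2 emissions follows from a simple product: energy consumed times the carbon intensity of the local grid. The sketch below illustrates this and a naive form of carbon-aware placement. The region names, intensity values, and job size are hypothetical placeholders, not measured data.

```python
# HYPOTHETICAL grid carbon intensities in kg CO2e per kWh (illustrative only).
GRID_INTENSITY = {"coal_heavy": 0.80, "mixed": 0.40, "hydro_heavy": 0.05}


def scope2_emissions_kg(energy_kwh: float, intensity_kg_per_kwh: float) -> float:
    """Scope 2 emissions = purchased electricity x grid carbon intensity."""
    return energy_kwh * intensity_kg_per_kwh


def cleanest_region(intensities: dict[str, float]) -> str:
    """Pick the region whose grid has the lowest carbon intensity."""
    return min(intensities, key=intensities.get)


JOB_KWH = 10_000  # HYPOTHETICAL energy for one training job

for region, intensity in GRID_INTENSITY.items():
    print(f"{region}: {scope2_emissions_kg(JOB_KWH, intensity):,.0f} kg CO2e")
print("Schedule in:", cleanest_region(GRID_INTENSITY))
```

A production scheduler would also need to weigh latency, data residency, and time-varying intensity, none of which are modeled in this sketch.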

Scope 3

Scope 3 emissions constitute the largest and most complex category, capturing indirect emissions across the entire AI supply chain and lifecycle. These emissions originate from manufacturing, transportation, and disposal of AI hardware, particularly semiconductors and memory modules. Semiconductor manufacturing is particularly energy-intensive, involving complex processes such as chemical etching, rare-earth metal extraction, and extreme ultraviolet (EUV) lithography, all of which produce substantial carbon outputs. Indeed, manufacturing a single high-performance AI accelerator can generate emissions equivalent to several years of operational energy use (Gupta, Kim, et al. 2022).

Beyond manufacturing, Scope 3 emissions include the downstream impact of AI once deployed. AI services such as search engines, social media platforms, and cloud-based recommendation systems operate at enormous scale, requiring continuous inference across millions or even billions of user interactions. The cumulative electricity demand of inference workloads can ultimately surpass the energy used for training, further amplifying AI’s carbon impact. End-user devices, including smartphones, IoT devices, and edge computing platforms, also contribute to Scope 3 emissions, as their AI-enabled functionality depends on sustained computation. Companies such as Meta and Google report that Scope 3 emissions from AI-powered services make up the largest share of their total environmental footprint, due to the sheer scale at which AI operates.

The data centers behind these emissions provide the infrastructure for training complex neural networks on vast datasets. For instance, based on leaked information, OpenAI’s language model GPT-4 was trained in Azure data centers housing over 25,000 NVIDIA A100 GPUs, used continuously for 90 to 100 days.

The GHG Protocol framework, illustrated in Figure 6, provides a structured way to visualize the sources of AI-related carbon emissions. Scope 1 emissions arise from direct company operations, such as data center power generation and company-owned infrastructure. Scope 2 covers electricity purchased from the grid, the primary source of emissions for cloud computing workloads. Scope 3 extends beyond an organization’s direct control, including emissions from hardware manufacturing, transportation, and even the end-user energy consumption of AI-powered services. Understanding this breakdown allows for more targeted sustainability strategies, ensuring that efforts to reduce AI’s environmental impact are not solely focused on energy efficiency but also address the broader supply chain and lifecycle emissions that contribute significantly to the industry’s carbon footprint.
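The three-scope breakdown can be represented as a small emissions inventory in which each source is tagged with its scope and totals are aggregated per scope. This is a minimal sketch. The source names follow the categories described above, while the tonnage values are hypothetical placeholders rather than reported figures.

```python
from collections import defaultdict
from enum import Enum


class Scope(Enum):
    DIRECT = 1       # on-site generation, backup generators
    PURCHASED = 2    # electricity bought from the grid
    VALUE_CHAIN = 3  # manufacturing, transport, end-user energy


# HYPOTHETICAL inventory in tonnes CO2e (placeholder values).
INVENTORY = [
    ("backup diesel generators", Scope.DIRECT, 50.0),
    ("grid electricity for training", Scope.PURCHASED, 400.0),
    ("accelerator manufacturing", Scope.VALUE_CHAIN, 600.0),
    ("hardware transportation", Scope.VALUE_CHAIN, 30.0),
]


def totals_by_scope(inventory):
    """Sum emissions per scope across all listed sources."""
    totals = defaultdict(float)
    for _source, scope, tonnes in inventory:
        totals[scope] += tonnes
    return dict(totals)


for scope, tonnes in totals_by_scope(INVENTORY).items():
    print(f"Scope {scope.value}: {tonnes:.0f} t CO2e")
```

Tagging each source explicitly makes it harder for supply chain emissions to be left out when only electricity use is tracked.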

Training vs. Inference Impact

The energy consumption of AI systems is often closely associated with the training phase, where substantial computational resources are utilized to develop large-scale machine learning models. However, while training demands significant power, it represents a one-time cost per model version. In contrast, inference, which applies trained models to new data, runs continuously at massive scale and often becomes the dominant contributor to energy consumption over time (Patterson et al. 2021). As AI-powered services such as real-time translation, recommender systems, and generative AI applications expand globally, inference workloads increasingly drive AI’s overall carbon footprint.

Training Energy Demands

Training state-of-the-art AI models demands enormous computational resources. For example, GPT-4 was reportedly trained using over 25,000 NVIDIA A100 GPUs operating continuously for approximately three months within cloud-based data centers (Choi and Yoon 2024). OpenAI’s dedicated supercomputer infrastructure, built specifically for large-scale AI training, contains 285,000 CPU cores, 10,000 GPUs, and network bandwidth exceeding 400 gigabits per second per server, illustrating the vast scale and associated energy consumption of AI training infrastructures (Patterson et al. 2021).

High-performance AI accelerators, such as NVIDIA DGX H100 systems, are specifically designed for these training workloads. Each DGX H100 unit can draw up to 10.2 kW at peak load, with clusters often consisting of thousands of nodes running continuously (Choquette 2023). The intensive computational loads result in significant heat dissipation, necessitating substantial cooling infrastructure. Cooling alone can account for 30-40% of total data center energy consumption (Dayarathna, Wen, and Fan 2016).

While significant, these energy costs occur once per trained model. The primary sustainability challenge emerges during model deployment, where inference workloads continuously serve millions or billions of users.

Inference Energy Costs

Inference workloads execute every time an AI model responds to queries, classifies images, or makes predictions. Unlike training, inference scales dynamically and continuously across applications such as search engines, recommendation systems, and generative AI models. Although each individual inference request consumes far less energy compared to training, the cumulative energy usage from billions of daily AI interactions quickly surpasses training-related consumption (Patterson et al. 2021).
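The point at which cumulative inference energy overtakes training energy can be estimated with a simple break-even calculation. In the sketch below, the training figure is the GPT-3 estimate quoted earlier in the chapter, while the query volume and per-query energy are assumptions chosen only to illustrate the arithmetic.

```python
def breakeven_days(training_mwh: float, queries_per_day: float, wh_per_query: float) -> float:
    """Days of serving after which inference energy equals training energy."""
    daily_inference_mwh = queries_per_day * wh_per_query / 1_000_000  # Wh -> MWh
    return training_mwh / daily_inference_mwh


TRAINING_MWH = 1_300          # GPT-3 training estimate quoted earlier
QUERIES_PER_DAY = 10_000_000  # ASSUMPTION: daily request volume
WH_PER_QUERY = 1.0            # ASSUMPTION: energy per request in watt-hours

days = breakeven_days(TRAINING_MWH, QUERIES_PER_DAY, WH_PER_QUERY)
print(f"Inference matches training energy after {days:.0f} days")
```

Under these assumed values the break-even arrives after about 130 days. The break-even time scales inversely with traffic, so ten times the query volume reaches it in a tenth of the time.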

For example, AI-driven search engines handle billions of queries per day, recommendation systems provide personalized content continuously, and generative AI services such as ChatGPT or DALL-E have substantial per-query computational costs. The inference energy footprint is especially pronounced in transformer-based models due to high memory and computational bandwidth requirements.

As shown in Figure 7, the market for inference workloads in data centers is projected to grow significantly from $4-5 billion in 2017 to $9-10 billion by 2025, more than doubling in size. Similarly, edge inference workloads are expected to increase from less than $0.1 billion to $4-4.5 billion in the same period. This growth substantially outpaces the expansion of training workloads in both environments, highlighting how the economic footprint of inference is rapidly outgrowing that of training operations.

Unlike traditional software applications with fixed energy footprints, inference workloads dynamically scale with user demand. AI services like Alexa, Siri, and Google Assistant rely on continuous cloud-based inference, processing millions of voice queries per minute, necessitating uninterrupted operation of energy-intensive data center infrastructure.

Edge AI Impact

Inference does not always happen in large data centers—edge AI is emerging as a viable alternative to reduce cloud dependency. Instead of routing every AI request to centralized cloud servers, some AI models can be deployed directly on user devices or at edge computing nodes. This approach reduces data transmission energy costs and lowers the dependency on high-power cloud inference.

However, running inference at the edge does not eliminate energy concerns, especially when AI is deployed at scale. Autonomous vehicles, for instance, require millisecond-latency AI inference, which makes cloud processing impractical. Instead, vehicles are now being equipped with onboard AI accelerators that function as “data centers on wheels” (Sudhakar, Sze, and Karaman 2023). These embedded computing systems process real-time sensor data at a scale comparable to small data centers, consuming significant power even without relying on cloud inference.

Similarly, consumer devices such as smartphones, wearables, and IoT sensors individually consume relatively little power but collectively contribute significantly to global energy use due to their sheer numbers. Therefore, the efficiency benefits of edge computing must be balanced against the extensive scale of device deployment.
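The aggregation effect can be made concrete with a small calculation. All inputs below (fleet size, average power drawn by on-device AI workloads, and daily active hours) are hypothetical values used only to illustrate how small per-device draws add up.

```python
def fleet_annual_gwh(num_devices: float, avg_watts: float, hours_per_day: float) -> float:
    """Annual energy of a device fleet in GWh, assuming uniform usage across devices."""
    annual_wh = num_devices * avg_watts * hours_per_day * 365
    return annual_wh / 1e9  # 1 GWh = 1e9 Wh


if __name__ == "__main__":
    # Hypothetical fleet: one billion devices, 0.5 W for AI tasks, 2 hours per day.
    print(f"{fleet_annual_gwh(1_000_000_000, 0.5, 2.0):.0f} GWh per year")
```

Half a watt per device is negligible in isolation, yet under these assumptions the fleet consumes about 365 GWh per year.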

Beyond Carbon

While reducing AI’s carbon emissions is critical, the environmental impact extends far beyond energy consumption. The manufacturing of AI hardware involves significant resource extraction, hazardous chemical usage, and water consumption that often receive less attention despite their ecological significance.

Modern semiconductor fabrication plants (fabs) that produce AI chips require millions of gallons of water daily and use over 250 hazardous substances in their processes (Mills and Le Hunte 1997). In regions already facing water stress, such as Taiwan, Arizona, and Singapore, this intensive water usage threatens local ecosystems and communities.

The industry also relies heavily on scarce materials like gallium, indium, arsenic, and helium, which are essential for AI accelerators and high-speed communication chips (Chen 2006; Davies 2011). These materials face both geopolitical supply risks and depletion concerns.

We will explore these critical but often overlooked aspects of AI’s environmental impact, including water consumption, hazardous waste production, rare material scarcity, and biodiversity disruption. Understanding these broader ecological impacts is essential for developing truly sustainable AI infrastructure.

Water Usage

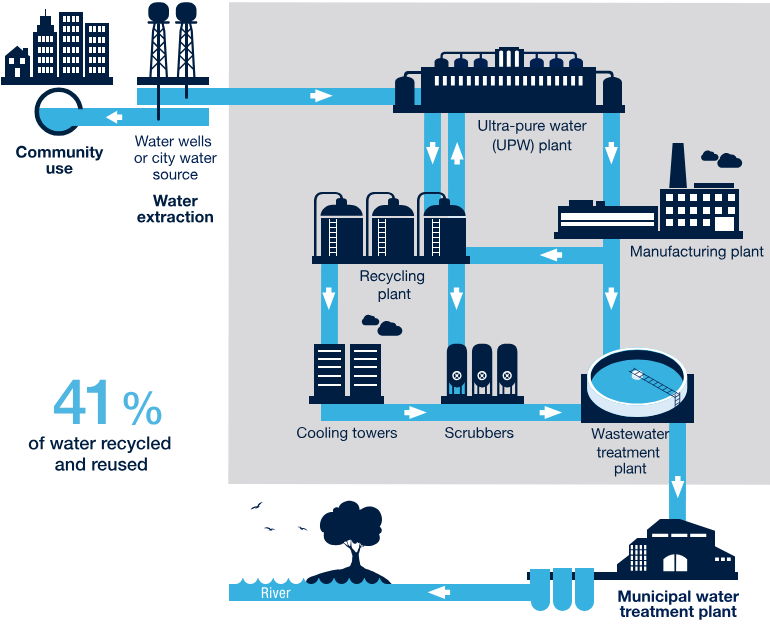

Semiconductor fabrication is an exceptionally water-intensive process, requiring vast quantities of ultrapure water 2 for cleaning, cooling, and chemical processing. The scale of water consumption in modern fabs is comparable to that of entire urban populations. For example, TSMC’s latest fab in Arizona is projected to consume 8.9 million gallons of water per day, accounting for nearly 3% of the city’s total water production. This demand places significant strain on local water resources, particularly in water-scarce regions such as Taiwan, Arizona, and Singapore, where semiconductor manufacturing is concentrated. Semiconductor companies have recognized this challenge and are actively investing in recycling technologies and more efficient water management practices. STMicroelectronics, for example, recycles and reuses approximately 41% of its water, significantly reducing its environmental footprint (see Figure 8 showing the typical semiconductor fab water cycle).

2 Ultrapure water (UPW): Water that has been purified to stringent standards, typically containing less than 1 part per billion of impurities. UPW is essential for semiconductor fabrication, as even trace contaminants can impair chip performance and yield.

The primary use of ultrapure water in semiconductor fabrication is for flushing contaminants from wafers at various production stages. Water also serves as a coolant and carrier fluid in thermal oxidation, chemical deposition, and planarization processes. A single 300mm silicon wafer requires over 8,300 liters of water, with more than two-thirds of this being ultrapure water (Cope 2009). During peak summer months, the cumulative daily water consumption of major fabs rivals that of cities with populations exceeding half a million people.
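To put the per-wafer and per-fab figures on a common scale, the sketch below converts the projected 8.9 million gallons per day into liters and expresses it in wafer-equivalents at 8,300 liters per wafer. This is purely a unit-conversion exercise: it assumes US gallons, treats 8,300 liters as a lower bound, and ignores recycling and non-wafer water uses, so it should not be read as a fab's actual wafer output.

```python
LITERS_PER_US_GALLON = 3.785411784  # exact definition of the US liquid gallon


def gallons_to_liters(gallons: float) -> float:
    """Convert US gallons to liters."""
    return gallons * LITERS_PER_US_GALLON


def wafer_equivalents(gallons_per_day: float, liters_per_wafer: float = 8300.0) -> float:
    """Express a daily water volume as the number of wafers it could supply."""
    return gallons_to_liters(gallons_per_day) / liters_per_wafer


if __name__ == "__main__":
    daily_liters = gallons_to_liters(8.9e6)
    print(f"{daily_liters / 1e6:.1f} million liters per day")
    print(f"about {wafer_equivalents(8.9e6):,.0f} wafer-equivalents per day")
    # At least two-thirds of the per-wafer water is ultrapure water.
    print(f"ultrapure water per wafer: at least {8300 * 2 / 3:,.0f} liters")
```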

3 Saltwater Intrusion: The process by which seawater enters freshwater aquifers due to groundwater overuse, leading to water quality degradation.

The impact of this massive water usage extends beyond consumption. Excessive water withdrawal from local aquifers lowers groundwater levels, leading to issues such as land subsidence and saltwater intrusion3. In Hsinchu, Taiwan, one of the world’s largest semiconductor hubs, extensive water extraction by fabs has led to falling water tables and encroaching seawater contamination, affecting both agriculture and drinking water supplies.

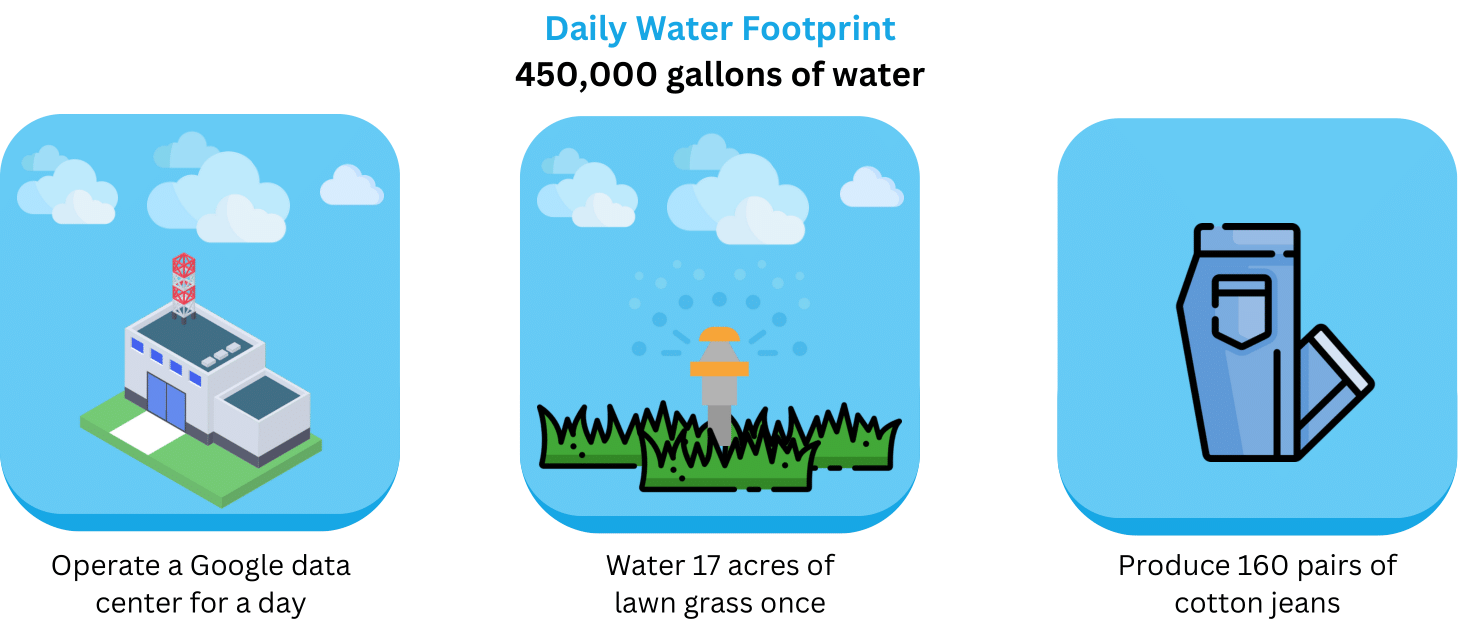

Figure 9 contextualizes the daily water footprint of data centers compared to other industrial uses, illustrating the immense water demand of high-tech infrastructure.

While some semiconductor manufacturers implement water recycling systems, the effectiveness of these measures varies. Intel reports that 97% of its direct water consumption is attributed to fabrication processes (Cooper et al. 2011), and while water reuse is increasing, the sheer scale of water withdrawals remains a critical sustainability challenge.

Beyond depletion, water discharge from semiconductor fabs introduces contamination risks if not properly managed. Wastewater from fabrication contains metals, acids, and chemical residues that must be thoroughly treated before release. Although modern fabs employ advanced purification systems, the extraction of contaminants still generates hazardous byproducts, which, if not carefully disposed of, pose risks to local ecosystems.

The growing demand for semiconductor manufacturing, driven by AI acceleration and computing infrastructure expansion, makes water management a crucial factor in sustainable AI development. Ensuring the long-term viability of semiconductor production requires not only reducing direct water consumption but also enhancing wastewater treatment and developing alternative cooling technologies that minimize reliance on fresh water sources.

Hazardous Chemicals

Semiconductor fabrication is heavily reliant on highly hazardous chemicals, which play an essential role in processes such as etching, doping, and wafer cleaning. The manufacturing of AI hardware, including GPUs, TPUs, and other specialized accelerators, requires the use of strong acids, volatile solvents, and toxic gases, all of which pose significant health and environmental risks if not properly managed. The scale of chemical usage in fabs is immense, with thousands of metric tons of hazardous substances consumed annually (Kim et al. 2018).

Among the most critical chemical categories used in fabrication are strong acids, which facilitate wafer etching and oxide removal. Hydrofluoric acid, sulfuric acid, nitric acid, and hydrochloric acid are commonly employed in the cleaning and patterning stages of chip production. While effective for these processes, these acids are highly corrosive and toxic, capable of causing severe chemical burns and respiratory damage if mishandled. Large semiconductor fabs require specialized containment, filtration, and neutralization systems to prevent accidental exposure and environmental contamination.

Solvents are another critical component in chip manufacturing, primarily used for dissolving photoresists and cleaning wafers. Key solvents include xylene, methanol, and methyl isobutyl ketone (MIBK), which, despite their utility, present air pollution and worker safety risks. These solvents are volatile organic compounds (VOCs)4 that can evaporate into the atmosphere, contributing to indoor and outdoor air pollution. If not properly contained, VOC exposure can result in neurological damage, respiratory issues, and long-term health effects for workers in semiconductor fabs.

4 Volatile organic compounds (VOCs): Organic chemicals that easily evaporate into the air, posing health risks and contributing to air pollution. VOCs are commonly used in semiconductor manufacturing for cleaning, etching, and photoresist removal.

Toxic gases are among the most dangerous substances used in AI chip manufacturing. Gases such as arsine (AsH₃), phosphine (PH₃), diborane (B₂H₆), and germane (GeH₄) are used in doping and chemical vapor deposition processes, essential for fine-tuning semiconductor properties. These gases are highly toxic and even fatal at low concentrations, requiring extensive handling precautions, gas scrubbers, and emergency response protocols. Any leaks or accidental releases in fabs can lead to severe health hazards for workers and surrounding communities.

While modern fabs employ strict safety controls, protective equipment, and chemical treatment systems, incidents still occur, leading to chemical spills, gas leaks, and contamination risks. The challenge of effectively managing hazardous chemicals is heightened by the ever-increasing complexity of AI accelerators, which require more advanced fabrication techniques and new chemical formulations.

Beyond direct safety concerns, the long-term environmental impact of hazardous chemical use remains a major sustainability issue. Semiconductor fabs generate large volumes of chemical waste, which, if improperly handled, can contaminate groundwater, soil, and local ecosystems. Regulations in many countries require fabs to neutralize and treat waste before disposal, but compliance and enforcement vary globally, leading to differing levels of environmental protection.

To mitigate these risks, fabs must continue advancing green chemistry initiatives, exploring alternative etchants, solvents, and gas formulations that reduce toxicity while maintaining fabrication efficiency. Additionally, process optimizations that minimize chemical waste, improve containment, and enhance recycling efforts will be essential to reducing the environmental footprint of AI hardware production.

Resource Depletion

While silicon is abundant and readily available, the fabrication of AI accelerators, GPUs, and specialized AI chips depends on scarce and geopolitically sensitive materials that are far more difficult to source. AI hardware manufacturing requires a range of rare metals, noble gases, and semiconductor compounds, many of which face supply constraints, geopolitical risks, and environmental extraction costs. As AI models become larger and more computationally intensive, the demand for these materials continues to rise, raising concerns about long-term availability and sustainability.

Although silicon forms the foundation of semiconductor devices, high-performance AI chips depend on rare elements such as gallium, indium, and arsenic, which are essential for high-speed, low-power electronic components (Chen 2006). Gallium and indium, for example, are widely used in compound semiconductors, particularly for 5G communications, optoelectronics, and AI accelerators. The United States Geological Survey (USGS) has classified indium as a critical material, with global supplies expected to last fewer than 15 years at the current rate of consumption (Davies 2011).

5 Extreme ultraviolet (EUV) lithography: A cutting-edge semiconductor manufacturing technique that uses EUV light to etch nanoscale features on silicon wafers. EUV lithography is essential for producing advanced AI chips with smaller transistors and higher performance.

Another major concern is helium, a noble gas critical for semiconductor cooling, plasma etching, and EUV lithography5 used in next-generation chip production. Helium is unique in that once released into the atmosphere, it escapes Earth’s gravity and is lost forever, making it a non-renewable resource (Davies 2011). The semiconductor industry is one of the largest consumers of helium, and supply shortages have already led to price spikes and disruptions in fabrication processes. As AI hardware manufacturing scales, the demand for helium will continue to grow, necessitating more sustainable extraction and recycling practices.

Beyond raw material availability, the geopolitical control of rare earth elements poses additional challenges. China currently controls over 90% of the world’s rare earth element (REE) refining capacity, including materials essential for AI chips, such as neodymium (for high-performance magnets in AI accelerators) and yttrium (for high-temperature superconductors) (Jha 2014). This concentration creates supply chain vulnerabilities, as trade restrictions or geopolitical tensions could severely impact AI hardware production.

Table 1 highlights the key materials essential for AI semiconductor manufacturing, their applications, and supply concerns.

| Material | Application in AI Semiconductor Manufacturing | Supply Concerns |
|---|---|---|
| Silicon (Si) | Primary substrate for chips, wafers, transistors | Processing constraints; geopolitical risks |
| Gallium (Ga) | GaN-based power amplifiers, high-frequency components | Limited availability; byproduct of aluminum and zinc production |
| Germanium (Ge) | High-speed transistors, photodetectors, optical interconnects | Scarcity; geographically concentrated |
| Indium (In) | Indium tin oxide (ITO) layers, optoelectronic components | Limited global reserves; recycling dependency; geopolitical concentration |
| Tantalum (Ta) | Capacitors, stable integrated components | Conflict mineral; vulnerable supply chains |
| Rare Earth Elements (REEs) | Magnets, sensors, high-performance electronics | High geopolitical risks; environmental extraction concerns |
| Cobalt (Co) | Batteries for edge computing devices | Human rights concerns; geographical concentration (Congo) |
| Tungsten (W) | Interconnects, barriers, heat sinks | Limited production sites; geopolitical concerns |
| Copper (Cu) | Interconnects, conductive pathways, wiring, heat sinks | Limited high-purity sources; geopolitical dependencies; limited recycling capacity |
| Helium (He) | Semiconductor cooling, plasma etching, EUV lithography | Non-renewable; irretrievable atmospheric loss; limited extraction capacity |

The rapid growth of AI and semiconductor demand has accelerated the depletion of these critical resources, creating an urgent need for material recycling, substitution strategies, and more sustainable extraction methods. Some efforts are underway to explore alternative semiconductor materials that reduce dependency on rare elements, but these solutions require significant advancement before they become viable alternatives at scale.

The transition toward optical interconnects in AI infrastructure exemplifies how emerging technologies can compound these resource challenges. Modern AI systems like Google’s TPUs and high-performance interconnect solutions from companies like Mellanox6 increasingly rely on optical technologies to achieve the bandwidth requirements for distributed training and inference. While optical interconnects offer advantages including higher bandwidth (up to 400 Gbps in the case of TPUv4 (Jouppi et al. 2023)), reduced power consumption, and immunity to electromagnetic interference compared to copper-based connections, they introduce additional material dependencies, particularly for germanium used in high-speed photodetectors and optical components. As AI systems increasingly adopt optical interconnection to address data center bandwidth limitations, the demand for germanium-based components will intensify existing supply chain vulnerabilities, highlighting the need for comprehensive material sustainability planning in AI infrastructure development.

6 Mellanox Technologies: Founded in 1999 and acquired by NVIDIA in 2020, Mellanox pioneered high-performance InfiniBand and Ethernet networking solutions that enable high-speed optical interconnects in data centers and AI infrastructure.

Waste Generation

Semiconductor fabrication produces significant volumes of hazardous waste, including gaseous emissions, VOCs, chemical-laden wastewater, and solid toxic byproducts. The production of AI accelerators, GPUs, and other high-performance chips involves multiple stages of chemical processing, etching, and cleaning, each generating waste materials that must be carefully treated to prevent environmental contamination.

Fabs release gaseous waste from various processing steps, particularly chemical vapor deposition (CVD), plasma etching, and ion implantation. This includes toxic and corrosive gases such as arsine (AsH₃), phosphine (PH₃), and germane (GeH₄), which require advanced scrubber systems to neutralize before release into the atmosphere. If not properly filtered, these gases pose severe health hazards and contribute to air pollution and acid rain formation (Grossman 2007).

VOCs are another major waste category, emitted from photoresist processing, cleaning solvents, and lithographic coatings. Chemicals such as xylene, acetone, and methanol readily evaporate into the air, where they contribute to ground-level ozone formation and indoor air quality hazards for fab workers. In regions where semiconductor production is concentrated, such as Taiwan and South Korea, regulators have imposed strict VOC emission controls to mitigate their environmental impact.

Semiconductor fabs also generate large volumes of spent acids and metal-laden wastewater, requiring extensive treatment before discharge. Strong acids such as sulfuric acid, hydrofluoric acid, and nitric acid are used to etch silicon wafers, removing excess materials during fabrication. When these acids become contaminated with heavy metals, fluorides, and chemical residues, they must undergo neutralization and filtration before disposal. Improper handling of wastewater has led to groundwater contamination incidents, highlighting the importance of robust waste management systems (Prakash et al. 2023).

The solid waste produced in AI hardware manufacturing includes sludge, filter cakes, and chemical residues collected from fab exhaust and wastewater treatment systems. These byproducts often contain concentrated heavy metals, rare earth elements, and semiconductor process chemicals, making them hazardous for conventional landfill disposal. In some cases, fabs incinerate toxic waste, generating additional environmental concerns related to airborne pollutants and toxic ash disposal.

Beyond the waste generated during manufacturing, the end-of-life disposal of AI hardware presents another sustainability challenge. AI accelerators, GPUs, and server hardware have short refresh cycles, with data center equipment typically replaced every 3-5 years. This results in millions of tons of e-waste annually, much of which contains toxic heavy metals such as lead, cadmium, and mercury. Despite growing efforts to improve electronics recycling, current systems capture only 17.4% of global e-waste, leaving the majority to be discarded in landfills or improperly processed (Singh and Ogunseitan 2022).

Addressing the hazardous waste impact of AI requires advancements in both semiconductor manufacturing and e-waste recycling. Companies are exploring closed-loop recycling for rare metals, improved chemical treatment processes, and alternative materials with lower toxicity. However, as AI models continue to drive demand for higher-performance chips and larger-scale computing infrastructure, the industry’s ability to manage its waste footprint will be a key factor in achieving sustainable AI development.

Biodiversity Impact

The environmental footprint of AI hardware extends beyond carbon emissions, resource depletion, and hazardous waste. The construction and operation of semiconductor fabrication facilities (fabs), data centers, and supporting infrastructure directly impact natural ecosystems, contributing to habitat destruction, water stress, and pollution. These environmental changes have far-reaching consequences for wildlife, plant ecosystems, and aquatic biodiversity, highlighting the need for sustainable AI development that considers broader ecological effects.

Semiconductor fabs and data centers require large tracts of land, often leading to deforestation and destruction of natural habitats. These facilities are typically built in industrial parks or near urban centers, but as demand for AI hardware increases, fabs are expanding into previously undeveloped regions, encroaching on forests, wetlands, and agricultural land.

The physical expansion of AI infrastructure disrupts wildlife migration patterns, as roads, pipelines, transmission towers, and supply chains fragment natural landscapes. Species that rely on large, connected ecosystems for survival, including migratory birds, large mammals, and pollinators, face increased barriers to movement, reducing genetic diversity and population stability. In regions with dense semiconductor manufacturing, such as Taiwan and South Korea, habitat loss has already been linked to declining biodiversity in affected areas (Hsu et al. 2016).

The massive water consumption of semiconductor fabs poses serious risks to aquatic ecosystems, particularly in water-stressed regions. Excessive groundwater extraction for AI chip production can lower water tables, affecting local rivers, lakes, and wetlands. In Hsinchu, Taiwan, where fabs draw millions of gallons of water daily, seawater intrusion has been reported in local aquifers, altering water chemistry and making it unsuitable for native fish species and vegetation.

Beyond depletion, wastewater discharge from fabs introduces chemical contaminants into natural water systems. While many facilities implement advanced filtration and recycling, even trace amounts of heavy metals, fluorides, and solvents can accumulate in water bodies, bioaccumulating in fish and disrupting aquatic ecosystems. Additionally, thermal pollution from data centers, which release heated water back into lakes and rivers, can raise temperatures beyond tolerable levels for native species, affecting oxygen levels and reproductive cycles (LeRoy Poff, Brinson, and Day 2002).

Semiconductor fabs emit a variety of airborne pollutants, including VOCs, acid mists, and metal particulates, which can travel significant distances before settling in the environment. These emissions contribute to air pollution and acid deposition, which damage plant life, soil quality, and nearby agricultural systems.

Airborne chemical deposition has been linked to tree decline, reduced crop yields, and soil acidification, particularly near industrial semiconductor hubs. In areas with high VOC emissions, plant growth can be stunted by prolonged exposure, affecting ecosystem resilience and food chains. Additionally, accidental chemical spills or gas leaks from fabs pose severe risks to both local wildlife and human populations, requiring strict regulatory enforcement to minimize long-term ecological damage (Wald and Jones 1987).

The environmental consequences of AI hardware manufacturing demonstrate the urgent need for sustainable semiconductor production, including reduced land use, improved water recycling, and stricter emissions controls. Without intervention, the accelerating demand for AI chips could further strain global biodiversity, emphasizing the importance of balancing technological progress with ecological responsibility.

Semiconductor Life Cycle

The environmental footprint of AI systems extends beyond energy consumption during model training and inference. A comprehensive assessment of AI’s sustainability must consider the entire lifecycle—from the extraction of raw materials used in hardware manufacturing to the eventual disposal of obsolete computing infrastructure. Life Cycle Analysis (LCA) provides a systematic approach to quantifying the cumulative environmental impact of AI across its four key phases: design, manufacture, use, and disposal.

By applying LCA to AI systems, researchers and policymakers can pinpoint critical intervention points to reduce emissions, improve resource efficiency, and implement sustainable practices. This approach provides a holistic understanding of AI’s ecological costs, extending sustainability considerations beyond operational power consumption to include hardware supply chains and electronic waste management.

Figure 10 illustrates the four primary stages of an AI system’s lifecycle, each contributing to its total environmental footprint.

The following sections will analyze each lifecycle phase in detail, exploring its specific environmental impacts and sustainability challenges.
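One way to picture how an LCA aggregates the four phases and points to intervention targets is the minimal sketch below. The class name, its methods, and the emission values are all hypothetical placeholders, not results from any real assessment.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LifecycleFootprint:
    """CO2-equivalent emissions (kg) for the four lifecycle phases."""
    design: float
    manufacture: float
    use: float
    disposal: float

    def total(self) -> float:
        return self.design + self.manufacture + self.use + self.disposal

    def shares(self) -> dict[str, float]:
        """Fraction of the total footprint contributed by each phase."""
        total = self.total()
        if total <= 0:
            raise ValueError("total footprint must be positive")
        return {phase: value / total for phase, value in vars(self).items()}

    def dominant_phase(self) -> str:
        """Phase with the largest share, a natural first intervention point."""
        shares = self.shares()
        return max(shares, key=shares.get)


if __name__ == "__main__":
    # Placeholder values chosen only to exercise the calculation.
    footprint = LifecycleFootprint(design=10.0, manufacture=30.0, use=55.0, disposal=5.0)
    print(footprint.shares())
    print("largest contributor:", footprint.dominant_phase())
```

Keeping the phases as separate fields, rather than one aggregate number, is what allows the analysis to show where a reduction would have the most effect.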

Design Phase

The design phase of an AI system encompasses the research, development, and optimization of machine learning models before deployment. This stage involves iterating on model architectures, adjusting hyperparameters, and running training experiments to improve performance. These processes are computationally intensive, requiring extensive use of hardware resources and energy. The environmental cost of AI model design is often underestimated, but repeated training runs, algorithm refinements, and exploratory experimentation contribute significantly to the overall sustainability impact of AI systems.

Developing an AI model requires running multiple experiments to determine the most effective architecture. Neural architecture search (NAS), for instance, automates the process of selecting the best model structure by evaluating hundreds or even thousands of configurations, each requiring a separate training cycle. Similarly, hyperparameter tuning involves modifying parameters such as learning rates, batch sizes, and optimization strategies to enhance model performance, often through exhaustive search techniques. Pre-training and fine-tuning further add to the computational demands, as models undergo multiple training iterations on different datasets before deployment. The iterative nature of this process results in high energy consumption, with studies indicating that hyperparameter tuning alone can account for up to 80% of training-related emissions.
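The way exhaustive search multiplies training runs can be sketched as follows. The search space and the relative cost of each trial run are hypothetical; they are chosen so that the tuning share lands at the 80% upper figure mentioned above, purely to show how such a share can arise.

```python
from math import prod


def grid_size(search_space: dict[str, list]) -> int:
    """Number of training runs an exhaustive grid search requires."""
    return prod(len(values) for values in search_space.values())


def tuning_share(num_runs: int, cost_per_run: float, final_run_cost: float) -> float:
    """Fraction of total training energy spent on tuning runs."""
    tuning_cost = num_runs * cost_per_run
    return tuning_cost / (tuning_cost + final_run_cost)


if __name__ == "__main__":
    # Hypothetical search space: 4 x 3 x 2 = 24 configurations.
    space = {
        "learning_rate": [1e-4, 3e-4, 1e-3, 3e-3],
        "batch_size": [32, 64, 128],
        "optimizer": ["sgd", "adam"],
    }
    runs = grid_size(space)
    # Assume each short trial run costs one-sixth of the final training run.
    share = tuning_share(runs, cost_per_run=1 / 6, final_run_cost=1.0)
    print(f"{runs} runs, tuning share of energy: {share:.0%}")
```

Adding one more hyperparameter with three candidate values would triple the run count, which is why search strategies that prune configurations early matter for sustainability.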

The scale of energy consumption in the design phase becomes evident when examining large AI models. OpenAI’s GPT-3, for example, required an estimated 1,300 megawatt-hours (MWh) of electricity for training, a figure comparable to the energy consumption of 1,450 U.S. homes over an entire month (Maslej et al. 2023). However, this estimate only reflects the final training run and does not account for the extensive trial-and-error processes that preceded model selection. In deep reinforcement learning applications, such as DeepMind’s AlphaZero, models undergo repeated training cycles to improve decision-making policies, further amplifying energy demands.

The carbon footprint of AI model design varies significantly depending on the computational resources required and the energy sources powering the data centers where training occurs. A widely cited study found that training a single large-scale natural language processing (NLP) model could produce emissions equivalent to the lifetime carbon footprint of five cars (Strubell, Ganesh, and McCallum 2019b). The impact is even more pronounced when training is conducted in data centers reliant on fossil fuels. For instance, models trained in coal-powered facilities in Virginia (USA) generate far higher emissions than those trained in regions powered by hydroelectric or nuclear energy. Hardware selection also plays a crucial role; training on energy-efficient tensor processing units (TPUs) can significantly reduce emissions compared to using traditional graphics processing units (GPUs).
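The dependence on energy source reduces to a single multiplication: emissions equal energy consumed times the carbon intensity of the grid. The sketch below applies this to the 1,300 MWh GPT-3 training estimate cited above. The two intensity values are illustrative placeholders for a fossil-heavy and a low-carbon grid, not measurements for any specific region.

```python
def emissions_kg(energy_mwh: float, kg_co2_per_kwh: float) -> float:
    """CO2 emissions in kg for a given energy use and grid carbon intensity."""
    return energy_mwh * 1000 * kg_co2_per_kwh  # 1 MWh = 1,000 kWh


if __name__ == "__main__":
    training_mwh = 1300  # GPT-3 training estimate cited in the text
    # Hypothetical grid intensities in kg CO2 per kWh.
    grids = {"fossil-heavy grid": 0.8, "low-carbon grid": 0.02}
    for name, intensity in grids.items():
        print(f"{name}: {emissions_kg(training_mwh, intensity):,.0f} kg CO2")
```

Under these placeholder intensities, the same training run differs by a factor of 40 in emissions, which is why siting decisions can matter as much as model efficiency.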

Table 2 summarizes the estimated carbon emissions associated with training various AI models, illustrating the correlation between model complexity and environmental impact.

| AI Model | Training FLOPs | Estimated \(\textrm{CO}_2\) Emissions (kg) | Equivalent Car Mileage |
|---|---|---|---|
| GPT-3 | \(3.1 \times 10^{23}\) | 502,000 | 1.2 million miles |
| T5-11B | \(2.3 \times 10^{22}\) | 85,000 | 210,000 miles |
| BERT (Base) | \(3.3 \times 10^{18}\) | 650 | 1,500 miles |
| ResNet-50 | \(2.0 \times 10^{17}\) | 35 | 80 miles |
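The mileage column in Table 2 can be cross-checked against the emissions column. The source does not state the conversion factor it used; the sketch below infers it from the table rows and then applies a rounded value of 0.4 kg of CO2 per mile, which is our assumption rather than a figure given in the text.

```python
# (kg CO2, equivalent car miles) as listed in Table 2
TABLE_2 = {
    "GPT-3": (502_000, 1_200_000),
    "T5-11B": (85_000, 210_000),
    "BERT (Base)": (650, 1_500),
    "ResNet-50": (35, 80),
}


def implied_kg_per_mile(co2_kg: float, miles: float) -> float:
    """Conversion factor implied by one table row."""
    return co2_kg / miles


def equivalent_miles(co2_kg: float, kg_per_mile: float = 0.4) -> float:
    """Car miles with the same emissions, using an assumed per-mile factor."""
    return co2_kg / kg_per_mile


if __name__ == "__main__":
    for model, (co2_kg, miles) in TABLE_2.items():
        factor = implied_kg_per_mile(co2_kg, miles)
        print(f"{model}: {factor:.3f} kg CO2 per mile implied")
```

Every row implies a factor between roughly 0.40 and 0.44 kg per mile, so the table is internally consistent with a single passenger-car emission rate.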

Addressing the sustainability challenges of the design phase requires innovations in training efficiency and computational resource management. Researchers have explored techniques such as sparse training, low-precision arithmetic, and weight-sharing methods to reduce the number of required computations without sacrificing model performance. The use of pre-trained models has also gained traction as a means of minimizing resource consumption. Instead of training models from scratch, researchers can fine-tune smaller versions of pre-trained networks, leveraging existing knowledge to achieve similar results with lower computational costs.

Optimizing model search algorithms further contributes to sustainability. Traditional neural architecture search methods require evaluating a large number of candidate architectures, but recent advances in energy-aware NAS approaches prioritize efficiency by reducing the number of training iterations needed to identify optimal configurations. Companies have also begun implementing carbon-aware computing strategies by scheduling training jobs during periods of lower grid carbon intensity or shifting workloads to data centers with cleaner energy sources (Gupta, Elgamal, et al. 2022).
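Carbon-aware scheduling of the kind described above can be sketched as a sliding-window search over a forecast of grid carbon intensity. The function name, the hourly forecast values, and the assumption that the job draws constant power and cannot be paused are all illustrative simplifications; production schedulers handle interruptions, deadlines, and forecast uncertainty.

```python
from typing import Sequence


def best_start_hour(intensity: Sequence[float], job_hours: int) -> int:
    """Return the start index minimizing total carbon intensity over the job.

    `intensity` is an hourly forecast (e.g., g CO2 per kWh). Assumes the job
    runs for `job_hours` contiguous hours at constant power.
    """
    if job_hours <= 0 or job_hours > len(intensity):
        raise ValueError("job_hours must be between 1 and len(intensity)")
    window = sum(intensity[:job_hours])
    best_sum, best_start = window, 0
    for start in range(1, len(intensity) - job_hours + 1):
        # Slide the window: add the hour entering, drop the hour leaving.
        window += intensity[start + job_hours - 1] - intensity[start - 1]
        if window < best_sum:
            best_sum, best_start = window, start
    return best_start


if __name__ == "__main__":
    forecast = [500, 450, 300, 200, 250, 400]  # hypothetical hourly values
    print("start the 2-hour job at hour", best_start_hour(forecast, job_hours=2))
```

Because the job is assumed to draw constant power, minimizing the summed intensity over the window is equivalent to minimizing its emissions.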

The design phase sets the foundation for the entire AI lifecycle, influencing energy demands in both the training and inference stages. As AI models grow in complexity, their development processes must be reevaluated to ensure that sustainability considerations are integrated at every stage. The decisions made during model design not only determine computational efficiency but also shape the long-term environmental footprint of AI technologies.

Manufacturing Phase

The manufacturing phase of AI systems is one of the most resource-intensive aspects of their lifecycle, involving the fabrication of specialized semiconductor hardware such as GPUs, TPUs, FPGAs, and other AI accelerators. The production of these chips requires large-scale industrial processes, including raw material extraction, wafer fabrication, lithography, doping, and packaging—all of which contribute significantly to environmental impact (Bhamra et al. 2024). This phase not only involves high energy consumption but also generates hazardous waste, relies on scarce materials, and has long-term consequences for resource depletion.

Fabrication Materials

The foundation of AI hardware lies in semiconductors, primarily silicon-based integrated circuits that power AI accelerators. However, modern AI chips rely on more than just silicon; they require specialty materials such as gallium, indium, arsenic, and helium, each of which carries unique environmental extraction costs. These materials are often classified as critical elements due to their scarcity, geopolitical sensitivity, and high energy costs associated with mining and refining (Bhamra et al. 2024).

Silicon itself is abundant, but refining it into high-purity wafers requires extensive energy-intensive processes. The production of a single 300mm silicon wafer requires over 8,300 liters of water, along with strong acids such as hydrofluoric acid, sulfuric acid, and nitric acid used for etching and cleaning (Cope 2009). The demand for ultra-pure water in semiconductor fabrication places a significant burden on local water supplies, with leading fabs consuming millions of gallons per day.

Beyond silicon, gallium and indium are essential for high-performance compound semiconductors, such as those used in high-speed AI accelerators and 5G communications. The U.S. Geological Survey has classified indium as a critically endangered material, with global supplies estimated to last fewer than 15 years at current consumption rates (Davies 2011). Meanwhile, helium, a crucial cooling agent in chip production, is a non-renewable resource that, once released, escapes Earth’s gravity, making it permanently unrecoverable. The continued expansion of AI hardware manufacturing is accelerating the depletion of these critical elements, raising concerns about long-term sustainability.

The environmental burden of semiconductor fabrication is further amplified by the use of extreme ultraviolet (EUV) lithography, a process required for manufacturing sub-5nm chips. EUV systems consume massive amounts of energy, requiring high-powered lasers and complex optics. The International Semiconductor Roadmap estimates that each EUV tool consumes approximately one megawatt (MW) of electricity, significantly increasing the carbon footprint of cutting-edge chip production.

Manufacturing Energy Consumption

The energy required to manufacture AI hardware is substantial: the energy used to fabricate a chip often exceeds the energy the chip consumes over its entire operational lifetime. The manufacturing of a single AI accelerator can emit more carbon than years of continuous use in a data center, making fabrication a key hotspot in AI’s environmental impact.

Hazardous Waste and Water Usage in Fabs

Semiconductor fabrication also generates large volumes of hazardous waste, including gaseous emissions, volatile organic compounds (VOCs), chemical wastewater, and solid byproducts. The acids and solvents used in chip production produce toxic waste streams that require specialized handling to prevent contamination of surrounding ecosystems. Despite advancements in wastewater treatment, trace amounts of metals and chemical residues can still be released into rivers and lakes, affecting aquatic biodiversity and human health (Prakash et al. 2023).

The demand for water in semiconductor fabs has also raised concerns about regional water stress. Taiwan Semiconductor Manufacturing Company (TSMC), one of the world’s largest chip manufacturers, consumes millions of gallons of water daily in chip production. Its fab in Arizona is projected to consume 8.9 million gallons per day, a figure that accounts for nearly 3% of the host city’s water supply. While some fabs have begun investing in water recycling systems, these efforts remain insufficient to offset the growing demand.

Sustainable Initiatives

Recognizing the sustainability challenges of semiconductor manufacturing, industry leaders have started implementing initiatives to reduce energy consumption, waste generation, and emissions. Companies like Intel, TSMC, and Samsung have pledged to transition towards carbon-neutral semiconductor fabrication through several key approaches. Many fabs are incorporating renewable energy sources, with facilities in Taiwan and Europe increasingly powered by hydroelectric and wind energy. Water conservation efforts have expanded through closed-loop recycling systems that reduce dependence on local water supplies. Manufacturing processes are being redesigned with eco-friendly etching and lithography techniques that minimize hazardous waste generation. Additionally, companies are developing energy-efficient chip architectures, such as low-power AI accelerators optimized for performance per watt, to reduce the environmental impact of both manufacturing and operation.

Despite these efforts, the overall environmental footprint of AI chip manufacturing continues to grow as demand for AI accelerators escalates. Without significant improvements in material efficiency, recycling, and fabrication techniques, the manufacturing phase will remain a major contributor to AI’s sustainability challenges.

The manufacturing phase of AI hardware represents one of the most resource-intensive and environmentally impactful aspects of AI’s lifecycle. The extraction of critical materials, high-energy fabrication processes, and hazardous waste generation all contribute to AI’s growing carbon footprint. While industry efforts toward sustainable semiconductor manufacturing are gaining momentum, scaling these initiatives to meet rising AI demand remains a significant challenge.

Addressing the sustainability of AI hardware will require a combination of material innovation, supply chain transparency, and greater investment in circular economy models that emphasize chip recycling and reuse. As AI systems continue to advance, their long-term viability will depend not only on computational efficiency but also on reducing the environmental burden of their underlying hardware infrastructure.

Use Phase

The use phase of AI systems represents one of the most energy-intensive stages in their lifecycle, encompassing both training and inference workloads. As AI adoption grows across industries, the computational requirements for developing and deploying models continue to increase, leading to greater energy consumption and carbon emissions. The operational costs of AI systems extend beyond the direct electricity used in processing; they also include the power demands of data centers, cooling infrastructure, and networking equipment that support large-scale AI workloads. Understanding the sustainability challenges of this phase is critical for mitigating AI’s long-term environmental impact.

AI model training is among the most computationally expensive activities in the use phase. Training large-scale models involves running billions or even trillions of mathematical operations across specialized hardware, such as GPUs and TPUs, for extended periods. The energy consumption of training has risen sharply in recent years as AI models have grown in complexity. OpenAI’s GPT-3, for example, required approximately 1,300 megawatt-hours (MWh) of electricity, an amount equivalent to powering 1,450 U.S. homes for a month (Maslej et al. 2023). The carbon footprint of such training runs depends largely on the energy mix of the data center where they are performed. A model trained in a region relying primarily on fossil fuels, such as coal-powered data centers in Virginia, generates significantly higher emissions than one trained in a facility powered by hydroelectric or nuclear energy.
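To make the dependence on energy mix concrete, the following sketch multiplies the 1,300 MWh figure quoted above by a grid carbon intensity. The three intensity values are hypothetical round numbers chosen only to show the spread between grids, and the household cross-check assumes roughly 900 kWh of electricity per U.S. home per month; neither is taken from the cited source.

```python
def training_emissions_tonnes(energy_mwh: float, intensity_g_per_kwh: float) -> float:
    """Convert electricity use into tonnes of CO2e for a given grid intensity."""
    kwh = energy_mwh * 1_000
    grams = kwh * intensity_g_per_kwh
    return grams / 1_000_000  # grams -> tonnes


GPT3_TRAINING_MWH = 1_300  # figure quoted in the text

# Hypothetical grid intensities in gCO2e/kWh (illustrative, not measured).
grids = {"coal-heavy": 800, "mixed": 400, "hydro/nuclear": 30}

for name, intensity in grids.items():
    tonnes = training_emissions_tonnes(GPT3_TRAINING_MWH, intensity)
    print(f"{name:>14}: {tonnes:,.0f} t CO2e")

# Cross-check of the household comparison under the assumed 900 kWh/month.
homes_for_a_month = GPT3_TRAINING_MWH * 1_000 / 900
print(f"about {homes_for_a_month:,.0f} homes for one month")
```

Under these assumptions the same training run ranges from about 39 to about 1,040 tonnes of CO2e, a difference of more than 25x driven entirely by where the electricity comes from.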

Beyond training, the energy demands of AI do not end once a model is developed. The inference phase, where a trained model is used to generate predictions, is responsible for an increasingly large share of AI’s operational carbon footprint. In real-world applications, inference workloads run continuously, handling billions of requests daily across services such as search engines, recommendation systems, language models, and autonomous systems. The cumulative energy impact of inference is substantial, especially in large-scale deployments. While a single training run for a model like GPT-3 is energy-intensive, inference workloads running across millions of users can consume even more power over time. Studies have shown that inference now accounts for more than 60% of total AI-related energy consumption, exceeding the carbon footprint of training in many cases (Patterson, Gonzalez, Holzle, et al. 2022).
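A rough way to see how inference can overtake training is to compute how long a deployed model must serve traffic before its cumulative inference energy equals the one-time training energy. In the sketch below, only the 1,300 MWh training figure comes from the text; the per-query energy and daily query volume are hypothetical placeholders, and `breakeven_days` is an illustrative helper rather than an established metric.

```python
def breakeven_days(training_mwh: float, wh_per_query: float,
                   queries_per_day: float) -> float:
    """Days of serving after which cumulative inference energy
    equals the one-time training energy."""
    if wh_per_query <= 0 or queries_per_day <= 0:
        raise ValueError("per-query energy and query volume must be positive")
    daily_inference_mwh = wh_per_query * queries_per_day / 1_000_000  # Wh -> MWh
    return training_mwh / daily_inference_mwh


# Hypothetical deployment: 0.5 Wh per query, 20 million queries per day.
days = breakeven_days(training_mwh=1_300, wh_per_query=0.5,
                      queries_per_day=20_000_000)
print(f"inference matches training energy after {days:.0f} days")
```

With these placeholder values, inference consumes 10 MWh per day and matches the training energy after 130 days; every day of service beyond that point adds to inference's share of the lifetime total.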

Data centers play a central role in enabling AI, housing the computational infrastructure required for training and inference. These facilities rely on thousands of high-performance servers, each drawing significant power to process AI workloads. The power usage effectiveness (PUE) of a data center, defined as the ratio of total facility energy to the energy delivered to computing equipment, directly influences AI’s carbon footprint. Many modern data centers operate with PUE values between 1.1 and 1.5, meaning that for every unit of power used for computation, an additional 10% to 50% is consumed for cooling, power conversion, and infrastructure overhead (Barroso, Hölzle, and Ranganathan 2019). Cooling systems, in particular, are a major contributor to data center energy consumption, as AI accelerators generate substantial heat during operation.
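The relationship between PUE and overhead can be checked with a short calculation. The sketch below uses the standard definition, total facility energy divided by IT equipment energy; the helper names and the 1,000 MWh workload are illustrative assumptions.

```python
def facility_energy(it_energy_mwh: float, pue: float) -> float:
    """Total facility energy implied by IT energy and PUE (PUE = total / IT)."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return it_energy_mwh * pue


def overhead_energy(it_energy_mwh: float, pue: float) -> float:
    """Energy spent on cooling, power conversion, and other non-IT loads."""
    return facility_energy(it_energy_mwh, pue) - it_energy_mwh


# Hypothetical workload drawing 1,000 MWh at the IT equipment.
for pue in (1.1, 1.5):
    extra = overhead_energy(1_000, pue)
    print(f"PUE {pue}: {extra:.0f} MWh of overhead on 1,000 MWh of compute")
```

For the same 1,000 MWh of computation, a facility at PUE 1.5 spends about 500 MWh on overhead while one at PUE 1.1 spends about 100 MWh, which is why facility efficiency matters alongside model and hardware efficiency.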

The geographic location of data centers has a direct impact on their sustainability. Facilities situated in regions with renewable energy availability can significantly reduce emissions compared to those reliant on fossil fuel-based grids. Companies such as Google and Microsoft have invested in carbon-aware computing strategies, scheduling AI workloads during periods of high renewable energy production to minimize their carbon impact (Gupta, Elgamal, et al. 2022). Google’s DeepMind, for instance, developed an AI-powered cooling optimization system that reduced data center cooling energy consumption by 40%, lowering the overall carbon footprint of AI infrastructure.

The increasing energy demands of AI raise concerns about grid capacity and sustainability trade-offs. AI workloads often compete with other high-energy sectors, such as manufacturing and transportation, for limited electricity supply. In some regions, the rise of AI-driven data centers has led to increased stress on power grids, necessitating new infrastructure investments. The so-called “duck curve” problem, in which abundant midday solar generation depresses net grid demand before a steep evening ramp as solar output fades, poses additional challenges for balancing AI’s energy demands with grid availability. The shift toward distributed AI computing and edge processing is emerging as a potential solution to reduce reliance on centralized data centers, shifting some computational tasks closer to end users.

Mitigating the environmental impact of AI’s use phase requires a combination of hardware, software, and infrastructure-level optimizations. Advances in energy-efficient chip architectures, such as low-power AI accelerators and specialized inference hardware, have shown promise in reducing per-query energy consumption. AI models themselves are being optimized for efficiency through techniques such as quantization, pruning, and distillation, which allow for smaller, faster models that maintain high accuracy while requiring fewer computational resources. Meanwhile, ongoing improvements in cooling efficiency, renewable energy integration, and data center operations are essential for ensuring that AI’s growing footprint remains sustainable in the long term.
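Of the model-level techniques mentioned above, quantization is the simplest to illustrate. The following is a minimal sketch of symmetric per-tensor int8 quantization in plain Python; it is one common scheme among several, not the method of any particular framework, and the weight values are made up.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization of floats to the range [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer code and clamp to the int8 range.
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes: List[int], scale: float) -> List[float]:
    """Map integer codes back to approximate float values."""
    return [c * scale for c in codes]


weights = [0.82, -1.27, 0.03, 0.5, -0.64]  # made-up example values
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(codes, f"scale={scale:.4f}", f"max error={max_error:.5f}")
```

Each weight is stored in one byte instead of the four bytes of a 32-bit float, and the reconstruction error per weight is bounded by half the scale. Real deployments typically combine this with per-channel scales and calibration data, which the sketch omits.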

As AI adoption continues to expand, energy efficiency must become a central consideration in model deployment strategies. The use phase will remain a dominant contributor to AI’s environmental footprint, and without significant intervention, the sector’s electricity consumption could grow exponentially. Sustainable AI development requires a coordinated effort across industry, academia, and policymakers to promote responsible AI deployment while ensuring that technological advancements do not come at the expense of long-term environmental sustainability.

Disposal Phase

The disposal phase of AI systems is often overlooked in discussions of sustainability, yet it presents significant environmental challenges. The rapid advancement of AI hardware has led to shorter hardware lifespans, contributing to growing electronic waste (e-waste) and resource depletion. As AI accelerators, GPUs, and high-performance processors become obsolete within a few years, managing their disposal has become a pressing sustainability concern. Unlike traditional computing devices, AI hardware contains complex materials, rare earth elements, and hazardous substances that complicate recycling and waste management efforts. Without effective strategies for repurposing, recycling, or safely disposing of AI hardware, the environmental burden of AI infrastructure will continue to escalate.

The lifespan of AI hardware is relatively short, particularly in data centers where performance efficiency dictates frequent upgrades. On average, GPUs, TPUs, and AI accelerators are replaced every three to five years, as newer, more powerful models enter the market. This rapid turnover results in a constant cycle of hardware disposal, with large-scale AI deployments generating substantial e-waste. Unlike consumer electronics, which may have secondary markets for resale or reuse, AI accelerators often become unviable for commercial use once they are no longer state-of-the-art. The push for ever-faster and more efficient AI models accelerates this cycle, leading to an increasing volume of discarded high-performance computing hardware.
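The effect of replacement cycles on a device's footprint can be approximated by spreading its manufacturing (embodied) emissions over its service life. The sketch below is a simplified accounting under stated assumptions: the embodied and operational figures are hypothetical, and the function `annual_footprint_kg` is an illustrative helper, not a standard lifecycle assessment method.

```python
def annual_footprint_kg(embodied_kg: float, lifespan_years: float,
                        operational_kg_per_year: float) -> float:
    """Yearly CO2e when embodied (manufacturing) emissions are
    amortized evenly over the hardware's service life."""
    if lifespan_years <= 0:
        raise ValueError("lifespan_years must be positive")
    return embodied_kg / lifespan_years + operational_kg_per_year


# Hypothetical accelerator: 1,500 kg CO2e embodied, 600 kg CO2e per year in use.
three_year = annual_footprint_kg(1_500, 3, 600)
five_year = annual_footprint_kg(1_500, 5, 600)
saving = 1 - five_year / three_year
print(f"3-year cycle: {three_year:.0f} kg/yr, 5-year cycle: {five_year:.0f} kg/yr")
print(f"extending service life cuts the annual footprint by {saving:.0%}")
```

With these placeholder numbers, moving from a three-year to a five-year cycle lowers the annualized footprint from 1,100 to 900 kg CO2e. The sketch holds operational emissions constant, so it does not capture the efficiency gains that newer hardware may offer, which is the trade-off operators weigh when deciding to upgrade.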

One of the primary environmental concerns with AI hardware disposal is the presence of hazardous materials. AI accelerators contain heavy metals such as lead, cadmium, and mercury, as well as toxic chemical compounds used in semiconductor fabrication. If not properly handled, these materials can leach into soil and water sources, causing long-term environmental and health hazards. The burning of e-waste releases toxic fumes, contributing to air pollution and exposing workers in informal recycling operations to harmful substances. Studies estimate that only 17.4% of global e-waste is properly collected and recycled, leaving the majority to end up in landfills or informal waste processing sites with inadequate environmental protections (Singh and Ogunseitan 2022).