AI Acceleration

DALL·E 3 Prompt: Create an intricate and colorful representation of a System on Chip (SoC) design in a rectangular format. Showcase a variety of specialized machine learning accelerators and chiplets, all integrated into the processor. Provide a detailed view inside the chip, highlighting the rapid movement of electrons. Each accelerator and chiplet should be designed to interact with neural network neurons, layers, and activations, emphasizing their processing speed. Depict the neural networks as a network of interconnected nodes, with vibrant data streams flowing between the accelerator pieces, showcasing the enhanced computation speed.

Purpose

What makes specialized hardware acceleration not just beneficial but essential for practical machine learning deployment, and why does this represent a fundamental shift in how we approach computational system design?

Practical machine learning systems depend entirely on hardware acceleration. Without specialized processors, computational demands remain economically and physically infeasible. General-purpose CPUs achieve only 100 GFLOPS1 for neural network operations (Sze et al. 2017a), while modern training workloads require trillions of operations per second, creating a performance gap that traditional scaling cannot bridge. Hardware acceleration transforms computationally impossible tasks into practical deployments, enabling entirely new application categories. Engineers working with modern AI systems must understand acceleration principles to harness 100-1000\(\times\) performance improvements that make real-time inference, large-scale training, and edge deployment economically viable.

1 GFLOPS (Giga Floating-Point Operations Per Second): A measure of computational throughput representing one billion floating-point operations per second. TOPS (Tera Operations Per Second) represents one trillion operations per second, typically used for integer operations in AI accelerators.

Trace the evolution of hardware acceleration from floating-point coprocessors to modern AI accelerators and explain the architectural principles driving this progression

Classify AI compute primitives (vector operations, matrix multiplication, systolic arrays) and analyze their implementation in contemporary accelerators

Evaluate memory hierarchy designs for AI accelerators and predict their impact on performance bottlenecks using bandwidth and energy consumption metrics

Design mapping strategies for neural network layers onto specialized hardware architectures, considering dataflow patterns and resource utilization trade-offs

Apply compiler optimization techniques (graph optimization, kernel fusion, memory planning) to transform high-level ML models into efficient hardware execution plans

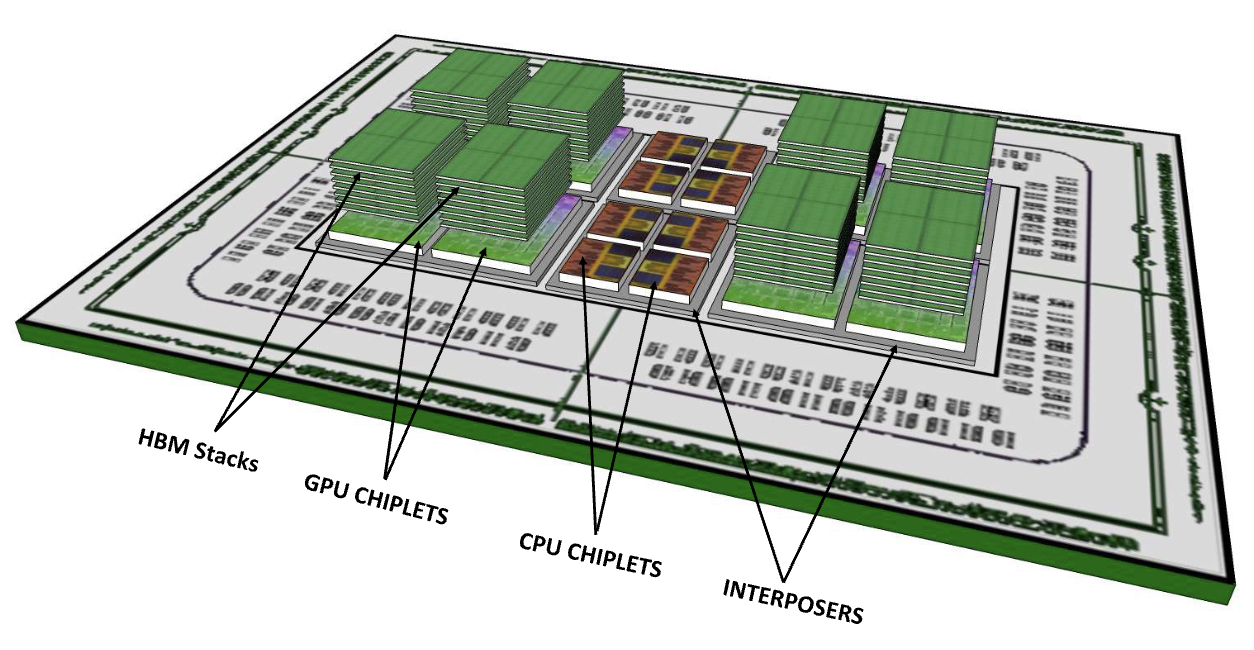

Compare multi-chip scaling approaches (chiplets, multi-GPU, distributed systems) and assess their suitability for different AI workload characteristics

Critique common misconceptions about hardware acceleration and identify potential pitfalls in accelerator selection and deployment strategies

AI Hardware Acceleration Fundamentals

Modern machine learning systems challenge the architectural assumptions underlying general-purpose processors. While software optimization techniques examined in the preceding chapter provide systematic approaches to algorithmic efficiency through precision reduction, structural pruning, and execution refinements, they operate within the constraints of existing computational substrates. Conventional CPUs achieve utilization rates of merely 5-10% when executing typical machine learning workloads (Gholami et al. 2024), due to architectural misalignments between sequential processing models and the highly parallel, data-intensive nature of neural network computations.

This performance gap has driven a shift toward domain-specific hardware acceleration within computer architecture. Hardware acceleration complements software optimization, addressing efficiency limitations through architectural redesign rather than algorithmic modification. The co-evolution of machine learning algorithms and specialized computing architectures has enabled the transition from computationally prohibitive research conducted on high-performance computing systems to ubiquitous deployment across diverse computing environments, from hyperscale data centers to resource-constrained edge devices.

Hardware acceleration for machine learning systems sits at the intersection of computer systems engineering, computer architecture, and applied machine learning. For practitioners developing production systems, the choice among accelerator technologies, including graphics processing units, tensor processing units, and neuromorphic processors, directly determines system-level performance, energy efficiency, and implementation complexity. Deployed systems in domains such as natural language processing, computer vision, and autonomous systems demonstrate performance improvements spanning two to three orders of magnitude relative to general-purpose implementations.

This chapter examines hardware acceleration principles and methodologies for machine learning systems. The analysis begins with the historical evolution of domain-specific computing architectures, showing how design patterns from floating-point coprocessors to graphics processing units inform contemporary AI acceleration strategies. We then address the computational primitives that characterize machine learning workloads, including matrix multiplication, vector operations, and nonlinear activation functions, and analyze the architectural mechanisms through which specialized hardware optimizes these operations via innovations such as systolic array architectures and tensor processing cores.

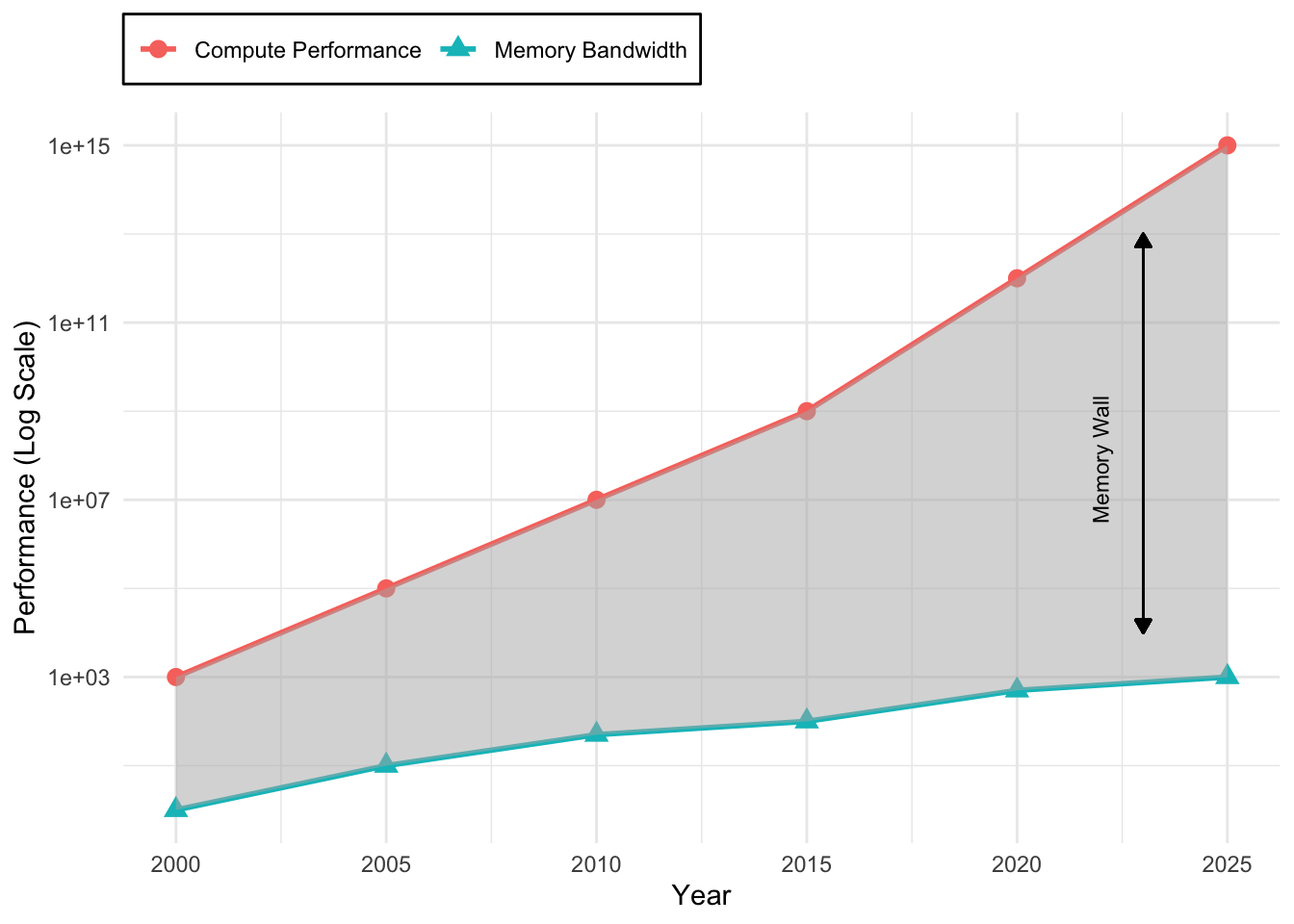

Memory hierarchy design plays a critical role in acceleration effectiveness, given that data movement energy costs typically exceed computational energy by more than two orders of magnitude. This analysis covers memory architecture design principles, from on-chip SRAM buffer optimization to high-bandwidth memory interfaces, and examines approaches to minimizing energy-intensive data movement patterns. We also address compiler optimization and runtime system support, which determine the extent to which theoretical hardware capabilities translate into measurable system performance.

The chapter concludes with scaling methodologies for systems requiring computational capacity beyond single-chip implementations. Multi-chip architectures, ranging from chiplet-based integration to distributed warehouse-scale systems, introduce trade-offs between computational parallelism and inter-chip communication overhead. Through detailed analysis of contemporary systems including NVIDIA GPU architectures, Google Tensor Processing Units, and emerging neuromorphic computing platforms, we establish the theoretical foundations and practical considerations necessary for effective deployment of AI acceleration across diverse system contexts.

Evolution of Hardware Specialization

Computing architectures follow a recurring pattern: as computational workloads grow in complexity, general-purpose processors become increasingly inefficient, prompting the development of specialized hardware accelerators. The need for higher computational efficiency, reduced energy consumption, and optimized execution of domain-specific workloads drives this transition. Machine learning acceleration represents the latest stage in this ongoing evolution, following a trajectory observed in prior domains such as floating-point arithmetic, graphics processing, and digital signal processing.

This evolutionary progression provides context for understanding how modern ML accelerators, including GPUs with tensor cores (specialized units that accelerate matrix operations), Google’s TPUs2, and Apple’s Neural Engine, emerged from established architectural principles. These technologies enable widely deployed applications such as real-time language translation, image recognition, and personalized recommendations. The architectural strategies enabling such capabilities derive from decades of hardware specialization research and development.

2 TPU Origins: Google secretly developed the Tensor Processing Unit (TPU) starting in 2013 when they realized CPUs couldn’t handle the computational demands of their neural networks. The TPUv1, deployed in 2015, delivered 15-30\(\times\) better performance per watt than contemporary GPUs for inference. This breakthrough significantly changed how the industry approached AI hardware, proving that domain-specific architectures could dramatically outperform general-purpose processors for neural network workloads.

Hardware specialization forms the foundation of this transition, enhancing performance and efficiency by optimizing frequently executed computational patterns through dedicated circuit implementations. While this approach yields significant gains, it introduces trade-offs in flexibility, silicon area utilization, and programming complexity. As computing demands continue to evolve, specialized accelerators must balance these factors to deliver sustained improvements in efficiency and performance.

The evolution of hardware specialization provides perspective for understanding modern machine learning accelerators. Many principles that shaped the development of early floating-point and graphics accelerators now inform the design of AI-specific hardware. Examining these past trends offers a framework for analyzing contemporary approaches to AI acceleration and anticipating future developments in specialized computing.

Specialized Computing

The transition toward specialized computing architectures stems from the limitations of general-purpose processors. Early computing systems relied on central processing units (CPUs) to execute all computational tasks sequentially, following a one-size-fits-all approach. As computing workloads diversified and grew in complexity, certain operations, especially floating-point arithmetic, emerged as performance bottlenecks that could not be efficiently handled by CPUs alone. These inefficiencies prompted the development of specialized hardware architectures designed to accelerate specific computational patterns (Flynn 1966).

3 Intel 8087 Impact: The 8087 coprocessor cost hundreds of dollars (up to $700-795 according to various accounts, about $2,100-2,400 today) but transformed scientific computing. CAD workstations that took hours for complex calculations could complete them in minutes. This success created the entire coprocessor market and established the economic model for specialized hardware that persists today: charge premium prices for dramatic performance improvements in specific domains.

One of the earliest examples of hardware specialization was the Intel 8087 mathematics coprocessor3, introduced in 1980. This floating-point unit (FPU) was designed to offload arithmetic-intensive computations from the main CPU, dramatically improving performance for scientific and engineering applications. The 8087 demonstrated unprecedented efficiency, achieving performance gains of up to 100× for floating-point operations compared to software-based implementations on general-purpose processors (Fisher 1981). This milestone established a principle in computer architecture: carefully designed hardware specialization could provide order-of-magnitude improvements for well-defined, computationally intensive tasks.

The success of floating-point coprocessors4 led to their eventual integration into mainstream processors. The Intel 486DX, released in 1989, incorporated an on-chip floating-point unit, eliminating the requirement for an external coprocessor. This integration improved processing efficiency and established a recurring pattern in computer architecture: successful specialized functions become standard features in subsequent generations of general-purpose processors (Patterson and Hennessy 2021).

4 Coprocessor: A specialized secondary processor designed to handle specific tasks that the main CPU performs poorly. The 8087 math coprocessor was the first successful example, followed by graphics coprocessors (GPUs) and network processors. Modern “accelerators” are essentially evolved coprocessors. The term changed as these chips became more powerful than host CPUs for their target workloads. Today’s AI accelerators follow the same pattern but often eclipse CPU performance.

Early floating-point acceleration established principles that continue to influence modern hardware specialization:

- Identification of computational bottlenecks through workload analysis

- Development of specialized circuits for frequent operations

- Creation of efficient hardware-software interfaces

- Progressive integration of proven specialized functions

This progression from domain-specific specialization to general-purpose integration has shaped modern computing architectures. As computational workloads expanded beyond arithmetic operations, these core principles were applied to new domains, such as graphics processing, digital signal processing, and ultimately, machine learning acceleration. Each domain introduced specialized architectures tailored to their unique computational requirements, establishing hardware specialization as an approach for advancing computing performance and efficiency in increasingly complex workloads.

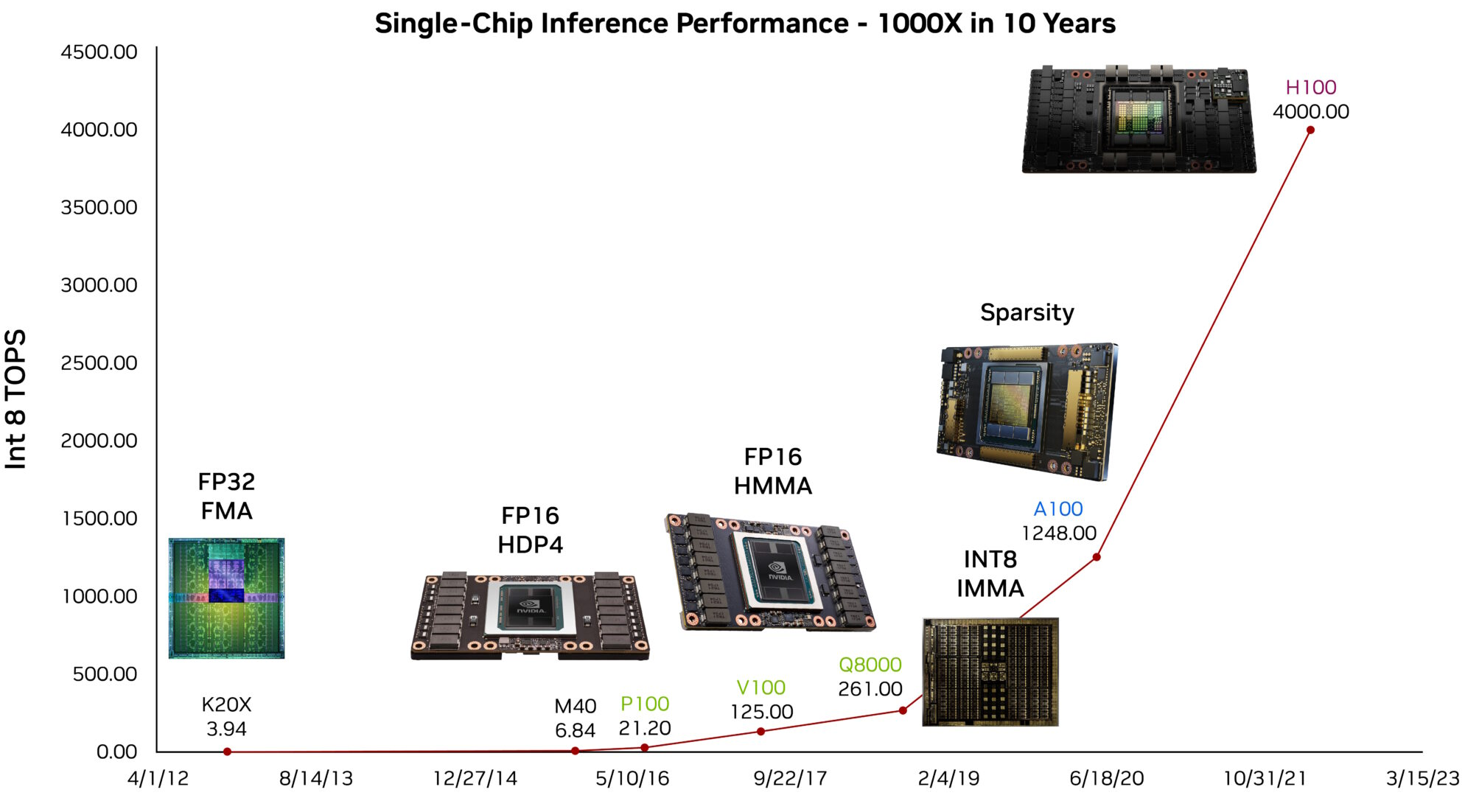

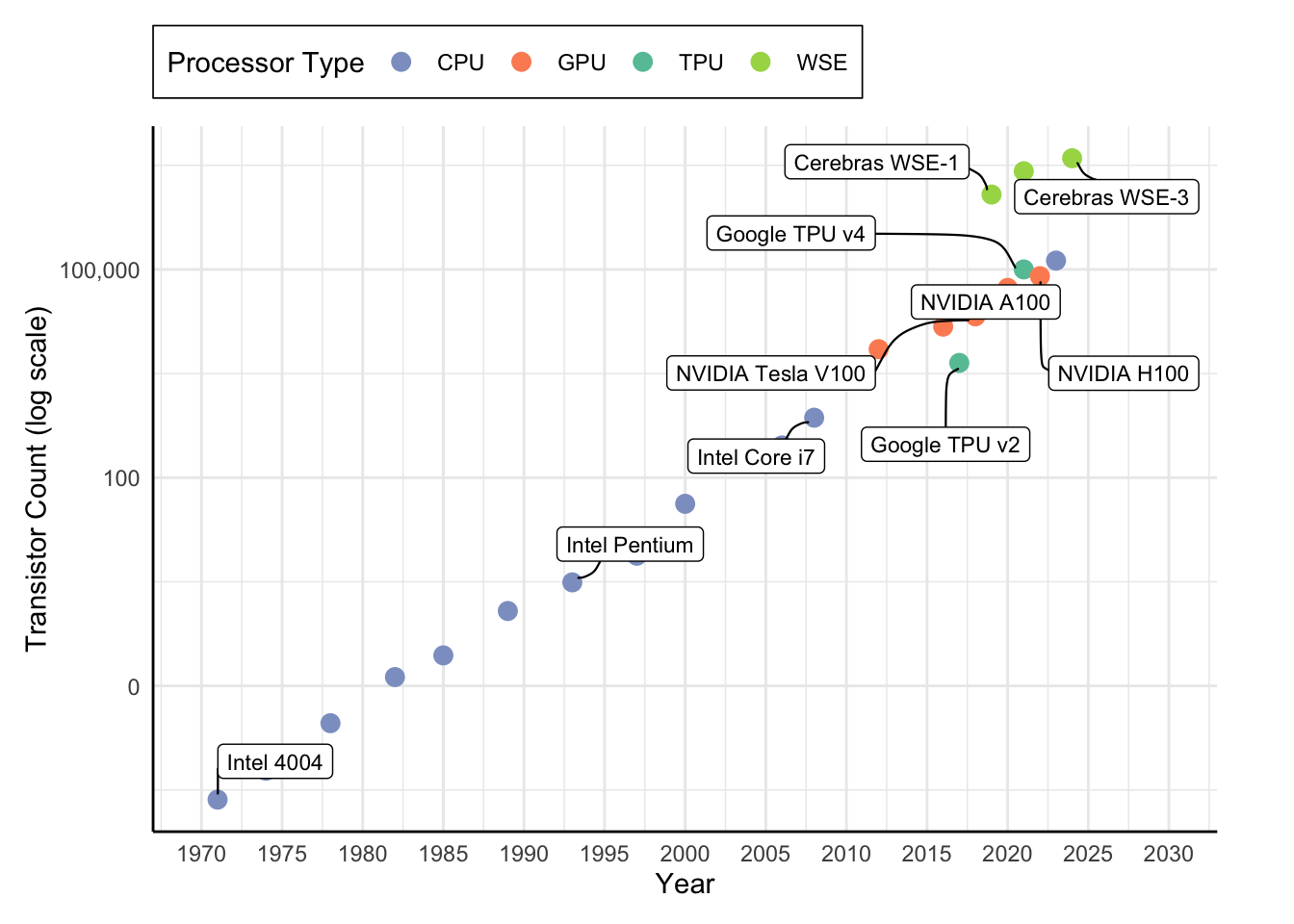

The evolution of specialized computing hardware follows a consistent trajectory, wherein architectural innovations are introduced to address emerging computational bottlenecks and are subsequently incorporated into mainstream computing platforms. As illustrated in Figure 1, each computing era produced accelerators that addressed the dominant workload characteristics of the period. These developments have advanced architectural efficiency and shaped the foundation upon which contemporary machine learning systems operate. The computational capabilities required for tasks such as real-time language translation, personalized recommendations, and on-device inference depend on foundational principles and architectural innovations established in earlier domains, including floating-point computation, graphics processing, and digital signal processing.

Parallel Computing and Graphics Processing

The principles established through floating-point acceleration provided a blueprint for addressing emerging computational challenges. As computing applications diversified, new computational patterns emerged that exceeded the capabilities of general-purpose processors. This expansion of specialized computing manifested across multiple domains, each contributing unique insights to hardware acceleration strategies.

Graphics processing emerged as a primary driver of hardware specialization in the 1990s. Early graphics accelerators focused on specific operations like bitmap transfers and polygon filling. The introduction of programmable graphics pipelines with NVIDIA’s GeForce 256 in 1999 represented a significant advancement in specialized computing. Graphics Processing Units (GPUs) demonstrated how parallel processing architectures could efficiently handle data-parallel workloads, achieving 50-100\(\times\) speedups in 3D rendering tasks like texture mapping and vertex transformation. By 2004, high-end GPUs could process over 100 million polygons per second (Owens et al. 2008).

Concurrently, Digital Signal Processing (DSP) processors established parallel data path architectures with specialized multiply-accumulate units and circular buffers optimized for filtering and transform operations. Texas Instruments’ TMS32010 (1983) demonstrated how domain-specific instruction sets could dramatically improve performance for signal processing applications (Lyons 2011).
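The combination of multiply-accumulate units and circular buffers described above can be sketched in software. The following is a minimal illustration, not a model of the TMS32010 or any specific DSP; the class name `CircularFIR` and its methods are hypothetical. It implements a finite impulse response (FIR) filter in which each new sample overwrites the oldest one in a fixed-size buffer, and each output is a chain of multiply-accumulate steps.

```python
class CircularFIR:
    """Illustrative FIR filter using a circular buffer (hypothetical helper)."""

    def __init__(self, taps):
        self.taps = list(taps)
        self.buf = [0.0] * len(self.taps)  # fixed-size sample history
        self.head = 0                      # index of the most recent sample

    def step(self, sample):
        n = len(self.taps)
        # Advance the write pointer with wrap-around instead of shifting data.
        self.head = (self.head + 1) % n
        self.buf[self.head] = sample       # overwrites the oldest sample
        acc = 0.0
        for k in range(n):
            # One multiply-accumulate per tap; taps[k] pairs with the
            # sample that arrived k steps ago.
            acc += self.taps[k] * self.buf[(self.head - k) % n]
        return acc


if __name__ == "__main__":
    fir = CircularFIR([0.5, 0.5])          # two-tap moving average
    print([fir.step(x) for x in [2.0, 4.0, 6.0]])
```

The buffer never moves data: only the pointer wraps. Dedicated DSP hardware typically performs this pointer update and the multiply-accumulate as part of a single instruction, which is the kind of domain-specific support the paragraph refers to.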

Network processing introduced additional patterns of specialization. Network processors developed unique architectures to handle packet processing at line rate, incorporating multiple processing cores, specialized packet manipulation units, and sophisticated memory management systems. Intel’s IXP2800 network processor demonstrated how multiple levels of hardware specialization could be combined to address complex processing requirements.

These diverse domains of specialization exhibit several common characteristics:

- Identification of domain-specific computational patterns

- Development of specialized processing elements and memory hierarchies

- Creation of domain-specific programming models

- Progressive evolution toward more flexible architectures

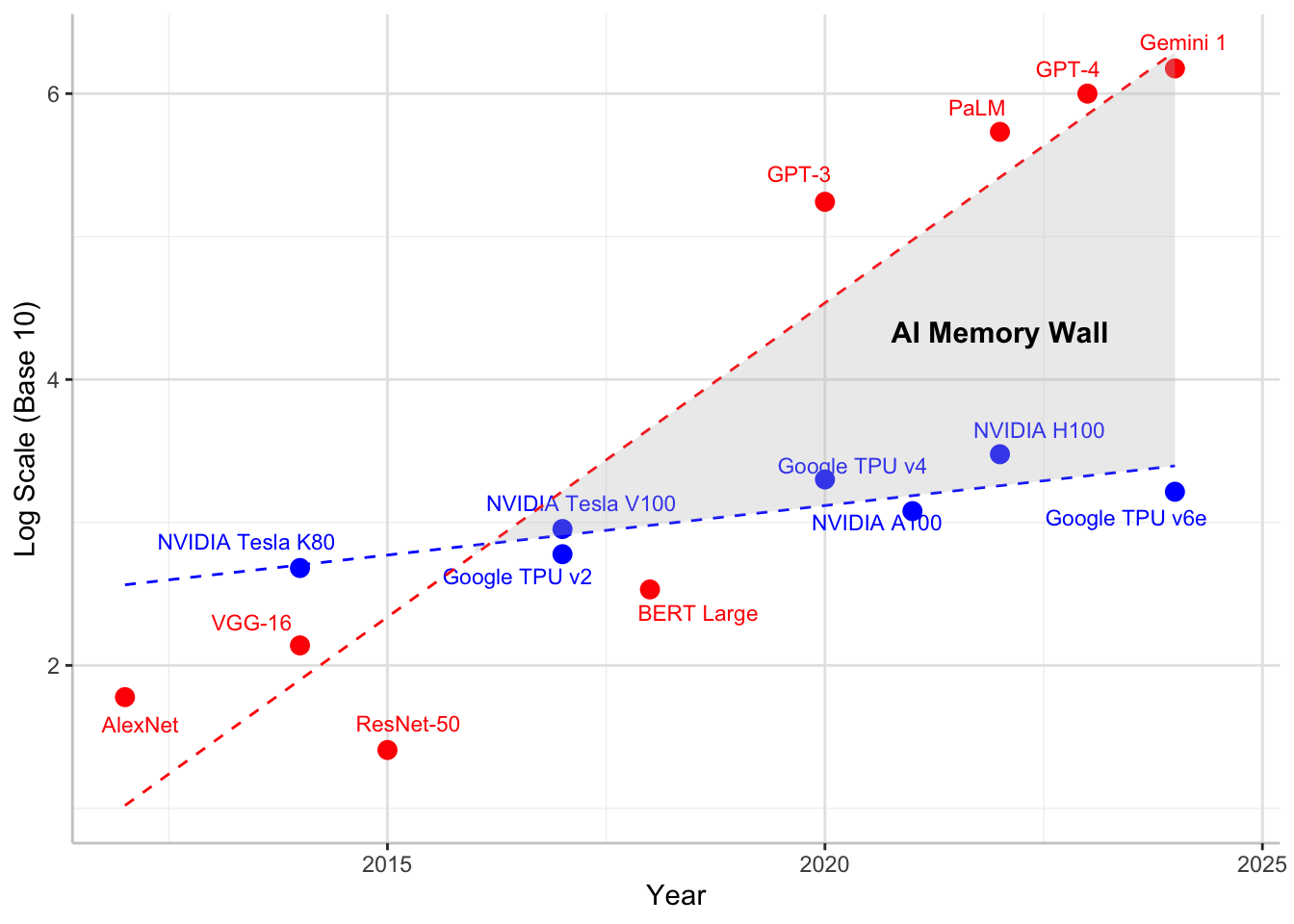

This period of expanding specialization demonstrated that hardware acceleration strategies could address diverse computational requirements across multiple domains. The GPU’s success in parallelizing 3D graphics pipelines enabled its subsequent adoption for training deep neural networks, exemplified by AlexNet5 in 2012, which executed on consumer-grade NVIDIA GPUs. DSP innovations in low-power signal processing facilitated real-time inference on edge devices, including voice assistants and wearables. These domains informed ML hardware designs and established that accelerators could be deployed across both cloud and embedded contexts, principles that continue to influence contemporary AI ecosystem development.

5 AlexNet’s GPU Revolution: AlexNet’s breakthrough wasn’t just algorithmic. It proved GPUs could train deep networks 10\(\times\) faster than CPUs (Krizhevsky, Sutskever, and Hinton 2017). The team split the 8-layer network across two NVIDIA GTX 580s (512 cores each), reducing training time from weeks to days. This success triggered the “deep learning gold rush” and established NVIDIA as the default AI hardware company, with GPU sales for data centers growing from $200 million to $47 billion by 2024. Modern GPUs like the NVIDIA H100 contain 16,896 streaming processors, demonstrating the massive scaling in parallel processing capability since AlexNet’s era.

Emergence of Domain-Specific Architectures

The emergence of domain-specific architectures (DSA)6 marks a shift in computer system design, driven by two factors: the breakdown of traditional scaling laws and the increasing computational demands of specialized workloads. The slowdown of Moore’s Law7, which previously ensured predictable enhancements in transistor density every 18 to 24 months, and the end of Dennard scaling8, which permitted frequency increases without corresponding power increases, created a performance and efficiency bottleneck in general-purpose computing. As John Hennessy and David Patterson noted in their 2017 Turing Lecture (Hennessy and Patterson 2019), these limitations signaled the onset of a new era in computer architecture, one centered on domain-specific solutions that optimize hardware for specialized workloads.

6 Domain-Specific Architectures (DSA): Computing architectures optimized for specific application domains rather than general-purpose computation. Unlike CPUs designed for flexibility, DSAs sacrifice programmability for dramatic efficiency gains. Google’s TPU achieves 15-30\(\times\) better performance per watt than GPUs for neural networks, while video codecs provide 100-1000\(\times\) improvements over software decoding. The 2018 Turing Award recognized this shift as the defining trend in modern computer architecture.

7 Moore’s Law: Intel co-founder Gordon Moore’s 1965 observation that transistor density doubles every 18-24 months. This exponential scaling drove computing progress for 50 years, enabling everything from smartphones to supercomputers. However, physical limits around 2005 slowed this pace dramatically. Modern 3 nm chips cost $20 billion to develop versus $3 million in 1999, forcing the industry toward specialized architectures.

8 Dennard Scaling: Robert Dennard’s 1974 principle that as transistors shrink, their power density remains constant, allowing higher frequencies without increased power consumption. This enabled CPUs to reach 3+ GHz by 2005. However, quantum effects and leakage current ended Dennard scaling around 2005, forcing architects to prioritize efficiency over raw speed and leading to the multi-core revolution.

Historically, improvements in processor performance depended on semiconductor process scaling and increasing clock speeds. However, as power density limitations restricted further frequency scaling, and as transistor miniaturization encountered increasing physical and economic constraints, architects explored alternative approaches to sustain computational growth. This resulted in a shift toward domain-specific architectures, which dedicate silicon resources to optimize computation for specific application domains, trading flexibility for efficiency.

Domain-specific architectures achieve superior performance and energy efficiency through several key principles:

Customized datapaths: Design processing paths specifically optimized for target application patterns, enabling direct hardware execution of common operations. For example, matrix multiplication units in AI accelerators implement systolic arrays—grid-like networks of processing elements that rhythmically compute and pass data through neighboring units—tailored for neural network computations.

Specialized memory hierarchies: Optimize memory systems around domain-specific access patterns and data reuse characteristics. This includes custom cache configurations, prefetching logic, and memory controllers tuned for expected workloads.

Reduced instruction overhead: Implement domain-specific instruction sets that minimize decode and dispatch complexity by encoding common operation sequences into single instructions. This improves both performance and energy efficiency.

Direct hardware implementation: Create dedicated circuit blocks that natively execute frequently used operations without software intervention. This eliminates instruction processing overhead and maximizes throughput.
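The systolic array mentioned under customized datapaths can be made concrete with a small cycle-by-cycle simulation. This is a sketch under stated assumptions: it models an output-stationary arrangement (each processing element keeps one output accumulator), which is only one of several possible dataflows and is not claimed to match any particular accelerator. The function name `systolic_matmul` is hypothetical.

```python
def systolic_matmul(A, B):
    """Simulate an output-stationary systolic array computing C = A @ B.

    A is M x K and B is K x N (lists of lists). Returns (C, cycles).
    """
    M, K, N = len(A), len(B), len(B[0])
    acc = [[0] * N for _ in range(M)]    # one accumulator per processing element
    a_reg = [[0] * N for _ in range(M)]  # operands flowing left to right
    b_reg = [[0] * N for _ in range(M)]  # operands flowing top to bottom
    cycles = M + N + K - 2               # time for the last operands to reach the far corner

    for t in range(cycles):
        # Shift phase: each element takes its neighbour's previous value.
        for i in range(M):
            for j in range(N - 1, 0, -1):
                a_reg[i][j] = a_reg[i][j - 1]
            k = t - i                    # row i enters i cycles late (input skew)
            a_reg[i][0] = A[i][k] if 0 <= k < K else 0
        for j in range(N):
            for i in range(M - 1, 0, -1):
                b_reg[i][j] = b_reg[i - 1][j]
            k = t - j                    # column j enters j cycles late
            b_reg[0][j] = B[k][j] if 0 <= k < K else 0
        # Compute phase: every element performs one multiply-accumulate.
        for i in range(M):
            for j in range(N):
                acc[i][j] += a_reg[i][j] * b_reg[i][j]
    return acc, cycles


if __name__ == "__main__":
    C, cycles = systolic_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]])
    print(C, cycles)
```

Each operand is fetched from outside the array once and then passed between neighbours, so an element of `A` is reused by every column and an element of `B` by every row. That reuse, rather than the arithmetic itself, is what the datapath is customized for.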

Modern smartphones offer a compelling demonstration of these principles. They can decode 4K video at 60 frames per second while consuming only a few watts of power, even though video processing requires billions of operations per second. This efficiency is achieved through dedicated hardware video codecs that implement industry standards such as H.264/AVC (introduced in 2003) and H.265/HEVC (finalized in 2013) (Sullivan et al. 2012). These specialized circuits provide 100–1000\(\times\) improvements in both performance and power efficiency compared to software-based decoding on general-purpose processors.

9 Application-Specific Integrated Circuits (ASICs): Custom silicon chips designed for a single application, offering maximum efficiency by eliminating unused features. Bitcoin mining ASICs achieve 100,000\(\times\) better energy efficiency than CPUs for SHA-256 hashing. However, their inflexibility means they become worthless if algorithms change. An estimated $5 billion in Ethereum mining ASICs became obsolete when Ethereum switched to proof-of-stake in September 2022.

The trend toward specialization continues to accelerate, with new architectures emerging for an expanding range of domains. Genomics processing benefits from custom accelerators that optimize sequence alignment and variant calling, reducing the time required for DNA analysis (Shang, Wang, and Liu 2018). Similarly, blockchain computation has produced application-specific integrated circuits (ASICs)9 optimized for cryptographic hashing, substantially increasing the efficiency of mining operations (Bedford Taylor 2017). These examples demonstrate that domain-specific architecture represents a fundamental transformation in computing systems, offering tailored solutions that address the growing complexity and diversity of modern computational workloads.

Machine Learning Hardware Specialization

Machine learning constitutes a computational domain with unique characteristics that have driven the development of specialized hardware architectures. Unlike traditional computing workloads that exhibit irregular memory access patterns and diverse instruction streams, neural networks are characterized by predictable patterns: dense matrix multiplications, regular data flow, and tolerance for reduced precision. These characteristics enable specialized hardware optimizations that would be ineffective for general-purpose computing but provide substantial speedups for ML workloads.

Machine learning computational requirements reveal limitations in traditional processors. CPUs achieve only 5-10% utilization on neural network workloads, delivering approximately 100 GFLOPS10 while consuming hundreds of watts. This inefficiency results from architectural mismatches: CPUs optimize for single-thread performance and irregular memory access, while neural networks require massive parallelism and predictable data streams. The memory bandwidth11 constraint becomes particularly severe: a single neural network layer may require accessing gigabytes of parameters, overwhelming CPU cache hierarchies12 designed for kilobyte-scale working sets.

10 GFLOPS (Giga Floating-Point Operations Per Second): A measure of computational throughput representing one billion floating-point operations per second. TOPS (Tera Operations Per Second) represents one trillion operations per second, typically used for integer operations in AI accelerators.

11 Memory Bandwidth: The rate at which data can be transferred between memory and processors, measured in GB/s or TB/s. AI workloads are often bandwidth-bound rather than compute-bound. NVIDIA H100 provides 3.35 TB/s (approximately 40\(\times\) faster than typical DDR5-4800 configurations at ~80 GB/s) because neural networks require constant weight access, making memory bandwidth the primary bottleneck in many AI applications.

12 Cache Hierarchy: Multi-level memory system with L1, L2, and L3 caches providing progressively larger capacity but higher latency. CPUs optimize for 32-64KB L1 caches with <1ns access time, but neural networks need gigabytes of weights that cannot fit in cache, causing frequent expensive DRAM accesses (100ns latency) and degrading performance from 90%+ cache hit rates to <10%.
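The utilization and bandwidth figures above can be checked with simple arithmetic. The sketch below uses only numbers quoted in the text (100 GFLOPS at 5-10% utilization, roughly 80 GB/s for DDR5-4800, 3.35 TB/s for the H100), plus one assumption: a layer with 1 GB of parameters, chosen purely for illustration. The function names are hypothetical.

```python
def implied_peak_gflops(achieved_gflops, utilization):
    """Peak throughput implied by an achieved rate and a utilization fraction."""
    return achieved_gflops / utilization


def transfer_time_ms(num_bytes, bandwidth_gb_per_s):
    """Time to stream num_bytes once at the given bandwidth, in milliseconds."""
    return num_bytes / (bandwidth_gb_per_s * 1e9) * 1e3


if __name__ == "__main__":
    # 100 GFLOPS at 5-10% utilization implies a 1-2 TFLOPS theoretical peak.
    print(implied_peak_gflops(100, 0.10), implied_peak_gflops(100, 0.05))

    weights_bytes = 1e9  # assumed 1 GB of parameters (illustrative only)
    ddr5_ms = transfer_time_ms(weights_bytes, 80)    # ~80 GB/s DDR5-4800
    hbm_ms = transfer_time_ms(weights_bytes, 3350)   # 3.35 TB/s H100
    print(ddr5_ms, hbm_ms, ddr5_ms / hbm_ms)
```

Under these assumptions, reading the weights once takes about 12.5 ms from DDR5 and about 0.3 ms from the H100's memory, a ratio of roughly 40\(\times\). If a layer touches every weight only once per input, this transfer time is a lower bound on its execution time no matter how many arithmetic units are available.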

The energy economics of data movement influence accelerator design. Accessing data from DRAM requires approximately 640 picojoules while performing a multiply-accumulate operation consumes only 3.7 pJ, approximately a 173× penalty (specific values vary by technology node and design) that establishes minimizing data movement as the primary optimization target. This disparity explains the progression from repurposed graphics processors to purpose-built neural network accelerators. GPUs achieve 15,000+ GFLOPS through massive parallelism but encounter efficiency challenges from their graphics heritage. TPUs and other custom accelerators achieve utilization above 85% by implementing systolic arrays and other architectures that maximize data reuse while minimizing movement.
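The effect of data reuse on energy can be estimated from the two figures quoted above. The following sketch makes simplifying assumptions that are not from the text: every multiply-accumulate needs one operand that originally came from DRAM, each fetched operand is reused `reuse` times, and the cost of on-chip storage is ignored. The function name is hypothetical.

```python
DRAM_ACCESS_PJ = 640.0  # energy per DRAM access, as quoted in the text
MAC_PJ = 3.7            # energy per multiply-accumulate, as quoted in the text


def energy_per_mac_pj(reuse):
    """Average energy per MAC when each DRAM fetch serves `reuse` MACs.

    Simplified model: on-chip buffer energy is ignored (an assumption).
    """
    return MAC_PJ + DRAM_ACCESS_PJ / reuse


if __name__ == "__main__":
    print(DRAM_ACCESS_PJ / MAC_PJ)      # ratio of DRAM access to MAC energy
    for reuse in (1, 8, 64, 512):
        print(reuse, energy_per_mac_pj(reuse))
```

With no reuse, data movement accounts for almost all of the 643.7 pJ spent per operation. At a reuse factor of 64 the total falls to 13.7 pJ, and arithmetic starts to become a meaningful share of the budget. This is the quantitative motivation for architectures that keep operands on chip.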

Training and inference present distinct computational profiles that influence accelerator design. Training requires high-precision arithmetic (FP32 or FP16) for gradient computation and weight updates, bidirectional data flow for backpropagation13, and large memory capacity for storing activations. Inference can exploit reduced precision (INT8 or INT4), requires only forward computation, and prioritizes latency over throughput14. These differences drive specialized architectures: training accelerators maximize FLOPS and memory bandwidth, while inference accelerators optimize for energy efficiency and deterministic latency.
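The precision choices above translate directly into memory footprint. The sketch below computes weight storage for the formats named in the text; the one-billion-parameter model is an assumed example, and the function and table names are hypothetical.

```python
# Bytes needed to store one value in each numeric format.
BYTES_PER_VALUE = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_footprint_gb(num_params, precision):
    """Storage for the weights alone, in gigabytes (10^9 bytes)."""
    return num_params * BYTES_PER_VALUE[precision] / 1e9


if __name__ == "__main__":
    params = 1_000_000_000  # assumed model size, for illustration only
    for precision in ("fp32", "fp16", "int8", "int4"):
        print(precision, weight_footprint_gb(params, precision))
```

For this assumed model, moving from FP32 to INT8 cuts weight storage from 4 GB to 1 GB, and the same factor applies to the bytes that must cross the memory interface. The figure covers weights only; training also stores activations and optimizer state, which this sketch does not model.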

13 Backpropagation: The key training algorithm that computes gradients by propagating errors backwards through the network using the chain rule. Unlike forward inference which only needs current layer outputs, backpropagation requires storing all intermediate activations from forward pass, increasing memory requirements 2-3\(\times\) and necessitating bidirectional data flow that complicates accelerator design.

14 Latency vs Throughput: Latency measures response time for a single request (milliseconds), while throughput measures requests processed per unit time (requests/second). Training optimizes throughput to process large batches efficiently, while inference prioritizes latency for real-time responses. A GPU might achieve 1000 images/second (high throughput) but take 50ms per image (high latency), for example when it processes images in batches of 50, making that configuration unsuitable for real-time applications requiring <10ms response times.
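The way high throughput and high latency can coexist is easiest to see with batching. The sketch below assumes a simple model in which every request in a batch waits for the whole batch to finish; the batch sizes and the 5 ms single-image time are illustrative assumptions, and the function name is hypothetical.

```python
def batch_metrics(batch_size, batch_time_ms):
    """Return (throughput in items/second, per-item latency in ms).

    Assumes each item's latency equals the time to process its whole batch.
    """
    throughput = batch_size * 1000.0 / batch_time_ms
    latency_ms = batch_time_ms
    return throughput, latency_ms


if __name__ == "__main__":
    print(batch_metrics(50, 50.0))  # large batch: 1000 images/s, 50 ms latency
    print(batch_metrics(1, 5.0))    # assumed single-image run: 200 images/s, 5 ms
```

A batch of 50 images completed in 50 ms reproduces the 1000 images/second and 50 ms figures in the footnote. Processing one image at a time, at an assumed 5 ms each, meets a 10 ms deadline but delivers one fifth of the throughput.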

Deployment context shapes architectural choices. Datacenter accelerators accept 700-watt power budgets to maximize throughput for training massive models. Edge devices must deliver real-time inference within milliwatt constraints, driving architectures that eliminate every unnecessary data movement. Mobile processors balance performance with battery life, while automotive systems prioritize deterministic response times for safety-critical applications. This diversity has produced a rich ecosystem of specialized accelerators, each optimized for specific deployment scenarios and computational requirements.

In data centers, training accelerators such as NVIDIA H100 and Google TPUv4 reduce model development from weeks to days through massive parallelism and high-bandwidth memory systems. These systems prioritize raw computational throughput, accepting 700-watt power consumption to achieve petaflop-scale performance. The economics support this trade-off: shortening training from weeks to days can save millions in operational costs and accelerate time-to-market for AI applications.

At the opposite extreme, edge deployment requires different optimization strategies. Processing-in-memory architectures eliminate data movement by integrating compute directly with memory. Dynamic voltage scaling reduces power by 50-90% during low-intensity operations. Neuromorphic designs process only changing inputs, achieving 1000× power reduction for temporal workloads. These techniques enable sophisticated AI models to operate continuously on battery power, supporting applications from smartphone photography to autonomous sensors that function for years without external power.
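The power savings attributed to dynamic voltage scaling follow from how switching power depends on voltage and frequency. The sketch below assumes the standard first-order CMOS model, in which dynamic power is proportional to capacitance times voltage squared times frequency. That model is not stated in the text, and it ignores leakage power. The function name is hypothetical.

```python
def relative_dynamic_power(voltage_scale, frequency_scale):
    """Dynamic power relative to nominal, assuming P ~ C * V^2 * f.

    Both arguments are fractions of the nominal value (1.0 = unchanged).
    Leakage power is ignored (a simplifying assumption).
    """
    return voltage_scale ** 2 * frequency_scale


if __name__ == "__main__":
    for scale in (0.8, 0.7, 0.5):
        remaining = relative_dynamic_power(scale, scale)
        print(scale, remaining, 1.0 - remaining)
```

Under this model, lowering both voltage and frequency to 70% of nominal leaves about 34% of the original dynamic power, and lowering both to 50% leaves 12.5%. Both results fall within the 50-90% reduction range cited above.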

The success of application-specific accelerators demonstrates that no single architecture can efficiently address all ML workloads. The 156 billion edge devices projected by 2030 will require architectures optimized for energy efficiency and real-time guarantees, while cloud-scale training will continue advancing the boundaries of computational throughput. This diversity drives continued innovation in specialized architectures, each optimized for its specific deployment context and computational requirements.

The evolution of specialized hardware architectures illustrates a principle in computing systems: as computational patterns emerge and mature, hardware specialization follows to achieve optimal performance and energy efficiency. This progression appears clearly in machine learning acceleration, where domain-specific architectures have evolved to meet the increasing computational demands of machine learning models. Unlike general-purpose processors, which prioritize flexibility, specialized accelerators optimize execution for well-defined workloads, balancing performance, energy efficiency, and integration with software frameworks.

Table 1 summarizes key milestones in the evolution of hardware specialization, showing how each era produced architectures tailored to the prevailing computational demands. While these accelerators initially emerged to optimize domain-specific workloads, including floating-point operations, graphics rendering, and media processing, they also introduced architectural strategies that persist in contemporary systems. The specialization principles outlined in earlier generations now underpin the design of modern AI accelerators. Understanding this historical trajectory provides context for analyzing how hardware specialization continues to enable scalable, efficient execution of machine learning workloads across diverse deployment environments.

| Era | Computational Pattern | Architecture Examples | Characteristics |
|---|---|---|---|
| 1980s | Floating-Point & Signal Processing | FPU, DSP | |
| 1990s | 3D Graphics & Multimedia | GPU, SIMD Units | |
| 2000s | Real-time Media Coding | Media Codecs, Network Processors | |
| 2010s | Deep Learning Tensor Operations | TPU, GPU Tensor Cores | |
| 2020s | Application-Specific Acceleration | ML Engines, Smart NICs, Domain Accelerators | |

This historical progression reveals a recurring pattern: each wave of hardware specialization responded to a computational bottleneck, whether graphics rendering, media encoding, or neural network inference. What distinguishes the 2020s is not just specialization, but its pervasiveness: AI accelerators now underpin everything from product recommendations on YouTube to object detection in autonomous vehicles. Unlike earlier accelerators, today’s AI hardware must integrate tightly with dynamic software frameworks and scale across cloud-to-edge deployments. The table illustrates not just the past but also the trajectory toward increasingly tailored, high-impact computing platforms.

For AI acceleration, this transition has introduced challenges that extend well beyond hardware design. Machine learning accelerators must integrate seamlessly into ML workflows by aligning with optimizations at multiple levels of the computing stack. They must operate effectively with widely adopted frameworks such as TensorFlow, PyTorch, and JAX, ensuring that deployment is smooth and consistent across varied hardware platforms. Compiler and runtime support become necessary; advanced optimization techniques, such as graph-level transformations, kernel fusion, and memory scheduling, are critical for using the full potential of these specialized accelerators.

Scalability drives additional complexity as AI accelerators deploy across diverse environments from high-throughput data centers to resource-constrained edge and mobile devices, requiring tailored performance tuning and energy efficiency strategies. Integration into heterogeneous computing¹⁵ environments demands interoperability that enables specialized units to coordinate effectively with conventional CPUs and GPUs in distributed systems.

15 Heterogeneous Computing: Computing systems that combine different types of processors (CPUs, GPUs, TPUs, FPGAs) to optimize performance for diverse workloads. Modern data centers mix x86 CPUs for control tasks, GPUs for training, and TPUs for inference. Programming heterogeneous systems requires frameworks like OpenCL or CUDA that can coordinate execution across different architectures, but offers 10-100\(\times\) efficiency gains by matching each task to optimal hardware.

AI accelerators represent a system-level transformation that requires tight hardware-software coupling. This transformation manifests in three computational patterns, called compute primitives, that drive accelerator design decisions. Understanding these primitives clarifies which architectural features enable the 100-1000\(\times\) performance improvements achieved through coordinated hardware specialization and the software optimization strategies examined in subsequent sections.

The evolution from floating-point coprocessors to AI accelerators reveals a consistent pattern: computational bottlenecks drive specialized hardware development. Where the Intel 8087 addressed floating-point operations that consumed 80% of scientific computing time, modern AI workloads present an even more extreme case. Matrix multiplications and convolutions constitute over 95% of neural network computation. This concentration of computational demand creates unprecedented opportunities for specialization, explaining why AI accelerators achieve 100-1000\(\times\) performance improvements over general-purpose processors.

The specialization principles established through decades of hardware evolution (identifying dominant operations, creating dedicated datapaths, and optimizing memory access patterns) now guide AI accelerator design. However, neural networks introduce unique characteristics that demand new architectural approaches: massive parallelism in matrix operations, predictable data access patterns enabling prefetching, and tolerance for reduced precision that allows aggressive optimization. Understanding these computational patterns, which we term AI compute primitives, explains how modern accelerators transform the theoretical efficiency gains from Chapter 10: Model Optimizations into practical performance improvements. These hardware-software optimizations become critical in deployment scenarios ranging from edge devices (Chapter 2: ML Systems) to cloud-scale inference systems.

Before examining these computational primitives in detail, we need to understand the architectural organization that enables their efficient execution. Modern AI accelerators achieve their dramatic performance improvements through a carefully orchestrated hierarchy of specialized components operating in concert. The architecture comprises three subsystems, each addressing distinct aspects of the computational challenge.

The processing substrate consists of an array of processing elements, each containing dedicated computational units optimized for specific operations: tensor cores execute matrix multiplication, vector units perform element-wise operations, and special function units compute activation functions. These processing elements are organized in a grid topology that enables massive parallelism, with dozens to hundreds of units operating simultaneously on different portions of the computation, exploiting the data-level parallelism inherent in neural network workloads.

The memory hierarchy forms an equally critical architectural component. High-bandwidth memory provides the aggregate throughput required to sustain these numerous processing elements, while a multi-level cache hierarchy, from shared L2 caches down to per-element L1 caches and scratchpads, minimizes the energy cost of data movement. This hierarchical organization embodies a design principle: in AI accelerators, data movement typically consumes more energy than computation itself, so the architecture must prioritize data reuse by keeping frequently accessed values, including weights and partial results, close to the compute units.

The host interface establishes connectivity between the specialized accelerator and the broader computing system, enabling coordination between general-purpose CPUs that manage program control flow and the accelerator that executes computationally intensive neural network operations. This architectural partitioning reflects specialization at the system level: CPUs address control flow, conditional logic, and system coordination, while accelerators focus on the regular, massively parallel arithmetic operations that dominate neural network execution.

Figure 2 illustrates this architectural organization, showing how specialized compute units, hierarchical memory subsystems, and host connectivity integrate to form a system optimized for AI workloads.

AI Compute Primitives

Understanding how hardware evolved toward AI-specific designs requires examining the computational patterns that drove this specialization. The transition from general-purpose CPUs achieving 100 GFLOPS to specialized accelerators delivering 100,000+ GFLOPS reflects architectural optimization for specific computational patterns that dominate machine learning workloads. These patterns, which we term compute primitives, appear repeatedly across all neural network architectures regardless of application domain or model size.

Modern neural networks are built upon a small number of core computational patterns. Regardless of the layer type—whether fully connected, convolutional, or attention-based layers—the underlying operation typically involves multiplying input values by learned weights and accumulating the results. This repeated multiply-accumulate process dominates neural network execution and defines the arithmetic foundation of AI workloads. The regularity and frequency of these operations have led to the development of AI compute primitives: hardware-level abstractions optimized to execute these core computations with high efficiency.

Neural networks exhibit highly structured, data-parallel computations that enable architectural specialization. Building on the parallelization principles established in Section 1.2.2, these patterns emphasize predictable data reuse and fixed operation sequences. AI compute primitives distill these patterns into reusable architectural units that support high-throughput and energy-efficient execution.

This decomposition is illustrated in Listing 1, which defines a dense layer at the framework level.

```python
from tensorflow.keras.layers import Dense

dense = Dense(512)(input_tensor)
```

This high-level call expands into the mathematical operations shown in Listing 2.

```python
output = matmul(input, weights) + bias
output = activation(output)
```

At the processor level, the computation reduces to nested loops that multiply inputs and weights, sum the results, and apply a nonlinear function, as shown in Listing 3.

```python
for n in range(batch_size):
    for m in range(output_size):
        acc = bias[m]
        for k in range(input_size):
            acc += input[n, k] * weights[k, m]
        output[n, m] = activation(acc)
```

This transformation reveals four computational characteristics: data-level parallelism enabling simultaneous execution, structured matrix operations defining computational workloads, predictable data movement patterns driving memory optimization, and frequent nonlinear transformations motivating specialized function units.

The design of AI compute primitives follows three architectural criteria. First, the primitive must be used frequently enough to justify dedicated hardware resources. Second, its specialized implementation must offer substantial performance or energy efficiency gains relative to general-purpose alternatives. Third, the primitive must remain stable across generations of neural network architectures to ensure long-term applicability. These considerations shape the inclusion of primitives such as vector operations, matrix operations, and special function units in modern ML accelerators. Together, they serve as the architectural foundation for efficient and scalable neural network execution.

Vector Operations

Vector operations provide the first level of hardware acceleration by processing multiple data elements simultaneously. This parallelism exists at multiple scales, from individual neurons to entire layers, making vector processing essential for efficient neural network execution. Framework-level code translates to hardware instructions, revealing the critical role of vector processing in neural accelerators.

High-Level Framework Operations

Machine learning frameworks hide hardware complexity through high-level abstractions. These abstractions decompose into progressively lower-level operations, revealing opportunities for hardware acceleration. One such abstraction is shown in Listing 4, which illustrates the execution flow of a linear layer.

```python
from torch import nn

layer = nn.Linear(256, 512)   # 256 inputs to 512 outputs
output = layer(input_tensor)  # Process a batch of inputs
```

This abstraction represents a fully connected layer that transforms input features through learned weights. To understand how hardware acceleration opportunities emerge, Listing 5 shows how the framework translates this high-level expression into mathematical operations.

```python
Z = matmul(weights, input) + bias  # Each output needs all inputs
output = activation(Z)             # Transform each result
```

These mathematical operations further decompose into explicit computational steps during processor execution. Listing 6 illustrates the nested loops that implement these multiply-accumulate operations.

```python
for batch in range(32):  # Process 32 samples at once
    for out_neuron in range(512):  # Compute each output neuron
        acc = 0.0
        for in_feature in range(256):  # Each output needs all inputs
            acc += input[batch, in_feature] * weights[out_neuron, in_feature]
        output[batch, out_neuron] = activation(acc + bias[out_neuron])
```

Sequential Scalar Execution

Traditional scalar processors execute these operations sequentially, processing individual values one at a time. For the linear layer example above with a batch of 32 samples, computing the outputs requires over 4 million multiply-accumulate operations. Each operation involves loading an input value and a weight value, multiplying them, and accumulating the result. This sequential approach becomes highly inefficient when processing the massive number of identical operations required by neural networks.
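The operation count follows directly from the loop bounds of the nested loops. As a minimal sketch (the helper name `count_macs` is illustrative and not part of any framework), the total is the product of batch size, output neurons, and input features:

```python
def count_macs(batch_size: int, in_features: int, out_features: int) -> int:
    """Multiply-accumulate operations for one dense-layer forward pass."""
    # One MAC per (sample, output neuron, input feature) triple,
    # mirroring the three nested loops of the scalar implementation.
    return batch_size * out_features * in_features


total_macs = count_macs(batch_size=32, in_features=256, out_features=512)
print(f"{total_macs:,} multiply-accumulates")  # 4,194,304 multiply-accumulates
```

A scalar processor issues each of these operations individually, along with the loads that feed it.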

Recognizing this inefficiency, modern processors leverage vector processing to transform execution patterns fundamentally.

Parallel Vector Execution

Vector processing units achieve this transformation by operating on multiple data elements simultaneously. Listing 7 demonstrates this approach using RISC-V¹⁶ assembly code that showcases modern vector processing capabilities.

16 RISC-V for AI: RISC-V, the open-source instruction set architecture from UC Berkeley (2010), is becoming important for AI accelerators because it’s freely customizable. Companies like SiFive and Google have created RISC-V chips with custom AI extensions. Unlike proprietary architectures, RISC-V allows hardware designers to add specialized ML instructions without licensing fees, potentially democratizing AI hardware development beyond the current duopoly of x86 and ARM.

```asm
# in_ptr, wt_ptr, and feature_cnt are symbolic register names
vsetvli t0, a0, e32              # Process 8 elements at once
loop_batch:
loop_neuron:
    vxor.vv v0, v0, v0           # Clear 8 accumulators
loop_feature:
    vle32.v v1, (in_ptr)         # Load 8 inputs together
    vle32.v v2, (wt_ptr)         # Load 8 weights together
    vfmacc.vv v0, v1, v2         # 8 multiply-adds at once
    addi in_ptr, in_ptr, 32      # Move to next 8 inputs (8 x 4 bytes)
    addi wt_ptr, wt_ptr, 32      # Move to next 8 weights
    addi feature_cnt, feature_cnt, -8   # 8 features consumed
    bnez feature_cnt, loop_feature
```

This vector implementation processes eight data elements in parallel, reducing both computation time and energy consumption. Vector load instructions transfer eight values simultaneously, maximizing memory bandwidth utilization. The vector multiply-accumulate instruction processes eight pairs of values in parallel, reducing the multiply-accumulate instruction count from over 4 million to approximately 524,000 (4,194,304 / 8).
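The same lane-parallel pattern can be emulated in plain Python to check the instruction-count arithmetic. This is a sketch under stated assumptions: the 8-lane width matches the listing, and `vector_dot` is a hypothetical helper that models each vector multiply-accumulate as one list operation rather than real hardware behavior.

```python
LANES = 8  # assumed vector width in 32-bit elements, matching the listing


def vector_dot(inputs, weights, lanes=LANES):
    """Emulate a lane-parallel dot product; returns (result, vector_mac_count)."""
    assert len(inputs) == len(weights) and len(inputs) % lanes == 0
    acc = [0.0] * lanes  # one accumulator per lane
    vector_macs = 0
    for start in range(0, len(inputs), lanes):
        x = inputs[start:start + lanes]    # models a vector load of inputs
        w = weights[start:start + lanes]   # models a vector load of weights
        acc = [a + xi * wi for a, xi, wi in zip(acc, x, w)]  # one vector MAC
        vector_macs += 1
    return sum(acc), vector_macs  # final reduction across the lanes


# Integer-valued floats keep both summation orders exactly equal
inputs = [float(i % 7) for i in range(256)]
weights = [float(i % 5) for i in range(256)]
result, per_output = vector_dot(inputs, weights)

scalar = sum(x * w for x, w in zip(inputs, weights))
total_vector_macs = 32 * 512 * per_output  # batch x output neurons
print(result == scalar, per_output, total_vector_macs)  # True 32 524288
```

Each output neuron needs 32 vector multiply-accumulates instead of 256 scalar ones, which gives 524,288 for the full layer and batch.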

To clarify how vector instructions map to common deep learning patterns, Table 2 introduces key vector operations and their typical applications in neural network computation. These operations, such as reduction, gather, scatter, and masked operations, are frequently encountered in layers like pooling, embedding lookups, and attention mechanisms. This terminology is necessary for interpreting how low-level vector hardware accelerates high-level machine learning workloads.

| Vector Operation | Description | Neural Network Application |
|---|---|---|
| Reduction | Combines elements across a vector (e.g., sum, max) | Pooling layers, attention score computation |
| Gather | Loads multiple non-consecutive memory elements | Embedding lookups, sparse operations |
| Scatter | Writes to multiple non-consecutive memory locations | Gradient updates for embeddings |
| Masked operations | Selectively operates on vector elements | Attention masks, padding handling |
| Vector-scalar broadcast | Applies scalar to all vector elements | Bias addition, scaling operations |
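To make the terminology concrete, the following sketch gives reference semantics for each operation in the table using plain Python lists. The function names are illustrative choices, not instructions from any particular ISA or library, and each embedding "row" is reduced to a single number for brevity.

```python
def reduce_max(v):
    """Reduction: combine all elements into one value (as in max pooling)."""
    return max(v)


def gather(table, indices):
    """Gather: read non-consecutive entries (as in an embedding lookup)."""
    return [table[i] for i in indices]


def scatter_add(table, indices, updates):
    """Scatter: accumulate updates into non-consecutive entries."""
    out = list(table)
    for i, u in zip(indices, updates):
        out[i] += u  # repeated indices accumulate
    return out


def masked_fill(v, mask, fill):
    """Masked operation: keep elements where mask is True, else use fill."""
    return [x if keep else fill for x, keep in zip(v, mask)]


def broadcast_add(v, scalar):
    """Vector-scalar broadcast: apply one scalar to every element."""
    return [x + scalar for x in v]


scores = [0.5, 2.0, -1.0, 3.5]
embedding = [10.0, 20.0, 30.0, 40.0]

print(reduce_max(scores))                                    # 3.5
print(gather(embedding, [3, 0, 3]))                          # [40.0, 10.0, 40.0]
print(scatter_add(embedding, [1, 1, 2], [0.5, 0.5, -1.0]))   # [10.0, 21.0, 29.0, 40.0]
print(masked_fill(scores, [True, True, False, False], float("-inf")))
print(broadcast_add(scores, 1.0))                            # [1.5, 3.0, 0.0, 4.5]
```

Vector hardware executes each of these as a small number of instructions over all lanes, whereas the Python loops above process one element at a time.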

Vector processing efficiency gains extend beyond instruction count reduction. Memory bandwidth utilization improves as vector loads transfer multiple values per operation. Energy efficiency increases because control logic is shared across multiple operations. These improvements compound across the deep layers of modern neural networks, where billions of operations execute for each forward pass.

Vector Processing History

The principles underlying vector operations have long been central to high-performance computing. In the 1970s and 1980s, vector processors emerged as an architectural solution for scientific computing, weather modeling, and physics simulations, where large arrays of data required efficient parallel processing. Early systems such as the Cray-1¹⁷, one of the first commercially successful supercomputers, introduced dedicated vector units to perform arithmetic operations on entire data vectors in a single instruction. These vector units dramatically improved computational throughput compared to traditional scalar execution (Jordan 1982).

17 Cray-1 Vector Legacy: The Cray-1 (1975) cost $8.8 million (approximately $40-45 million in 2024 dollars) but could perform 160 million floating-point operations per second, roughly 1000× faster than typical computers of the time. Its 64-element vector registers and pipelined vector units established the architectural template that modern AI accelerators still follow: process many data elements simultaneously with specialized hardware pipelines.

These concepts have reemerged in machine learning, where neural networks exhibit structure well suited to vectorized execution. The same operations, such as vector addition, multiplication, and reduction, that once accelerated numerical simulations now drive the execution of machine learning workloads. While the scale and specialization of modern AI accelerators differ from their historical predecessors, the underlying architectural principles remain the same. The resurgence of vector processing in neural network acceleration highlights its utility for achieving high computational efficiency.

Vector operations establish the foundation for neural network acceleration by enabling efficient parallel processing of independent data elements. While vector operations excel at element-wise transformations like activation functions, neural networks also require structured computations that combine multiple input features to produce output features, transformations that naturally express themselves as matrix operations. This need for coordinated computation across multiple dimensions simultaneously leads to the next architectural primitive: matrix operations.

Matrix Operations

Matrix operations form the computational workhorse of neural networks, transforming high-dimensional data through structured patterns of weights, activations, and gradients (Goodfellow, Courville, and Bengio 2013). While vector operations process elements independently, matrix operations orchestrate computations across multiple dimensions simultaneously. These operations reveal patterns that drive hardware acceleration strategies.

Matrix Operations in Neural Networks

Neural network computations decompose into hierarchical matrix operations. As shown in Listing 8, a linear layer demonstrates this hierarchy by transforming input features into output neurons over a batch.

```python
layer = nn.Linear(256, 512)  # Layer transforms 256 inputs to 512 outputs
output = layer(input_batch)  # Process a batch of 32 samples

# Framework Internal: Core operations
Z = matmul(weights, input)  # Matrix: transforms [256 x 32] input to [512 x 32] output
Z = Z + bias                # Vector: adds bias to each output independently
output = relu(Z)            # Vector: applies activation to each element independently
```

This computation demonstrates the scale of matrix operations in neural networks. Each output neuron (512 total) must process all input features (256 total) for every sample in the batch (32 samples). The weight matrix alone contains \(256 \times 512 = 131,072\) parameters that define these transformations, illustrating why efficient matrix multiplication becomes crucial for performance.
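The shape bookkeeping can be checked with a naive matrix product over nested lists. This is a sketch for illustration only: `matmul` and `shape` are hypothetical helpers written in pure Python, and the constant-valued weights and inputs are placeholders rather than trained parameters.

```python
def matmul(a, b):
    """Naive product of a [m x k] and b [k x n], stored as nested lists."""
    m, k, n = len(a), len(b), len(b[0])
    assert all(len(row) == k for row in a), "inner dimensions must match"
    return [[sum(a[i][p] * b[p][j] for p in range(k)) for j in range(n)]
            for i in range(m)]


def shape(mat):
    """Return (rows, columns) of a nested-list matrix."""
    return (len(mat), len(mat[0]))


in_features, out_features, batch = 256, 512, 32
weights = [[0.01] * in_features for _ in range(out_features)]  # [512 x 256]
inputs = [[1.0] * batch for _ in range(in_features)]           # [256 x 32]

z = matmul(weights, inputs)
print(shape(weights), shape(inputs), shape(z))  # (512, 256) (256, 32) (512, 32)
print(out_features * in_features)               # 131072
```

Every entry of the [512 x 32] result is a 256-term dot product, which is why the same weight rows and input columns are reused many times and why matrix hardware is organized around that reuse.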

Neural networks employ matrix operations across diverse architectural patterns beyond simple linear layers.

Types of Matrix Computations in Neural Networks

Matrix operations appear consistently across modern neural architectures, as illustrated in Listing 9. Convolution operations are transformed into matrix multiplications through the im2col technique¹⁸, enabling efficient execution on hardware optimized for matrix operations.

18 Im2col (Image-to-Column): A preprocessing technique that converts convolution operations into matrix multiplications by unfolding image patches into column vectors. A 3×3 convolution on a 224×224 image creates a matrix with ~50,000 columns, enabling efficient GEMM execution but increasing memory usage 9× due to overlapping patches. This transformation explains why convolutions are actually matrix operations in modern ML accelerators.

```python
# Linear Layers - Direct matrix multiply
hidden = matmul(weights, inputs)
# weights: [out_dim x in_dim], inputs: [in_dim x batch]
# Result combines all inputs for each output

# Attention Mechanisms - Multiple matrix operations
Q = matmul(Wq, inputs)
# Project inputs to query space [query_dim x batch]
K = matmul(Wk, inputs)
# Project inputs to key space [key_dim x batch]
attention = matmul(Q.T, K)
# Compare all queries with all keys [batch x batch]
# (columns index sequence positions; requires query_dim == key_dim)

# Convolutions - Matrix multiply after reshaping
patches = im2col(input)
# Convert [H x W x C] image to matrix of patches
output = matmul(kernel, patches)
# Apply kernels to all patches simultaneously
```

This pervasive pattern of matrix multiplication has direct implications for hardware design. The need for efficient matrix operations drives the development of specialized hardware architectures that can handle these computations at scale. The following sections explore how modern AI accelerators implement matrix operations, focusing on their architectural features and performance optimizations.
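The im2col reshaping can be illustrated with a small single-channel example. The sketch below assumes stride 1, no padding, and the cross-correlation convention used by ML frameworks; `im2col` and `conv2d_direct` are minimal illustrative helpers, not a library API.

```python
def im2col(image, k):
    """Unfold every k x k patch of a 2D image into one column."""
    out_h, out_w = len(image) - k + 1, len(image[0]) - k + 1
    patches = [[image[i + di][j + dj] for di in range(k) for dj in range(k)]
               for i in range(out_h) for j in range(out_w)]
    # Transpose so each patch becomes a column: [k*k x (out_h * out_w)]
    return [list(row) for row in zip(*patches)]


def conv2d_direct(image, kernel):
    """Reference sliding-window convolution (cross-correlation)."""
    k = len(kernel)
    out_h, out_w = len(image) - k + 1, len(image[0]) - k + 1
    return [[sum(image[i + di][j + dj] * kernel[di][dj]
                 for di in range(k) for dj in range(k))
             for j in range(out_w)] for i in range(out_h)]


image = [[float(r * 4 + c) for c in range(4)] for r in range(4)]  # 4 x 4 input
kernel = [[1.0, 0.0, -1.0] for _ in range(3)]                     # 3 x 3 filter

patches = im2col(image, 3)                        # [9 x 4] patch matrix
flat_kernel = [v for row in kernel for v in row]  # [1 x 9] kernel row
as_matmul = [sum(kv * pv for kv, pv in zip(flat_kernel, col))
             for col in zip(*patches)]            # [1 x 9] times [9 x 4]
direct = [v for row in conv2d_direct(image, kernel) for v in row]
print(as_matmul == direct, len(patches), len(patches[0]))  # True 9 4
```

The 16 input pixels expand into 36 patch entries because neighboring patches overlap. This is the memory cost that buys a single dense matrix multiplication.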

Matrix Operations Hardware Acceleration

The computational demands of matrix operations have driven specialized hardware optimizations. Modern processors implement dedicated matrix units that extend beyond vector processing capabilities. An example of such matrix acceleration is shown in Listing 10.

```asm
mload mr1, (weight_ptr)     # Load e.g., 16x16 block of weight matrix
mload mr2, (input_ptr)      # Load corresponding input block
matmul.mm mr3, mr1, mr2     # Multiply and accumulate entire blocks at once
mstore (output_ptr), mr3    # Store computed output block
```

This matrix processing unit can handle \(16\times16\) blocks of the linear layer computation described earlier, processing 256 multiply-accumulate operations simultaneously compared to the 8 operations possible with vector processing. These matrix operations complement vectorized computation by enabling structured many-to-many transformations. The interplay between matrix and vector operations shapes the efficiency of neural network execution.
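Block-wise execution can be sketched in software as a tiled matrix product, where each innermost block update stands in for one load-multiply-accumulate sequence of the kind shown in the listing. The function names, the 16-element tile, and the small test matrices are assumptions made for illustration; real matrix units differ in how blocks are scheduled and accumulated.

```python
def matmul_naive(a, b):
    """Reference product of a [m x k] and b [k x n], stored as nested lists."""
    m, k, n = len(a), len(b), len(b[0])
    return [[sum(a[i][p] * b[p][j] for p in range(k)) for j in range(n)]
            for i in range(m)]


def matmul_tiled(a, b, tile=16):
    """Blocked product; returns (result, number of tile-level block operations)."""
    m, k, n = len(a), len(b), len(b[0])
    assert m % tile == 0 and k % tile == 0 and n % tile == 0
    c = [[0.0] * n for _ in range(m)]
    block_ops = 0
    for i0 in range(0, m, tile):
        for j0 in range(0, n, tile):
            for k0 in range(0, k, tile):
                # Models one block step: load two tiles, multiply, accumulate
                for i in range(i0, i0 + tile):
                    for j in range(j0, j0 + tile):
                        c[i][j] += sum(a[i][p] * b[p][j]
                                       for p in range(k0, k0 + tile))
                block_ops += 1
    return c, block_ops


# Integer-valued floats so both summation orders give identical results
a = [[float((i + p) % 3) for p in range(48)] for i in range(32)]  # [32 x 48]
b = [[float((p * j) % 4) for j in range(16)] for p in range(48)]  # [48 x 16]

tiled, block_ops = matmul_tiled(a, b)
print(tiled == matmul_naive(a, b), block_ops)  # True 6

# Block steps for the full layer: [512 x 256] weights times [256 x 32] inputs
full_layer_blocks = (512 // 16) * (32 // 16) * (256 // 16)
print(full_layer_blocks)  # 1024
```

Under these assumptions the full linear layer needs 1,024 block steps, compared with over 4 million scalar multiply-accumulates.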

Matrix operations provide computational capabilities for neural networks through coordinated parallel processing across multiple dimensions (see Table 3). While they enable transformations such as attention mechanisms and convolutions, their performance depends on efficient data handling. Conversely, vector operations are optimized for one-to-one transformations like activation functions and layer normalization. The distinction between these operations highlights the importance of dataflow patterns in neural accelerator design, examined next (Hwu 2011).

| Operation Type | Best For | Examples | Key Characteristic |
|---|---|---|---|
| Matrix Operations | Many-to-many transforms | Attention mechanisms, convolutions | Each output depends on multiple inputs |
| Vector Operations | One-to-one transforms | Activation functions, layer normalization | Each output depends only on corresponding input |

Historical Foundations of Matrix Computation

Matrix operations have long served as a cornerstone of computational mathematics, with applications extending from numerical simulations to graphics processing (Golub and Loan 1996). The structured nature of matrix multiplications and transformations made them natural targets for acceleration in early computing architectures. In the 1980s and 1990s, specialized digital signal processors (DSPs) and graphics processing units (GPUs) optimized for matrix computations played a critical role in accelerating workloads such as image processing, scientific computing, and 3D rendering (Owens et al. 2008).

The widespread adoption of machine learning has reinforced the importance of efficient matrix computation. Neural networks, fundamentally built on matrix multiplications and tensor operations, have driven the development of dedicated hardware architectures that extend beyond traditional vector processing. Modern tensor processing units (TPUs) and AI accelerators implement matrix multiplication at scale, reflecting the same architectural principles that once underpinned early scientific computing and graphics workloads. The resurgence of matrix-centric architectures highlights the deep connection between classical numerical computing and contemporary AI acceleration.

While matrix operations provide the computational backbone for neural networks, they represent only part of the acceleration challenge. Neural networks also depend critically on non-linear transformations that cannot be efficiently expressed through linear algebra alone.

Special Function Units

While vector and matrix operations efficiently handle the linear transformations in neural networks, non-linear functions present unique computational challenges that require dedicated hardware solutions. Special Function Units (SFUs) provide hardware acceleration for these essential computations, completing the set of fundamental processing primitives needed for efficient neural network execution.

Non-Linear Functions

Non-linear functions play a fundamental role in machine learning by enabling neural networks to model complex relationships (Goodfellow, Courville, and Bengio 2013). Listing 11 illustrates a typical neural network layer sequence.

```python
layer = nn.Sequential(
    nn.Linear(256, 512), nn.ReLU(), nn.BatchNorm1d(512)
)
output = layer(input_tensor)
```

This sequence introduces multiple non-linear transformations that extend beyond simple matrix operations. Listing 12 demonstrates how the framework decomposes these operations into their mathematical components.

```python
Z = matmul(weights, input) + bias  # Linear transformation
H = max(0, Z)  # ReLU activation
mean = reduce_mean(H, axis=0)  # BatchNorm statistics
var = reduce_mean((H - mean) ** 2, axis=0)  # Variance computation
output = gamma * (H - mean) / sqrt(var + eps) + beta  # Normalization
```

Hardware Implementation of Non-Linear Functions

The computational complexity of these operations becomes apparent when examining their implementation on traditional processors. These seemingly simple mathematical operations translate into complex sequences of instructions. Consider the computation of batch normalization: calculating the square root requires multiple iterations of numerical approximation, while exponential functions in operations like softmax need series expansion or lookup tables (Ioffe and Szegedy 2015). Even a simple ReLU activation introduces branching logic that can disrupt instruction pipelining (see Listing 13 for an example).

```python
batch_size = 32

for batch in range(batch_size):
    for feature in range(512):
        # ReLU: Requires branch prediction and potential
        # pipeline stalls
        z = matmul_output[batch, feature]
        h = max(0.0, z)  # Conditional operation

        # BatchNorm: Multiple passes over data
        mean_sum[feature] += h  # Accumulate sum for mean
        var_sum[feature] += h * h  # Accumulate sum of squares for variance
        temp[batch, feature] = h  # Extra memory storage needed

# Normalization requires complex arithmetic
for feature in range(512):
    mean = mean_sum[feature] / batch_size
    var = (var_sum[feature] / batch_size) - mean * mean

    # Square root computation: Multiple iterations
    scale = gamma[feature] / sqrt(var + eps)  # Iterative approximation
    shift = beta[feature] - mean * scale

    # Additional pass over data for final computation
    for batch in range(batch_size):
        output[batch, feature] = temp[batch, feature] * scale + shift
```

These operations introduce several key inefficiencies:

- Multiple passes over data, increasing memory bandwidth requirements

- Complex arithmetic requiring many instruction cycles

- Conditional operations that can cause pipeline stalls

- Additional memory storage for intermediate results

- Poor utilization of vector processing units

More specifically, each operation introduces distinct challenges. Batch normalization requires multiple passes through data: one for mean computation, another for variance, and a final pass for output transformation. Each pass loads and stores data through the memory hierarchy. Operations that appear simple in mathematical notation often expand into many instructions. The square root computation typically requires 10-20 iterations of numerical methods like Newton-Raphson approximation for suitable precision (Goldberg 1991). Conditional operations like ReLU’s max function require branch instructions that can stall the processor’s pipeline. The implementation needs temporary storage for intermediate values, increasing memory usage and bandwidth consumption. While vector units excel at regular computations, functions like exponentials and square roots often require scalar operations that cannot fully utilize vector processing capabilities.

Hardware Acceleration

SFUs address these inefficiencies through dedicated hardware implementation. Modern ML accelerators include specialized circuits that transform these complex operations into single-cycle or fixed-latency computations. The accelerator can load a vector of values and apply non-linear functions directly, eliminating the need for multiple passes and complex instruction sequences as shown in Listing 14.

```asm
vld.v v1, (input_ptr)    # Load vector of values
vrelu.v v2, v1           # Single-cycle ReLU on entire vector
vsigm.v v3, v1           # Fixed-latency sigmoid computation
vtanh.v v4, v1           # Direct hardware tanh implementation
vrsqrt.v v5, v1          # Fast reciprocal square root
```

Each SFU implements a specific function through specialized circuitry. For instance, a ReLU unit performs the comparison and selection in dedicated logic, eliminating branching overhead. Square root operations use hardware implementations of algorithms like Newton-Raphson with fixed iteration counts, providing guaranteed latency. Exponential and logarithmic functions often combine small lookup tables with hardware interpolation circuits (Costa et al. 2019). Using these custom instructions, the SFU implementation eliminates multiple passes over data, removes complex arithmetic sequences, and maintains high computational efficiency. Table 4 shows the various hardware implementations and their typical latencies.
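Two of these strategies, fixed-iteration Newton-Raphson and table lookup with interpolation, can be modeled in software. The following is a minimal sketch and not a description of any specific accelerator: the seed derived from the floating-point exponent stands in for the small seed table hardware would typically use, and the iteration count, table range of [-4, 0], and 256 segments are arbitrary assumptions.

```python
import math


def rsqrt_newton(x: float, iterations: int = 6) -> float:
    """Reciprocal square root via a fixed number of Newton-Raphson steps."""
    if x <= 0.0:
        raise ValueError("x must be positive")
    _, exponent = math.frexp(x)            # x = m * 2**exponent, 0.5 <= m < 1
    y = math.ldexp(1.0, -(exponent // 2))  # crude seed from the exponent
    for _ in range(iterations):            # fixed count gives fixed latency
        y = y * (1.5 - 0.5 * x * y * y)
    return y


EXP_LO, EXP_HI, EXP_SEGMENTS = -4.0, 0.0, 256  # assumed table parameters
EXP_STEP = (EXP_HI - EXP_LO) / EXP_SEGMENTS
EXP_TABLE = [math.exp(EXP_LO + i * EXP_STEP) for i in range(EXP_SEGMENTS + 1)]


def exp_lut(x: float) -> float:
    """exp(x) on [EXP_LO, EXP_HI] via table lookup plus linear interpolation."""
    x = min(max(x, EXP_LO), EXP_HI)        # clamp to the table's range
    pos = (x - EXP_LO) / EXP_STEP
    idx = min(int(pos), EXP_SEGMENTS - 1)  # segment index
    frac = pos - idx                       # position inside the segment
    return EXP_TABLE[idx] + frac * (EXP_TABLE[idx + 1] - EXP_TABLE[idx])


for x in (0.25, 2.0, 9.0, 12345.678):
    print(x, rsqrt_newton(x), 1.0 / math.sqrt(x))
for x in (-3.3, -1.0, -0.01):
    print(x, exp_lut(x), math.exp(x))
```

In both functions the amount of work is independent of the input value. That property is what lets a hardware unit guarantee a fixed latency, with accuracy set by the iteration count or table size chosen at design time.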

| Function Unit | Operation | Implementation Strategy | Typical Latency |
|---|---|---|---|
| Activation Unit | ReLU, sigmoid, tanh | Piece-wise approximation circuits | 1-2 cycles |
| Statistics Unit | Mean, variance | Parallel reduction trees | log(N) cycles |
| Exponential Unit | exp, log | Table lookup + hardware interpolation | 2-4 cycles |
| Root/Power Unit | sqrt, rsqrt | Fixed-iteration Newton-Raphson | 4-8 cycles |
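The log(N) latency listed for the statistics unit comes from pairwise reduction: every level of the tree halves the number of partial sums, and all additions within a level are independent. A minimal software model, with `tree_reduce_sum` as an illustrative helper name, counts those levels:

```python
def tree_reduce_sum(values):
    """Pairwise (tree) reduction; returns (total, number_of_levels)."""
    level = list(values)
    if not level:
        raise ValueError("values must be non-empty")
    levels = 0
    while len(level) > 1:
        if len(level) % 2:       # pad odd-length levels with the identity
            level.append(0.0)
        # Additions within one level are independent of each other,
        # so a reduction tree can perform them in the same step.
        level = [level[i] + level[i + 1] for i in range(0, len(level), 2)]
        levels += 1
    return level[0], levels


activations = [float(i) for i in range(512)]
total, levels = tree_reduce_sum(activations)
mean = total / len(activations)
print(total, levels, mean)  # 130816.0 9 255.5
```

Summing 512 values takes 9 levels instead of 511 sequential additions. The sequential Python loop only counts the levels; it does not reproduce the parallel timing.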

SFUs History

The need for efficient non-linear function evaluation has shaped computer architecture for decades. Early processors incorporated hardware support for complex mathematical functions, such as logarithms and trigonometric operations, to accelerate workloads in scientific computing and signal processing (Smith 1997). In the 1970s and 1980s, floating-point co-processors were introduced to handle complex mathematical operations separately from the main CPU (Palmer 1980). In the late 1990s and 2000s, instruction set extensions such as Intel’s SSE and ARM’s NEON provided dedicated hardware for vectorized mathematical transformations, improving efficiency for multimedia and signal processing applications.

Machine learning workloads have reintroduced a strong demand for specialized functional units, as activation functions, normalization layers, and exponential transformations are fundamental to neural network computations. Rather than relying on iterative software approximations, modern AI accelerators implement fast, fixed-latency SFUs for these operations, mirroring historical trends in scientific computing. The reemergence of dedicated special function units underscores the ongoing cycle in hardware evolution, where domain-specific requirements drive the reinvention of classical architectural concepts in new computational paradigms.

The combination of vector, matrix, and special function units provides the computational foundation for modern AI accelerators. However, the effective utilization of these processing primitives depends critically on data movement and access patterns. This leads us to examine the architectures, hierarchies, and strategies that enable efficient data flow in neural network execution.

Compute Units and Execution Models

The vector operations, matrix operations, and special function units examined previously represent the fundamental computational primitives in AI accelerators. Modern AI processors package these primitives into distinct execution units, such as SIMD units, tensor cores, and processing elements, which define how computations are structured and exposed to users. Understanding this organization reveals both the theoretical capabilities and practical performance characteristics that developers can leverage in contemporary AI accelerators.

Mapping Primitives to Execution Units

The progression from computational primitives to execution units follows a structured hierarchy that reflects the increasing complexity and specialization of AI accelerators:

- Vector operations → SIMD/SIMT units that enable parallel processing of independent data elements

- Matrix operations → Tensor cores and systolic arrays that provide structured matrix multiplication

- Special functions → Dedicated hardware units integrated within processing elements

Each execution unit combines these computational primitives with specialized memory and control mechanisms, optimizing both performance and energy efficiency. This structured packaging allows hardware vendors to expose standardized programming interfaces while implementing diverse underlying architectures tailored to specific workload requirements. The choice of execution unit significantly influences overall system efficiency, affecting data locality, compute density, and workload adaptability. Subsequent sections examine how these execution units operate within AI accelerators to maximize performance across different machine learning tasks.

Evolution from SIMD to SIMT Architectures

Single Instruction Multiple Data (SIMD)19 execution applies identical operations to multiple data elements in parallel, minimizing instruction overhead while maximizing data throughput. This execution model is widely used to accelerate workloads with regular, independent data parallelism, such as neural network computations. The ARM Scalable Vector Extension (SVE) provides a representative example of how modern architectures implement SIMD operations efficiently, as illustrated in Listing 15.

19 SIMD Evolution: SIMD originated in Flynn’s 1966 taxonomy for scientific computing, but neural networks transformed it from a niche HPC concept to mainstream necessity. Modern CPUs have 512-bit SIMD units (AVX-512), but AI pushed development of SIMT (Single Instruction, Multiple Thread) where thousands of lightweight threads execute in parallel—GPU architectures now coordinate 65,536+ threads simultaneously, impossible with traditional SIMD.

ptrue p0.s # Create predicate for vector length
ld1w z0.s, p0/z, [x0] # Load vector of inputs
fmul z1.s, z0.s, z0.s # Multiply elements
fadd z2.s, z1.s, z0.s # Add elements
st1w z2.s, p0, [x1] # Store results

Processor architectures continue to expand SIMD capabilities to accommodate increasing computational demands. Intel’s Advanced Matrix Extensions (AMX) (Corporation 2021) and ARM’s SVE2 architecture (Stephens et al. 2017) provide flexible SIMD execution, enabling software to scale across different hardware implementations.
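The SVE listing handles a single vector of elements. To illustrate how predication lets the same code run on hardware with different vector lengths, the following Python sketch models a loop over an array of arbitrary size. It is an illustrative model only: the function name and the `lanes` parameter are hypothetical, and the per-iteration predicate is similar in spirit to what SVE's `whilelt` instruction produces.

```python
def sve_style_square_plus(x, lanes=4):
    """Compute x[i] * x[i] + x[i] in chunks of `lanes` elements.

    `lanes` stands in for the hardware vector length, which the
    code never needs to know in advance.
    """
    n = len(x)
    out = [0.0] * n
    for base in range(0, n, lanes):
        # Predicate: a lane is active only while base + lane < n.
        pred = [base + lane < n for lane in range(lanes)]
        # Predicated load with zeroing of inactive lanes (the p0/z form).
        z0 = [x[base + lane] if pred[lane] else 0.0 for lane in range(lanes)]
        z1 = [a * a for a in z0]               # fmul
        z2 = [a + b for a, b in zip(z1, z0)]   # fadd
        # Predicated store: inactive lanes write nothing.
        for lane in range(lanes):
            if pred[lane]:
                out[base + lane] = z2[lane]
    return out
```

Calling the function with `lanes=2`, `lanes=4`, or `lanes=8` yields the same result, which is the property that allows one binary to scale across implementations with different vector widths.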

20 Streaming Multiprocessor (SM): NVIDIA’s fundamental GPU compute unit containing multiple CUDA cores, tensor cores, shared memory, and schedulers. Each SM manages up to 2,048 resident threads organized into 64 warps (32 threads each), enabling massive parallelism. NVIDIA H100 contains 132 SMs with 128 streaming processors each, totaling 16,896 cores. SMs execute threads in SIMT fashion, with all threads in a warp sharing the same instruction but processing different data.

21 Warp: NVIDIA’s fundamental execution unit of 32 threads that execute the same instruction simultaneously in lock-step. All threads in a warp share instruction fetch and decode, maximizing instruction throughput. If threads diverge (different control flow), the warp becomes inefficient by serializing execution paths. Modern GPUs achieve best performance when threads in a warp access consecutive memory addresses, enabling memory coalescing.

Conventional SIMD, however, binds every lane to a single instruction stream and a fixed-width register, which makes data-dependent control flow awkward to express. To address these limitations, SIMT extends SIMD principles by enabling parallel execution across multiple independent threads, each maintaining its own program counter and architectural state (Lindholm et al. 2008). This model maps naturally to matrix computations, where each thread processes different portions of a workload while still benefiting from shared instruction execution. In NVIDIA’s GPU architectures, each Streaming Multiprocessor (SM)20 coordinates thousands of threads executing in parallel, allowing for efficient scaling of neural network computations, as demonstrated in Listing 16. Threads are organized into warps21, which are the fundamental execution units that enable SIMT efficiency.

__global__ void matrix_multiply(float* C, const float* A, const float* B, int N) {
    // Each thread processes one output element
    int row = blockIdx.y * blockDim.y + threadIdx.y;
    int col = blockIdx.x * blockDim.x + threadIdx.x;

    // Skip threads that fall outside the N x N output
    if (row >= N || col >= N) return;

    float sum = 0.0f;
    for (int k = 0; k < N; k++) {
        // Threads in a warp execute this step in lock-step on different data
        sum += A[row * N + k] * B[k * N + col];
    }
    C[row * N + col] = sum;
}

SIMT execution allows neural network computations to scale efficiently across thousands of threads while maintaining flexibility for divergent execution paths. Similar execution models appear in AMD’s RDNA and Intel’s Xe architectures, reinforcing SIMT as a fundamental mechanism for AI acceleration.
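The mapping from thread and block indices to output elements can be traced with a sequential Python emulation of a kernel launch. This is a sketch for building intuition, not a model of how a GPU schedules work: the function name and the `block_dim` parameter are hypothetical, and the nested loops run one "thread" at a time where hardware would run a warp in lock-step. The sketch includes a bounds guard so that `N` need not be a multiple of the block size.

```python
def launch_matrix_multiply(A, B, N, block_dim=(2, 2)):
    """Emulate a 2D grid of thread blocks computing C = A @ B.

    A and B are flat row-major lists of length N * N.
    """
    bx, by = block_dim
    # Round the grid up so every output element is covered.
    grid_x = (N + bx - 1) // bx
    grid_y = (N + by - 1) // by
    C = [0.0] * (N * N)
    for block_y in range(grid_y):
        for block_x in range(grid_x):
            for thread_y in range(by):
                for thread_x in range(bx):
                    # Same index arithmetic as the CUDA kernel.
                    row = block_y * by + thread_y
                    col = block_x * bx + thread_x
                    if row >= N or col >= N:
                        continue  # thread lies outside the matrix
                    acc = 0.0
                    for k in range(N):
                        acc += A[row * N + k] * B[k * N + col]
                    C[row * N + col] = acc
    return C
```

With `N = 3` and a 2 by 2 block, the grid is rounded up to 2 by 2 blocks (16 threads), of which only 9 perform work. The idle threads illustrate why block dimensions are usually chosen to divide the problem size evenly.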

Tensor Cores

While SIMD and SIMT units provide efficient execution of vector operations, neural networks rely heavily on matrix computations that require specialized execution units for structured multi-dimensional processing. The energy economics of matrix operations drive this specialization: traditional scalar processing requires multiple DRAM accesses per operation, consuming 640 pJ per fetch, while tensor cores amortize this energy cost across entire matrix blocks. Tensor processing units extend SIMD and SIMT principles by enabling efficient matrix operations through dedicated hardware blocks that execute matrix multiplications and accumulations on entire matrix blocks in a single operation. Because a 640 pJ DRAM fetch costs roughly 173× as much as the 3.7 pJ multiply-accumulate it feeds, scalar execution is dominated by data movement; tensor cores shift the balance toward compute by reusing each fetched operand across many multiply-accumulate operations within a block.
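The amortization argument can be checked with a few lines of arithmetic. The Python sketch below uses the 640 pJ and 3.7 pJ figures from the text and a deliberately simple cost model, which is an assumption of this example: every element of the two input tiles and the output tile crosses DRAM exactly once per block product, and on-chip storage is free. The function name is hypothetical.

```python
DRAM_PJ = 640.0  # energy per DRAM fetch, from the text
MAC_PJ = 3.7     # energy per multiply-accumulate, from the text

def energy_per_mac(tile):
    """Average energy (pJ) per MAC for a tile x tile x tile block product.

    Assumes 3 * tile**2 DRAM transfers (A tile, B tile, C tile)
    serve tile**3 multiply-accumulate operations.
    """
    macs = tile ** 3
    fetches = 3 * tile ** 2
    return MAC_PJ + DRAM_PJ * fetches / macs

ratio = DRAM_PJ / MAC_PJ            # about 173, the figure quoted in the text
scalar_cost = energy_per_mac(1)     # no reuse: every MAC pays three fetches
tile16_cost = energy_per_mac(16)    # each fetched operand reused 16 times
```

Under this model the DRAM share per operation falls in proportion to `1 / tile`, from about 1,920 pJ with no reuse to 120 pJ for a 16 by 16 block. Data movement is still larger than the arithmetic at that point, which suggests that reuse within one block is only part of the picture and that further reuse through registers and on-chip memory is needed before the multiply-accumulate cost dominates.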

Tensor cores22, implemented in architectures such as NVIDIA’s Ampere GPUs, provide an example of this approach. They expose matrix computation capabilities through specialized instructions, such as the tensor core operation shown in Listing 17 on the NVIDIA A100 GPU.

22 Tensor Core Breakthrough: NVIDIA introduced tensor cores in the V100 (2017) to accelerate the \(4\times 4\) matrix operations common in neural networks. The A100’s third-generation tensor cores achieve 312 TFLOPS for FP16 tensor operations, roughly 16\(\times\) the chip’s 19.5 TFLOPS FP32 rate on standard CUDA cores. This innovation made training models at the scale of GPT-3 practical, fundamentally changing the scale of AI research.

Tensor Core Operation (NVIDIA A100):

mma.sync.aligned.m16n16k16.f16.f16
    {d0,d1,d2,d3}, // Destination registers
    {a0,a1,a2,a3}, // Source matrix A
    {b0,b1,b2,b3}, // Source matrix B
    {c0,c1,c2,c3} // Accumulator

A single tensor core instruction processes an entire matrix block while maintaining intermediate results in local registers, significantly improving computational efficiency compared to implementations based on scalar or vector operations. This structured approach enables hardware to achieve high throughput while reducing the burden of explicit loop unrolling and data management at the software level.
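The semantics of a block-level multiply-accumulate, D = A × B + C on a fixed-size tile, can be emulated in plain Python to show how a larger matrix product decomposes into such operations. This is a functional sketch only: `mma_tile`, `get_tile`, and `tiled_matmul` are hypothetical names, the tile edge of 4 is chosen for readability rather than to match any hardware shape, and the matrices are assumed square with a size that is a multiple of the tile edge.

```python
TILE = 4  # assumed tile edge; hardware tiles are typically larger

def mma_tile(a, b, c):
    """Return a @ b + c for TILE x TILE nested lists (one block operation)."""
    return [[c[i][j] + sum(a[i][k] * b[k][j] for k in range(TILE))
             for j in range(TILE)]
            for i in range(TILE)]

def get_tile(M, r, c):
    """Extract the TILE x TILE sub-block of M whose top-left corner is (r, c)."""
    return [row[c:c + TILE] for row in M[r:r + TILE]]

def tiled_matmul(A, B):
    """Multiply square matrices by issuing one mma_tile call per block step."""
    n = len(A)  # assumes n is a multiple of TILE
    C = [[0.0] * n for _ in range(n)]
    for i in range(0, n, TILE):
        for j in range(0, n, TILE):
            # The accumulator stays "in registers" across the k loop.
            acc = [[0.0] * TILE for _ in range(TILE)]
            for k in range(0, n, TILE):
                acc = mma_tile(get_tile(A, i, k), get_tile(B, k, j), acc)
            for di in range(TILE):
                C[i + di][j:j + TILE] = acc[di]
    return C
```

For an 8 by 8 product this issues 8 block operations instead of 512 scalar multiply-accumulates, and the accumulator tile is written back only once per output block.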

Tensor processing unit architectures differ based on design priorities. NVIDIA’s Ampere architecture incorporates tensor cores optimized for general-purpose deep learning acceleration. Google’s TPUv4 utilizes large-scale matrix units arranged in systolic arrays to maximize sustained training throughput. Apple’s M1 neural engine23 integrates smaller matrix processors optimized for mobile inference workloads, while Intel’s Sapphire Rapids architecture introduces AMX tiles designed for high-performance datacenter applications.

23 Apple’s Neural Engine Strategy: Apple introduced the Neural Engine in September 2017’s A11 chip to enable on-device ML without draining battery life. The M1’s 16-core Neural Engine delivers 11 TOPS while the entire M1 chip has a 20-watt system TDP—enabling real-time features like live text recognition and voice processing without cloud connectivity. This “privacy through hardware” approach influenced the entire industry to prioritize edge AI capabilities.

The increasing specialization of AI hardware has driven significant performance improvements in deep learning workloads. Figure 3 illustrates the trajectory of AI accelerator performance in NVIDIA GPUs, highlighting the transition from general-purpose floating-point execution units to highly optimized tensor processing cores.

Processing Elements

The highest level of execution unit organization integrates multiple tensor cores with local memory into processing elements (PEs). A processing element serves as a fundamental building block in many AI accelerators, combining different computational units to efficiently execute neural network operations. Each PE typically includes vector units for element-wise operations, tensor cores for matrix computation, special function units for non-linear transformations, and dedicated memory resources to optimize data locality and minimize data movement overhead.

Processing elements play an essential role in AI hardware by balancing computational density with memory access efficiency. Their design varies across different architectures to support diverse workloads and scalability requirements. Graphcore’s Intelligence Processing Unit (IPU) distributes computation across 1,472 tiles, each containing independent processing elements optimized for fine-grained parallelism (Graphcore 2020). Cerebras extends this approach in the CS-2 system, integrating 850,000 processing elements across a wafer-scale device to accelerate sparse computations. Tesla’s D1 processor arranges processing elements with substantial local memory, optimizing throughput and latency for real-time autonomous vehicle workloads (Quinnell 2024).

Processing elements provide the structural foundation for large-scale AI acceleration. Their efficiency depends not only on computational capability but also on interconnect strategies and memory hierarchy design. The next sections explore how these architectural choices impact performance across different AI workloads.

Tensor processing units have enabled substantial efficiency gains in AI workloads by using hardware-accelerated matrix computation. Their role continues to evolve as architectures incorporate support for advanced execution techniques, including structured sparsity and workload-specific optimizations. The effectiveness of these units, however, depends not only on their computational capabilities but also on how they interact with memory hierarchies and data movement mechanisms, which are examined in subsequent sections.

Systolic Arrays

While tensor cores package matrix operations into structured computational units, systolic arrays provide an alternative approach optimized for continuous data flow and operand reuse. The fundamental motivation for systolic architectures stems from the energy efficiency constraints discussed earlier—minimizing the impact of memory access penalties through architectural design. A systolic array arranges processing elements in a grid pattern, where data flows rhythmically between neighboring units in a synchronized manner, enabling each operand to participate in multiple computations as it propagates through the array. This structured movement minimizes external memory accesses by maximizing local data reuse—a single weight value can contribute to dozens of operations as it moves through the processing elements, fundamentally transforming the energy profile from memory-bound to compute-efficient execution.

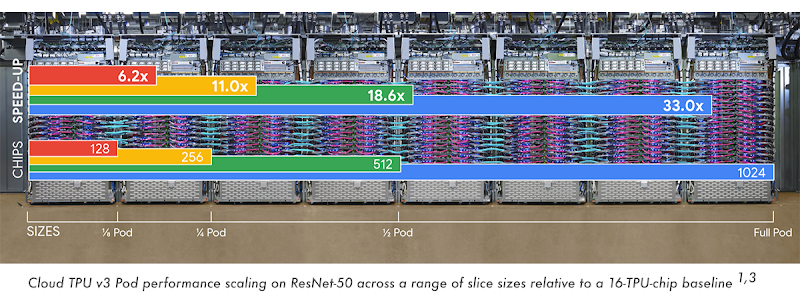

The concept of systolic arrays was first introduced by Kung and Leiserson24, who formalized their use in parallel computing architectures for efficient matrix operations (Kung 1982). Unlike general-purpose execution units, systolic arrays exploit spatial and temporal locality by reusing operands as they propagate through the grid. Google’s TPU exemplifies this architectural approach. In the TPUv4, a \(128\times128\) systolic array of multiply-accumulate units processes matrix operations by streaming data through the array in a pipelined manner, as shown in Figure 4.
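The rhythmic data flow described above can be made concrete with a small cycle-by-cycle simulation. The Python sketch below models an output-stationary array, in which each processing element keeps its own accumulator while operands from A move right and operands from B move down. This variant is chosen here for simplicity and is an assumption of the example; it is not claimed to be the dataflow used in the TPU. The function name is hypothetical.

```python
def systolic_matmul(A, B):
    """Simulate an n x n output-stationary systolic array computing A @ B."""
    n = len(A)
    acc = [[0.0] * n for _ in range(n)]    # one accumulator per PE
    a_reg = [[0.0] * n for _ in range(n)]  # A operands, moving left to right
    b_reg = [[0.0] * n for _ in range(n)]  # B operands, moving top to bottom
    for cycle in range(3 * n - 2):
        # Shift operands one PE onward, starting from the far edge.
        for i in range(n):
            for j in range(n - 1, 0, -1):
                a_reg[i][j] = a_reg[i][j - 1]
        for j in range(n):
            for i in range(n - 1, 0, -1):
                b_reg[i][j] = b_reg[i - 1][j]
        # Inject skewed inputs: row i and column j are delayed by i and j cycles.
        for i in range(n):
            k = cycle - i
            a_reg[i][0] = A[i][k] if 0 <= k < n else 0.0
        for j in range(n):
            k = cycle - j
            b_reg[0][j] = B[k][j] if 0 <= k < n else 0.0
        # Every PE performs one multiply-accumulate per cycle.
        for i in range(n):
            for j in range(n):
                acc[i][j] += a_reg[i][j] * b_reg[i][j]
    return acc
```

Each element of A enters the array once and is used by all `n` processing elements in its row as it propagates, and likewise for each element of B down its column. The full product completes in `3n - 2` cycles, with the skewed injection ensuring that matching operands meet at the right processing element.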

24 Systolic Array Renaissance: H.T. Kung and Charles Leiserson introduced systolic arrays at CMU in 1979 for VLSI signal processing, but the concept languished for decades due to programming complexity. Google’s 2016 TPU revival showed that these “heartbeat” architectures could deliver large efficiency gains for neural networks: the TPUv1’s \(256\times 256\) systolic array achieved 92 TOPS for 8-bit integer operations while consuming just 40 watts, establishing systolic arrays as a leading design choice for AI accelerators.