1 Introduction

1.1 Overview

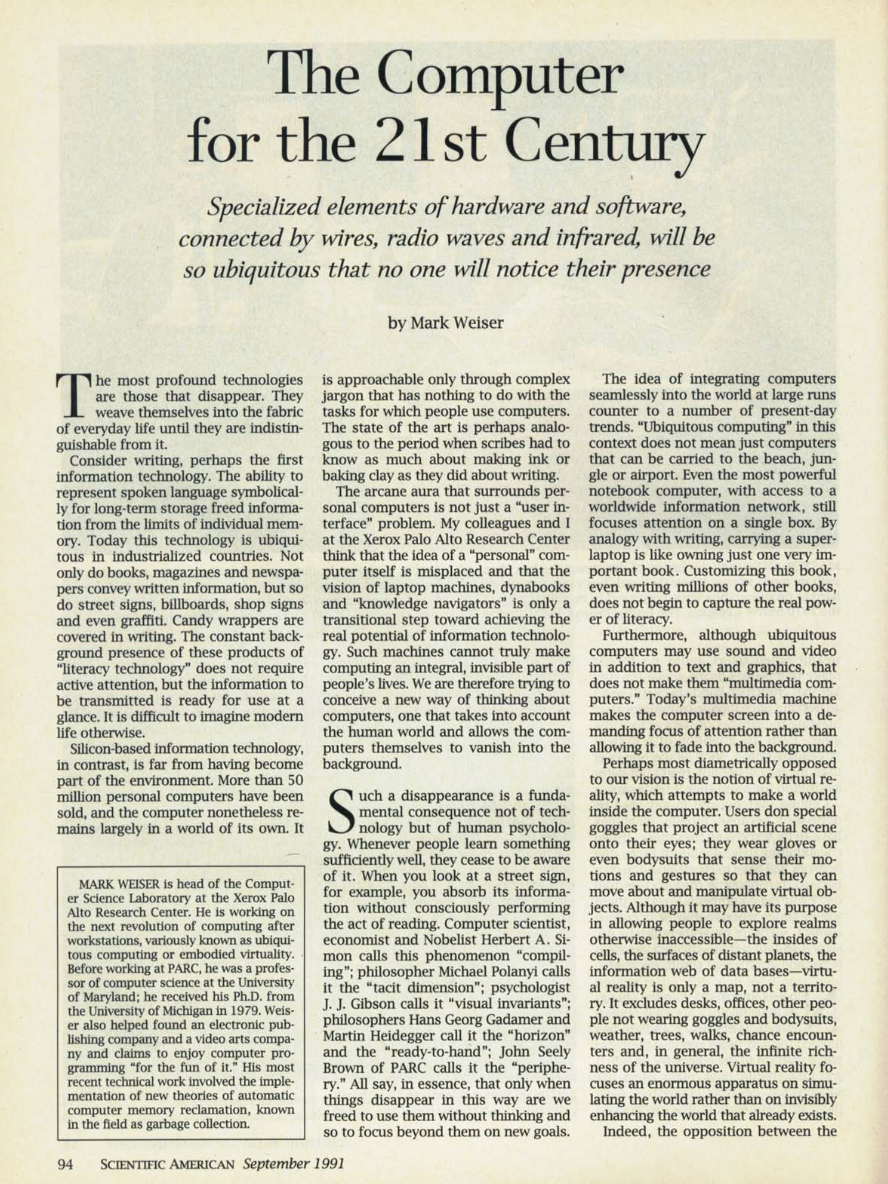

In the early 1990s, Mark Weiser, a pioneering computer scientist, introduced a concept that would change how we interact with technology. He captured it succinctly in his paper “The Computer for the 21st Century” (Figure 1.1). He envisioned a future where computing would be seamlessly integrated into our environments, becoming an invisible, integral part of daily life. This vision, which he termed “ubiquitous computing,” promised a world where technology would serve us without demanding our constant attention or interaction. Fast forward to today, and we find ourselves on the cusp of realizing Weiser’s vision, thanks to the advent and proliferation of machine learning systems.

In the vision of ubiquitous computing (Weiser 1991), the integration of processors into everyday objects is just one aspect of a larger paradigm shift. The true essence of this vision lies in creating an intelligent environment that can anticipate our needs and act on our behalf, enhancing our experiences without requiring explicit commands. To achieve this level of pervasive intelligence, it is crucial to develop and deploy machine learning systems that span the entire ecosystem, from the cloud to the edge and even to the tiniest IoT devices.

By distributing machine learning capabilities across the computing continuum, we can harness the strengths of each layer while mitigating their limitations. The cloud, with its vast computational resources and storage capacity, is ideal for training complex models on large datasets and performing resource-intensive tasks. Edge devices, such as gateways and smartphones, can process data locally, enabling faster response times, improved privacy, and reduced bandwidth requirements. Finally, the tiniest IoT devices, equipped with machine learning capabilities, can make quick decisions based on sensor data, enabling highly responsive and efficient systems.

This distributed intelligence is particularly crucial for applications that require real-time processing, such as autonomous vehicles, industrial automation, and smart healthcare. By processing data at the most appropriate layer of the computing continuum, we can ensure that decisions are made quickly and accurately, without relying on constant communication with a central server.

The migration of machine learning intelligence across the ecosystem also enables more personalized and context-aware experiences. By learning from user behavior and preferences at the edge, devices can adapt to individual needs without compromising privacy. This localized intelligence can then be aggregated and refined in the cloud, creating a feedback loop that continuously improves the overall system.
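The aggregation step in this feedback loop can be illustrated with a small sketch. The text above does not name a specific algorithm, so the example assumes one common choice, federated averaging, in which each device reports its locally trained weights and the cloud combines them in proportion to how much data each device saw. The function name `federated_average` and the toy numbers are hypothetical and chosen only for illustration.

```python
def federated_average(client_updates):
    """Combine locally trained weights into one global weight vector.

    client_updates is a list of (weights, num_samples) pairs, where
    weights is a list of floats with the same length for every client.
    This is an illustrative assumption, not the book's prescribed method.
    """
    total_samples = sum(n for _, n in client_updates)
    if total_samples == 0:
        raise ValueError("no training samples reported")

    dim = len(client_updates[0][0])
    averaged = [0.0] * dim
    for weights, n in client_updates:
        # Clients with more local data contribute proportionally more.
        for i, w in enumerate(weights):
            averaged[i] += w * n / total_samples
    return averaged


# Two hypothetical edge devices: one trained on 10 samples, one on 30.
updates = [([1.0, 2.0], 10), ([3.0, 4.0], 30)]
global_weights = federated_average(updates)
```

Only the weights and sample counts leave each device in this sketch; the raw user data stays local, which is the privacy property the paragraph describes.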

However, deploying machine learning systems across the computing continuum presents several challenges. Ensuring the interoperability and seamless integration of these systems requires standardized protocols and interfaces. Security and privacy concerns must also be addressed, as the distribution of intelligence across multiple layers increases the attack surface and the potential for data breaches.

Furthermore, the varying computational capabilities and energy constraints of devices at different layers of the computing continuum necessitate the development of efficient and adaptable machine learning models. Techniques such as model compression, federated learning, and transfer learning can help address these challenges, enabling the deployment of intelligence across a wide range of devices.
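To make model compression more concrete, the following is a minimal sketch of one such technique, symmetric 8-bit quantization, which stores each weight as a small integer plus a single shared scale factor. The helper names `quantize_int8` and `dequantize` and the sample weights are assumptions made for this illustration; production toolchains use more elaborate schemes.

```python
def quantize_int8(values):
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(v) for v in values)
    # Avoid division by zero when every value is 0.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the integer codes."""
    return [q * scale for q in quantized]


# Hypothetical weights from a small model layer.
weights = [0.5, -1.0, 0.25, 0.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# The rounding error per weight is bounded by roughly half the scale.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

Each weight now fits in one byte instead of four (for 32-bit floats), at the cost of a small, bounded rounding error. This is the kind of trade-off that lets a model fit on a memory-constrained device.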

As we move towards the realization of Weiser’s vision of ubiquitous computing, the development and deployment of machine learning systems across the entire ecosystem will be critical. By leveraging the strengths of each layer of the computing continuum, we can create an intelligent environment that seamlessly integrates with our daily lives, anticipating our needs and enhancing our experiences in ways that were once unimaginable. As we continue to push the boundaries of what’s possible with distributed machine learning, we inch closer to a future where technology becomes an invisible but integral part of our world.

1.2 What’s Inside the Book

In this book, we will explore the technical foundations of ubiquitous machine learning systems, the challenges of building and deploying these systems across the computing continuum, and the vast array of applications they enable. A unique aspect of this book is its function as a conduit to seminal scholarly works and academic research papers, aimed at enriching the reader’s understanding and encouraging deeper exploration of the subject. This approach seeks to bridge the gap between pedagogical materials and cutting-edge research trends, offering a comprehensive guide that is in step with the evolving field of applied machine learning.

To improve the learning experience, we have included a variety of supplementary materials. Throughout the book, you will find slides that summarize key concepts, videos that provide in-depth explanations and demonstrations, exercises that reinforce your understanding, and labs that offer hands-on experience with the tools and techniques discussed. These additional resources are designed to cater to different learning styles and help you gain a deeper, more practical understanding of the subject matter.

We begin with the fundamentals, introducing key concepts in systems and machine learning, and providing a deep learning primer. We then guide you through the AI workflow, from data engineering to selecting the right AI frameworks. The training section covers efficient AI training techniques, model optimizations, and AI acceleration using specialized hardware. Deployment is addressed next, with chapters on benchmarking AI, on-device learning, and ML operations. Advanced topics like security, privacy, responsible AI, sustainable AI, robust AI, and generative AI are then explored in depth. The book concludes by highlighting the positive impact of AI and its potential for good.

1.4 Chapter Breakdown

Here’s a closer look at what each chapter covers. We have structured the book into six main sections: Fundamentals, Workflow, Training, Deployment, Advanced Topics, and Impact. These sections closely reflect the major components of a typical machine learning pipeline, from understanding the basic concepts to deploying and maintaining AI systems in real-world applications. By organizing the content in this manner, we aim to provide a logical progression that mirrors the actual process of developing and implementing embedded AI solutions.

1.4.1 Fundamentals

In the Fundamentals section, we lay the groundwork for understanding embedded AI. We introduce key concepts, provide an overview of machine learning systems, and dive into the principles and algorithms of deep learning that power AI applications in embedded systems. This section equips you with the essential knowledge needed to grasp the subsequent chapters.

- Introduction: This chapter sets the stage, providing an overview of embedded AI and laying the groundwork for the chapters that follow.

- ML Systems: We introduce the basics of machine learning systems, the platforms where AI algorithms are widely applied.

- Deep Learning Primer: This chapter offers a comprehensive introduction to the algorithms and principles that underpin AI applications in embedded systems.

1.4.2 Workflow

The Workflow section guides you through the practical aspects of building AI models. We break down the AI workflow, discuss data engineering best practices, and review popular AI frameworks. By the end of this section, you’ll have a clear understanding of the steps involved in developing proficient AI applications and the tools available to streamline the process.

- AI Workflow: This chapter breaks down the machine learning workflow, offering insights into the steps leading to proficient AI applications.

- Data Engineering: We focus on the importance of data in AI systems, discussing how to effectively manage and organize data.

- AI Frameworks: This chapter reviews different frameworks for developing machine learning models, guiding you in choosing the most suitable one for your projects.

1.4.3 Training

In the Training section, we explore techniques for training efficient and reliable AI models. We cover strategies for achieving efficiency, model optimizations, and the role of specialized hardware in AI acceleration. This section empowers you with the knowledge to develop high-performing models that can be seamlessly integrated into embedded systems.

- AI Training: This chapter covers model training, exploring techniques for developing efficient and reliable models.

- Efficient AI: Here, we discuss strategies for achieving efficiency in AI applications, from computational resource optimization to performance enhancement.

- Model Optimizations: We explore various avenues for optimizing AI models for seamless integration into embedded systems.

- AI Acceleration: We discuss the role of specialized hardware in enhancing the performance of embedded AI systems.

1.4.4 Deployment

The Deployment section focuses on the challenges and solutions for deploying AI models on embedded devices. We discuss benchmarking methods to evaluate AI system performance, techniques for on-device learning to improve efficiency and privacy, and the processes involved in ML operations. This section equips you with the skills to effectively deploy and maintain AI functionalities in embedded systems.

- Benchmarking AI: This chapter focuses on how to evaluate AI systems through systematic benchmarking methods.

- On-Device Learning: We explore techniques for localized learning, which enhances both efficiency and privacy.

- ML Operations: This chapter looks at the processes involved in the seamless integration, monitoring, and maintenance of AI functionalities in embedded systems.

1.4.5 Advanced Topics

In the Advanced Topics section, we study the critical issues surrounding embedded AI. We address privacy and security concerns, explore the ethical principles of responsible AI, discuss strategies for sustainable AI development, examine techniques for building robust AI models, and introduce the exciting field of generative AI. This section broadens your understanding of the complex landscape of embedded AI and prepares you to navigate its challenges.

- Security & Privacy: As AI becomes more ubiquitous, this chapter addresses the crucial aspects of privacy and security in embedded AI systems.

- Responsible AI: We discuss the ethical principles guiding the responsible use of AI, focusing on fairness, accountability, and transparency.

- Sustainable AI: This chapter explores practices and strategies for sustainable AI, ensuring long-term viability and reduced environmental impact.

- Robust AI: We discuss techniques for developing reliable and robust AI models that can perform consistently across various conditions.

- Generative AI: This chapter explores the algorithms and techniques behind generative AI, opening avenues for innovation and creativity.

1.4.6 Social Impact

The Impact section highlights the transformative potential of embedded AI in various domains. We showcase real-world applications of TinyML in healthcare, agriculture, conservation, and other areas where AI is making a positive difference. This section inspires you to leverage the power of embedded AI for societal good and to contribute to the development of impactful solutions.

1.4.7 Closing

In the Closing section, we reflect on the key learnings from the book and look ahead to the future of embedded AI. We synthesize the concepts covered, discuss emerging trends, and provide guidance on continuing your learning journey in this rapidly evolving field. This section leaves you with a comprehensive understanding of embedded AI and the excitement to apply your knowledge in innovative ways.

- Conclusion: The book concludes with a reflection on the key learnings and future directions in the field of embedded AI.

1.5 Contribute Back

Learning in the fast-paced world of AI is a collaborative journey. We set out to nurture a vibrant community of learners, innovators, and contributors. As you explore the concepts and engage with the exercises, we encourage you to share your insights and experiences. Whether it’s a novel approach, an interesting application, or a thought-provoking question, your contributions can enrich the learning ecosystem. Engage in discussions, offer and seek guidance, and collaborate on projects to foster a culture of mutual growth and learning. By sharing knowledge, you play an important role in fostering a globally connected, informed, and empowered community.