16 Sustainable AI


- Understand AI’s environmental impact, including energy consumption, carbon emissions, electronic waste, and biodiversity effects.

- Learn about methods and best practices for developing sustainable AI systems.

- Appreciate the importance of taking a lifecycle perspective when evaluating and addressing the sustainability of AI systems.

- Recognize the roles various stakeholders, such as researchers, corporations, policymakers, and end users, play in furthering responsible and sustainable AI progress.

- Learn about specific frameworks, metrics, and tools to enable greener AI development.

- Appreciate real-world case studies like Google’s 4M efficiency practices that showcase how organizations are taking tangible steps to improve AI’s environmental record.

16.1 Introduction

The rapid advancements in artificial intelligence (AI) and machine learning (ML) have led to many beneficial applications and optimizations for performance efficiency. However, the remarkable growth of AI comes with a significant yet often overlooked cost: its environmental impact. The most recent report released by the IPCC, the international body leading scientific assessments of climate change and its impacts, emphasized the pressing importance of tackling climate change. Without immediate efforts to decrease global \(\textrm{CO}_2\) emissions by at least 43 percent before 2030, we will exceed global warming of 1.5 degrees Celsius (Winkler et al. 2022). This could initiate positive feedback loops, pushing temperatures even higher. Beyond environmental issues, the United Nations has recognized 17 Sustainable Development Goals (SDGs). AI can play an important role in achieving these goals, and the goals, in turn, can play an important role in shaping the development of AI systems. As the field continues expanding, considering sustainability is crucial.

AI systems, particularly large language models like GPT-3 and image generation models like DALL-E 2, require massive amounts of computational resources for training. For example, training GPT-3 was estimated to consume 1,300 megawatt-hours of electricity, which equals the monthly electricity consumption of 1,450 average US households (Maslej et al. 2023). Put another way, it consumed enough energy to supply an average US household for 120 years! This immense energy demand stems primarily from power-hungry data centers with servers running intense computations to train these complex neural networks for days or weeks.
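The two household comparisons above are the same quantity expressed in different units. A minimal sketch of the arithmetic, using only the figures quoted in the text (the variable names are illustrative):

```python
# Figures quoted in the text (Maslej et al. 2023).
GPT3_TRAINING_MWH = 1_300          # estimated training energy
HOUSEHOLDS_FOR_ONE_MONTH = 1_450   # household-months of equivalent use

# Monthly use per household implied by the two figures above.
implied_kwh_per_household_month = (
    GPT3_TRAINING_MWH * 1_000 / HOUSEHOLDS_FOR_ONE_MONTH
)

# 1,450 household-months expressed as years for a single household.
single_household_years = HOUSEHOLDS_FOR_ONE_MONTH / 12

print(f"Implied household use: {implied_kwh_per_household_month:.0f} kWh/month")
print(f"Single-household equivalent: {single_household_years:.1f} years")
```

The implied figure of roughly 900 kWh per household per month is what ties the "1,450 households for a month" and "one household for about 120 years" statements together.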

Current estimates indicate that the carbon emissions produced from developing a single, sophisticated AI model can equal the emissions over the lifetime of five standard gasoline-powered vehicles (Strubell, Ganesh, and McCallum 2019). A significant portion of the electricity presently consumed by data centers is generated from nonrenewable sources such as coal and natural gas, resulting in data centers contributing around 1% of total worldwide carbon emissions. This is comparable to the emissions from the entire airline sector. This immense carbon footprint demonstrates the pressing need to transition to renewable power sources such as solar and wind to power AI development.

Additionally, even small-scale AI systems deployed to edge devices as part of TinyML have environmental impacts that should not be ignored (Prakash, Stewart, et al. 2023). The specialized hardware required for AI has an environmental toll from natural resource extraction and manufacturing. GPUs, CPUs, and chips like TPUs depend on rare earth metals whose mining and processing generate substantial pollution. The production of these components also has its own energy demands. Furthermore, collecting, storing, and preprocessing the data used to train both small- and large-scale models comes with environmental costs, further exacerbating the sustainability implications of ML systems.

Thus, while AI promises innovative breakthroughs in many fields, sustaining progress requires addressing sustainability challenges. AI can continue advancing responsibly by optimizing models’ efficiency, exploring alternative specialized hardware and renewable energy sources for data centers, and tracking its overall environmental impact.

16.3 Energy Consumption

16.3.1 Understanding Energy Needs

Understanding the energy needs for training and operating AI models is crucial in the rapidly evolving field of AI. With AI entering widespread use in many new fields (Bohr and Memarzadeh 2020; Sudhakar, Sze, and Karaman 2023), the demand for AI-enabled devices and data centers is expected to explode. Examining these needs helps explain why AI, particularly deep learning, is often labeled energy-intensive.

Energy Requirements for AI Training

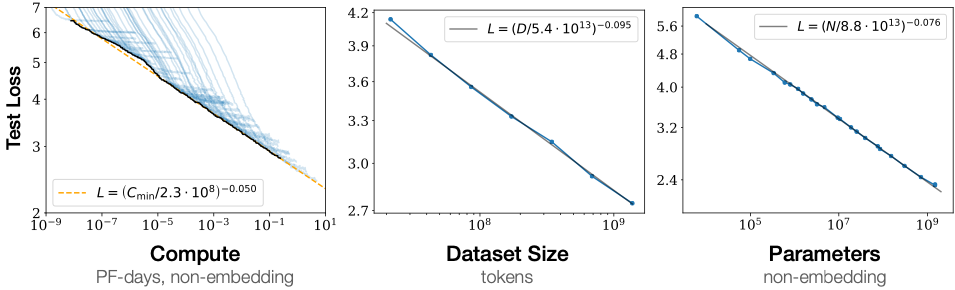

The training of complex AI systems like large deep learning models can demand startlingly high levels of computing power, with profound energy implications. Consider OpenAI’s state-of-the-art language model GPT-3 as a prime example. This system pushes the frontiers of text generation through algorithms trained on massive datasets. Yet, the energy GPT-3 consumed for a single training cycle could rival an entire small town’s monthly usage. In recent years, these generative AI models have gained increasing popularity, leading to more models being trained. Beyond the growing number of models, the number of parameters in these models is also expected to increase. Research shows that increasing the model size (number of parameters), dataset size, and compute used for training improves performance smoothly with no signs of saturation (Kaplan et al. 2020). Figure 16.1 shows how the test loss decreases as each of these three factors increases.
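The smooth improvement described by Kaplan et al. (2020) takes the form of a power law. The sketch below evaluates the parameter-count version, \(L(N) = (N_c / N)^{\alpha_N}\). The constants are approximate values of the fit reported in that paper for non-embedding parameters; treat them as illustrative assumptions rather than exact figures.

```python
# Approximate constants for the parameter-count power law in
# Kaplan et al. (2020); illustrative assumptions, not exact values.
ALPHA_N = 0.076
N_C = 8.8e13


def loss_from_params(n_params: float) -> float:
    """Power-law test loss L(N) = (N_c / N) ** alpha_N."""
    return (N_C / n_params) ** ALPHA_N


for label, n in [("1.5B parameters", 1.5e9), ("175B parameters", 175e9)]:
    print(f"{label}: predicted loss {loss_from_params(n):.2f}")

# Ratio of predicted loss after a 10x increase in parameter count.
ratio_10x = loss_from_params(10e9) / loss_from_params(1e9)
print(f"Loss ratio after 10x more parameters: {ratio_10x:.3f}")
```

Under these assumed constants, a tenfold increase in parameters lowers the predicted loss by only about 16 percent. This is one way to see why each performance gain tends to require disproportionately more compute and energy.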

What drives such immense requirements? During training, models like GPT-3 learn their capabilities by continuously processing huge volumes of data to adjust internal parameters. The processing capacity enabling AI’s rapid advances also contributes to surging energy usage, especially as datasets and models balloon. GPT-3 highlights a steady trajectory in the field where each leap in AI’s sophistication traces back to ever more substantial computational power and resources. Its predecessor, GPT-2, had only 1.5 billion parameters and required roughly 10x less training compute, whereas GPT-3 comprises 175 billion parameters, more than a hundredfold increase in model size. Sustaining this trajectory toward increasingly capable AI raises energy and infrastructure provision challenges ahead.

Operational Energy Use

Developing and training AI models requires immense data, computing power, and energy. However, the deployment and operation of those models also incur significant recurrent resource costs over time. AI systems are now integrated across various industries and applications and are entering the daily lives of a growing share of the population. Their cumulative operational energy and infrastructure impacts could eclipse those of the upfront model training.

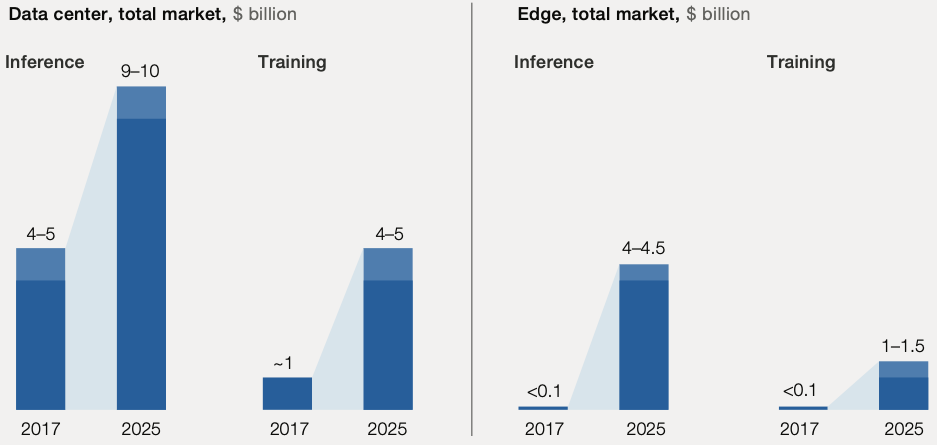

This concept is reflected in the demand for training and inference hardware in data centers and on the edge. Inference refers to using a trained model to make predictions or decisions on real-world data. According to a recent McKinsey analysis, the need for advanced systems to train ever-larger models is rapidly growing. However, inference computations already make up a dominant and increasing portion of total AI workloads, as shown in Figure 16.2. Running real-time inference with trained models, whether for image classification, speech recognition, or predictive analytics, invariably demands computing hardware like servers and chips. However, even a model handling thousands of facial recognition requests or natural language queries daily is dwarfed by massive platforms like Meta. With inference running on millions of photos and videos shared on social media, the infrastructure energy requirements continue to scale!

Algorithms powering AI-enabled smart assistants, automated warehouses, self-driving vehicles, tailored healthcare, and more have marginal individual energy footprints. However, the projected proliferation of these technologies could add hundreds of millions of endpoints running AI algorithms continually, causing the scale of their collective energy requirements to surge. Current efficiency gains struggle to counterbalance this sheer growth.

AI is expected to see an annual growth rate of 37.3% between 2023 and 2030. Yet, applying the same growth rate to operational computing could multiply annual AI energy needs up to 1,000 times by 2030. So, while model optimization tackles one facet, responsible innovation must also consider total lifecycle costs at global deployment scales that were unfathomable just years ago but now pose infrastructure and sustainability challenges ahead.
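To make the compounding concrete, the sketch below applies a fixed annual growth rate over a number of years. It assumes the 37.3% rate holds constant from 2023 to 2030, which gives seven compounding periods; the function names are illustrative.

```python
import math


def growth_multiple(annual_rate: float, years: int) -> float:
    """Total growth factor after compounding annual_rate for `years` years."""
    return (1 + annual_rate) ** years


def years_to_reach(multiple: float, annual_rate: float) -> float:
    """Years of compounding at annual_rate needed to reach `multiple`."""
    return math.log(multiple) / math.log(1 + annual_rate)


RATE = 0.373  # annual growth rate quoted in the text
multiple_2030 = growth_multiple(RATE, 2030 - 2023)
years_for_1000x = years_to_reach(1_000, RATE)

print(f"Growth multiple by 2030: {multiple_2030:.1f}x")
print(f"Years needed to reach 1,000x: {years_for_1000x:.1f}")
```

Under these assumptions alone, compounding yields roughly a ninefold increase by 2030. Estimates as large as 1,000 times would therefore have to rest on additional assumptions beyond this growth rate, which the text does not spell out.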

16.3.2 Data Centers and Their Impact

As the demand for AI services grows, the impact of data centers on the energy consumption of AI systems is becoming increasingly important. While these facilities are crucial for the advancement and deployment of AI, they contribute significantly to its energy footprint.

Scale

Data centers are the essential workhorses enabling the recent computational demands of advanced AI systems. For example, leading providers like Meta operate massive data centers spanning up to the size of multiple football fields, housing hundreds of thousands of high-capacity servers optimized for parallel processing and data throughput.

These massive facilities provide the infrastructure for training complex neural networks on vast datasets. For instance, based on leaked information, OpenAI’s language model GPT-4 was trained on Azure data centers packing over 25,000 Nvidia A100 GPUs, used continuously for 90 to 100 days.

Additionally, real-time inference for consumer AI applications at scale is only made possible by leveraging the server farms inside data centers. Services like Alexa, Siri, and Google Assistant process billions of voice requests per month from users globally by relying on data center computing for low-latency response. In the future, expanding cutting-edge use cases like self-driving vehicles, precision medicine diagnostics, and accurate climate forecasting models will require significant computational resources, obtained by tapping into vast on-demand cloud computing capacity from data centers. Some emerging applications, like autonomous cars, have strict latency and bandwidth constraints. For these, locating data center-level computing power on the edge rather than in the cloud will be necessary.

MIT research prototypes have shown trucks and cars with onboard hardware performing real-time AI processing of sensor data equivalent to small data centers (Sudhakar, Sze, and Karaman 2023). These innovative “data centers on wheels” demonstrate how vehicles like self-driving trucks may need embedded data center-scale compute on board to achieve millisecond system latency for navigation, though still likely supplemented by wireless 5G connectivity to more powerful cloud data centers.

The bandwidth, storage, and processing capacities required to enable this future technology at scale will depend heavily on advancements in data center infrastructure and AI algorithmic innovations.

Energy Demand

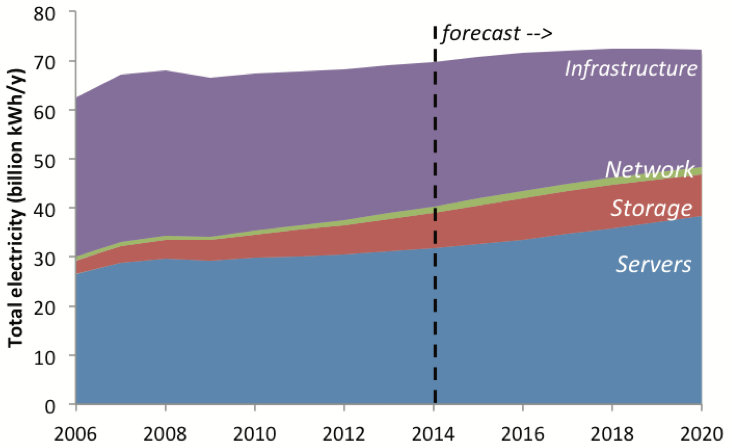

The energy demand of data centers can roughly be divided into 4 components—infrastructure, network, storage, and servers. In Figure 16.3, we see that the data infrastructure (which includes cooling, lighting, and controls) and the servers use most of the total energy budget of data centers in the US (Shehabi et al. 2016). This section breaks down the energy demand for the servers and the infrastructure. For the latter, the focus is on cooling systems, as cooling is the dominant factor in energy consumption in the infrastructure.

Servers

The increase in energy consumption of data centers stems mainly from exponentially growing AI computing requirements. NVIDIA DGX H100 machines that are optimized for deep learning can draw up to 10.2 kW at peak. Leading providers operate data centers with hundreds to thousands of these power-hungry DGX nodes networked to train the latest AI models. For example, the supercomputer developed for OpenAI is a single system with over 285,000 CPU cores, 10,000 GPUs, and 400 gigabits per second of network connectivity for each GPU server.

The intensive computations needed across an entire facility’s densely packed fleet and supporting hardware result in data centers drawing tens of megawatts around the clock. Overall, advancing AI algorithms continue to expand data center energy consumption as more DGX nodes get deployed to keep pace with projected growth in demand for AI compute resources over the coming years.
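A back-of-the-envelope sketch shows how per-node power adds up to a facility-scale draw. It uses the 10.2 kW peak figure quoted above; the node counts and the utilization parameter are hypothetical, and the result covers the compute nodes only (no cooling, networking, or storage).

```python
DGX_H100_PEAK_KW = 10.2  # peak draw per node, as quoted in the text


def cluster_peak_mw(num_nodes: int) -> float:
    """Peak power of the compute nodes alone, in megawatts."""
    return num_nodes * DGX_H100_PEAK_KW / 1_000


def daily_energy_mwh(num_nodes: int, utilization: float = 1.0) -> float:
    """Energy used in 24 hours at a given average fraction of peak power."""
    return cluster_peak_mw(num_nodes) * 24 * utilization


# Hypothetical cluster sizes, chosen only for illustration.
for nodes in (100, 1_000, 5_000):
    print(
        f"{nodes:>5} nodes: {cluster_peak_mw(nodes):6.2f} MW peak, "
        f"{daily_energy_mwh(nodes):8.1f} MWh/day at full load"
    )
```

Around a thousand such nodes already reach about 10 MW before any facility overhead is counted, which is consistent with the "tens of megawatts" scale described above.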

Cooling Systems

To keep servers running at peak capacity, data centers require tremendous cooling capacity to counteract the heat produced by densely packed servers, networking equipment, and other hardware running computationally intensive workloads without pause. With large data centers packing thousands of server racks operating at full tilt, massive industrial-scale cooling towers and chillers are required, using energy amounting to 30-40% of the total data center electricity footprint (Dayarathna, Wen, and Fan 2016). Consequently, companies are looking for alternative methods of cooling. For example, Microsoft’s data center in Ireland leverages a nearby fjord to exchange heat using over half a million gallons of seawater daily.

Recognizing the importance of energy-efficient cooling, there have been innovations aimed at reducing this energy demand. Techniques like free cooling, which uses outside air or water sources when conditions are favorable, and the use of AI to optimize cooling systems are examples of how the industry adapts. These innovations reduce energy consumption, lower operational costs, and lessen the environmental footprint. However, exponential increases in AI model complexity continue to demand more servers and acceleration hardware operating at higher utilization, translating to rising heat generation and ever greater energy used solely for cooling purposes.
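The cooling share cited above can be related to power usage effectiveness (PUE), a common data center efficiency metric defined as total facility energy divided by IT equipment energy. The sketch below makes the simplifying assumption that cooling is the only non-IT overhead, so it gives a rough lower bound rather than a measured value.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power usage effectiveness: total facility energy / IT energy."""
    return total_facility_kwh / it_equipment_kwh


def pue_from_overhead_share(overhead_share: float) -> float:
    """PUE when `overhead_share` of total energy goes to non-IT loads.

    Assumes everything that is not overhead is IT equipment energy.
    """
    if not 0 <= overhead_share < 1:
        raise ValueError("overhead_share must be in [0, 1)")
    return 1 / (1 - overhead_share)


for share in (0.30, 0.40):
    print(f"Cooling at {share:.0%} of total -> PUE of about "
          f"{pue_from_overhead_share(share):.2f}")
```

Under this assumption, a cooling share of 30-40% corresponds to a PUE of roughly 1.4 to 1.7. Techniques such as free cooling aim to push this ratio closer to 1.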

The Environmental Impact

The environmental impact of data centers is not only caused by the direct energy consumption of the data center itself (Siddik, Shehabi, and Marston 2021). Data center operation involves the supply of treated water to the data center and the discharge of wastewater from the data center. Water and wastewater facilities are major electricity consumers.

Beyond electricity usage, there are many more aspects to the environmental impacts of these data centers. The water usage of the data centers can lead to water scarcity issues, increased water treatment needs, and a need for proper wastewater discharge infrastructure. Also, the raw materials required for construction and network transmission considerably impact the environment, and components in data centers need to be upgraded and maintained. Although almost 50 percent of servers used to be refreshed within 3 years of usage, refresh cycles have been shown to slow down (Davis et al. 2022). Still, this generates significant e-waste, which can be hard to recycle.

16.3.3 Energy Optimization

Ultimately, measuring and understanding the energy consumption of AI facilitates optimizing energy consumption.

One way to reduce the energy consumption of a given amount of computational work is to run it on more energy-efficient hardware. For instance, TPU chips can be more energy-efficient than CPUs when running large tensor computations for AI, as TPUs can run such computations much faster without drawing significantly more power than CPUs. Another way is to build software systems that are aware of energy consumption and application characteristics. Good examples are systems such as Zeus (You, Chung, and Chowdhury 2023) and Perseus (Chung et al. 2023), both of which characterize the tradeoff between computation time and energy consumption at various levels of an ML training system to achieve energy reduction without end-to-end slowdown. In practice, building both energy-efficient hardware and software and combining their benefits is a promising direction, along with open-source frameworks (e.g., Zeus) that facilitate community efforts.
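The idea of trading computation time against energy can be illustrated with a small selection routine. This is a simplified stand-in, not the algorithm or API of Zeus or Perseus: the profiling numbers are invented, and the sketch simply picks the lowest-energy configuration whose runtime stays within an allowed slowdown of the fastest one.

```python
from typing import NamedTuple


class Config(NamedTuple):
    power_limit_w: int  # hypothetical GPU power cap
    time_s: float       # time per epoch under that cap
    energy_j: float     # energy per epoch under that cap


# Hypothetical profiling results, invented for illustration only.
PROFILE = [
    Config(300, 100.0, 28_000.0),
    Config(250, 104.0, 25_000.0),
    Config(200, 112.0, 22_500.0),
    Config(150, 135.0, 21_000.0),
]


def pick_config(profile: list[Config], max_slowdown: float) -> Config:
    """Lowest-energy config within (1 + max_slowdown) of the fastest time."""
    fastest = min(c.time_s for c in profile)
    allowed = [c for c in profile if c.time_s <= fastest * (1 + max_slowdown)]
    return min(allowed, key=lambda c: c.energy_j)


for slack in (0.0, 0.05, 0.5):
    chosen = pick_config(PROFILE, slack)
    print(f"slowdown <= {slack:.0%}: cap {chosen.power_limit_w} W, "
          f"{chosen.energy_j:.0f} J per epoch")
```

In this made-up profile, tolerating a 5% slowdown cuts energy per epoch by about 11%. Real systems measure such profiles on the actual hardware and workload instead of assuming them.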

16.4 Carbon Footprint

The massive electricity demands of data centers can lead to significant environmental externalities absent an adequate renewable power supply. Many facilities rely heavily on nonrenewable energy sources like coal and natural gas. For example, data centers are estimated to produce up to 2% of total global \(\textrm{CO}_2\) emissions, which is closing the gap with the airline industry. As mentioned in previous sections, the computational demands of AI are set to increase. The emissions impact of this surge is threefold. First, data centers are projected to increase in size (Liu et al. 2020). Second, emissions during training are set to increase significantly (Patterson et al. 2022). Third, inference calls to these models are set to increase dramatically.

Without action, this exponential demand growth risks ratcheting up the carbon footprint of data centers further to unsustainable levels. Major providers have pledged carbon neutrality and committed funds to secure clean energy, but progress remains incremental compared to overall industry expansion plans. More radical grid decarbonization policies and renewable energy investments may prove essential to counteracting the climate impact of the coming tide of new data centers aimed at supporting the next generation of AI.

16.4.1 Definition and Significance

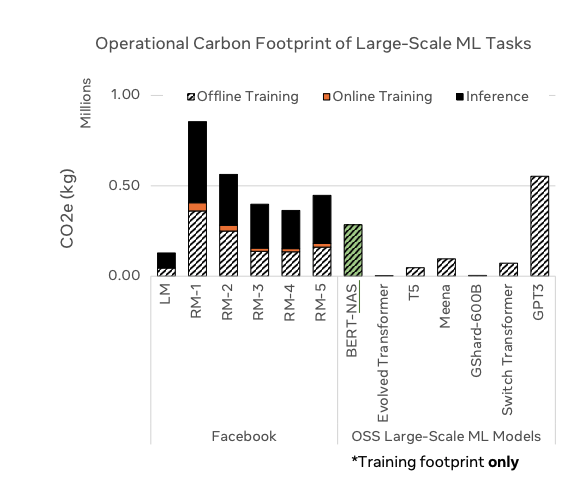

The concept of a ‘carbon footprint’ has emerged as a key metric. This term refers to the total amount of greenhouse gases, particularly carbon dioxide, emitted directly or indirectly by an individual, organization, event, or product. These emissions significantly contribute to the greenhouse effect, accelerating global warming and climate change. The carbon footprint is measured in terms of carbon dioxide equivalents (\(\textrm{CO}_2\)e), allowing for a comprehensive account that includes various greenhouse gases and their relative environmental impact. Examples of this as applied to large-scale ML tasks are shown in Figure 16.4.

Considering the carbon footprint is especially important given AI’s rapid advancement and integration into various sectors, which brings its environmental impact into sharp focus. AI systems, particularly those involving intensive computations like deep learning and large-scale data processing, are known for their substantial energy demands. This energy is often drawn from power grids that may still predominantly rely on fossil fuels, leading to significant greenhouse gas emissions.

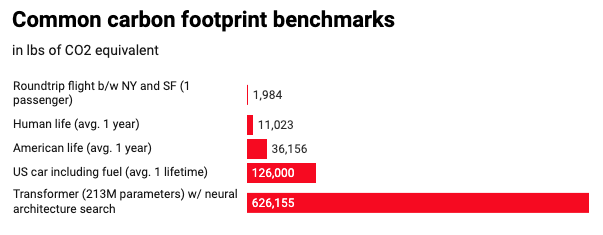

Take, for example, training large AI models such as GPT-3 or complex neural networks. These processes require immense computational power, typically provided by data centers. The energy consumption associated with operating these centers, particularly for high-intensity tasks, results in notable greenhouse gas emissions. Studies have highlighted that training a single AI model can generate carbon emissions comparable to the lifetime emissions of multiple cars, shedding light on the environmental cost of developing advanced AI technologies (Dayarathna, Wen, and Fan 2016). Figure 16.5 compares carbon footprints from lowest to highest: a roundtrip flight between NY and SF, the average human life per year, the average American life per year, a US car including fuel over its lifetime, and a Transformer model with neural architecture search, which has the highest footprint.

Moreover, AI’s carbon footprint extends beyond the operational phase. The entire lifecycle of AI systems, including the manufacturing of computing hardware, the energy used in data centers for cooling and maintenance, and the disposal of electronic waste, contributes to their overall carbon footprint. We have discussed some of these aspects earlier, and we will discuss the waste aspects later in this chapter.

16.4.2 The Need for Awareness and Action

Understanding the carbon footprint of AI systems is crucial for several reasons. Primarily, it is a step towards mitigating the impacts of climate change. As AI continues to grow and permeate different aspects of our lives, its contribution to global carbon emissions becomes a significant concern. Awareness of these emissions can inform decisions made by developers, businesses, policymakers, and even ML engineers and scientists like us to ensure a balance between technological innovation and environmental responsibility.

Furthermore, this understanding stimulates the drive towards ‘Green AI’ (R. Schwartz et al. 2020). This approach focuses on developing AI technologies that are efficient, powerful, and environmentally sustainable. It encourages exploring energy-efficient algorithms, using renewable energy sources in data centers, and adopting practices that reduce AI’s overall environmental impact.

In essence, the carbon footprint is an essential consideration in developing and applying AI technologies. As AI evolves and its applications become more widespread, managing its carbon footprint is key to ensuring that this technological progress aligns with the broader environmental sustainability goals.

16.4.3 Estimating the AI Carbon Footprint

Estimating AI systems’ carbon footprint is critical in understanding their environmental impact. This involves analyzing the various elements contributing to emissions throughout AI technologies’ lifecycle and employing specific methodologies to quantify these emissions accurately. Many different methods for quantifying ML’s carbon emissions have been proposed.

The carbon footprint of AI encompasses several key elements, each contributing to the overall environmental impact. First, energy is consumed during the AI model training and operational phases. The source of this energy heavily influences the carbon emissions. Once trained, these models, depending on their application and scale, continue to consume electricity during operation. Next to energy considerations, the hardware used stresses the environment as well.

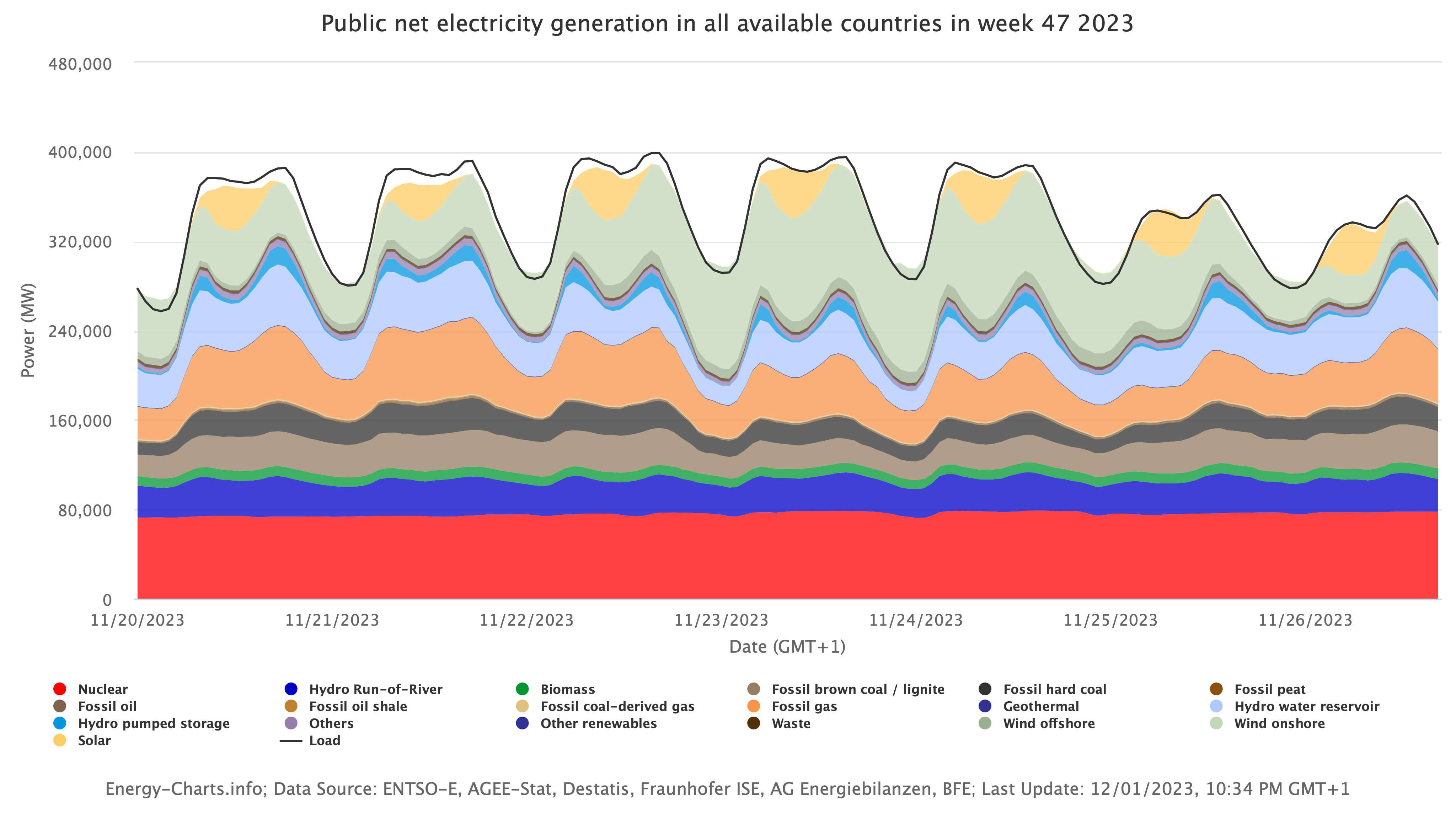

The carbon footprint varies significantly based on the energy sources used. The composition of the sources providing the energy used in the grid varies widely depending on geographical region and even time in a single day! For example, in the USA, roughly 60 percent of the total energy supply is still covered by fossil fuels. Nuclear and renewable energy sources cover the remaining 40 percent. These fractions are not constant throughout the day. As renewable energy production usually relies on environmental factors, such as solar radiation and pressure fields, they do not provide a constant energy source.

The variability of renewable energy production has been an ongoing challenge in the widespread use of these sources. Looking at Figure 16.6, which shows data for the European grid, we see that the grid must be able to supply the required amount of energy throughout the day. While solar energy peaks in the middle of the day, wind energy has two distinct peaks in the mornings and evenings. Currently, we rely on fossil fuel-based generation, such as coal and natural gas, to cover demand during times when renewable energy does not meet requirements.
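Because the grid mix changes over the day, the same job can emit very different amounts of carbon depending on when it runs. The sketch below picks the start hour that minimizes emissions for a fixed-length job. The hourly carbon intensities are hypothetical values shaped like a midday solar dip, not measurements from any real grid.

```python
# Hypothetical hourly grid carbon intensity (gCO2e per kWh) for one day,
# index 0 = midnight. Invented for illustration only.
HOURLY_INTENSITY = [
    450, 440, 430, 420, 410, 400, 380, 350, 300, 250, 220, 180,
    170, 180, 210, 260, 320, 380, 420, 450, 460, 460, 455, 450,
]


def job_emissions_kg(start_hour: int, duration_h: int, power_kw: float,
                     intensity: list[int] = HOURLY_INTENSITY) -> float:
    """Emissions (kg CO2e) of a constant-power job over whole hours."""
    window = intensity[start_hour:start_hour + duration_h]
    return sum(g * power_kw for g in window) / 1_000


def best_start_hour(duration_h: int, power_kw: float,
                    intensity: list[int] = HOURLY_INTENSITY) -> int:
    """Start hour within the day that minimizes the job's emissions."""
    starts = range(len(intensity) - duration_h + 1)
    return min(starts,
               key=lambda h: job_emissions_kg(h, duration_h, power_kw, intensity))


start = best_start_hour(duration_h=4, power_kw=50)
print(f"Best start hour: {start}:00, "
      f"{job_emissions_kg(start, 4, 50):.1f} kg CO2e")
print(f"Evening start (20:00): {job_emissions_kg(20, 4, 50):.1f} kg CO2e")
```

With these made-up numbers, shifting a four-hour job from the evening to late morning cuts its emissions by more than half. Only deferrable workloads, such as many training jobs, can be shifted this way.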

Innovation in energy storage solutions is required to enable constant use of renewable energy sources. The base energy load is currently met with nuclear energy. This constant energy source does not directly produce carbon emissions but cannot adjust its output quickly enough to accommodate the variability of renewable energy sources. Tech companies such as Microsoft have shown interest in nuclear energy sources to power their data centers. As the demand of data centers is more constant than the demand of regular households, nuclear energy could be used as a dominant source of energy.

Additionally, the manufacturing and disposal of AI hardware add to the carbon footprint. Producing specialized computing devices, such as GPUs and CPUs, is energy- and resource-intensive. This phase often relies on energy sources that contribute to greenhouse gas emissions. The electronics industry’s manufacturing process has been identified as one of the eight big supply chains responsible for more than 50 percent of global emissions (Challenge 2021). Furthermore, the end-of-life disposal of this hardware, which can lead to electronic waste, also has environmental implications. As mentioned, servers have a refresh cycle of roughly 3 to 5 years. Of this e-waste, currently only 17.4 percent is properly collected and recycled. The carbon emissions of this e-waste increased by more than 50 percent between 2014 and 2020 (Singh and Ogunseitan 2022).

As is clear from the above, a proper Life Cycle Analysis is necessary to portray all relevant aspects of the emissions caused by AI. Another method is carbon accounting, which quantifies the amount of carbon dioxide emissions directly and indirectly associated with AI operations. This measurement typically uses \(\textrm{CO}_2\) equivalents, allowing for a standardized way of reporting and assessing emissions.
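A minimal carbon accounting sketch follows. It combines operational emissions (energy times grid carbon intensity, scaled by facility overhead) with an amortized share of embodied hardware emissions. The 1,300 MWh input is the GPT-3 estimate quoted earlier in the chapter; the PUE, grid intensities, embodied emissions, job length, and hardware lifetime are all hypothetical placeholders.

```python
def operational_emissions_t(it_energy_mwh: float, pue: float,
                            grid_g_per_kwh: float) -> float:
    """Operational emissions in tonnes CO2e.

    IT energy (kWh) * PUE * grid intensity (g CO2e/kWh), converted to tonnes.
    """
    facility_kwh = it_energy_mwh * 1_000 * pue
    return facility_kwh * grid_g_per_kwh / 1_000_000


def amortized_embodied_t(embodied_t: float, job_hours: float,
                         hardware_lifetime_hours: float) -> float:
    """Share of hardware manufacturing emissions attributed to one job."""
    return embodied_t * job_hours / hardware_lifetime_hours


ENERGY_MWH = 1_300      # GPT-3 training estimate quoted in this chapter
ASSUMED_PUE = 1.1       # hypothetical facility overhead

# Hypothetical grid intensities: low-carbon versus fossil-heavy.
for label, intensity in [("low-carbon grid", 50), ("fossil-heavy grid", 400)]:
    tonnes = operational_emissions_t(ENERGY_MWH, ASSUMED_PUE, intensity)
    print(f"{label}: {tonnes:.1f} t CO2e operational")

# Hypothetical: 100 t embodied, 30-day job, 4-year hardware lifetime.
embodied = amortized_embodied_t(100, 30 * 24, 4 * 8_760)
print(f"Amortized embodied share: {embodied:.2f} t CO2e")
```

The same training run differs by a factor of eight between the two assumed grids, which shows why where and when energy is consumed matters as much as how much.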

Did you know that the cutting-edge AI models you might use have an environmental impact? This exercise will go into an AI system’s “carbon footprint.” You’ll learn how data centers’ energy demands, large AI models’ training, and even hardware manufacturing contribute to greenhouse gas emissions. We’ll discuss why it’s crucial to be aware of this impact, and you’ll learn methods to estimate the carbon footprint of your own AI projects. Get ready to explore the intersection of AI and environmental sustainability!

16.5 Beyond Carbon Footprint

The current focus on reducing AI systems’ carbon emissions and energy consumption addresses one crucial aspect of sustainability. However, manufacturing the semiconductors and hardware that enable AI also carries severe environmental impacts that receive comparatively less public attention. Building and operating a leading-edge semiconductor fabrication plant, or “fab,” has substantial resource requirements and polluting byproducts beyond a large carbon footprint.

For example, a state-of-the-art fab producing chips at advanced nodes such as 5nm may require up to four million gallons of pure water each day. This water usage approaches what a city of half a million people would require for all needs. Sourcing this consistently places immense strain on local water tables and reservoirs, especially in already water-stressed regions that host many high-tech manufacturing hubs.

Additionally, over 250 unique hazardous chemicals are utilized at various stages of semiconductor production within fabs (Mills and Le Hunte 1997). These include corrosive chemicals like sulfuric acid, nitric acid, and hydrogen fluoride, along with arsine, phosphine, and other highly toxic substances. Preventing the discharge of these chemicals requires extensive safety controls and wastewater treatment infrastructure to avoid soil contamination and risks to surrounding communities. Any improper chemical handling or unanticipated spill carries dire consequences.

Beyond water consumption and chemical risks, fab operations also depend on rare metals sourcing, generate tons of dangerous waste products, and can hamper local biodiversity. This section will analyze these critical but less discussed impacts. With vigilance and investment in safety, the harms from semiconductor manufacturing can be contained while still enabling technological progress. However, ignoring these externalized issues will exacerbate ecological damage and health risks over the long run.

16.5.1 Water Usage and Stress

Semiconductor fabrication is an incredibly water-intensive process. Based on an article from 2009, a typical 300mm silicon wafer requires 8,328 liters of water, of which 5,678 liters is ultrapure water (Cope 2009). Today, a typical fab can use up to four million gallons of pure water per day. TSMC’s latest fab in Arizona alone is projected to use 8.9 million gallons daily, or nearly 3 percent of the city’s current water production. To put things in perspective, Intel and Quantis found that over 97% of their direct water consumption is attributed to semiconductor manufacturing operations within their fabrication facilities (Cooper et al. 2011).
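The figures above mix liters and US gallons, so a short conversion sketch helps compare them. It uses only the numbers quoted in the text plus the standard definition of the US gallon; the helper name is illustrative.

```python
LITERS_PER_US_GALLON = 3.785411784  # exact by definition


def gallons_to_liters(gallons: float) -> float:
    """Convert US gallons to liters."""
    return gallons * LITERS_PER_US_GALLON


# Per-wafer figures quoted in the text (Cope 2009).
WAFER_TOTAL_L = 8_328
WAFER_ULTRAPURE_L = 5_678
ultrapure_share = WAFER_ULTRAPURE_L / WAFER_TOTAL_L

# Daily fab figures quoted in the text, in US gallons.
typical_fab_l = gallons_to_liters(4_000_000)
arizona_fab_l = gallons_to_liters(8_900_000)

print(f"Ultrapure share per wafer: {ultrapure_share:.1%}")
print(f"Typical fab: {typical_fab_l / 1e6:.1f} million liters/day")
print(f"Arizona fab projection: {arizona_fab_l / 1e6:.1f} million liters/day")
```

Roughly two thirds of the water used per wafer is ultrapure water, which carries its own purification energy and chemical costs on top of the raw volume.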

This water is repeatedly used to flush away contaminants in cleaning steps and also acts as a coolant and carrier fluid in thermal oxidation, chemical deposition, and chemical mechanical planarization processes. During peak summer months, a fab’s water use approximates the daily water consumption of a city with a population of half a million people.

Even when fabs are located in regions with sufficient water, their intensive usage can severely depress local water tables and drainage basins. For example, the city of Hsinchu in Taiwan suffered sinking water tables and seawater intrusion into aquifers due to excessive pumping to satisfy water supply demands from the Taiwan Semiconductor Manufacturing Company (TSMC) fab. In water-scarce inland areas like Arizona, massive water inputs are needed to support fabs despite already strained reservoirs.

Besides depletion, water discharge from fabs risks environmental contamination if not properly treated. While much discharge is recycled within the fab, the purification systems still filter out metals, acids, and other contaminants that can pollute rivers and lakes if not cautiously handled (Prakash, Callahan, et al. 2023). These factors make managing water usage essential when mitigating wider sustainability impacts.

16.5.2 Hazardous Chemicals Usage

Modern semiconductor fabrication involves working with many highly hazardous chemicals under extreme conditions of heat and pressure (Kim et al. 2018). Key chemicals utilized include:

- Strong acids: Hydrofluoric, sulfuric, nitric, and hydrochloric acids rapidly eat through oxides and other surface contaminants but also pose toxicity dangers. Fabs can use thousands of metric tons of these acids annually, and accidental exposure can be fatal for workers.

- Solvents: Key solvents like xylene, methanol, and methyl isobutyl ketone (MIBK) handle dissolving photoresists but have adverse health impacts like skin/eye irritation and narcotic effects if mishandled. They also create explosive and air pollution risks.

- Toxic gases: Gas mixtures containing arsine (AsH3), phosphine (PH3), diborane (B2H6), germane (GeH4), etc., are some of the deadliest chemicals used in doping and vapor deposition steps. Minimal exposures can lead to poisoning, tissue damage, and even death without quick treatment.

- Chlorinated compounds: Older chemical mechanical planarization formulations incorporated perchloroethylene, trichloroethylene, and other chlorinated solvents, which have since been banned due to their carcinogenic effects and impacts on the ozone layer. However, their prior release still threatens surrounding groundwater sources.

Strict handling protocols, protective equipment for workers, ventilation, filtration/scrubbing systems, secondary containment tanks, and specialized disposal mechanisms are vital where these chemicals are used to minimize health, explosion, air, and environmental spill dangers (Wald and Jones 1987). But human errors and equipment failures still occasionally occur, highlighting why reducing fab chemical intensities is an ongoing sustainability effort.

16.5.3 Resource Depletion

While silicon forms the base of semiconductor chips, there is an almost endless supply of it on Earth. In fact, silicon is the second most plentiful element found in the Earth’s crust, accounting for 27.7% of the crust’s total mass. Only oxygen exceeds silicon in abundance within the crust. Therefore, silicon itself is not a resource depletion concern. However, the various specialty metals and materials that enable the integrated circuit fabrication process and provide specific properties are far less abundant. Maintaining supplies of these resources is crucial yet threatened by finite availability and geopolitical influences (Nakano 2021).

Gallium, indium, and arsenic are vital ingredients in forming ultra-efficient compound semiconductors in the highest-speed chips suited for 5G and AI applications (Chen 2006). However, these rare elements have relatively scarce natural deposits that are being depleted. The United States Geological Survey has indium on its list of most critical at-risk commodities, estimated to have less than a 15-year viable global supply at current demand growth (Davies 2011).

Helium is required in huge volumes for next-gen fabs to enable precise wafer cooling during operation. But helium’s relative rarity and the fact that once it vents into the atmosphere, it quickly escapes Earth make maintaining helium supplies extremely challenging long-term (Davies 2011). According to the US National Academies, substantial price increases and supply shocks are already occurring in this thinly traded market.

Other risks include China’s control over 90% of the rare earth elements critical to semiconductor material production (Jha 2014). Any supply chain issues or trade disputes can lead to catastrophic raw material shortages, given the lack of current alternatives. In conjunction with helium shortages, resolving the limited availability and geographic imbalance in accessing essential ingredients remains a sector priority for sustainability.

16.5.4 Hazardous Waste Generation

Semiconductor fabs generate tons of hazardous waste annually as byproducts from the various chemical processes (Grossman 2007). The key waste streams include:

- Gaseous waste: Fab ventilation systems capture harmful gases like arsine, phosphine, and germane and filter them out to avoid worker exposure. However, this produces significant quantities of dangerous condensed gas that needs specialized treatment.

- VOCs: Volatile organic compounds like xylene, acetone, and methanol are used extensively as photoresist solvents and are evaporated as emissions during baking, etching, and stripping. VOCs pose toxicity issues and require scrubbing systems to prevent release.

- Spent acids: Strong acids such as sulfuric acid, hydrofluoric acid, and nitric acid get depleted in cleaning and etching steps, transforming into a corrosive, toxic soup that can dangerously react, releasing heat and fumes if mixed.

- Sludge: Treating discharged effluent leaves behind sludge containing concentrated heavy metals, acid residues, and chemical contaminants. Filter press systems separate this hazardous sludge from the water.

- Filter cake: Gaseous filtration systems generate multi-ton sticky cakes of dangerous absorbed compounds requiring containment.

Without proper handling procedures, storage tanks, packaging materials, and secondary containment, improper disposal of any of these waste streams can lead to dangerous spills, explosions, and environmental releases. The massive volumes mean even well-run fabs produce tons of hazardous waste year after year, requiring extensive treatment.

16.5.5 Biodiversity Impacts

Habitat Disruption and Fragmentation

Semiconductor fabs require large, contiguous land areas to accommodate cleanrooms, support facilities, chemical storage, waste treatment, and ancillary infrastructure. Developing these vast built-up spaces inevitably dismantles existing habitats, damaging sensitive biomes that may have taken decades to develop. For example, constructing a new fabrication module may level local forest ecosystems that species, like spotted owls and elk, rely upon for survival. The outright removal of such habitats severely threatens wildlife populations dependent on those lands.

Furthermore, pipelines, water channels, air and waste exhaust systems, access roads, transmission towers, and other support infrastructure fragment the remaining undisturbed habitats. Animals moving daily for food, water, and spawning can find their migration patterns blocked by these physical human barriers that bisect previously natural corridors.

Aquatic Life Disturbances

With semiconductor fabs consuming millions of gallons of ultra-pure water daily, accessing and discharging such volumes risks altering the suitability of nearby aquatic environments housing fish, water plants, amphibians, and other species. If the fab is tapping groundwater tables as its primary supply source, overdrawing at unsustainable rates can deplete lakes or lead to stream drying as water levels drop (Davies 2011).

Also, discharging wastewater at higher temperatures to cool fabrication equipment can shift downstream river conditions through thermal pollution. Temperature changes beyond thresholds that native species evolved for can disrupt reproductive cycles. Warmer water also holds less dissolved oxygen, critical to supporting aquatic plant and animal life (LeRoy Poff, Brinson, and Day 2002). Combined with traces of residual contaminants that escape filtration systems, the discharged water can cumulatively transform environments to be far less habitable for sensitive organisms (Till et al. 2019).

Air and Chemical Emissions

While modern semiconductor fabs aim to contain air and chemical discharges through extensive filtration systems, some levels of emissions often persist, raising risks for nearby flora and fauna. Air pollutants can carry downwind, including volatile organic compounds (VOCs), nitrogen oxide compounds (NOx), particulate matter from fab operational exhausts, and power plant fuel emissions.

As contaminants permeate local soils and water sources, wildlife consuming affected food and water ingests toxic substances, which research shows can hamper cell function, reproduction rates, and longevity, slowly poisoning ecosystems (Hsu et al. 2016).

Likewise, accidental chemical spills and improper waste handling, which release acids and heavy metals into soils, can dramatically affect the soil’s retention and leaching capabilities. Flora, such as vulnerable native orchids adapted to nutrient-poor substrates, can experience die-offs when exposed to foreign runoff chemicals that alter soil pH and permeability. One analysis found that a single 500-gallon nitric acid spill led to the regional extinction of a rare moss species within the following year, after the acidic effluent reached nearby forest habitats. Such contamination events set off chain reactions across the interconnected web of life. Thus, strict protocols are essential to avoid hazardous discharge and runoff.

16.6 Life Cycle Analysis

Understanding the holistic environmental impact of AI systems requires a comprehensive approach that considers the entire life cycle of these technologies. Life Cycle Analysis (LCA) refers to a methodological framework used to quantify the environmental impacts across all stages in a product or system’s lifespan, from raw material extraction to end-of-life disposal. Applying LCA to AI systems can help identify priority areas to target for reducing overall environmental footprints.

16.6.1 Stages of an AI System’s Life Cycle

The life cycle of an AI system can be divided into four key phases:

Design Phase: This includes the energy and resources used in researching and developing AI technologies. It encompasses the computational resources used for algorithm development and testing, which contribute to carbon emissions.

Manufacture Phase: This stage involves producing hardware components such as graphics cards, processors, and other computing devices necessary for running AI algorithms. Manufacturing these components often involves significant energy use for material extraction and processing, along with the associated greenhouse gas emissions.

Use Phase: This energy-intensive phase involves the operational use of AI systems. It includes the electricity consumed in data centers for training and running neural networks and for powering end-user applications. This is arguably one of the most carbon-intensive stages.

Disposal Phase: This final stage covers the end-of-life aspects of AI systems, including the recycling and disposal of electronic waste generated from outdated or non-functional hardware past their usable lifespan.

16.6.2 Environmental Impact at Each Stage

Design and Manufacturing

The environmental impact during these beginning-of-life phases includes emissions from energy use and resource depletion from extracting materials for hardware production. At the heart of AI hardware are semiconductors, primarily silicon, used to make the integrated circuits in processors and memory chips. This hardware manufacturing relies on metals like copper for wiring, aluminum for casings, and various plastics and composites for other components. It also uses rare earth metals and specialized alloys, including elements like neodymium, terbium, and yttrium, in small but vital quantities. For example, GPUs rely on copper and aluminum, while chips use rare earth metals, whose mining can generate substantial carbon emissions and ecosystem damage.

Use Phase

The use phase accounts for the majority of emissions in the AI lifecycle due to continuous high power consumption, especially for training and running models. This includes direct and indirect emissions from electricity usage and nonrenewable grid energy generation. Studies estimate that training complex models can have a carbon footprint comparable to the lifetime emissions of up to five cars.

Disposal Phase

The disposal stage impacts include air and water pollution from toxic materials in devices, challenges associated with complex electronics recycling, and contamination when improperly handled. Harmful compounds from burned e-waste are released into the atmosphere. At the same time, landfill leakage of lead, mercury, and other materials poses risks of soil and groundwater contamination if not properly controlled. Implementing effective electronics recycling is crucial.
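The phase-by-phase view above can be sketched as a small life cycle inventory that tallies estimated emissions per phase and reports which phase dominates. This is a minimal illustration, not an established LCA tool: the class name, phase labels, and every emission figure below are hypothetical placeholders rather than measured values.

```python
from dataclasses import dataclass, field

# Phase labels follow the four life cycle stages described in the text.
PHASES = ("design", "manufacture", "use", "disposal")


@dataclass
class LifeCycleInventory:
    """Accumulates estimated emissions (kg CO2e) per life cycle phase."""

    emissions_kg: dict = field(
        default_factory=lambda: {phase: 0.0 for phase in PHASES}
    )

    def add(self, phase: str, kg_co2e: float) -> None:
        """Record an emission estimate against one phase."""
        if phase not in self.emissions_kg:
            raise ValueError(f"unknown phase: {phase}")
        if kg_co2e < 0:
            raise ValueError("emissions must be non-negative")
        self.emissions_kg[phase] += kg_co2e

    def total(self) -> float:
        """Total emissions across all phases."""
        return sum(self.emissions_kg.values())

    def shares(self) -> dict:
        """Fraction of total emissions attributable to each phase."""
        total = self.total()
        if total == 0:
            return {phase: 0.0 for phase in self.emissions_kg}
        return {phase: kg / total for phase, kg in self.emissions_kg.items()}

    def hotspot(self) -> str:
        """Phase with the largest estimated emissions."""
        return max(self.emissions_kg, key=self.emissions_kg.get)


# Hypothetical placeholder figures, chosen only to exercise the code.
inventory = LifeCycleInventory()
inventory.add("design", 50.0)
inventory.add("manufacture", 300.0)
inventory.add("use", 600.0)
inventory.add("disposal", 50.0)

print(inventory.hotspot(), inventory.shares())
```

Keeping each phase as a separate entry makes the system boundary explicit: an analysis that records only the use phase is visibly incomplete rather than silently smaller.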

In this exercise, you’ll explore the environmental impact of training machine learning models. We’ll use CodeCarbon to track emissions, learn about Life Cycle Analysis (LCA) to understand AI’s carbon footprint, and explore strategies to make your ML model development more environmentally friendly. By the end, you’ll be equipped to track the carbon emissions of your models and start implementing greener practices in your projects.

16.7 Challenges in LCA

16.7.1 Lack of Consistency and Standards

One major challenge facing life cycle analysis (LCA) for AI systems is the lack of consistent methodological standards and frameworks. Unlike product categories like building materials, which have developed international standards for LCA through ISO 14040, there are no firmly established guidelines for analyzing the environmental footprint of complex information technology like AI.

This absence of uniformity means researchers make differing assumptions and varying methodological choices. For example, a 2019 study from the University of Massachusetts Amherst (Strubell, Ganesh, and McCallum 2019) analyzed the life cycle emissions of several natural language processing models but only considered computational resource usage for training and omitted hardware manufacturing impacts. A more comprehensive 2020 study from Stanford University researchers included emissions estimates from producing relevant servers, processors, and other components, following an ISO-aligned LCA standard for computer hardware. These diverging choices in system boundaries and accounting approaches reduce robustness and prevent apples-to-apples comparisons of results.

Standardized frameworks and protocols tailored to AI systems’ unique aspects and rapid update cycles would provide more coherence. This could equip researchers and developers to understand environmental hotspots, compare technology options, and accurately track progress on sustainability initiatives across the AI field. Industry groups and international standards bodies like the IEEE or ACM should prioritize addressing this methodological gap.

16.7.2 Data Gaps

Another key challenge for comprehensive life cycle assessment of AI systems is substantial data gaps, especially regarding upstream supply chain impacts and downstream electronic waste flows. Most existing studies focus narrowly on training or usage phase emissions from computational power demands, which misses a significant portion of lifetime emissions (Gupta et al. 2022).

For example, little public data from companies exists quantifying energy use and emissions from manufacturing the specialized hardware components that enable AI, including high-end GPUs, ASIC chips, solid-state drives, and more. Researchers often rely on secondary sources or generic industry averages to approximate production impacts. Similarly, there is limited transparency into the downstream fate of AI systems once they are discarded after an average usable lifespan of 4-5 years.

While electronic waste generation levels can be estimated, specifics on hazardous material leakage, recycling rates, and disposal methods for the complex components are hugely uncertain without better corporate documentation or regulatory reporting requirements.

The lack of fine-grained data on computational resource consumption for training different model types makes reliable per-parameter or per-query emissions calculations difficult even for the usage phase. Attempts to create lifecycle inventories estimating average energy needs for key AI tasks exist (Henderson et al. 2020; Anthony, Kanding, and Selvan 2020), but variability across hardware setups, algorithms, and input data remains extremely high. Furthermore, real-time carbon intensity data, which is critical for accurately tracking operational carbon footprint, is lacking in many geographic locations, so existing tools for operational carbon emissions produce mere approximations based on annual average carbon intensity values.
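To illustrate why annual averages only approximate operational emissions, the sketch below compares a time-resolved estimate (hourly energy multiplied by hourly grid carbon intensity) with an estimate based on a single average intensity. The function names and all numbers are hypothetical and chosen only to make the gap visible; they do not describe any real grid or workload.

```python
def time_resolved_emissions_g(energy_kwh, intensity_g_per_kwh):
    """Sum of hourly energy (kWh) times hourly carbon intensity (gCO2e/kWh)."""
    if len(energy_kwh) != len(intensity_g_per_kwh):
        raise ValueError("energy and intensity series must have equal length")
    return sum(e * c for e, c in zip(energy_kwh, intensity_g_per_kwh))


def average_based_emissions_g(energy_kwh, average_intensity_g_per_kwh):
    """Total energy (kWh) times one average carbon intensity (gCO2e/kWh)."""
    return sum(energy_kwh) * average_intensity_g_per_kwh


# Hypothetical 4-hour job: most energy is drawn while the grid is dirtiest.
energy = [10.0, 10.0, 2.0, 2.0]                  # kWh per hour
hourly_intensity = [500.0, 450.0, 200.0, 150.0]  # gCO2e/kWh per hour
annual_average = 300.0                           # gCO2e/kWh

time_resolved = time_resolved_emissions_g(energy, hourly_intensity)
averaged = average_based_emissions_g(energy, annual_average)

print(f"time-resolved: {time_resolved:.0f} g, average-based: {averaged:.0f} g")
```

In this made-up case the average-based figure understates emissions because the job ran mostly during high-intensity hours; with a different schedule it could just as easily overstate them.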

The challenge is that tools like CodeCarbon and ML \(\textrm{CO}_2\) are just ad hoc approaches at best, despite their well-meaning intentions. Bridging the real data gaps with more rigorous corporate sustainability disclosures and mandated environmental impact reporting will be key for AI’s overall climatic impacts to be understood and managed.

16.7.3 Rapid Pace of Evolution

The extremely quick evolution of AI systems poses additional challenges in keeping life cycle assessments up-to-date and accounting for the latest hardware and software advancements. The core algorithms, specialized chips, frameworks, and technical infrastructure underpinning AI have all been advancing exceptionally fast, with new developments rapidly rendering prior systems obsolete.

For example, in deep learning, novel neural network architectures that achieve significantly better performance on key benchmarks or new optimized hardware like Google’s TPU chips can completely change an “average” model in less than a year. These swift shifts quickly make one-off LCA studies outdated for accurately tracking emissions from designing, running, or disposing of the latest AI.

However, the resources and access required to update LCAs continuously remain limited. Frequently redoing labor- and data-intensive life cycle inventories and impact modeling to stay current with AI’s state of the art is likely infeasible for many researchers and organizations. Yet without updated analyses, environmental hotspots could go unnoticed as algorithms and silicon chips continue to evolve rapidly.

This presents difficulty in balancing dynamic precision through continuous assessment with pragmatic constraints. Some researchers have proposed simplified proxy metrics like tracking hardware generations over time or using representative benchmarks as an oscillating set of goalposts for relative comparisons, though granularity may be sacrificed. Overall, the challenge of rapid change will require innovative methodological solutions to prevent underestimating AI’s evolving environmental burdens.

16.7.4 Supply Chain Complexity

Finally, the complex and often opaque supply chains associated with producing the wide array of specialized hardware components that enable AI pose challenges for comprehensive life cycle modeling. State-of-the-art AI relies on cutting-edge advancements in processing chips, graphics cards, data storage, networking equipment, and more. However, tracking emissions and resource use across the tiered networks of globalized suppliers for all these components is extremely difficult.

For example, NVIDIA graphics processing units dominate much of the AI computing hardware, but the company relies on several discrete suppliers across Asia and beyond to produce GPUs. Many firms at each supplier tier choose to keep private the facility-level environmental data that could enable robust LCAs. Gaining end-to-end transparency down multiple levels of suppliers across disparate geographies with varying disclosure protocols and regulations poses barriers despite being crucial for complete boundary setting. This becomes even more complex when attempting to model emerging hardware accelerators like tensor processing units (TPUs), whose production networks have not yet been made public.

Without tech giants’ willingness to require and consolidate environmental impact data disclosure from across their global electronics supply chains, considerable uncertainty will remain around quantifying the full lifecycle footprint of AI hardware. More supply chain visibility, coupled with standardized sustainability reporting frameworks that specifically address AI’s complex inputs, holds promise for enriching LCAs and prioritizing environmental impact reductions.

16.8 Sustainable Design and Development

16.8.1 Sustainability Principles

As the impact of AI on the environment becomes increasingly evident, the focus on sustainable design and development in AI is gaining prominence. This involves incorporating sustainability principles into AI design, developing energy-efficient models, and integrating these considerations throughout the AI development pipeline. Below is a core set of principles to guide responsible innovation. They flow from conceptual foundations to practical execution to supporting implementation factors, providing a full-cycle perspective on embedding sustainability in AI design and development.

Lifecycle Thinking: Encouraging designers to consider the entire lifecycle of AI systems, from data collection and preprocessing to model development, training, deployment, and monitoring. The goal is to ensure sustainability is considered at each stage. This includes using energy-efficient hardware, prioritizing renewable energy sources, and planning to reuse or recycle retired models.

Future Proofing: Designing AI systems anticipating future needs and changes can improve sustainability. This may involve making models adaptable via transfer learning and modular architectures. It also includes planning capacity for projected increases in operational scale and data volumes.

Efficiency and Minimalism: This principle focuses on creating AI models that achieve desired results with the least possible resource use. It involves simplifying models and algorithms to reduce computational requirements. Specific techniques include pruning redundant parameters, quantizing and compressing models, and designing efficient model architectures, such as those discussed in the Optimizations chapter.

Lifecycle Assessment (LCA) Integration: Analyzing environmental impacts throughout the development and deployment lifecycle highlights unsustainable practices early on. Teams can then make adjustments instead of discovering issues late when they are more difficult to address. Integrating this analysis into the standard design flow avoids creating legacy sustainability problems.

Incentive Alignment: Economic and policy incentives should promote and reward sustainable AI development. These may include government grants, corporate initiatives, industry standards, and academic mandates for sustainability. Aligned incentives enable sustainability to become embedded in AI culture.

Sustainability Metrics and Goals: It is important to establish clearly defined metrics that measure sustainability factors like carbon usage and energy efficiency. Setting clear targets for these metrics provides concrete guidelines for teams to develop responsible AI systems. Tracking performance on these metrics over time shows progress towards the sustainability goals.

Fairness, Transparency, and Accountability: Sustainable AI systems should be fair, transparent, and accountable. Models should be unbiased, with transparent development processes and mechanisms for auditing and redressing issues. This builds public trust and enables the identification of unsustainable practices.

Cross-disciplinary Collaboration: AI researchers teaming up with environmental scientists and engineers can lead to innovative systems that are high-performing yet environmentally friendly. Combining expertise from different fields from the start of projects enables sustainable thinking to be incorporated into the AI design process.

Education and Awareness: Workshops, training programs, and course curricula that cover AI sustainability raise awareness among the next generation of practitioners. This equips students with the knowledge to develop AI that consciously minimizes negative societal and environmental impacts. Instilling these values from the start shapes tomorrow’s professionals and company cultures.

16.9 Green AI Infrastructure

Green AI represents a transformative approach to AI that incorporates environmental sustainability as a fundamental principle across the AI system design and lifecycle (R. Schwartz et al. 2020). This shift is driven by growing awareness of AI technologies’ significant carbon footprint and ecological impact, especially the compute-intensive process of training complex ML models.

The essence of Green AI lies in its commitment to align AI advancement with sustainability goals around energy efficiency, renewable energy usage, and waste reduction. The introduction of Green AI ideals reflects maturing responsibility across the tech industry towards environmental stewardship and ethical technology practices. It moves beyond technical optimizations toward holistic life cycle assessment on how AI systems affect sustainability metrics. Setting new bars for ecologically conscious AI paves the way for the harmonious coexistence of technological progress and planetary health.

16.9.1 Energy Efficient AI Systems

Energy efficiency in AI systems is a cornerstone of Green AI, aiming to reduce the energy demands traditionally associated with AI development and operations. This shift towards energy-conscious AI practices is vital in addressing the environmental concerns raised by the rapidly expanding field of AI. By focusing on energy efficiency, AI systems can become more sustainable, lessening their environmental impact and paving the way for more responsible AI use.

As we discussed earlier, the training and operation of AI models, especially large-scale ones, are known for their high energy consumption, which stems from compute-intensive model architecture and reliance on vast amounts of training data. For example, it is estimated that training a large state-of-the-art neural network model can have a carbon footprint of 284 tonnes—equivalent to the lifetime emissions of 5 cars (Strubell, Ganesh, and McCallum 2019).
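The five-car comparison can be checked with a short calculation. The figures in pounds are those reported by Strubell, Ganesh, and McCallum (2019): roughly 626,155 lb of \(\textrm{CO}_2\)-equivalent for the most expensive training run they studied, and roughly 126,000 lb for an average car over its lifetime, including fuel. The conversion uses 1 lb ≈ 0.4536 kg.

\[
626{,}155\ \text{lb} \times 0.4536\ \frac{\text{kg}}{\text{lb}} \approx 284{,}000\ \text{kg} = 284\ \text{tonnes},
\qquad
\frac{626{,}155\ \text{lb}}{126{,}000\ \text{lb}} \approx 5.0
\]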

To tackle the massive energy demands, researchers and developers are actively exploring methods to optimize AI systems for better energy efficiency while maintaining model accuracy and performance. This includes techniques like the ones we have discussed in the model optimizations, efficient AI, and hardware acceleration chapters:

- Knowledge distillation to transfer knowledge from large AI models to miniature versions

- Quantization and pruning approaches that reduce computational and space complexities

- Low-precision numerics: lowering mathematical precision without impacting model quality

- Specialized hardware like TPUs and neuromorphic chips tuned explicitly for efficient AI processing

One example is Intel’s work on Q8BERT, which quantizes the BERT language model with 8-bit integers, leading to a 4x reduction in model size with minimal accuracy loss (Zafrir et al. 2019). The push for energy-efficient AI is not just a technical endeavor; it has tangible real-world implications. More efficient systems lower AI’s operational costs and carbon footprint, making it accessible for widespread deployment on mobile and edge devices. It also paves the path toward the democratization of AI and mitigates unfair biases that can emerge from uneven access to computing resources across regions and communities. Pursuing energy-efficient AI is thus crucial for creating an equitable and sustainable future with AI.
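The 4x size reduction follows directly from storing each weight in 8 bits instead of 32. The sketch below shows a generic symmetric linear quantization in plain Python to make that arithmetic concrete. It is not the Q8BERT procedure, which quantizes during fine-tuning; the function names and weight values are hypothetical.

```python
def quantize_int8(weights):
    """Symmetric linear quantization of floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    # Map the largest magnitude to 127; fall back to 1.0 for all-zero input.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the integer codes."""
    return [q * scale for q in quantized]


# Hypothetical weights, chosen only for illustration.
weights = [0.5, -1.27, 0.0, 1.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

fp32_bytes = 4 * len(weights)  # 32-bit floats use 4 bytes per weight
int8_bytes = 1 * len(weights)  # 8-bit integers use 1 byte per weight
compression = fp32_bytes / int8_bytes

# Rounding error per weight is bounded by half a quantization step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print(q, compression, max_error)
```

The stored scale adds a small overhead per tensor, so real-world savings approach rather than exactly equal 4x; the accuracy impact depends on how well the weight distribution fits the integer range.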

16.9.2 Sustainable AI Infrastructure

Sustainable AI infrastructure includes the physical and technological frameworks that support AI systems, focusing on environmental sustainability. This involves designing and operating AI infrastructure to minimize ecological impact, conserve resources, and reduce carbon emissions. The goal is to create a sustainable ecosystem for AI that aligns with broader environmental objectives.

Green data centers are central to sustainable AI infrastructure, optimized for energy efficiency, and often powered by renewable energy sources. These data centers employ advanced cooling technologies (Ebrahimi, Jones, and Fleischer 2014), energy-efficient server designs (Uddin and Rahman 2012), and smart management systems (Buyya, Beloglazov, and Abawajy 2010) to reduce power consumption. The shift towards green computing infrastructure also involves adopting energy-efficient hardware, like AI-optimized processors that deliver high performance with lower energy requirements, which we discussed in the AI Acceleration chapter. These efforts collectively reduce the carbon footprint of running large-scale AI operations.

Integrating renewable energy sources, such as solar, wind, and hydroelectric power, into AI infrastructure is important for environmental sustainability (Chua 1971). Many tech companies and research institutions are investing in renewable energy projects to power their data centers. This not only helps in making AI operations carbon-neutral but also promotes the wider adoption of clean energy. Using renewable energy sources clearly shows commitment to environmental responsibility in the AI industry.

Sustainability in AI also extends to the materials and hardware used in creating AI systems. This involves choosing environmentally friendly materials, adopting recycling practices, and ensuring responsible electronic waste disposal. Efforts are underway to develop more sustainable hardware components, including energy-efficient chips designed for domain-specific tasks (such as AI accelerators) and environmentally friendly materials in device manufacturing (Cenci et al. 2021; Irimia-Vladu 2014). The lifecycle of these components is also a focus, with initiatives aimed at extending the lifespan of hardware and promoting recycling and reuse.

While strides are being made in sustainable AI infrastructure, challenges remain, such as the high costs of green technology and the need for global standards in sustainable practices. Future directions include more widespread adoption of green energy, further innovations in energy-efficient hardware, and international collaboration on sustainable AI policies. Pursuing sustainable AI infrastructure is not just a technical endeavor but a holistic approach that encompasses environmental, economic, and social aspects, ensuring that AI advances harmoniously with our planet’s health.

16.9.3 Frameworks and Tools

Access to the right frameworks and tools is essential to effectively implementing green AI practices. These resources are designed to assist developers and researchers in creating more energy-efficient and environmentally friendly AI systems. They range from software libraries optimized for low-power consumption to platforms that facilitate the development of sustainable AI applications.

Several software libraries and development environments are specifically tailored for Green AI. These tools often include features for optimizing AI models to reduce their computational load and, consequently, their energy consumption. For example, libraries in PyTorch and TensorFlow that support model pruning, quantization, and efficient neural network architectures enable developers to build AI systems that require less processing power and energy. Additionally, open-source communities like the Green Software Foundation are creating a centralized carbon intensity metric and building software for carbon-aware computing.

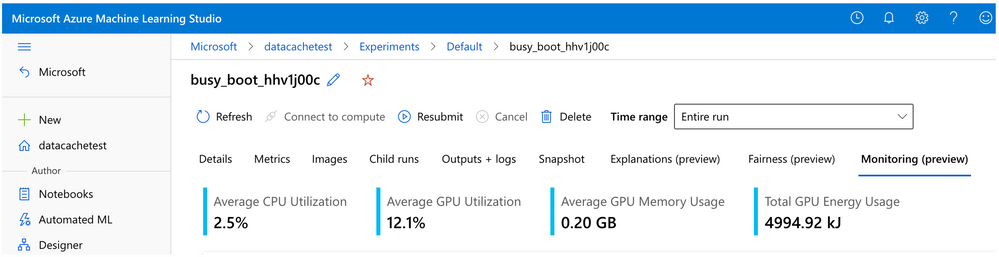

Energy monitoring tools are crucial for Green AI, as they allow developers to measure and analyze the energy consumption of their AI systems. By providing detailed insights into where and how energy is being used, these tools enable developers to make informed decisions about optimizing their models for better energy efficiency. This can involve adjustments in algorithm design, hardware selection, cloud computing software selection, or operational parameters. Figure 16.7 is a screenshot of an energy consumption dashboard provided by Microsoft’s cloud services platform.

With the increasing integration of renewable energy sources in AI operations, frameworks facilitating this process are becoming more important. These frameworks help manage the energy supply from renewable sources like solar or wind power, ensuring that AI systems can operate efficiently with fluctuating energy inputs.
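One simple way such a framework might respond to fluctuating supply is to shift a deferrable job to the window with the lowest forecast carbon intensity. The sketch below assumes an hourly intensity forecast is available as a plain list; the function name and forecast values are hypothetical and do not reflect any particular tool’s API.

```python
def best_start_hour(forecast_g_per_kwh, job_hours):
    """Return (start_index, mean_intensity) of the lowest-carbon window.

    Scans every contiguous window of `job_hours` entries in the forecast
    using a sliding sum and keeps the first window with the smallest total.
    """
    n = len(forecast_g_per_kwh)
    if job_hours <= 0 or job_hours > n:
        raise ValueError("job_hours must be between 1 and the forecast length")

    window_sum = sum(forecast_g_per_kwh[:job_hours])
    best_start, best_sum = 0, window_sum
    for start in range(1, n - job_hours + 1):
        # Slide the window: add the entering hour, drop the leaving hour.
        window_sum += forecast_g_per_kwh[start + job_hours - 1]
        window_sum -= forecast_g_per_kwh[start - 1]
        if window_sum < best_sum:
            best_start, best_sum = start, window_sum
    return best_start, best_sum / job_hours


# Hypothetical hourly forecast in gCO2e/kWh (lower around a midday solar peak).
forecast = [420, 390, 310, 180, 150, 170, 260, 400]
start, mean_intensity = best_start_hour(forecast, job_hours=3)

print(f"start at hour {start}, mean intensity {mean_intensity:.1f} gCO2e/kWh")
```

This only helps for workloads that tolerate delay, such as batch training; latency-sensitive inference generally needs other levers, such as routing requests to cleaner regions.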

Beyond energy efficiency, sustainability assessment tools help evaluate the broader environmental impact of AI systems. These tools can analyze factors like the carbon footprint of AI operations, the lifecycle impact of hardware components (Gupta et al. 2022), and the overall sustainability of AI projects (Prakash, Callahan, et al. 2023).

The availability and ongoing development of Green AI frameworks and tools are critical for advancing sustainable AI practices. By providing the necessary resources for developers and researchers, these tools facilitate the creation of more environmentally friendly AI systems and encourage a broader shift towards sustainability in the tech community. As Green AI continues to evolve, these frameworks and tools will play a vital role in shaping a more sustainable future for AI.

16.9.4 Benchmarks and Leaderboards

Benchmarks and leaderboards are important for driving progress in Green AI, as they provide standardized ways to measure and compare different methods. Well-designed benchmarks that capture relevant metrics around energy efficiency, carbon emissions, and other sustainability factors enable the community to track advancements fairly and meaningfully.

Extensive benchmarks exist for tracking AI model performance, such as those discussed in the Benchmarking chapter. Still, a clear and pressing need exists for additional standardized benchmarks focused on sustainability metrics like energy efficiency, carbon emissions, and overall ecological impact. Understanding of the environmental costs of AI is currently hindered by a lack of transparency and standardized measurement around these factors.

Emerging efforts such as the ML.ENERGY Leaderboard, which provides performance and energy consumption benchmarking results for large language model (LLM) text generation, help improve understanding of the energy cost of GenAI deployment.
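An energy-focused leaderboard needs a metric that normalizes energy by useful work. The sketch below ranks models by joules per generated token. It is a simplified illustration and not the ML.ENERGY methodology; the model names and measurements are hypothetical.

```python
def joules_per_token(energy_joules, tokens_generated):
    """Energy cost of generating one token, in joules."""
    if tokens_generated <= 0:
        raise ValueError("tokens_generated must be positive")
    return energy_joules / tokens_generated


def rank_by_efficiency(results):
    """Sort (model, joules_per_token) pairs from most to least efficient.

    `results` maps a model name to a (energy_joules, tokens_generated) tuple.
    """
    scored = [
        (name, joules_per_token(energy, tokens))
        for name, (energy, tokens) in results.items()
    ]
    return sorted(scored, key=lambda pair: pair[1])


# Hypothetical measurements: (total energy in joules, tokens generated).
results = {
    "model-a": (1200.0, 400),
    "model-b": (900.0, 600),
    "model-c": (2000.0, 500),
}
leaderboard = rank_by_efficiency(results)

for name, cost in leaderboard:
    print(f"{name}: {cost:.2f} J/token")
```

A metric like this is only comparable across entries when hardware, batch size, and prompts are held fixed, which is part of why standardized benchmark conditions matter.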

As with any benchmark, Green AI benchmarks must represent realistic usage scenarios and workloads. Benchmarks that focus narrowly on easily gamed metrics may lead to short-term gains but fail to reflect actual production environments where more holistic efficiency and sustainability measures are needed. The community should continue expanding benchmarks to cover diverse use cases.

Wider adoption of common benchmark suites by industry players will accelerate innovation in Green AI by allowing easier comparison of techniques across organizations. Shared benchmarks lower the barrier to demonstrating the sustainability benefits of new tools and best practices. However, when designing industry-wide benchmarks, care must be taken around issues like intellectual property, privacy, and commercial sensitivity. Initiatives to develop open reference datasets for Green AI evaluation may help drive broader participation.

As methods and infrastructure for Green AI continue maturing, the community must revisit benchmark design to ensure existing suites capture new techniques and scenarios well. Tracking the evolving landscape through regular benchmark updates and reviews will be important to maintain representative comparisons over time. Community efforts for benchmark curation can enable sustainable benchmark suites that stand the test of time. Comprehensive benchmark suites owned by research communities or neutral third parties like MLCommons may encourage wider participation and standardization.

16.10 Case Study: Google’s 4Ms

Over the past decade, AI has rapidly moved from academic research to large-scale production systems powering numerous Google products and services. As AI models and workloads have grown exponentially in size and computational demands, concerns have emerged about their energy consumption and carbon footprint. Some researchers predicted runaway growth in ML’s energy appetite that could outweigh efficiencies gained from improved algorithms and hardware (Thompson et al. 2021).

However, Google’s production data reveals a different story: AI represented a steady 10-15% of total company energy usage from 2019 to 2021. This case study analyzes how Google applied a systematic approach built on four best practices, which it terms the “4 Ms” (model efficiency, machine optimization, mechanization through cloud computing, and mapping to green locations), to bend the curve on emissions from AI workloads.

The scale of Google’s AI usage makes it an ideal case study. In 2021 alone, the company trained models like the 1.2 trillion-parameter GLaM model. Analyzing how the growth of AI was paired with rapid efficiency gains in this environment provides a blueprint for the broader AI field to follow.

By transparently publishing detailed energy usage statistics, adoption rates of carbon-free clouds, renewables purchases, and more, alongside its technical innovations, Google has enabled outside researchers to measure progress accurately. Their study in the ACM CACM (Patterson et al. 2022) highlights how the company’s multipronged approach shows that predictions of runaway AI energy consumption can be overcome by focusing engineering efforts on sustainable development patterns. The pace of improvements also suggests ML’s efficiency gains are just starting.

16.10.1 Google’s 4M Best Practices

To curb emissions from their rapidly expanding AI workloads, Google engineers systematically identified four best practice areas–termed the “4 Ms”–where optimizations could compound to reduce the carbon footprint of ML:

- Model: Selecting efficient AI model architectures can reduce computation by 5-10X with no loss in model quality. Google has extensively researched developing sparse models and neural architecture search to create more efficient models like the Evolved Transformer and Primer.

- Machine: Using hardware optimized for AI over general-purpose systems improves performance per watt by 2-5X. Google’s Tensor Processing Units (TPUs) led to 5-13X better carbon efficiency versus GPUs not optimized for ML.

- Mechanization: Leveraging cloud computing systems tailored for high utilization, rather than conventional on-premise data centers, reduces energy costs by 1.4-2X. Google cites its data centers’ power usage effectiveness as outpacing industry averages.

- Map: Choosing data center locations with low-carbon electricity reduces gross emissions by another 5-10X. Google provides real-time maps highlighting the percentage of renewable energy used by its facilities.

Together, these practices created drastic compound efficiency gains. For example, optimizing the Transformer AI model on TPUs in a sustainable data center location cut energy use by a factor of 83 and lowered \(\textrm{CO}_2\) emissions by a factor of 747.
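To see why the gains compound, the per-practice ranges listed above can be multiplied together. The following is a minimal sketch, assuming the four factors are independent and purely multiplicative, and that the Map factor affects emissions only and not energy use. The names `FOUR_M_FACTORS` and `compound_range` are illustrative, not part of any Google tooling.

```python
from math import prod

# Reduction ranges (low, high) quoted in the 4M list above.
FOUR_M_FACTORS = {
    "model": (5.0, 10.0),
    "machine": (2.0, 5.0),
    "mechanization": (1.4, 2.0),
    "map": (5.0, 10.0),
}


def compound_range(names):
    """Multiply the low and high ends of the selected factors.

    Assumes the practices are independent, so their savings multiply.
    """
    low = prod(FOUR_M_FACTORS[name][0] for name in names)
    high = prod(FOUR_M_FACTORS[name][1] for name in names)
    return low, high


# Assumption: energy savings come from the first three practices only;
# choosing a low-carbon location changes emissions, not energy drawn.
energy_low, energy_high = compound_range(["model", "machine", "mechanization"])
carbon_low, carbon_high = compound_range(
    ["model", "machine", "mechanization", "map"]
)

print(f"Energy reduction range: {energy_low:.0f}x to {energy_high:.0f}x")
print(f"Emissions reduction range: {carbon_low:.0f}x to {carbon_high:.0f}x")
```

Under these assumptions, the reported Transformer figures (83 for energy, 747 for \(\textrm{CO}_2\)) fall inside the computed ranges, which is consistent with the four practices stacking rather than overlapping.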

16.10.2 Significant Results

Despite exponential growth in AI adoption across products and services, Google’s efforts to improve the carbon efficiency of ML have produced measurable gains, helping to restrain overall energy appetite. One key data point highlighting this progress is that AI workloads have remained a steady 10% to 15% of total company energy use from 2019 to 2021. As AI became integral to more Google offerings, overall compute cycles dedicated to AI grew substantially. However, efficiencies in algorithms, specialized hardware, data center design, and flexible geography allowed sustainability to keep pace—with AI representing just a fraction of total data center electricity over years of expansion.

Other case studies underscore how an engineering focus on sustainable AI development patterns enabled rapid quality improvements in lockstep with environmental gains. For example, the natural language processing model GPT-3 was viewed as state-of-the-art in mid-2020. Yet GLaM, which followed roughly 18 months later, improved accuracy while cutting training compute needs and using cleaner data center energy, reducing \(\textrm{CO}_2\) emissions by a factor of 14.

Similarly, Google found that past published speculation missed the mark on ML’s energy appetite by factors of 100 to 100,000X due to a lack of real-world metrics. By transparently tracking the impact of its optimizations, Google hopes to motivate efficiency while preventing overestimated extrapolations about ML’s environmental toll.

These data-driven case studies show how companies like Google are steering AI advancements toward sustainable trajectories by improving efficiency fast enough to outpace adoption growth. With further efforts around lifecycle analysis, inference optimization, and renewable expansion, companies can aim to accelerate progress; the gains so far suggest that ML’s clean potential is only beginning to be unlocked.

16.10.3 Further Improvements

While Google has made measurable progress in restraining the carbon footprint of its AI operations, the company recognizes further efficiency gains will be vital for responsible innovation given the technology’s ongoing expansion.

One area of focus is showing how advances that are often incorrectly viewed as increasing unsustainable computing, such as neural architecture search (NAS) for finding optimized models, spur downstream savings that outweigh their upfront costs. Although NAS expends more energy on model discovery than hand-engineering does, it cuts lifetime emissions by producing efficient designs that can be reused across countless applications.
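One rough way to make this amortization argument concrete is a break-even inequality. The symbols are illustrative assumptions rather than quantities from Google’s analysis: \(E_{\textrm{search}}\) is the one-time energy spent on the architecture search, \(e_{\textrm{hand}}\) and \(e_{\textrm{NAS}}\) are the energy per downstream use of a hand-designed and a NAS-discovered model, and \(N\) is the number of downstream uses. The search pays for itself when

\[
E_{\textrm{search}} + N\, e_{\textrm{NAS}} < N\, e_{\textrm{hand}}
\quad\Longleftrightarrow\quad
N > \frac{E_{\textrm{search}}}{e_{\textrm{hand}} - e_{\textrm{NAS}}},
\qquad \textrm{given } e_{\textrm{NAS}} < e_{\textrm{hand}}.
\]

The more widely a discovered architecture is reused, the more easily this threshold is cleared.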

Additionally, the analysis reveals that focusing sustainability efforts on data center and server-side optimization makes sense, given that these dominate energy draw compared with consumer devices. Though Google also works to shrink inference impacts on processors such as those in mobile phones, priority rests on improving training cycles and data center renewables procurement for maximal effect.

To that end, Google’s progress in pooling computing in efficiently designed cloud facilities highlights the value of scale and centralization. As more workloads shift away from inefficient on-premise servers, internet giants’ prioritization of renewable energy (Google and Facebook have been matched 100% by renewables since 2017 and 2020, respectively) unlocks compounding emissions cuts.

Together, these efforts emphasize that while there is no room for complacency, Google’s multipronged approach shows that AI efficiency improvements are accelerating. Cross-domain initiatives around lifecycle assessment, carbon-conscious development patterns, transparency, and matching rising AI demand with clean electricity supply pave a path toward bending the curve further as adoption grows. The company’s results encourage the broader field to replicate these integrated sustainability pursuits.

16.11 Embedded AI - Internet of Trash

While much attention has focused on making the immense data centers powering AI more sustainable, an equally pressing concern is the movement of AI capabilities into smart edge devices and endpoints. Edge/embedded AI allows near real-time responsiveness without connectivity dependencies. It also reduces transmission bandwidth needs. However, the proliferation of tiny devices brings risks of its own.

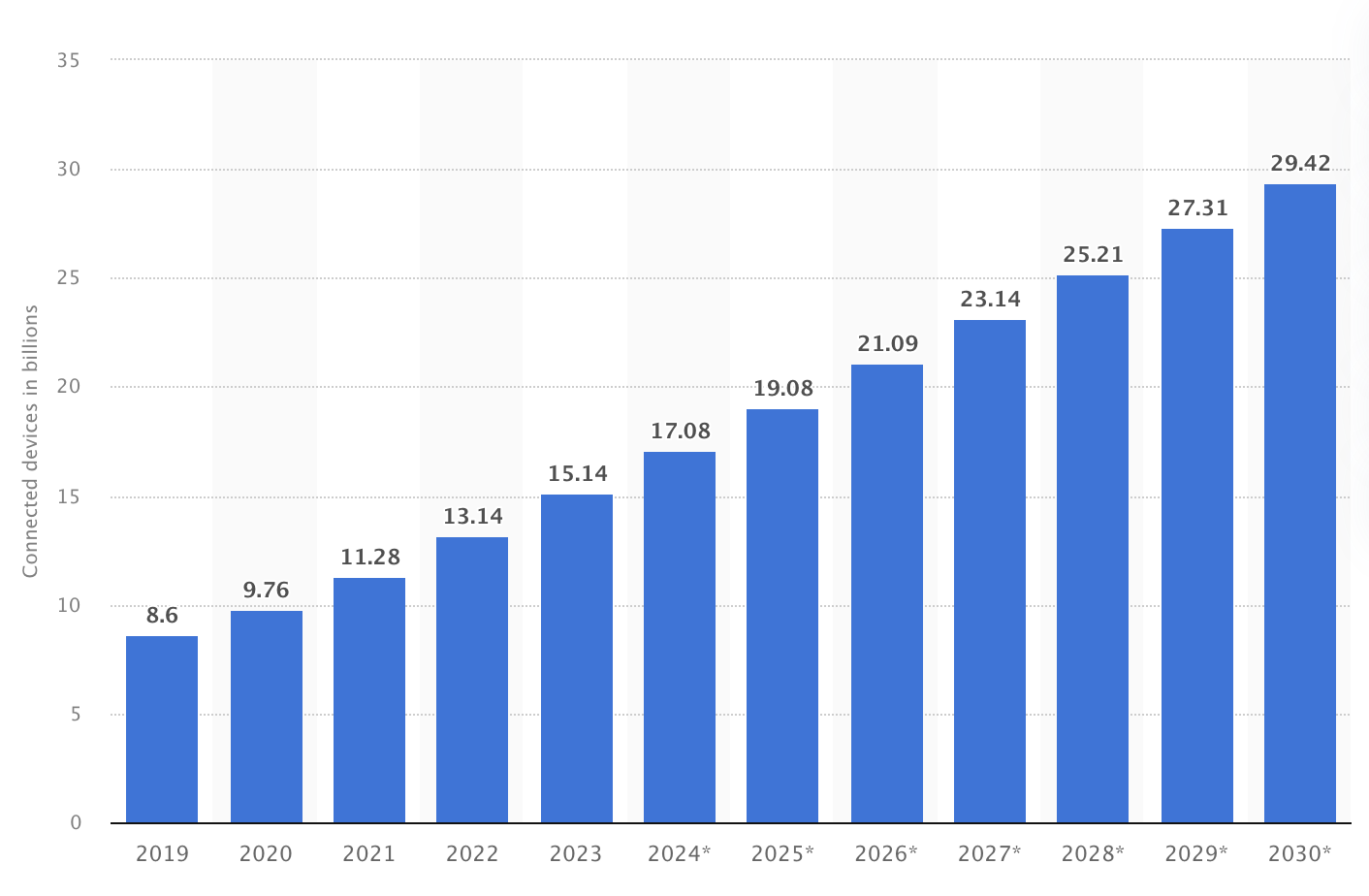

Tiny computers, microcontrollers, and custom ASICs powering edge intelligence face size, cost, and power limitations that rule out high-end GPUs used in data centers. Instead, they require optimized algorithms and extremely compact, energy-efficient circuitry to run smoothly. However, engineering for these microscopic form factors opens up risks around planned obsolescence, disposability, and waste. Figure 16.8 shows that the number of IoT devices is projected to reach 30 billion connected devices by 2030.

End-of-life handling of internet-connected gadgets embedded with sensors and AI remains an often overlooked issue during design. However, these products permeate consumer goods, vehicles, public infrastructure, industrial equipment, and more.

16.11.1 E-waste

Electronic waste, or e-waste, refers to discarded electrical equipment and components that enter the waste stream. This includes devices that have to be plugged in, have a battery, or contain electrical circuitry. With the rising adoption of internet-connected smart devices and sensors, e-waste volumes are increasing rapidly each year. These proliferating gadgets contain toxic heavy metals like lead, mercury, and cadmium that become environmental and health hazards when improperly disposed of.

The amount of electronic waste being produced is growing at an alarming rate. Today, we already produce 50 million tonnes per year. By 2030, that figure is projected to jump to a staggering 75 million tonnes as consumer electronics consumption continues to accelerate, and global e-waste production is projected to reach 120 million tonnes annually by 2050 (Un and Forum 2019). The soaring production and short lifecycles of our gadgets, from smartphones and tablets to internet-connected devices and home appliances, fuel this crisis.
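The projections above imply a compound annual growth rate, which can be computed directly. This is a minimal sketch under a stated assumption: the text gives no year for "today", so `BASELINE_YEAR` is set to 2019 (the year of the cited report) purely for illustration. The function and variable names are hypothetical.

```python
def annual_growth_rate(start: float, end: float, years: int) -> float:
    """Compound annual growth rate implied by growing from start to end."""
    if start <= 0 or end <= 0 or years <= 0:
        raise ValueError("start, end, and years must all be positive")
    return (end / start) ** (1 / years) - 1


# Assumption: "today" in the text is taken to be 2019, the report year.
BASELINE_YEAR = 2019

# Annual e-waste volumes in millions of tonnes, as quoted in the text.
projections_mt = {BASELINE_YEAR: 50.0, 2030: 75.0, 2050: 120.0}


def growth_between(projections):
    """Implied annual growth rate between each pair of consecutive years."""
    years = sorted(projections)
    return {
        (first, second): annual_growth_rate(
            projections[first], projections[second], second - first
        )
        for first, second in zip(years, years[1:])
    }


rates = growth_between(projections_mt)
for (first, second), rate in rates.items():
    print(f"{first}-{second}: {rate:.1%} per year")
```

Even growth rates of a few percent per year add tens of millions of tonnes annually once compounded over decades, which is why the absolute projections rise so steeply.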

Developing nations are being hit the hardest because they lack the infrastructure to process obsolete electronics safely. In 2019, formal e-waste recycling rates in poorer countries ranged from 13% to 23%. The remainder ends up illegally dumped, burned, or crudely dismantled, releasing toxic materials into the environment and harming workers and local communities. Clearly, more needs to be done to build global capacity for ethical and sustainable e-waste management, or we risk irreversible damage.

The danger is that crude handling of electronics to strip valuables exposes marginalized workers and communities to noxious fumes from burnt plastics and metals. Lead poses especially high risks to child development if ingested or inhaled. Overall, only about 20% of e-waste produced was collected using environmentally sound methods, according to UN estimates (Un and Forum 2019). Solutions for responsible lifecycle management are therefore urgently required to contain unsafe disposal as volumes soar.

16.11.2 Disposable Electronics

The rapidly falling costs of microcontrollers, tiny rechargeable batteries, and compact communication hardware have enabled the embedding of intelligent sensor systems throughout everyday consumer goods. These internet-of-things (IoT) devices monitor product conditions, user interactions, and environmental factors to enable real-time responsiveness, personalization, and data-driven business decisions in the evolving connected marketplace.

However, these embedded electronics face little oversight or planning around sustainably handling their eventual disposal once the often plastic-encased products are discarded after brief lifetimes. IoT sensors now commonly reside in single-use items like water bottles, food packaging, prescription bottles, and cosmetic containers that overwhelmingly enter landfill waste streams after a few weeks to months of consumer use.

The problem accelerates as more manufacturers rush to integrate mobile chips, power sources, Bluetooth modules, and other modern silicon ICs, each costing under US$1, into various merchandise without protocols for recycling, battery replacement, or component reuse. Despite their small individual size, the sheer volume of these devices and their lifetime waste burden loom large. Unlike for larger electronics, few policy constraints exist around materials requirements or toxicity in tiny disposable gadgets.

While these devices offer convenience during their working lives, they are difficult to retrieve and have few safe breakdown mechanisms. This unsustainable combination means disposable connected devices will contribute an outsized share of future e-waste volumes, and the problem needs urgent attention.

16.11.3 Planned Obsolescence

Planned obsolescence refers to the intentional design strategy of manufacturing products with artificially limited lifetimes that quickly become non-functional or outdated. This spurs faster replacement purchase cycles as consumers find devices no longer meet their needs within a few years. However, electronics designed for premature obsolescence contribute to unsustainable e-waste volumes.

For example, gluing smartphone batteries and components together hinders repairability compared to modular, accessible assemblies. Rolling out software updates that deliberately slow system performance creates the perception that devices produced only a few years earlier need replacing.

Likewise, fashionable introductions of new product generations with minor but exclusive feature additions make prior versions rapidly seem dated. These tactics compel buying new gadgets (e.g., iPhones) long before the old ones stop working. When multiplied across fast-paced electronics categories, billions of barely used items are discarded annually.

Planned obsolescence thus intensifies resource utilization and waste creation by producing goods that were never intended to last. This contradicts sustainability principles around durability, reuse, and material conservation. While stimulating continuous sales and gains for manufacturers in the short term, the strategy externalizes environmental costs and toxins onto communities lacking proper e-waste processing infrastructure.