17 Robust AI


The development of robust machine learning systems has become increasingly crucial. As these systems are deployed in various critical applications, from autonomous vehicles to healthcare diagnostics, ensuring their resilience to faults and errors is paramount.

Robust AI, in the context of hardware faults, software faults, and errors, plays an important role in maintaining the reliability, safety, and performance of machine learning systems. By addressing the challenges posed by transient, permanent, and intermittent hardware faults (Ahmadilivani et al. 2024), as well as bugs, design flaws, and implementation errors in software (H. Zhang 2008), robust AI techniques enable machine learning systems to operate effectively even in adverse conditions.

This chapter explores the fundamental concepts, techniques, and tools for building fault-tolerant and error-resilient machine learning systems. It empowers researchers and practitioners to develop AI solutions that can withstand the complexities and uncertainties of real-world environments.

Understand the importance of robust and resilient AI systems in real-world applications.

Identify and characterize hardware faults, software faults, and their impact on ML systems.

Recognize and develop defensive strategies against threats posed by adversarial attacks, data poisoning, and distribution shifts.

Learn techniques for detecting, mitigating, and designing fault-tolerant ML systems.

Become familiar with tools and frameworks for studying and enhancing ML system resilience throughout the AI development lifecycle.

17.1 Introduction

Robust AI refers to a system’s ability to maintain its performance and reliability in the presence of errors. A robust machine learning system is designed to be fault-tolerant and error-resilient, capable of operating effectively even under adverse conditions.

As ML systems become increasingly integrated into various aspects of our lives, from cloud-based services to edge devices and embedded systems, the impact of hardware and software faults on their performance and reliability becomes more significant. In the future, as ML systems become more complex and are deployed in even more critical applications, the need for robust and fault-tolerant designs will be paramount.

ML systems are expected to play crucial roles in domains such as autonomous vehicles, smart cities, healthcare, and industrial automation. In these domains, the consequences of hardware or software faults can be severe, potentially leading to loss of life, economic damage, or environmental harm.

Researchers and engineers must focus on developing advanced techniques for fault detection, isolation, and recovery to mitigate these risks and ensure the reliable operation of future ML systems.

This chapter will focus specifically on three main categories of faults and errors that can impact the robustness of ML systems: hardware faults, model robustness, and software faults.

Hardware Faults: Transient, permanent, and intermittent faults can affect the hardware components of an ML system, corrupting computations and degrading performance.

Model Robustness: ML models can be vulnerable to adversarial attacks, data poisoning, and distribution shifts, which can induce targeted misclassifications, skew the model’s learned behavior, or compromise the system’s integrity and reliability.

Software Faults: Bugs, design flaws, and implementation errors in the software components, such as algorithms, libraries, and frameworks, can propagate errors and introduce vulnerabilities.

The specific challenges and approaches to achieving robustness may vary depending on the scale and constraints of the ML system. Large-scale cloud computing or data center systems may focus on fault tolerance and resilience through redundancy, distributed processing, and advanced error detection and correction techniques. In contrast, resource-constrained edge devices or embedded systems face unique challenges due to limited computational power, memory, and energy resources.

Regardless of the scale and constraints, the key characteristics of a robust ML system include fault tolerance, error resilience, and performance maintenance. By understanding and addressing the multifaceted challenges to robustness, we can develop trustworthy and reliable ML systems that can navigate the complexities of real-world environments.

This chapter does more than survey the tools, frameworks, and techniques for detecting and mitigating faults, attacks, and distribution shifts in ML systems. It also emphasizes the role each practitioner plays in prioritizing resilience throughout the AI development lifecycle, from data collection and model training to deployment and monitoring. By proactively addressing the challenges to robustness, we can unlock the full potential of ML technologies while ensuring their safe, reliable, and responsible deployment in real-world applications.

As AI continues to shape our future, the potential of ML technologies is immense. But it’s only when we build resilient systems that can withstand the challenges of the real world that we can truly harness this potential. This is a defining factor in the success and societal impact of this transformative technology, and it’s within our reach.

17.2 Real-World Examples

Here are some real-world examples of cases where faults in hardware or software have caused major issues in ML systems across cloud, edge, and embedded environments:

17.2.1 Cloud

In February 2017, Amazon Web Services (AWS) experienced a significant outage due to human error during maintenance. An engineer inadvertently entered an incorrect command, causing many servers to be taken offline. This outage disrupted many AWS services, including Amazon’s AI-powered assistant, Alexa. As a result, Alexa-powered devices, such as Amazon Echo and third-party products using Alexa Voice Service, could not respond to user requests for several hours. This incident highlights the potential impact of human errors on cloud-based ML systems and the need for robust maintenance procedures and failsafe mechanisms.

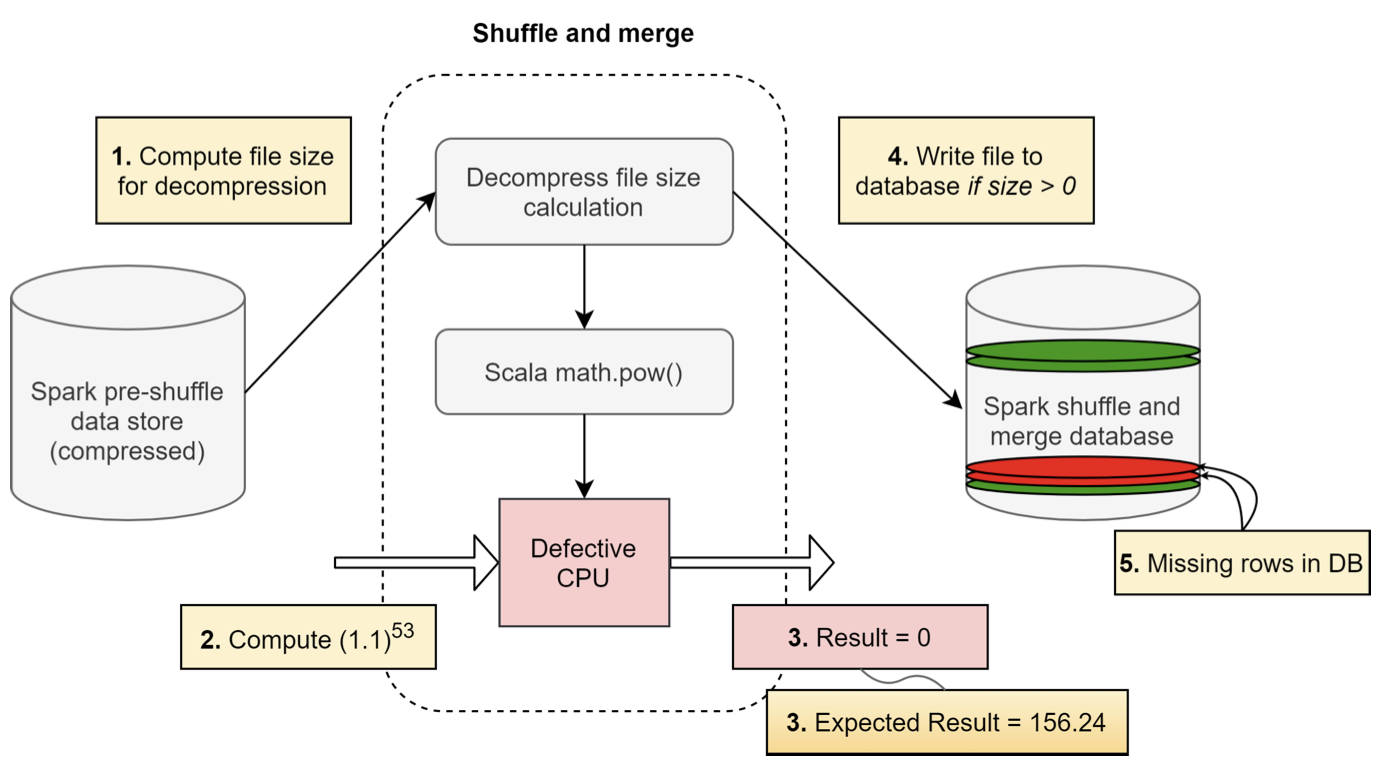

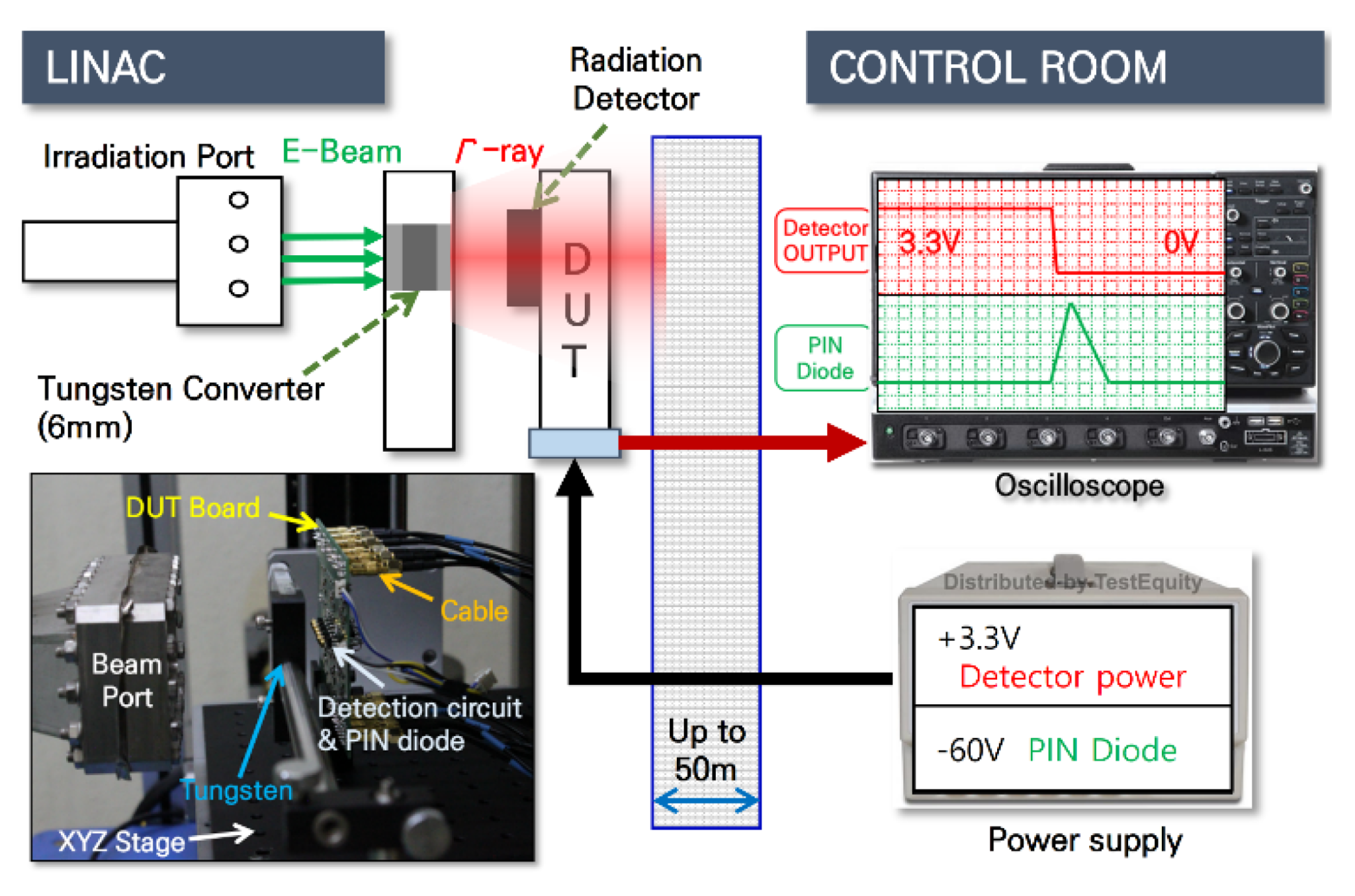

In another example (Vangal et al. 2021), Facebook encountered a silent data corruption (SDC) issue within its distributed querying infrastructure, as shown in Figure 17.1. Facebook’s infrastructure includes a querying system that fetches and executes SQL and SQL-like queries across multiple datasets using frameworks like Presto, Hive, and Spark. One of the applications that utilized this querying infrastructure was a compression application to reduce the footprint of data stores. In this compression application, files were compressed when not being read and decompressed when a read request was made. Before decompression, the file size was checked to ensure it was greater than zero, indicating a valid compressed file with contents.

However, in one instance, when the file size was being computed for a valid non-zero-sized file, the decompression algorithm invoked a power function from the Scala library. Unexpectedly, the Scala function returned a zero size value for the file despite having a known non-zero decompressed size. As a result, the decompression was not performed, and the file was not written to the output database. This issue manifested sporadically, with some occurrences of the same file size computation returning the correct non-zero value.
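The failure path described above can be sketched in a few lines. This is a simplified illustration, not Facebook's code: `decompress_all`, `healthy_size`, and `faulty_size` are hypothetical names, and the faulty function simply reports 0 for one file to stand in for the corrupted computation.

```python
def healthy_size(name, sizes):
    """Return the recorded decompressed size of a file."""
    return sizes[name]

def faulty_size(name, sizes):
    """Stand-in for a corrupted computation: wrongly reports 0 for one file."""
    return 0 if name == "b.dat" else sizes[name]

def decompress_all(sizes, size_fn):
    """Write a file to the output only if its computed size is greater than zero."""
    output = []
    for name in sizes:
        if size_fn(name, sizes) > 0:   # validity check before decompression
            output.append(name)        # stands in for "decompress and store"
        # Otherwise the file is skipped and no error is raised.
    return output

sizes = {"a.dat": 120, "b.dat": 156, "c.dat": 64}  # hypothetical files
good = decompress_all(sizes, healthy_size)
bad = decompress_all(sizes, faulty_size)
missing = sorted(set(good) - set(bad))
print(missing)
```

Because the skipped file raises no exception, the only visible symptom is a file missing from the output, which is what makes this class of fault "silent".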

The impact of this silent data corruption was significant, leading to missing files and incorrect data in the output database. The application relying on the decompressed files failed due to the data inconsistencies. The scale of the affected infrastructure made the problem especially serious: according to the case study, it consists of hundreds of thousands of servers handling billions of requests per day from Facebook’s massive user base, and it processes user queries, image uploads, and media content that require fast, reliable, and secure execution.

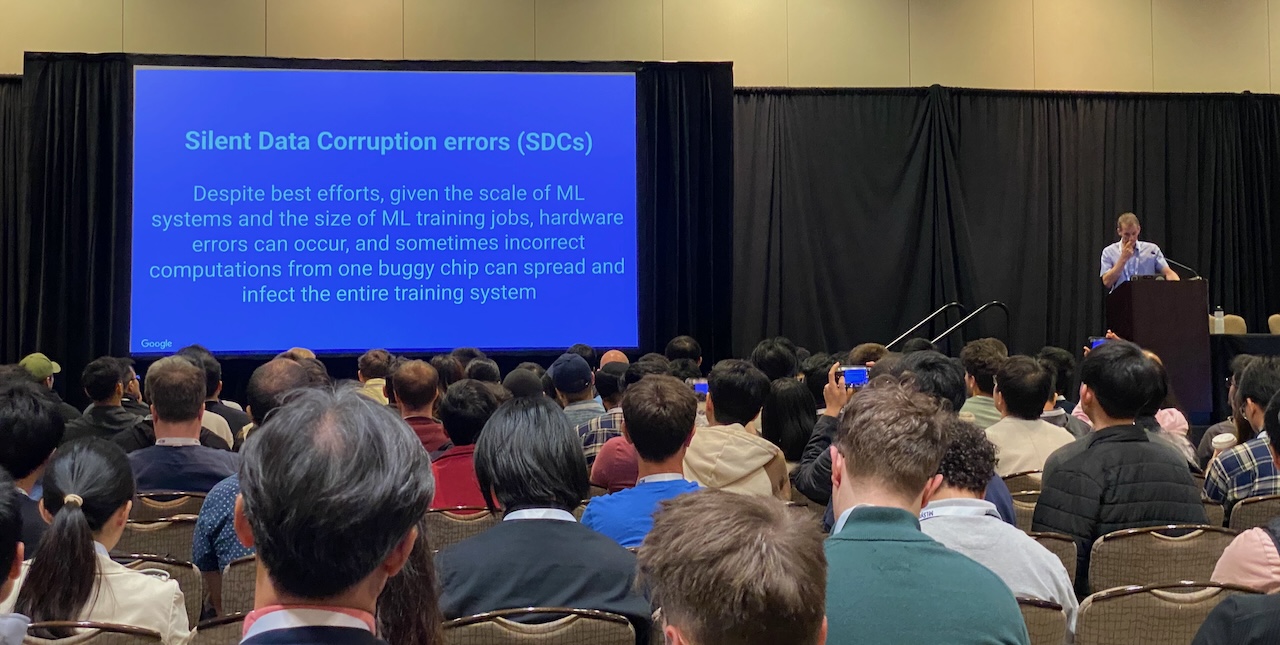

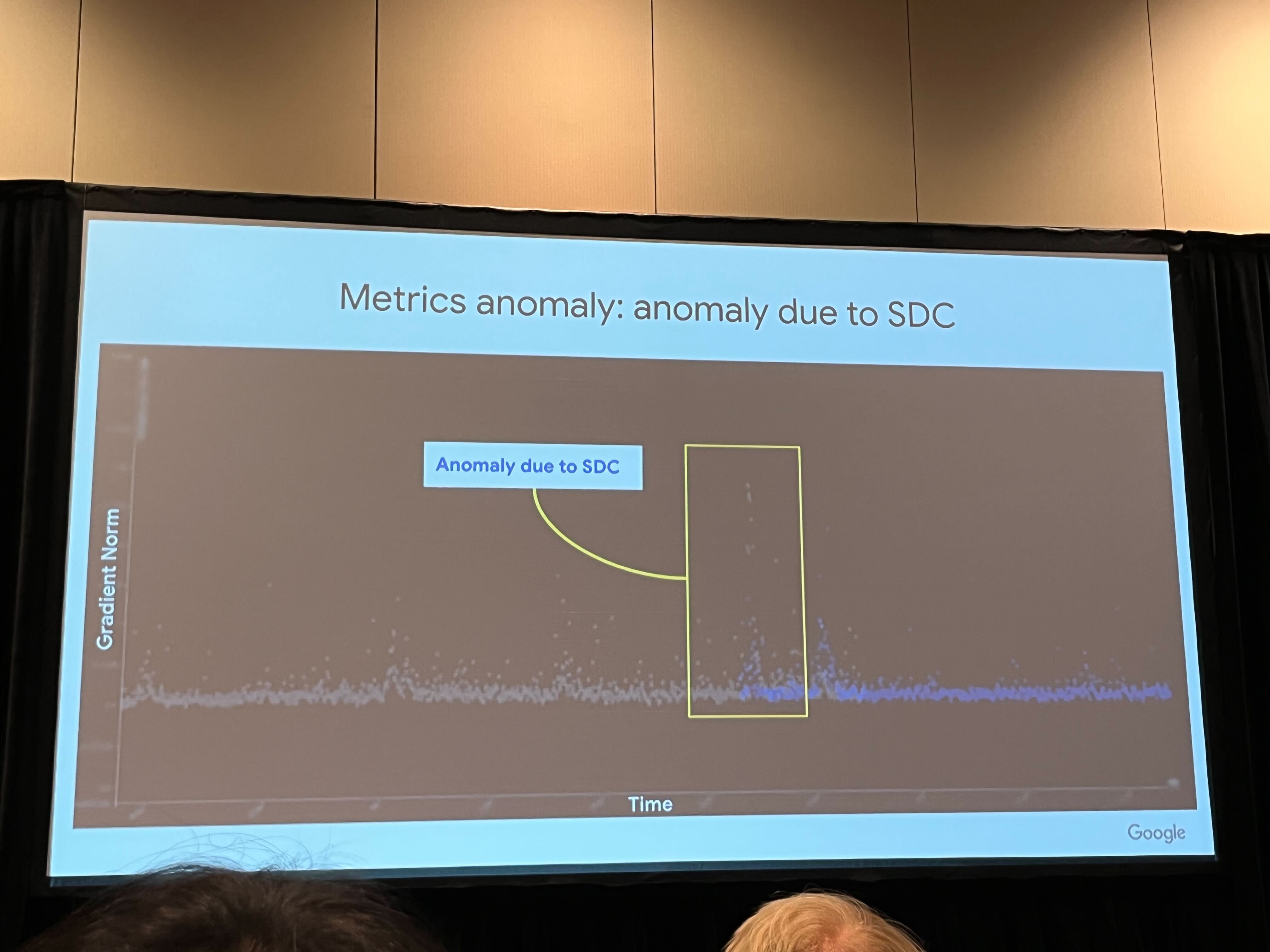

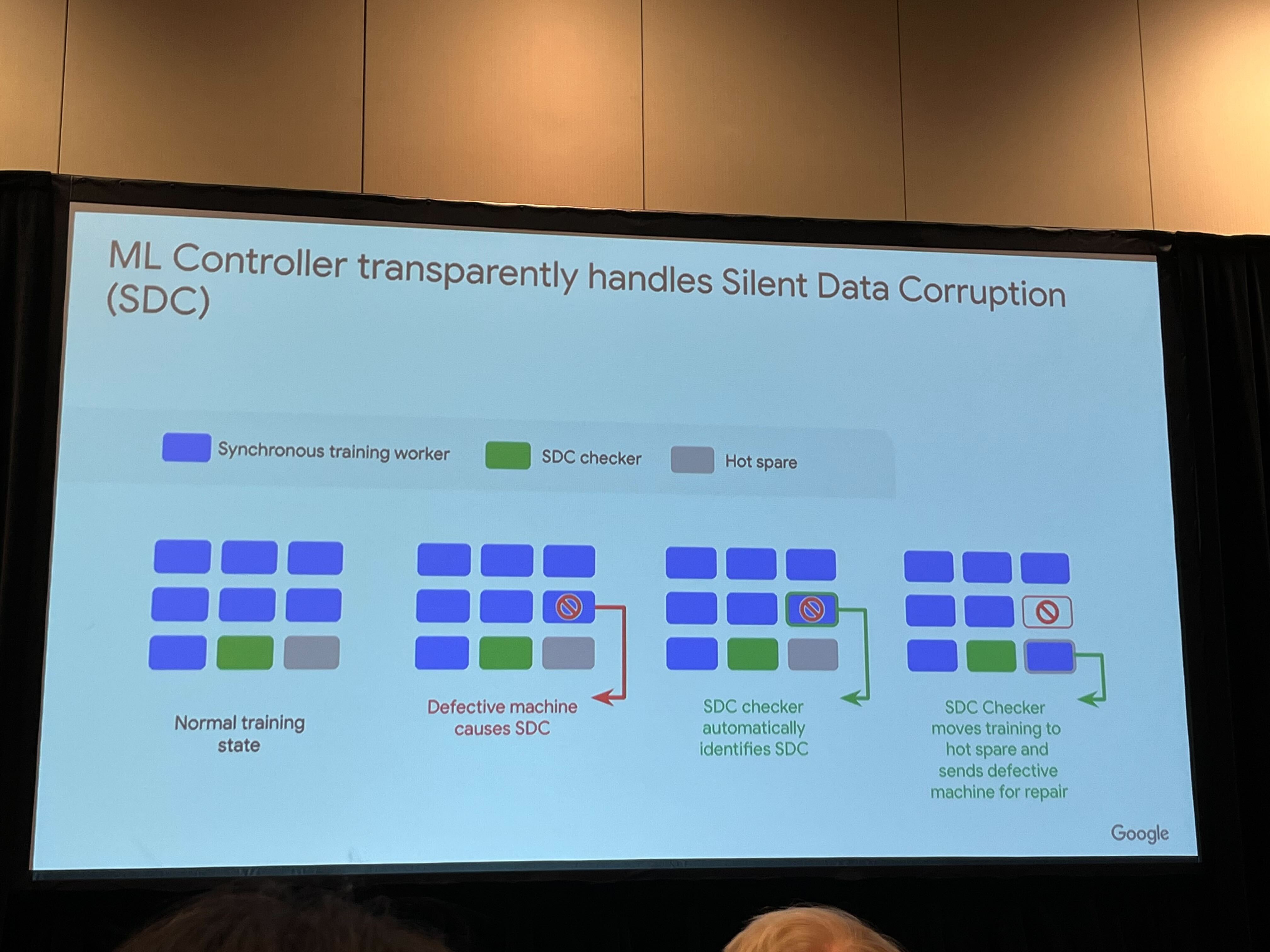

This case study illustrates how silent data corruption can propagate through multiple layers of an application stack, leading to data loss and application failures in a large-scale distributed system. The intermittent nature of the issue and the lack of explicit error messages made it particularly challenging to diagnose and resolve. The problem is not restricted to Meta (formerly Facebook); other companies that operate AI hypercomputers, such as Google, face the same challenge. In Figure 17.2, Jeff Dean, Chief Scientist at Google DeepMind and Google Research, discusses SDCs and their impact on ML systems.

17.2.2 Edge

Among edge ML systems, one area that has garnered significant attention is self-driving cars. Self-driving vehicles rely heavily on machine learning algorithms for perception, decision-making, and control, making them particularly susceptible to the impact of hardware and software faults. In recent years, several high-profile incidents involving autonomous vehicles have highlighted the challenges and risks associated with deploying these systems in real-world environments.

In May 2016, a fatal accident occurred when a Tesla Model S operating on Autopilot crashed into a white semi-trailer truck crossing the highway. The Autopilot system, which relied on computer vision and machine learning algorithms, failed to recognize the white trailer against a bright sky background. The driver, who was reportedly watching a movie at the time of the crash, did not intervene in time, and the vehicle collided with the trailer at full speed. This incident raised concerns about the limitations of AI-based perception systems and the need for robust failsafe mechanisms in autonomous vehicles. It also highlighted the importance of driver awareness and the need for clear guidelines on using semi-autonomous driving features, as shown in Figure 17.3.

In March 2018, an Uber self-driving test vehicle struck and killed a pedestrian crossing the street in Tempe, Arizona. The incident was caused by a software flaw in the vehicle’s object recognition system, which failed to classify the pedestrian as an obstacle to be avoided. The safety driver, who was supposed to monitor the vehicle’s operation and intervene if necessary, was found to have been distracted at the time of the crash. This incident led to widespread scrutiny of Uber’s self-driving program and raised questions about the readiness of autonomous vehicle technology for public roads. It also emphasized the need for rigorous testing, validation, and safety measures in developing and deploying AI-based self-driving systems.

In 2021, Tesla faced increased scrutiny following several accidents involving vehicles operating in Autopilot mode. Some of these accidents were attributed to issues with the Autopilot system’s ability to detect and respond to certain road situations, such as stationary emergency vehicles or obstacles in the road. For example, in April 2021, a Tesla Model S crashed into a tree in Texas, killing two passengers. Initial reports suggested that no one was in the driver’s seat at the time of the crash, raising questions about the use and potential misuse of Autopilot features. These incidents highlight the ongoing challenges in developing robust and reliable autonomous driving systems and the need for clear regulations and consumer education regarding the capabilities and limitations of these technologies.

17.2.3 Embedded

Embedded systems, which often operate in resource-constrained environments and safety-critical applications, have long faced challenges related to hardware and software faults. As AI and machine learning technologies are increasingly integrated into these systems, the potential for faults and errors takes on new dimensions, with the added complexity of AI algorithms and the critical nature of the applications in which they are deployed.

Let’s consider a few examples, starting with outer space exploration. NASA’s Mars Polar Lander mission in 1999 suffered a catastrophic failure due to a software error in the touchdown detection system (Figure 17.4). The spacecraft’s onboard software mistakenly interpreted the noise from the deployment of its landing legs as a sign that it had touched down on the Martian surface. As a result, the spacecraft prematurely shut down its engines, causing it to crash into the surface. This incident highlights the critical importance of robust software design and extensive testing in embedded systems, especially those operating in remote and unforgiving environments. As AI capabilities are integrated into future space missions, ensuring these systems’ reliability and fault tolerance will be paramount to mission success.

Back on Earth, in 2015, the Federal Aviation Administration warned that a software bug in the Boeing 787 Dreamliner’s generator control units could cause a complete loss of AC electrical power in flight. This case underscores the potential for software faults to have severe consequences in complex embedded systems like aircraft. As AI technologies are increasingly applied in aviation, such as in autonomous flight systems and predictive maintenance, ensuring the robustness and reliability of these systems will be critical to passenger safety.

“If the four main generator control units (associated with the engine-mounted generators) were powered up at the same time, after 248 days of continuous power, all four GCUs will go into failsafe mode at the same time, resulting in a loss of all AC electrical power regardless of flight phase.” – Federal Aviation Administration directive (2015)
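The 248-day figure is consistent with a widely cited explanation that the directive itself does not state: a signed 32-bit counter incremented every 10 ms would overflow after roughly that long. The sketch below checks only the arithmetic; the counter width and tick period are assumptions.

```python
# Assumption (not stated in the FAA directive): a signed 32-bit counter
# that ticks once every 10 ms, i.e. 100 ticks per second.
MAX_INT32 = 2**31 - 1
TICKS_PER_SECOND = 100
SECONDS_PER_DAY = 24 * 60 * 60

days_until_overflow = MAX_INT32 / TICKS_PER_SECOND / SECONDS_PER_DAY
print(f"{days_until_overflow:.2f} days")
```

Under these assumptions the counter wraps a little more than 248 days after power-up, which is why periodically power-cycling the units would avoid the fault.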

As AI capabilities are increasingly integrated into embedded systems, the potential for faults and errors becomes more complex and severe. Consider a smart pacemaker that suddenly glitches: the patient could die as a result. AI algorithms, such as those used for perception, decision-making, and control, also introduce new sources of potential faults, including data-related issues, model uncertainties, and unexpected behaviors in edge cases. Moreover, the opaque nature of some AI models can make it challenging to identify and diagnose faults when they occur.

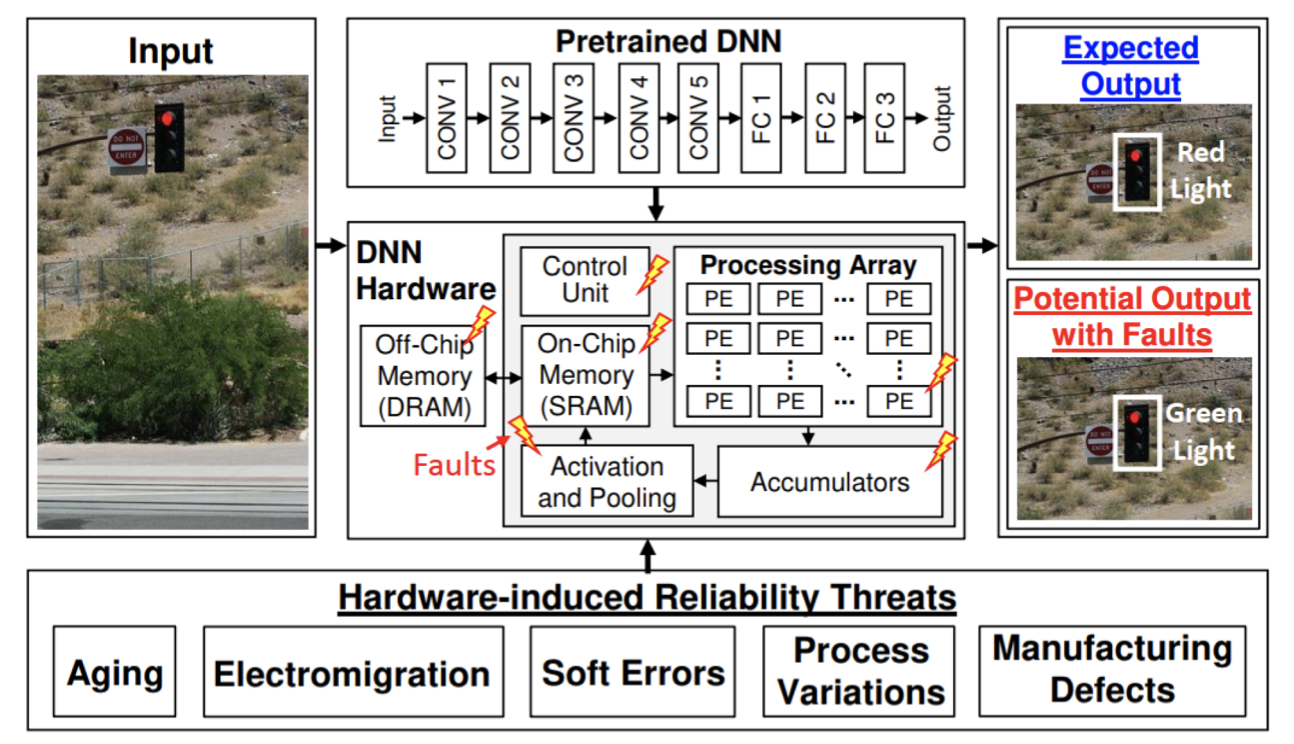

17.3 Hardware Faults

Hardware faults are a significant challenge in computing systems, including traditional and ML systems. These faults occur when physical components, such as processors, memory modules, storage devices, or interconnects, malfunction or behave abnormally. Hardware faults can cause incorrect computations, data corruption, system crashes, or complete system failure, compromising the integrity and trustworthiness of the computations performed by the system (Jha et al. 2019). A complete system failure refers to a situation where the entire computing system becomes unresponsive or inoperable due to a critical hardware malfunction. This type of failure is the most severe, as it renders the system unusable and may lead to data loss or corruption, requiring manual intervention to repair or replace the faulty components.

Understanding the taxonomy of hardware faults is essential for anyone working with computing systems, especially in the context of ML systems. ML systems rely on complex hardware architectures and large-scale computations to train and deploy models that learn from data and make intelligent predictions or decisions. However, hardware faults can introduce errors and inconsistencies in the MLOps pipeline, affecting the trained models’ accuracy, robustness, and reliability (G. Li et al. 2017).

Knowing the different types of hardware faults, their mechanisms, and their potential impact on system behavior is crucial for developing effective strategies to detect, mitigate, and recover from them. This knowledge is necessary for designing fault-tolerant computing systems, implementing robust ML algorithms, and ensuring the overall dependability of ML-based applications.

The following sections will explore the three main categories of hardware faults: transient, permanent, and intermittent. We will discuss their definitions, characteristics, causes, mechanisms, and examples of how they manifest in computing systems. We will also cover detection and mitigation techniques specific to each fault type.

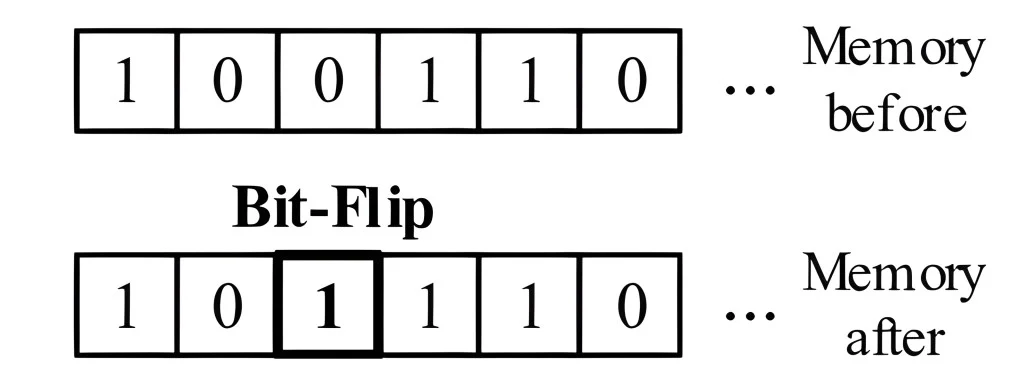

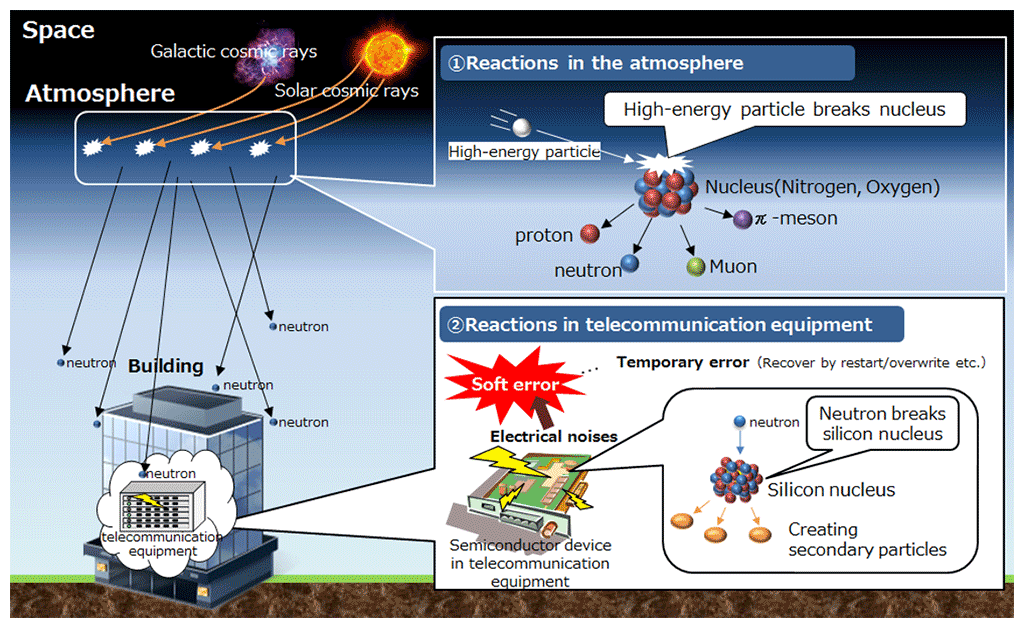

Transient Faults: Transient faults are temporary and non-recurring. They are often caused by external factors such as cosmic rays, electromagnetic interference, or power fluctuations. A common example of a transient fault is a bit flip, where a single bit in a memory location or register changes its value unexpectedly. Transient faults can lead to incorrect computations or data corruption, but they do not cause permanent damage to the hardware.

Permanent Faults: Permanent faults, also called hard errors, are irreversible and persist over time. They are typically caused by physical defects or wear-out of hardware components. Examples of permanent faults include stuck-at faults, where a bit or signal is permanently set to a specific value (e.g., always 0 or always 1), and device failures, such as a malfunctioning processor or a damaged memory module. Permanent faults can result in complete system failure or significant performance degradation.

Intermittent Faults: Intermittent faults recur sporadically, appearing and disappearing over time. They are often caused by unstable hardware conditions, such as loose connections, aging components, or manufacturing defects. Intermittent faults can be challenging to diagnose and reproduce because they may occur only occasionally and under specific conditions. Examples include intermittent short circuits or contact resistance issues. Intermittent faults can lead to unpredictable system behavior and sporadic errors.

By the end of this discussion, readers will have a solid understanding of fault taxonomy and its relevance to traditional computing and ML systems. This foundation will help them make informed decisions when designing, implementing, and deploying fault-tolerant solutions, improving the reliability and trustworthiness of their computing systems and ML applications.

17.3.1 Transient Faults

Transient faults in hardware can manifest in various forms, each with its own unique characteristics and causes. These faults are temporary in nature and do not result in permanent damage to the hardware components.

Definition and Characteristics

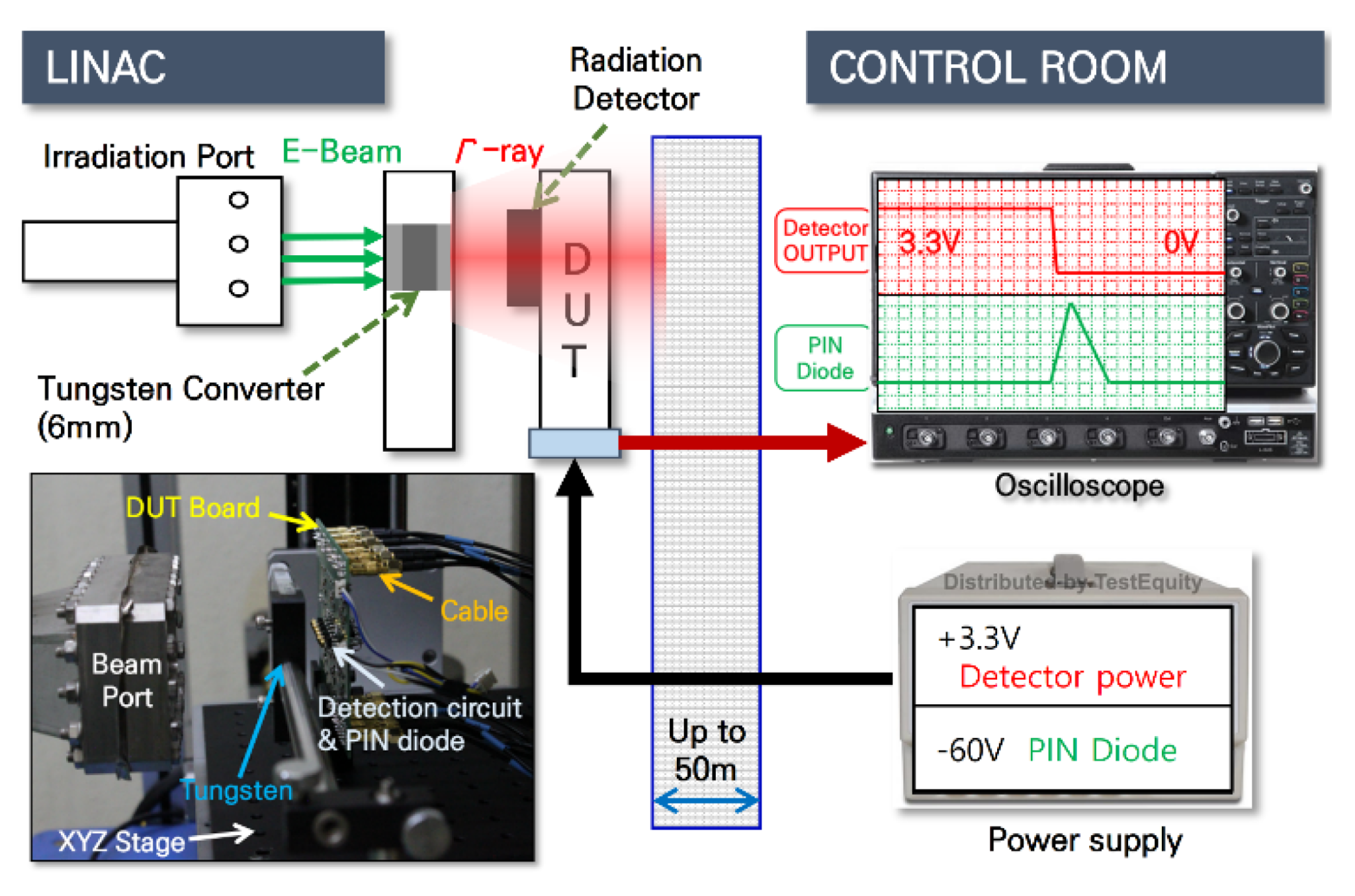

Some of the common types of transient faults include Single Event Upsets (SEUs) caused by ionizing radiation, voltage fluctuations (Reddi and Gupta 2013) due to power supply noise or electromagnetic interference, Electromagnetic Interference (EMI) induced by external electromagnetic fields, Electrostatic Discharge (ESD) resulting from sudden static electricity flow, crosstalk caused by unintended signal coupling, ground bounce triggered by simultaneous switching of multiple outputs, timing violations due to signal timing constraint breaches, and soft errors in combinational logic affecting the output of logic circuits (Mukherjee, Emer, and Reinhardt 2005). Understanding these different types of transient faults is crucial for designing robust and resilient hardware systems that can mitigate their impact and ensure reliable operation.

All of these transient faults are characterized by their short duration and non-permanent nature. They do not persist or leave any lasting impact on the hardware. However, they can still lead to incorrect computations, data corruption, or system misbehavior if not properly handled.

Causes of Transient Faults

Transient faults can be attributed to various external factors. One common cause is cosmic rays, high-energy particles originating from outer space. When these particles strike sensitive areas of the hardware, such as memory cells or transistors, they can induce charge disturbances that alter the stored or transmitted data. This is illustrated in Figure 17.5. Another cause of transient faults is electromagnetic interference (EMI) from nearby devices or power fluctuations. EMI can couple with the circuits and cause voltage spikes or glitches that temporarily disrupt the normal operation of the hardware.

Mechanisms of Transient Faults

Transient faults can manifest through different mechanisms depending on the affected hardware component. In memory devices like DRAM or SRAM, transient faults often lead to bit flips, where a single bit changes its value from 0 to 1 or vice versa. This can corrupt the stored data or instructions. In logic circuits, transient faults can cause glitches or voltage spikes propagating through the combinational logic, resulting in incorrect outputs or control signals. Transient faults can also affect communication channels, causing bit errors or packet losses during data transmission.

Impact on ML Systems

A common example of a transient fault is a bit flip in the main memory. If an important data structure or critical instruction is stored in the affected memory location, it can lead to incorrect computations or program misbehavior. For instance, a bit flip in the memory storing a loop counter can cause the loop to execute indefinitely or terminate prematurely. Transient faults in control registers or flag bits can alter the flow of program execution, leading to unexpected jumps or incorrect branch decisions. In communication systems, transient faults can corrupt transmitted data packets, resulting in retransmissions or data loss.

In ML systems, transient faults can have significant implications during the training phase (He et al. 2023). ML training involves iterative computations and updates to model parameters based on large datasets. If a transient fault occurs in the memory storing the model weights or gradients, it can lead to incorrect updates and compromise the convergence and accuracy of the training process. Figure 17.6 shows a real-world example from Google’s production fleet where an SDC anomaly caused a significant difference in the gradient norm.

For example, a bit flip in the weight matrix of a neural network can cause the model to learn incorrect patterns or associations, leading to degraded performance (Wan et al. 2021). Transient faults in the data pipeline, such as corruption of training samples or labels, can also introduce noise and affect the quality of the learned model.
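To see why a single flipped bit in a weight can matter so much, the following minimal sketch flips individual bits in the IEEE 754 single-precision encoding of a weight. The helper `flip_bit` and the weight value are illustrative assumptions, not taken from the cited studies.

```python
import struct

def flip_bit(value, bit):
    """Flip one bit (0 = least significant) of a float32 and return the new value."""
    (as_int,) = struct.unpack("<I", struct.pack("<f", value))
    (flipped,) = struct.unpack("<f", struct.pack("<I", as_int ^ (1 << bit)))
    return flipped

weight = 0.5
low = flip_bit(weight, 0)     # lowest mantissa bit: negligible change
high = flip_bit(weight, 30)   # highest exponent bit: enormous change
print(low, high)
```

The effect depends heavily on which bit is hit: a flip in a low mantissa bit barely perturbs the weight, while a flip in a high exponent bit turns 0.5 into 2^127, a value large enough to dominate any computation it feeds into.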

During the inference phase, transient faults can impact the reliability and trustworthiness of ML predictions. If a transient fault occurs in the memory storing the trained model parameters or in the computation of the inference results, it can lead to incorrect or inconsistent predictions. For instance, a bit flip in the activation values of a neural network can alter the final classification or regression output (Mahmoud et al. 2020).

In safety-critical applications, such as autonomous vehicles or medical diagnosis, transient faults during inference can have severe consequences, leading to incorrect decisions or actions (G. Li et al. 2017; Jha et al. 2019). Ensuring the resilience of ML systems against transient faults is crucial to maintaining the integrity and reliability of the predictions.

At the other extreme, in resource-constrained environments like TinyML, Binarized Neural Networks (BNNs) (Courbariaux et al. 2016) have emerged as a promising solution. BNNs represent network weights in single-bit precision, offering computational efficiency and faster inference times. However, this binary representation renders BNNs fragile to bit-flip errors on the network weights. For instance, prior work (Aygun, Gunes, and De Vleeschouwer 2021) has shown that a BNN with two hidden layers, trained on a simple task such as MNIST classification, drops from 98% to 70% test accuracy when random bit-flip soft errors are injected into the model weights with a 10% probability.
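The fault model in such experiments can be sketched as follows. This illustrates only the injection step, flipping each binary weight independently with probability p; it does not reproduce the cited accuracy figures, and `inject_bit_flips` is a hypothetical helper.

```python
import random

def inject_bit_flips(weights, p, rng):
    """Flip the sign of each binary weight (+1/-1) independently with probability p."""
    return [-w if rng.random() < p else w for w in weights]

rng = random.Random(0)  # fixed seed so the run is repeatable
weights = [rng.choice((-1, 1)) for _ in range(10_000)]
faulty = inject_bit_flips(weights, p=0.10, rng=rng)

flipped = sum(a != b for a, b in zip(weights, faulty))
print(f"flipped {flipped} of {len(weights)} weights")
```

In a binarized network, every flipped bit inverts a weight completely, since there are no lower-order bits to absorb the error. This is one intuition for why BNNs are sensitive to this fault model.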

Addressing such issues requires considering flip-aware training techniques or leveraging emerging computing paradigms (e.g., stochastic computing) to improve fault tolerance and robustness, which we will discuss in Section 17.3.4. Future research directions aim to develop hybrid architectures, novel activation functions, and loss functions tailored to bridge the accuracy gap compared to full-precision models while maintaining their computational efficiency.

17.3.2 Permanent Faults

Permanent faults are hardware defects that persist and cause irreversible damage to the affected components. These faults are characterized by their persistent nature and require repair or replacement of the faulty hardware to restore normal system functionality.

Definition and Characteristics

Permanent faults are hardware defects that cause persistent and irreversible malfunctions in the affected components. The faulty component remains non-operational until it is repaired or replaced. These faults are characterized by their consistent and reproducible nature, meaning that the faulty behavior is observed every time the affected component is used. Permanent faults can impact various hardware components, such as processors, memory modules, storage devices, or interconnects, leading to system crashes, data corruption, or complete system failure.

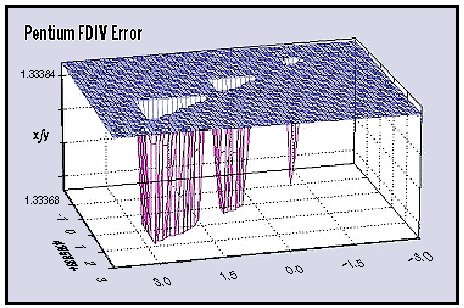

One notable example of a permanent fault is the Intel FDIV bug, which was discovered in 1994. The FDIV bug was a flaw in certain Intel Pentium processors’ floating-point division (FDIV) units. The bug caused incorrect results for specific division operations, leading to inaccurate calculations.

The FDIV bug occurred due to an error in the lookup table used by the division unit. In rare cases, the processor would fetch an incorrect value from the lookup table, resulting in a slightly less precise result than expected. For instance, Figure 17.7 shows the fraction 4195835/3145727 computed on a Pentium processor with the FDIV permanent fault. The triangular regions are where erroneous calculations occurred. Ideally, all correct values would round to 1.3338, but the erroneous results show 1.3337, indicating a mistake in the fifth significant digit.
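The size of the error for this division can be checked directly. In the sketch below, the correct quotient is computed on the host machine, while the flawed quotient is the widely reported output of affected Pentium chips, hard-coded here as an assumption rather than computed on faulty hardware.

```python
numerator, denominator = 4195835, 3145727

correct = numerator / denominator
# Widely reported result from affected Pentium processors (hard-coded
# assumption; this script does not run on faulty hardware).
flawed = 1.333739068902037589

print(round(correct, 4), round(flawed, 4))
relative_error = abs(correct - flawed) / correct
print(f"relative error: {relative_error:.1e}")
```

The relative error is on the order of 10^-5, far larger than the roughly 10^-16 precision expected from double-precision arithmetic.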

Although the error was small, it could compound over many division operations, leading to significant inaccuracies in mathematical calculations. The impact of the FDIV bug was significant, especially for applications that relied heavily on precise floating-point division, such as scientific simulations, financial calculations, and computer-aided design. The bug led to incorrect results, which could have severe consequences in fields like finance or engineering.

The Intel FDIV bug is a cautionary tale for the potential impact of permanent faults on ML systems. In the context of ML, permanent faults in hardware components can lead to incorrect computations, affecting the accuracy and reliability of the models. For example, if an ML system relies on a processor with a faulty floating-point unit, similar to the Intel FDIV bug, it could introduce errors in the calculations performed during training or inference.

These errors can propagate through the model, leading to inaccurate predictions or skewed learning. In applications where ML is used for critical tasks, such as autonomous driving, medical diagnosis, or financial forecasting, the consequences of incorrect computations due to permanent faults can be severe.

It is crucial for ML practitioners to be aware of the potential impact of permanent faults and to incorporate fault-tolerant techniques, such as hardware redundancy, error detection and correction mechanisms, and robust algorithm design, to mitigate the risks associated with these faults. Additionally, thorough testing and validation of ML hardware components can help identify and address permanent faults before they impact the system’s performance and reliability.
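Hardware redundancy is often realized as triple modular redundancy (TMR), in which three copies of a value or computation are compared and the majority wins. The sketch below illustrates the voting step in software; `majority_vote` and the bit patterns are illustrative assumptions, and real TMR is typically implemented in hardware.

```python
def majority_vote(a, b, c):
    """Bitwise majority of three redundant copies of an integer word."""
    return (a & b) | (a & c) | (b & c)

golden = 0b1011_0010
# One of the three copies comes from a faulty unit with a corrupted bit.
copies = [golden, golden ^ 0b0000_1000, golden]
recovered = majority_vote(*copies)
print(recovered == golden)
```

Because each output bit takes the value held by at least two of the three copies, a fault confined to a single copy is masked, whether it is transient or permanent. The cost is roughly three times the hardware for the protected component.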

Causes of Permanent Faults

Permanent faults can arise from several causes, including manufacturing defects and wear-out mechanisms. Manufacturing defects are inherent flaws introduced during the fabrication process of hardware components. These defects include improper etching, incorrect doping, or contamination, leading to non-functional or partially functional components.

On the other hand, wear-out mechanisms occur over time as the hardware components are subjected to prolonged use and stress. Factors such as electromigration, oxide breakdown, or thermal stress can cause gradual degradation of the components, eventually leading to permanent failures.

Mechanisms of Permanent Faults

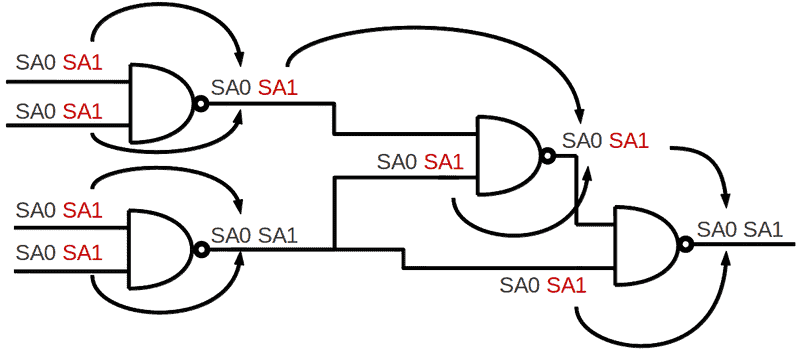

Permanent faults can manifest through various mechanisms, depending on the nature and location of the fault. Stuck-at faults (Seong et al. 2010) are common permanent faults where a signal or memory cell remains fixed at a particular value (either 0 or 1) regardless of the inputs, as illustrated in Figure 17.8.

Stuck-at faults can occur in logic gates, memory cells, or interconnects, causing incorrect computations or data corruption. Another mechanism is device failures, where a component, such as a transistor or a memory cell, completely ceases to function. This can be due to manufacturing defects or severe wear-out. Bridging faults occur when two or more signal lines are unintentionally connected, causing short circuits or incorrect logic behavior.
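A stuck-at fault in a memory cell can be modeled with two bit masks, one for bits stuck at 0 and one for bits stuck at 1. The class below is a minimal simulation under that assumption; `StuckAtMemory` is a hypothetical name used only for illustration.

```python
class StuckAtMemory:
    """One byte of memory in which selected bits are stuck at 0 or 1."""

    def __init__(self, stuck_at_0=0, stuck_at_1=0):
        self.stuck_at_0 = stuck_at_0  # mask of bits that always read 0
        self.stuck_at_1 = stuck_at_1  # mask of bits that always read 1
        self.value = 0

    def write(self, value):
        self.value = value & 0xFF

    def read(self):
        # Stuck bits override whatever was written.
        return (self.value & ~self.stuck_at_0 & 0xFF) | self.stuck_at_1

cell = StuckAtMemory(stuck_at_0=0b0000_0001, stuck_at_1=0b1000_0000)
cell.write(0b0101_0101)
print(f"{cell.read():08b}")
```

The fault corrupts data only when the written value disagrees with the stuck bits. Writing a value that already has bit 7 set and bit 0 clear reads back correctly, which is why stuck-at faults can go unnoticed until a particular data pattern exposes them.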

In addition to stuck-at faults, several other types of permanent faults in digital circuits can impact an ML system. Delay faults can cause the propagation delay of a signal to exceed the specified limit, leading to timing violations. Interconnect faults, such as open faults (broken wires), resistive faults (increased resistance), or capacitive faults (increased capacitance), can cause signal integrity issues or timing violations. Memory cells can also suffer from various faults, including transition faults (inability to change state), coupling faults (interference between adjacent cells), and neighborhood pattern sensitive faults (faults that depend on the values of neighboring cells). Other permanent faults can occur in the power supply network or the clock distribution network, affecting the functionality and timing of the circuit.

Impact on ML Systems

Permanent faults can severely affect the behavior and reliability of computing systems. For example, a stuck-at fault in a processor’s arithmetic logic unit (ALU) can cause incorrect computations, leading to erroneous results or system crashes. A permanent fault in a memory module, such as a stuck-at fault in a specific memory cell, can corrupt the stored data, causing data loss or program misbehavior. In storage devices, permanent faults like bad sectors or device failures can result in data inaccessibility or complete loss of stored information. Permanent interconnect faults can disrupt communication channels, causing data corruption or system hangs.

Permanent faults can significantly affect ML systems during the training and inference phases. During training, permanent faults in processing units or memory can lead to incorrect computations, resulting in corrupted or suboptimal models (He et al. 2023). Furthermore, faults in storage devices can corrupt the training data or the stored model parameters, leading to data loss or model inconsistencies (He et al. 2023).

During inference, permanent faults can impact the reliability and correctness of ML predictions. Faults in the processing units can produce incorrect results or cause system failures, while faults in memory storing the model parameters can lead to corrupted or outdated models being used for inference (J. J. Zhang et al. 2018).

To mitigate the impact of permanent faults in ML systems, fault-tolerant techniques must be employed at both the hardware and software levels. Hardware redundancy, such as duplicating critical components or using error-correcting codes (Kim, Sullivan, and Erez 2015), can help detect and recover from permanent faults. Software techniques, such as checkpoint and restart mechanisms (Egwutuoha et al. 2013), can enable the system to recover from permanent faults by returning to a previously saved state. Regular monitoring, testing, and maintenance of ML systems can help identify and replace faulty components before they cause significant disruptions.
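A checkpoint and restart mechanism can be sketched with a toy training loop. This is a minimal illustration under stated assumptions: the state is a small dictionary saved as JSON, the "parameter update" is a placeholder, and `save_checkpoint`, `load_checkpoint`, and `train` are hypothetical helpers rather than any framework's API.

```python
import json
import os
import tempfile

def save_checkpoint(path, state):
    """Write the state to a temporary file, then atomically rename it into place."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory)
    with os.fdopen(fd, "w") as f:
        json.dump(state, f)
    os.replace(tmp_path, path)

def load_checkpoint(path):
    """Return the saved state, or a fresh state if no checkpoint exists."""
    if os.path.exists(path):
        with open(path) as f:
            return json.load(f)
    return {"step": 0, "weight": 0.0}

def train(path, total_steps, fail_at=None):
    """Toy loop: resume from the last checkpoint and save every 10 steps."""
    state = load_checkpoint(path)
    while state["step"] < total_steps:
        if fail_at is not None and state["step"] == fail_at:
            raise RuntimeError("simulated hardware failure")
        state["weight"] += 0.1      # placeholder for a real parameter update
        state["step"] += 1
        if state["step"] % 10 == 0:
            save_checkpoint(path, state)
    return state

with tempfile.TemporaryDirectory() as workdir:
    ckpt = os.path.join(workdir, "ckpt.json")
    try:
        train(ckpt, total_steps=50, fail_at=37)
    except RuntimeError:
        pass                                   # the job "crashes" at step 37
    resumed_from = load_checkpoint(ckpt)["step"]
    final = train(ckpt, total_steps=50)        # restart from the last checkpoint
    print(resumed_from, final["step"])
```

Only the work since the last checkpoint is lost, so the checkpoint interval trades recovery time against the overhead of saving state. Writing to a temporary file and renaming it avoids leaving a half-written checkpoint if the failure strikes during a save. With a permanent fault, the restart would need to run on repaired or replacement hardware.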

Designing ML systems with fault tolerance in mind is crucial to ensure their reliability and robustness in the presence of permanent faults. This may involve incorporating redundancy, error detection and correction mechanisms, and fail-safe strategies into the system architecture. By proactively addressing the challenges posed by permanent faults, ML systems can maintain their integrity, accuracy, and trustworthiness, even in the face of hardware failures.

17.3.3 Intermittent Faults

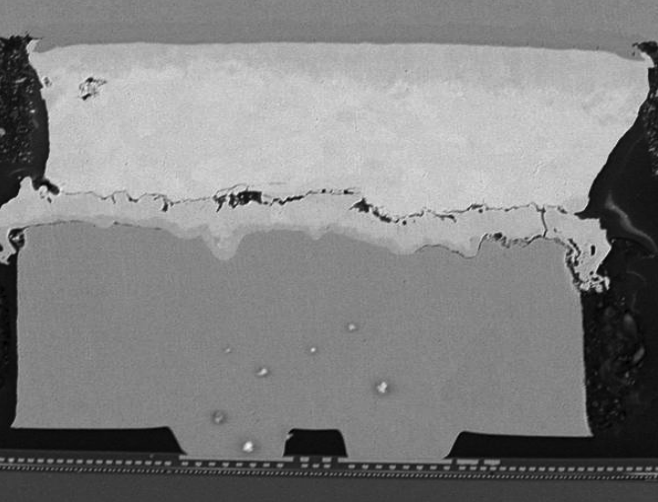

Intermittent faults are hardware faults that occur sporadically and unpredictably in a system. An example is illustrated in Figure 17.9, where cracks in the material can introduce increased resistance in circuitry. These faults are particularly challenging to detect and diagnose because they appear and disappear intermittently, making it difficult to reproduce and isolate the root cause. Intermittent faults can lead to system instability, data corruption, and performance degradation.

Definition and Characteristics

Intermittent faults are characterized by their sporadic and non-deterministic nature. They occur irregularly and may appear and disappear spontaneously, with varying durations and frequencies. These faults do not consistently manifest every time the affected component is used, making them harder to detect than permanent faults. Intermittent faults can affect various hardware components, including processors, memory modules, storage devices, or interconnects. They can cause transient errors, data corruption, or unexpected system behavior.

Intermittent faults can significantly impact the behavior and reliability of computing systems (Rashid, Pattabiraman, and Gopalakrishnan 2015). For example, an intermittent fault in a processor’s control logic can cause irregular program flow, leading to incorrect computations or system hangs. Intermittent faults in memory modules can corrupt data values, resulting in erroneous program execution or data inconsistencies. In storage devices, intermittent faults can cause read/write errors or data loss. Intermittent faults in communication channels can lead to data corruption, packet loss, or intermittent connectivity issues. These faults can cause system crashes, data integrity problems, or performance degradation, depending on the severity and frequency of the intermittent failures.

Causes of Intermittent Faults

Intermittent faults can arise from several causes, both internal and external to the hardware components (Constantinescu 2008). One common cause is aging and wear-out of the components. As electronic devices age, they become more susceptible to intermittent failures due to degradation mechanisms such as electromigration, oxide breakdown, or solder joint fatigue.

Manufacturing defects or process variations can also introduce intermittent faults, where marginal or borderline components may exhibit sporadic failures under specific conditions, as shown in Figure 17.10.

Environmental factors, such as temperature fluctuations, humidity, or vibrations, can trigger intermittent faults by altering the electrical characteristics of the components. Loose or degraded connections, such as those in connectors or printed circuit boards, can cause intermittent faults.

Mechanisms of Intermittent Faults

Intermittent faults can manifest through various mechanisms, depending on the underlying cause and the affected component. One mechanism is the intermittent open or short circuit, where a signal path or connection becomes temporarily disrupted or shorted, causing erratic behavior. Another mechanism is the intermittent delay fault (J. Zhang et al. 2018), where the timing of signals or propagation delays becomes inconsistent, leading to synchronization issues or incorrect computations. Intermittent faults can manifest as transient bit flips or soft errors in memory cells or registers, causing data corruption or incorrect program execution.

Impact on ML Systems

In the context of ML systems, intermittent faults can introduce significant challenges and impact the system’s reliability and performance. During the training phase, intermittent faults in processing units or memory can lead to inconsistencies in computations, resulting in incorrect or noisy gradients and weight updates. This can affect the convergence and accuracy of the training process, leading to suboptimal or unstable models. Intermittent data storage or retrieval faults can corrupt the training data, introducing noise or errors that degrade the quality of the learned models (He et al. 2023).

During the inference phase, intermittent faults can impact the reliability and consistency of ML predictions. Faults in the processing units or memory can cause incorrect computations or data corruption, leading to erroneous or inconsistent predictions. Intermittent faults in the data pipeline can introduce noise or errors in the input data, affecting the accuracy and robustness of the predictions. In safety-critical applications, such as autonomous vehicles or medical diagnosis systems, intermittent faults can have severe consequences, leading to incorrect decisions or actions that compromise safety and reliability.

Mitigating the impact of intermittent faults in ML systems requires a multifaceted approach (Rashid, Pattabiraman, and Gopalakrishnan 2012). At the hardware level, techniques such as robust design practices, component selection, and environmental control can help reduce the occurrence of intermittent faults. Redundancy and error correction mechanisms can be employed to detect and recover from intermittent failures. At the software level, runtime monitoring, anomaly detection, and fault-tolerant techniques can be incorporated into the ML pipeline. This may include techniques such as data validation, outlier detection, model ensembling, or runtime model adaptation to handle intermittent faults gracefully.

Designing ML systems resilient to intermittent faults is crucial to ensuring their reliability and robustness. This involves incorporating fault-tolerant techniques, runtime monitoring, and adaptive mechanisms into the system architecture. By proactively addressing the challenges of intermittent faults, ML systems can maintain their accuracy, consistency, and trustworthiness, even in the presence of sporadic hardware failures. Regular testing, monitoring, and maintenance of ML systems can help identify and mitigate intermittent faults before they cause significant disruptions or performance degradation.

17.3.4 Detection and Mitigation

This section explores various fault detection techniques, including hardware-level and software-level approaches, and discusses effective mitigation strategies to enhance the resilience of ML systems. Additionally, we will look into resilient ML system design considerations, present case studies and examples, and highlight future research directions in fault-tolerant ML systems.

Fault Detection Techniques

Fault detection techniques are important for identifying and localizing hardware faults in ML systems. These techniques can be broadly categorized into hardware-level and software-level approaches, each offering unique capabilities and advantages.

Hardware-level fault detection

Hardware-level fault detection techniques are implemented at the physical level of the system and aim to identify faults in the underlying hardware components. There are several hardware techniques, but broadly, we can bucket these different mechanisms into the following categories.

Built-in self-test (BIST) mechanisms: BIST is a powerful technique for detecting faults in hardware components (Bushnell and Agrawal 2002). It involves incorporating additional hardware circuitry into the system for self-testing and fault detection. BIST can be applied to various components, such as processors, memory modules, or application-specific integrated circuits (ASICs). For example, BIST can be implemented in a processor using scan chains, which are dedicated paths that allow access to internal registers and logic for testing purposes.

During the BIST process, predefined test patterns are applied to the processor’s internal circuitry, and the responses are compared against expected values. Any discrepancies indicate the presence of faults. Intel’s Xeon processors, for instance, include BIST mechanisms to test the CPU cores, cache memory, and other critical components during system startup.

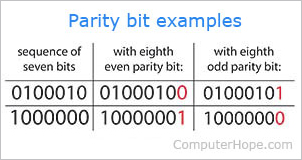

Error detection codes: Error detection codes are widely used to detect data storage and transmission errors (Hamming 1950). These codes add redundant bits to the original data, allowing the detection of bit errors. For example, parity checks, shown in Figure 17.11, are a simple form of error detection code. In a single-bit parity scheme, an extra bit is appended to each data word so that the total number of 1s in the word is even (even parity) or odd (odd parity).

When reading the data, the parity is checked, and if it doesn’t match the expected value, an error is detected. More advanced error detection codes, such as cyclic redundancy checks (CRC), calculate a checksum based on the data and append it to the message. The checksum is recalculated at the receiving end and compared with the transmitted checksum to detect errors. Error-correcting code (ECC) memory modules, commonly used in servers and critical systems, employ advanced error detection and correction codes to detect and correct single-bit or multi-bit errors in memory.
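
As a rough illustration of both schemes, the following Python sketch computes an even parity bit and appends a CRC-32 checksum to a message. The function names are made up for this example; the only library call is `zlib.crc32` from the Python standard library. Real memory and link hardware implement these checks in circuitry, so this only shows the logic.

```python
import zlib

def even_parity_bit(word: int) -> int:
    """Bit that makes the total number of 1s (data plus parity) even."""
    return bin(word).count("1") % 2

def check_even_parity(word: int, parity: int) -> bool:
    """True if the word together with its parity bit has an even number of 1s."""
    return (bin(word).count("1") + parity) % 2 == 0

def append_crc32(payload: bytes) -> bytes:
    """Sender side: append a 4-byte CRC-32 checksum to the payload."""
    return payload + zlib.crc32(payload).to_bytes(4, "big")

def verify_crc32(frame: bytes) -> bool:
    """Receiver side: recompute the checksum and compare with the received one."""
    payload, received = frame[:-4], frame[-4:]
    return zlib.crc32(payload).to_bytes(4, "big") == received

word = 0b1011001                      # four 1s
parity = even_parity_bit(word)        # -> 0
single_flip = word ^ 0b0000001        # one bit flipped
double_flip = word ^ 0b0000011        # two bits flipped
print(check_even_parity(single_flip, parity))  # False: error detected
print(check_even_parity(double_flip, parity))  # True: error goes unnoticed
```

The last line shows the known limitation of single-bit parity: an even number of bit flips leaves the parity unchanged, which is one reason stronger codes such as CRC are used for longer messages.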

Hardware redundancy and voting mechanisms: Hardware redundancy involves duplicating critical components and comparing their outputs to detect and mask faults (Sheaffer, Luebke, and Skadron 2007). Voting mechanisms, such as triple modular redundancy (TMR), employ multiple instances of a component and compare their outputs to identify and mask faulty behavior (Arifeen, Hassan, and Lee 2020).

In a TMR system, three identical instances of a hardware component, such as a processor or a sensor, perform the same computation in parallel. The outputs of these instances are fed into a voting circuit, which compares the results and selects the majority value as the final output. If one of the instances produces an incorrect result due to a fault, the voting mechanism masks the error and maintains the correct output. TMR is commonly used in aerospace and aviation systems, where high reliability is critical. For instance, the Boeing 777 aircraft employs TMR in its primary flight computer system to ensure the availability and correctness of flight control functions (Yeh 1996).
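
The voting step can be sketched in Python as follows. This is a software illustration of the idea only; in a real TMR system the voter is a hardware circuit, and the function names here are hypothetical. The first function votes on whole values and reports which replica disagreed, while the second mirrors the bit-level majority gate commonly used in hardware voters.

```python
from collections import Counter

def tmr_vote(a, b, c):
    """Return (majority_value, indices_of_disagreeing_replicas).

    Raises if all three replicas disagree, since no majority exists."""
    outputs = [a, b, c]
    value, count = Counter(outputs).most_common(1)[0]
    if count < 2:
        raise RuntimeError("no majority: all three replicas disagree")
    faulty = [i for i, out in enumerate(outputs) if out != value]
    return value, faulty

def bitwise_vote(a: int, b: int, c: int) -> int:
    """Bit-level majority: each output bit is 1 if at least two inputs have a 1."""
    return (a & b) | (b & c) | (a & c)

print(tmr_vote(42, 7, 42))                          # (42, [1])
print(bin(bitwise_vote(0b1010, 0b1010, 0b0010)))    # 0b1010
```

Returning the index of the disagreeing replica is a design choice for this sketch: it lets a supervisor log or isolate a unit that repeatedly loses the vote, which matters when the underlying fault is permanent rather than transient.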

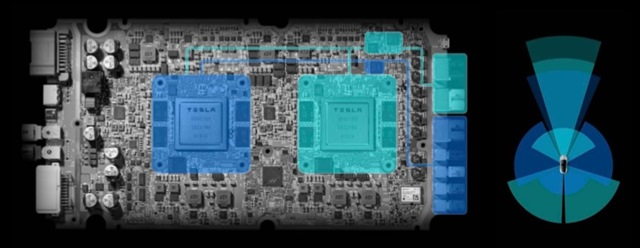

Tesla’s self-driving computers employ a redundant hardware architecture to ensure the safety and reliability of critical functions, such as perception, decision-making, and vehicle control, as shown in Figure 17.12. One key component of this architecture is the use of dual modular redundancy (DMR) in the car’s onboard computer systems.

In Tesla’s DMR implementation, two identical hardware units, often called “redundant computers” or “redundant control units,” perform the same computations in parallel (Bannon et al. 2019). Each unit independently processes sensor data, executes perception and decision-making algorithms, and generates control commands for the vehicle’s actuators (e.g., steering, acceleration, and braking).

The outputs of these two redundant units are continuously compared to detect any discrepancies or faults. If the outputs match, the system assumes that both units function correctly, and the control commands are sent to the vehicle’s actuators. However, if there is a mismatch between the outputs, the system identifies a potential fault in one of the units and takes appropriate action to ensure safe operation.

The system may employ additional mechanisms to determine which unit is faulty in a mismatch. This can involve using diagnostic algorithms, comparing the outputs with data from other sensors or subsystems, or analyzing the consistency of the outputs over time. Once the faulty unit is identified, the system can isolate it and continue operating using the output from the non-faulty unit.

DMR in Tesla’s self-driving computer provides an extra layer of safety and fault tolerance. By having two independent units performing the same computations, the system can detect and mitigate faults that may occur in one of the units. This redundancy helps prevent single points of failure and ensures that critical functions remain operational despite hardware faults.

Furthermore, Tesla also incorporates additional redundancy mechanisms beyond DMR. For example, they use redundant power supplies, steering and braking systems, and diverse sensor suites (e.g., cameras, radar, and ultrasonic sensors) to provide multiple layers of fault tolerance. These redundancies collectively contribute to the overall safety and reliability of the self-driving system.

It’s important to note that while DMR provides fault detection and some level of fault tolerance, it does not offer the same level of fault masking as TMR: with only two units, a mismatch reveals that a fault exists but not, by itself, which unit is faulty. In addition, if both units experience simultaneous faults or the fault affects the comparison mechanism, the system may be unable to identify the fault. Therefore, Tesla’s self-driving computers rely on a combination of DMR and other redundancy mechanisms to achieve a high level of fault tolerance.

The use of DMR in Tesla’s self-driving computer highlights the importance of hardware redundancy in safety-critical applications. By employing redundant computing units and comparing their outputs, the system can detect and mitigate faults, enhancing the overall safety and reliability of the self-driving functionality.

Google employs redundant hot spares to deal with silent data corruption (SDC) within its data centers, thereby enhancing the reliability of critical functions. As illustrated in Figure 17.13, during the normal training phase, multiple synchronous training workers operate without faults. However, if a worker becomes defective and causes SDC, an SDC checker automatically identifies the issue. Upon detecting the SDC, the SDC checker moves the training to a hot spare and sends the defective machine for repair. This redundancy safeguards the continuity and reliability of ML training, minimizing downtime and preserving data integrity.

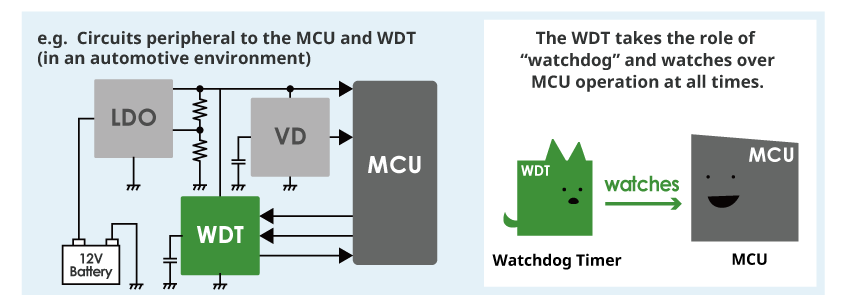

Watchdog timers: Watchdog timers are hardware components that monitor the execution of critical tasks or processes (Pont and Ong 2002). They are commonly used to detect and recover from software or hardware faults that cause a system to become unresponsive or stuck in an infinite loop. In an embedded system, a watchdog timer can be configured to monitor the execution of the main control loop, as illustrated in Figure 17.14. The software periodically resets the watchdog timer to indicate that it is functioning correctly. If the software fails to reset the timer within a specified time limit (the timeout period), the watchdog timer assumes that the system has encountered a fault and triggers a predefined recovery action, such as resetting the system or switching to a backup component. Watchdog timers are widely used in automotive electronics, industrial control systems, and other safety-critical applications to ensure timely detection of and recovery from faults.
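
The reset-or-expire logic can be modeled in software as below. This is only a sketch of the behavior: a real watchdog is a hardware counter that keeps running even when the processor hangs, and the `Watchdog` class, its `kick` and `poll` methods, and the injectable `clock` argument are all hypothetical names chosen for this example. Injecting the clock makes the behavior easy to exercise without waiting for real time to pass.

```python
import time

class Watchdog:
    """Software model of a watchdog timer."""

    def __init__(self, timeout, on_expire, clock=time.monotonic):
        self.timeout = timeout        # allowed time between resets, in seconds
        self.on_expire = on_expire    # recovery action, e.g. a system reset
        self.clock = clock
        self.deadline = clock() + timeout
        self.expired = False

    def kick(self):
        """Called by healthy software (e.g. once per control-loop pass)."""
        self.deadline = self.clock() + self.timeout
        self.expired = False

    def poll(self):
        """Called by the timer side; fires the recovery action once on expiry."""
        if not self.expired and self.clock() >= self.deadline:
            self.expired = True
            self.on_expire()
        return self.expired

# Simulated time so the example runs instantly.
now = [0.0]
wd = Watchdog(timeout=1.0, on_expire=lambda: print("recovery: resetting system"),
              clock=lambda: now[0])
now[0] = 0.5; wd.kick()      # control loop is alive, deadline moves to 1.5
now[0] = 1.2; wd.poll()      # before the deadline, nothing happens
now[0] = 1.6; wd.poll()      # loop stopped kicking, recovery action fires
```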

Software-level fault detection

Software-level fault detection techniques rely on software algorithms and monitoring mechanisms to identify system faults. These techniques can be implemented at various levels of the software stack, including the operating system, middleware, or application level.

Runtime monitoring and anomaly detection: Runtime monitoring involves continuously observing the behavior of the system and its components during execution (Francalanza et al. 2017). It helps detect anomalies, errors, or unexpected behavior that may indicate the presence of faults. For example, consider an ML-based image classification system deployed in a self-driving car. Runtime monitoring can be implemented to track the classification model’s performance and behavior (Mahmoud et al. 2021).

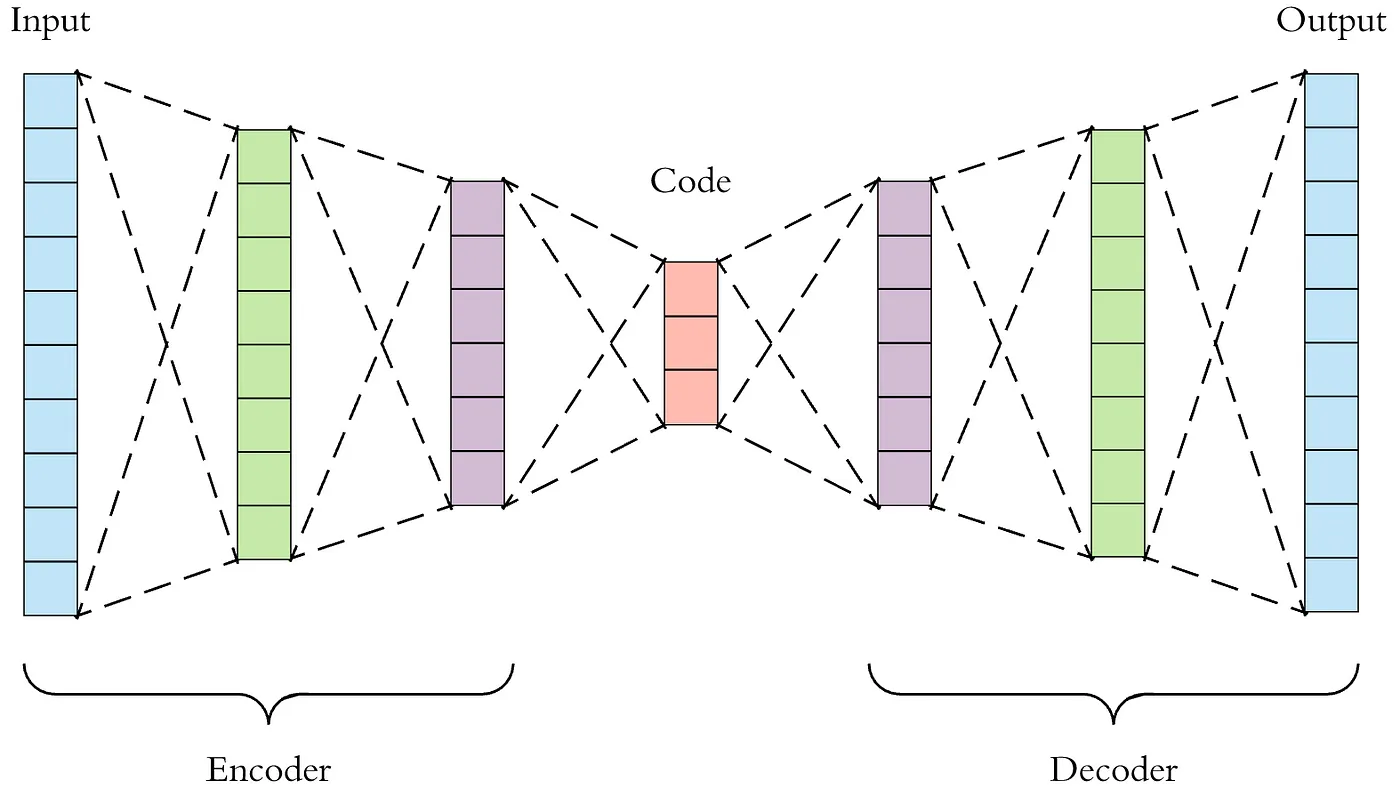

Anomaly detection algorithms, such as statistical outlier detection or machine learning-based approaches (e.g., One-Class SVM or Autoencoders), can be applied to the model’s predictions or intermediate layer activations (Chandola, Banerjee, and Kumar 2009). Figure 17.15 shows an example of anomaly detection. If the monitoring system detects a significant deviation from the expected patterns, such as a sudden drop in classification accuracy or an influx of out-of-distribution samples, it can raise an alert indicating a potential fault in the model or the input data pipeline. This early detection allows for timely intervention and fault mitigation strategies to be applied.
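
A very simple form of statistical outlier detection is a z-score test against a baseline collected during fault-free operation. The sketch below assumes a single monitored scalar per inference (here, a hypothetical top-1 confidence); the baseline values and the threshold of three standard deviations are illustrative choices, not recommendations, and real monitors typically track many signals at once.

```python
import statistics

def fit_baseline(values):
    """Estimate mean and sample standard deviation from fault-free observations."""
    return statistics.mean(values), statistics.stdev(values)

def is_anomalous(value, mean, std, threshold=3.0):
    """Flag a value more than `threshold` standard deviations from the baseline mean."""
    return abs(value - mean) > threshold * std

# Hypothetical top-1 confidences logged while the system was known to be healthy.
baseline = [0.91, 0.93, 0.92, 0.94, 0.90, 0.92, 0.93, 0.91]
mean, std = fit_baseline(baseline)

for confidence in [0.93, 0.55]:
    if is_anomalous(confidence, mean, std):
        print(f"alert: confidence {confidence} deviates from the baseline")
```

The same test could be applied to other monitored quantities, such as the maximum activation of a layer, where a bit flip in a high-order exponent bit tends to produce values far outside the usual range.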

Consistency checks and data validation: Consistency checks and data validation techniques ensure data integrity and correctness at different processing stages in an ML system (Lindholm et al. 2019). These checks help detect data corruption, inconsistencies, or errors that may propagate and affect the system’s behavior. Example: In a distributed ML system where multiple nodes collaborate to train a model, consistency checks can be implemented to validate the integrity of the shared model parameters. Each node can compute a checksum or hash of the model parameters before and after the training iteration, as shown in Figure 17.15. Any inconsistencies or data corruption can be detected by comparing the checksums across nodes. Additionally, range checks can be applied to the input data and model outputs to ensure they fall within expected bounds. For instance, if an autonomous vehicle’s perception system detects an object with unrealistic dimensions or velocities, it can indicate a fault in the sensor data or the perception algorithms (Wan et al. 2023).
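
Both checks described above can be sketched with the Python standard library. The sketch assumes the replicas are expected to hold bit-identical parameters at the point of comparison (for example, right after a synchronous update with deterministic arithmetic); the node names, the flat list representation of parameters, and the 0 to 60 m/s speed bound are hypothetical.

```python
import hashlib
import struct
from collections import Counter

def params_digest(params):
    """SHA-256 over the exact float64 bit patterns of a flat parameter list."""
    packed = struct.pack(f"<{len(params)}d", *params)
    return hashlib.sha256(packed).hexdigest()

def find_divergent_nodes(replicas):
    """Given {node_name: parameter_list}, return nodes whose digest differs
    from the most common digest across all nodes."""
    digests = {node: params_digest(p) for node, p in replicas.items()}
    majority = Counter(digests.values()).most_common(1)[0][0]
    return sorted(node for node, d in digests.items() if d != majority)

def in_range(values, low, high):
    """Range check; NaN fails automatically because comparisons with NaN are False."""
    return all(low <= v <= high for v in values)

replicas = {
    "node0": [0.1, -0.2, 0.3],
    "node1": [0.1, -0.2, 0.3],
    "node2": [0.1, -0.2, 0.30000001],   # silently corrupted copy
}
print(find_divergent_nodes(replicas))       # ['node2']
print(in_range([12.5, 900.0], 0.0, 60.0))   # False: implausible object speed
```

Comparing digests rather than full parameter tensors keeps the communication cost of the check small, at the price of only learning that a replica diverged, not where.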

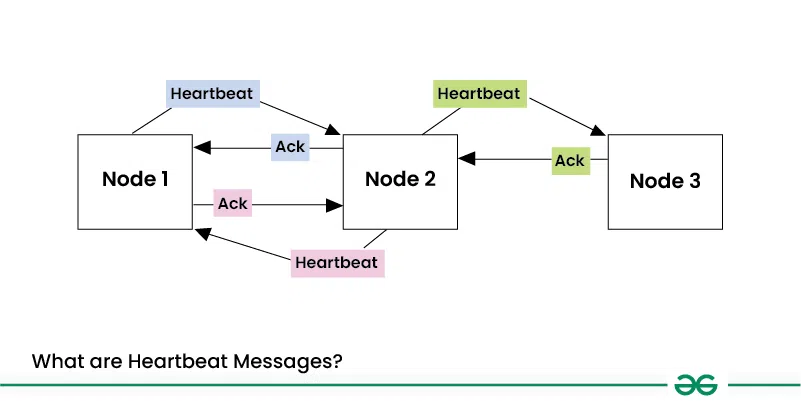

Heartbeat and timeout mechanisms: Heartbeat mechanisms and timeouts are commonly used to detect faults in distributed systems and ensure the liveness and responsiveness of components (Kawazoe Aguilera, Chen, and Toueg 1997). These are quite similar to the watchdog timers found in hardware. For example, in a distributed ML system, where multiple nodes collaborate to perform tasks such as data preprocessing, model training, or inference, heartbeat mechanisms can be implemented to monitor the health and availability of each node. Each node periodically sends a heartbeat message to a central coordinator or its peer nodes, indicating its status and availability. If a node fails to send a heartbeat within a specified timeout period, as shown in Figure 17.16, it is considered faulty, and appropriate actions can be taken, such as redistributing the workload or initiating a failover mechanism. Timeouts can also be used to detect and handle hanging or unresponsive components. For example, if a data loading process exceeds a predefined timeout threshold, it may indicate a fault in the data pipeline, and the system can take corrective measures.
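
The coordinator side of a heartbeat scheme can be sketched as a table of last-seen timestamps. The `HeartbeatMonitor` class and the worker names are hypothetical, and timestamps are passed in explicitly so the example does not depend on real time or on any networking library.

```python
class HeartbeatMonitor:
    """Coordinator-side bookkeeping for node heartbeats."""

    def __init__(self, timeout):
        self.timeout = timeout      # maximum allowed silence, in seconds
        self.last_seen = {}         # node name -> time of last heartbeat

    def record(self, node, now):
        """Called whenever a heartbeat message from `node` arrives."""
        self.last_seen[node] = now

    def failed_nodes(self, now):
        """Nodes whose last heartbeat is older than the timeout."""
        return sorted(node for node, seen in self.last_seen.items()
                      if now - seen > self.timeout)

monitor = HeartbeatMonitor(timeout=5.0)
monitor.record("worker-0", now=0.0)
monitor.record("worker-1", now=0.0)
monitor.record("worker-0", now=5.0)     # worker-1 has gone silent
print(monitor.failed_nodes(now=8.0))    # ['worker-1']
```

In a real deployment the list returned by `failed_nodes` would feed the recovery step, for instance reassigning the failed worker's data shard to a healthy node.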

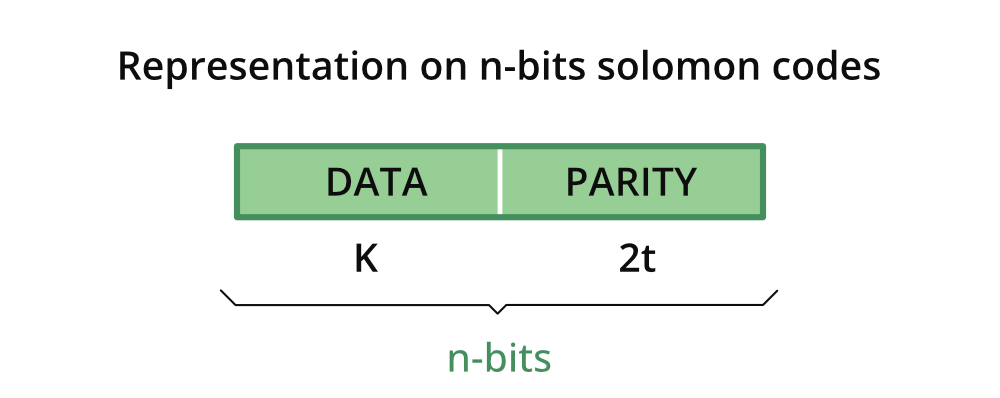

Software-implemented fault tolerance (SIFT) techniques: SIFT techniques introduce redundancy and fault detection mechanisms at the software level to improve the reliability and fault tolerance of the system (Reis et al. 2005). For example, N-version programming is a SIFT technique in which multiple functionally equivalent versions of a software component are developed independently by different teams. This can be applied to critical components such as the model inference engine in an ML system. Multiple versions of the inference engine can be executed in parallel, and their outputs can be compared for consistency. If most versions produce the same output, that output is taken as the correct result. If there is a discrepancy, it indicates a potential fault in one or more versions, and appropriate error-handling mechanisms can be triggered. Another example is using software-based error correction codes, such as Reed-Solomon codes (Plank 1997), to detect and correct errors in data storage or transmission, as shown in Figure 17.17. These codes add redundancy to the data, enabling the detection and correction of certain errors and enhancing the system’s fault tolerance.
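
One practical wrinkle in comparing N independently written inference engines is that their floating-point outputs rarely match bit for bit, so the comparison usually needs a tolerance. The sketch below is one possible way to do this, assuming each version returns a single score; the function name, the tolerance, and the example values are hypothetical.

```python
import statistics

def n_version_vote(outputs, tol=1e-6):
    """Accept the median output if a strict majority of versions agree with it
    within `tol`; return it together with the indices of dissenting versions."""
    candidate = statistics.median(outputs)
    agreeing = [i for i, out in enumerate(outputs) if abs(out - candidate) <= tol]
    if len(agreeing) * 2 <= len(outputs):
        raise RuntimeError("no majority agreement among versions")
    dissenting = [i for i in range(len(outputs)) if i not in agreeing]
    return candidate, dissenting

# Three hypothetical inference engines scoring the same input.
scores = [0.8731, 0.8731000001, 0.1200]
print(n_version_vote(scores))   # (0.8731, [2])
```

Using the median as the candidate is a design choice for this sketch: whenever more than half of the outputs lie within a window of width `tol`, the median falls inside that window, so the agreeing majority is found without comparing every pair of versions.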

In this Colab, play the role of an AI fault detective! You’ll build an autoencoder-based anomaly detector to pinpoint errors in heart health data. Learn how to identify malfunctions in ML systems, a vital skill for creating dependable AI. We’ll use Keras Tuner to fine-tune your autoencoder for top-notch fault detection. This experience directly links to the Robust AI chapter, demonstrating the importance of fault detection in real-world applications like healthcare and autonomous systems. Get ready to strengthen the reliability of your AI creations!

17.3.5 Summary

Table 17.1 provides an extensive comparative analysis of transient, permanent, and intermittent faults. It outlines the primary characteristics or dimensions that distinguish these fault types. Here, we summarize the relevant dimensions we examined and explore the nuances that differentiate transient, permanent, and intermittent faults in greater detail.

| Dimension | Transient Faults | Permanent Faults | Intermittent Faults |
|---|---|---|---|
| Duration | Short-lived, temporary | Persistent, remains until repair or replacement | Sporadic, appears and disappears intermittently |
| Persistence | Disappears after the fault condition passes | Consistently present until addressed | Recurs irregularly, not always present |
| Causes | External factors (e.g., electromagnetic interference, cosmic rays) | Hardware defects, physical damage, wear-out | Unstable hardware conditions, loose connections, aging components |
| Manifestation | Bit flips, glitches, temporary data corruption | Stuck-at faults, broken components, complete device failures | Occasional bit flips, intermittent signal issues, sporadic malfunctions |
| Impact on ML Systems | Introduces temporary errors or noise in computations | Causes consistent errors or failures, affecting reliability | Leads to sporadic and unpredictable errors, challenging to diagnose and mitigate |
| Detection | Error detection codes, comparison with expected values | Built-in self-tests, error detection codes, consistency checks | Monitoring for anomalies, analyzing error patterns and correlations |
| Mitigation | Error correction codes, redundancy, checkpoint and restart | Hardware repair or replacement, component redundancy, failover mechanisms | Robust design, environmental control, runtime monitoring, fault-tolerant techniques |

17.4 ML Model Robustness

17.4.1 Adversarial Attacks

Definition and Characteristics

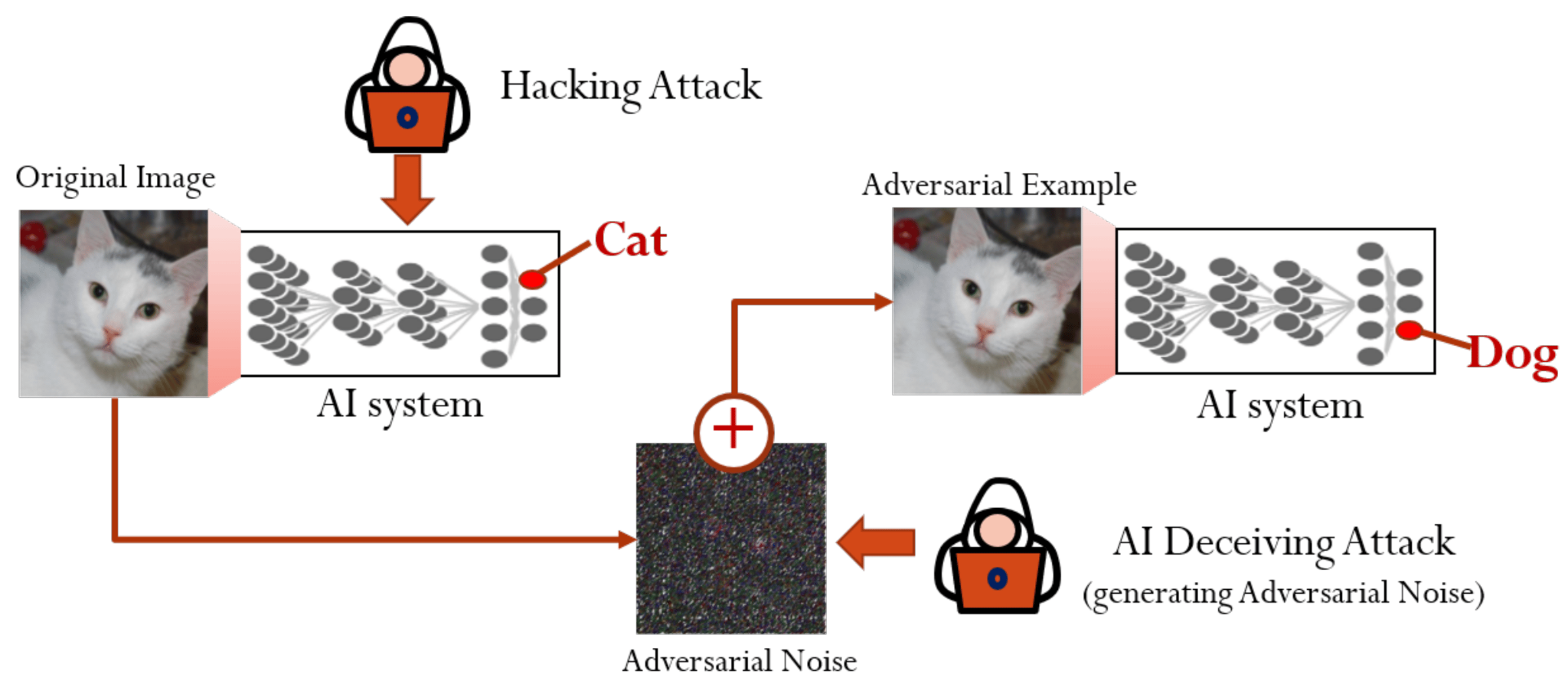

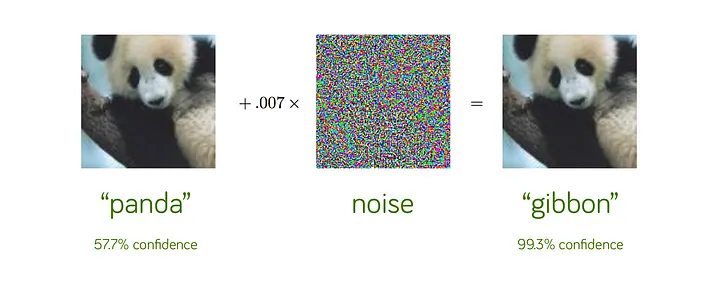

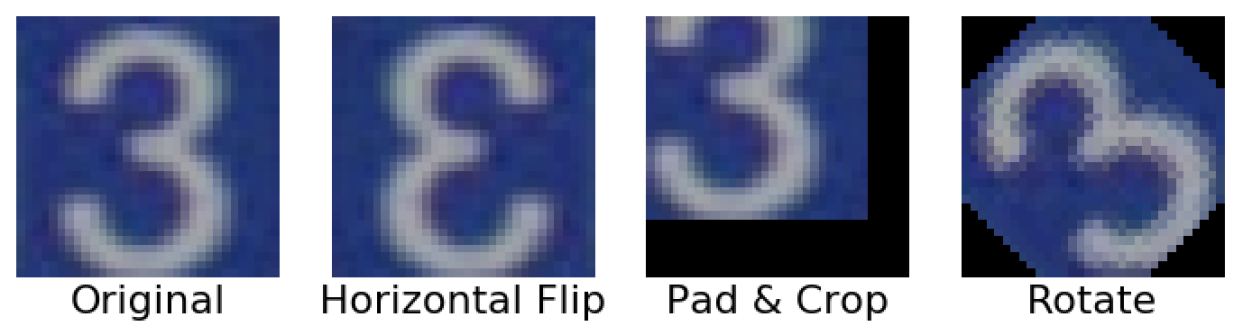

Adversarial attacks aim to trick models into making incorrect predictions by providing them with specially crafted, deceptive inputs (called adversarial examples) (Parrish et al. 2023). By adding slight, often imperceptible perturbations to input data, adversaries can “hack” a model’s pattern recognition and cause it to make a wrong prediction, as shown in Figure 17.18.

Adversarial inputs are not limited to a single modality. One can craft prompts that lead text-to-image models like DALL·E (Ramesh et al. 2021) or Stable Diffusion (Rombach et al. 2022) to produce unsafe images. In the image domain, attackers can alter the pixel values of an image to deceive a facial recognition system into identifying a face as a different person.

Adversarial attacks exploit the way ML models learn and make decisions during inference. These models work on the principle of recognizing patterns in data. An adversary crafts special inputs with perturbations to mislead the model’s pattern recognition, essentially ‘hacking’ the model’s perceptions.

Adversarial attacks fall under different scenarios:

White-box Attacks: The attacker has full knowledge of the target model’s internal workings, including the training data, parameters, and architecture (Ye and Hamidi 2021). This comprehensive access creates favorable conditions for attackers to exploit the model’s vulnerabilities. The attacker can use specific and subtle weaknesses to craft effective adversarial examples.

Black-box Attacks: In contrast to white-box attacks, black-box attacks involve the attacker having little to no knowledge of the target model (Guo et al. 2019). To carry out the attack, the adversarial actor must carefully observe the model’s output behavior.

Grey-box Attacks: These fall between black-box and white-box attacks. The attacker has only partial knowledge about the target model’s internal design (Xu et al. 2021). For example, the attacker could have knowledge about the training data but not the architecture or parameters. In the real world, practical attacks typically fall under the black-box or grey-box categories.

The landscape of machine learning models is complex and broad, especially given their relatively recent integration into commercial applications. This rapid adoption, while transformative, has brought to light numerous vulnerabilities within these models. Consequently, various adversarial attack methods have emerged, each strategically exploiting different aspects of different models. Below, we highlight a subset of these methods, showcasing the multifaceted nature of adversarial attacks on machine learning models:

Generative Adversarial Networks (GANs) are deep learning models that consist of two networks competing against each other: a generator and a discriminator (Goodfellow et al. 2020). The generator tries to synthesize realistic data while the discriminator evaluates whether each sample is real or generated. GANs can be used to craft adversarial examples. The generator network is trained to produce inputs that the target model misclassifies. These GAN-generated images can then be used to attack a target classifier or detection model. The generator and the target model are engaged in a competitive process, with the generator continually improving its ability to create deceptive examples and the target model enhancing its resistance to such examples. GANs provide a powerful framework for crafting complex and diverse adversarial inputs, illustrating the adaptability of generative models in the adversarial landscape.

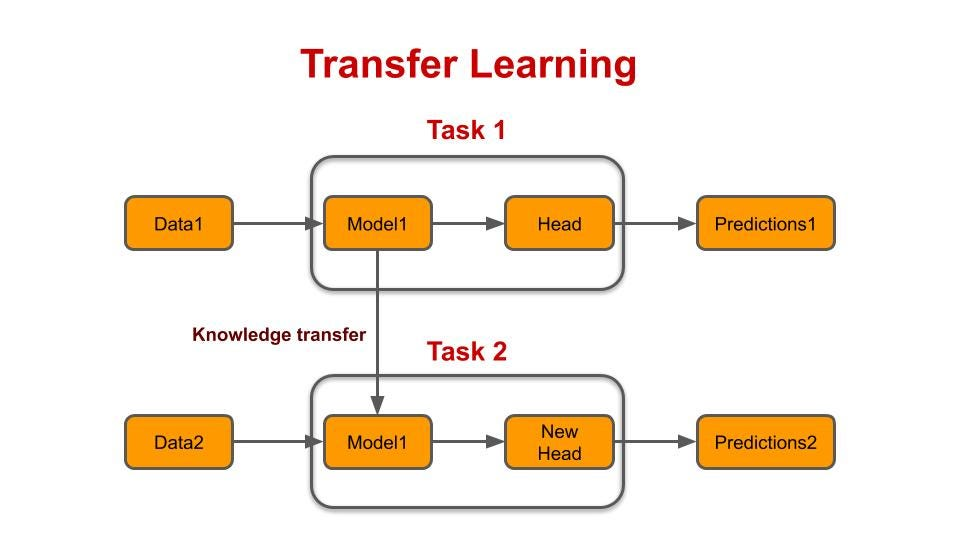

Transfer Learning Adversarial Attacks exploit the knowledge transferred from a pre-trained model to a target model, creating adversarial examples that can deceive both models. These attacks pose a growing concern, particularly when adversaries have knowledge of the feature extractor but lack access to the classification head (the part or layer responsible for making the final classifications). Referred to as “headless attacks,” these transferable adversarial strategies leverage the expressive capabilities of feature extractors to craft perturbations while being oblivious to the label space or training data. The existence of such attacks underscores the importance of developing robust defenses for transfer learning applications, especially since pre-trained models are commonly used (Abdelkader et al. 2020).

Mechanisms of Adversarial Attacks

Gradient-based Attacks

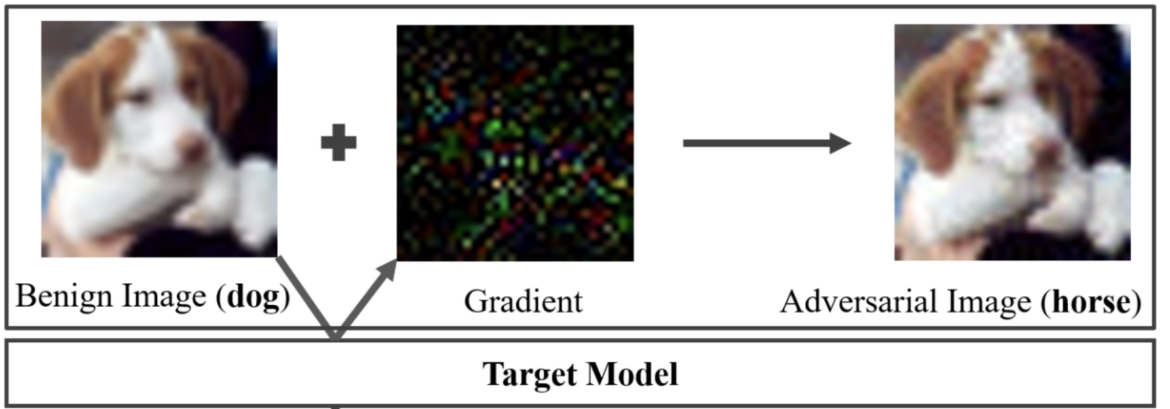

One prominent category of adversarial attacks is gradient-based attacks. These attacks leverage the gradients of the ML model’s loss function to craft adversarial examples. The Fast Gradient Sign Method (FGSM) is a well-known technique in this category. FGSM perturbs the input data by adding a small perturbation in the direction of the sign of the gradient of the loss with respect to the input, aiming to maximize the model’s prediction error. Because it takes only a single step in this direction, FGSM can generate adversarial examples quickly, as shown in Figure 17.19.

Another variant, the Projected Gradient Descent (PGD) attack, extends FGSM by applying the gradient update step iteratively with a smaller step size and projecting the result back into the allowed perturbation range after each step, allowing for more refined and powerful adversarial examples. The Jacobian-based Saliency Map Attack (JSMA) is another gradient-based approach that identifies the most influential input features and perturbs them to create adversarial examples.
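
To make the two update rules concrete, the sketch below applies FGSM and PGD to a tiny logistic regression classifier in pure Python. The model, its weights, the input, and the budget `eps` are all hypothetical values chosen for illustration; for this model the gradient of the cross-entropy loss with respect to the input has the closed form `(p - y) * w`, so no automatic differentiation library is needed. Attacks on deep networks follow the same pattern but obtain the gradient by backpropagation.

```python
import math

def predict(w, b, x):
    """Probability of class 1 under a logistic regression model."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))

def input_gradient(w, b, x, y):
    """Gradient of binary cross-entropy loss w.r.t. the input: (p - y) * w."""
    p = predict(w, b, x)
    return [(p - y) * wi for wi in w]

def sign(v):
    return (v > 0) - (v < 0)

def fgsm(w, b, x, y, eps):
    """Single step of size eps along the sign of the input gradient."""
    g = input_gradient(w, b, x, y)
    return [xi + eps * sign(gi) for xi, gi in zip(x, g)]

def pgd(w, b, x, y, eps, step, iters):
    """Repeated small sign-gradient steps, each followed by a projection
    back into the L-infinity ball of radius eps around the clean input."""
    x_adv = list(x)
    for _ in range(iters):
        g = input_gradient(w, b, x_adv, y)
        x_adv = [xi + step * sign(gi) for xi, gi in zip(x_adv, g)]
        x_adv = [min(max(xa, x0 - eps), x0 + eps) for xa, x0 in zip(x_adv, x)]
    return x_adv

# Hypothetical model and input; the true label is 1.
w, b = [2.0, -1.0, 0.5], 0.0
x, y = [0.5, 0.2, 0.4], 1

print(predict(w, b, x) > 0.5)                                # True: correct on clean input
print(predict(w, b, fgsm(w, b, x, y, eps=0.4)) > 0.5)        # False: FGSM flips the decision
print(predict(w, b, pgd(w, b, x, y, 0.4, 0.1, 10)) > 0.5)    # False: PGD flips it too
```

For a linear model like this one the two attacks end at the same point, since the sign of the gradient never changes between steps. The iterative refinement of PGD matters for nonlinear models, where the loss surface curves and the best direction changes along the way.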

Optimization-based Attacks

These attacks formulate the generation of adversarial examples as an optimization problem. The Carlini and Wagner (C&W) attack is a prominent example in this category. It finds the smallest perturbation that can cause misclassification while maintaining the perceptual similarity to the original input. The C&W attack employs an iterative optimization process to minimize the perturbation while maximizing the model’s prediction error.

Another optimization-based approach is the Elastic Net Attack to DNNs (EAD), which incorporates elastic net regularization to generate adversarial examples with sparse perturbations.

Transfer-based Attacks

Transfer-based attacks exploit the transferability property of adversarial examples. Transferability refers to the phenomenon where adversarial examples crafted for one ML model can often fool other models, even if they have different architectures or were trained on different datasets. This enables attackers to generate adversarial examples using a surrogate model and then transfer them to the target model without requiring direct access to its parameters or gradients. Transfer-based attacks highlight the generalization of adversarial vulnerabilities across different models and the potential for black-box attacks.

Physical-world Attacks

Physical-world attacks bring adversarial examples into the realm of real-world scenarios. These attacks involve creating physical objects or manipulations that can deceive ML models when captured by sensors or cameras. Adversarial patches, for example, are small, carefully designed patches that can be placed on objects to fool object detection or classification models. When attached to real-world objects, these patches can cause models to misclassify or fail to detect the objects accurately. Adversarial objects, such as 3D-printed sculptures or modified road signs, can also be crafted to deceive ML systems in physical environments.

Summary

Table 17.2 provides a concise overview of the different categories of adversarial attacks, including gradient-based attacks (FGSM, PGD, JSMA), optimization-based attacks (C&W, EAD), transfer-based attacks, and physical-world attacks (adversarial patches and objects). Each attack is briefly described, highlighting its key characteristics and mechanisms.

| Attack Category | Attack Name | Description |
|---|---|---|
| Gradient-based | Fast Gradient Sign Method (FGSM) | Perturbs input data by adding small noise in the gradient direction to maximize prediction error. |
| Gradient-based | Projected Gradient Descent (PGD) | Extends FGSM by iteratively applying the gradient update step for more refined adversarial examples. |
| Gradient-based | Jacobian-based Saliency Map Attack (JSMA) | Identifies influential input features and perturbs them to create adversarial examples. |
| Optimization-based | Carlini and Wagner (C&W) Attack | Finds the smallest perturbation that causes misclassification while maintaining perceptual similarity. |
| Optimization-based | Elastic Net Attack to DNNs (EAD) | Incorporates elastic net regularization to generate adversarial examples with sparse perturbations. |
| Transfer-based | Transferability-based Attacks | Exploits the transferability of adversarial examples across different models, enabling black-box attacks. |
| Physical-world | Adversarial Patches | Small, carefully designed patches placed on objects to fool object detection or classification models. |
| Physical-world | Adversarial Objects | Physical objects (e.g., 3D-printed sculptures, modified road signs) crafted to deceive ML systems in real-world scenarios. |

The mechanisms of adversarial attacks reveal the intricate interplay between the ML model’s decision boundaries, the input data, and the attacker’s objectives. By carefully manipulating the input data, attackers can exploit the model’s sensitivities and blind spots, leading to incorrect predictions. The success of adversarial attacks highlights the need for a deeper understanding of ML models’ robustness and generalization properties.

Defending against adversarial attacks requires a multifaceted approach. Adversarial training is one common defense strategy in which models are trained on adversarial examples to improve robustness. Exposing the model to adversarial examples during training teaches it to classify them correctly and become more resilient to attacks. Defensive distillation, input preprocessing, and ensemble methods are other techniques that can help mitigate the impact of adversarial attacks.
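As a rough illustration of adversarial training, the sketch below trains a logistic regression model on each clean example together with an FGSM-perturbed copy generated against the current weights. The synthetic two-cluster dataset, the function names, and the hyperparameters (`eps`, `lr`, `epochs`) are assumptions made for this example and do not come from a specific library or paper.

```python
import math
import random

def predict_proba(w, b, x):
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    z = max(-60.0, min(60.0, z))  # clamp to avoid overflow in exp
    return 1.0 / (1.0 + math.exp(-z))

def fgsm(w, b, x, y, eps):
    """Perturb x by eps along the sign of the loss gradient."""
    p = predict_proba(w, b, x)
    grad = [(p - y) * wi for wi in w]
    return [xi + eps * ((g > 0) - (g < 0)) for xi, g in zip(x, grad)]

def sgd_step(w, b, x, y, lr):
    err = predict_proba(w, b, x) - y
    return [wi - lr * err * xi for wi, xi in zip(w, x)], b - lr * err

def adversarial_train(data, eps, lr=0.1, epochs=20, seed=0):
    rng = random.Random(seed)
    w, b = [0.0] * len(data[0][0]), 0.0
    data = list(data)
    for _ in range(epochs):
        rng.shuffle(data)
        for x, y in data:
            x_adv = fgsm(w, b, x, y, eps)        # attack the current model
            w, b = sgd_step(w, b, x, y, lr)      # update on the clean example
            w, b = sgd_step(w, b, x_adv, y, lr)  # update on the adversarial one
    return w, b

def accuracy(w, b, data, eps=0.0):
    """Accuracy on clean inputs (eps=0) or on FGSM-perturbed inputs."""
    correct = 0
    for x, y in data:
        x_eval = fgsm(w, b, x, y, eps) if eps > 0 else x
        correct += int((predict_proba(w, b, x_eval) >= 0.5) == bool(y))
    return correct / len(data)

def make_blobs(n, seed=1):
    """Hypothetical dataset: two Gaussian clusters centered at (-2,-2) and (2,2)."""
    rng = random.Random(seed)
    data = []
    for i in range(n):
        y = i % 2
        center = 2.0 if y == 1 else -2.0
        data.append(([rng.gauss(center, 0.5), rng.gauss(center, 0.5)], y))
    return data

train = make_blobs(200)
w, b = adversarial_train(train, eps=0.5)
print("clean accuracy:", accuracy(w, b, train))
print("accuracy under FGSM:", accuracy(w, b, train, eps=0.5))
```

Each training example now costs one attack plus two parameter updates, which hints at why adversarial training is noticeably more expensive than standard training. Practical implementations usually generate stronger (for example, multi-step) adversarial examples per mini-batch.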

As adversarial machine learning evolves, researchers explore new attack mechanisms and develop more sophisticated defenses. The arms race between attackers and defenders drives the need for constant innovation and vigilance in securing ML systems against adversarial threats. Understanding the mechanisms of adversarial attacks is crucial for developing robust and reliable ML models that can withstand the ever-evolving landscape of adversarial examples.

Impact on ML Systems

Adversarial attacks on machine learning systems have emerged as a significant concern in recent years, highlighting the potential vulnerabilities and risks associated with the widespread adoption of ML technologies. These attacks involve carefully crafted perturbations to input data that can deceive or mislead ML models, leading to incorrect predictions or misclassifications, as shown in Figure 17.20. The impact of adversarial attacks on ML systems is far-reaching and can have serious consequences in various domains.

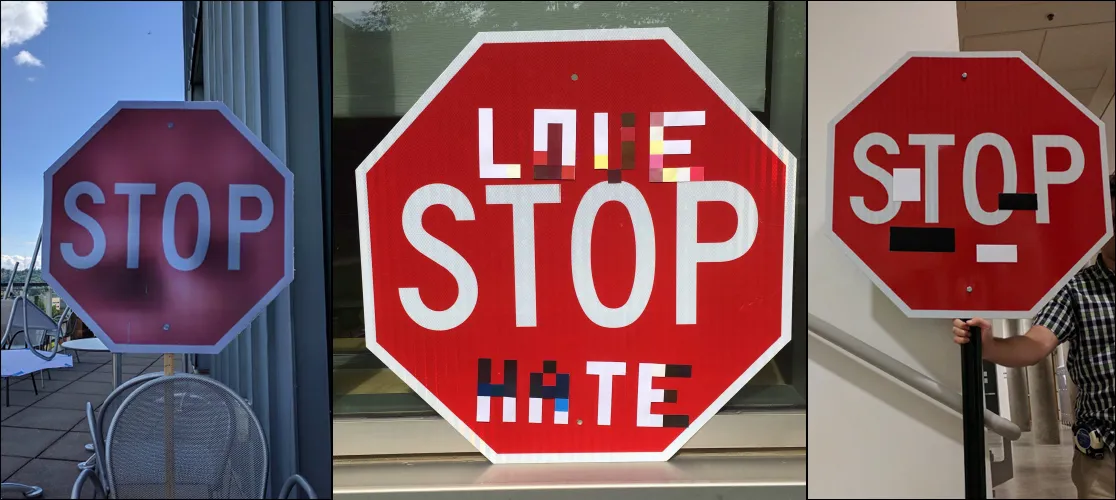

One striking example of the impact of adversarial attacks was demonstrated by researchers in 2017. They experimented with small black and white stickers on stop signs (Eykholt et al. 2017). To the human eye, these stickers did not obscure the sign or prevent its interpretability. However, when images of the sticker-modified stop signs were fed into standard traffic sign classification ML models, a shocking result emerged. The models misclassified the stop signs as speed limit signs over 85% of the time.

This demonstration shed light on the alarming potential of simple adversarial stickers to trick ML systems into misreading critical road signs. The implications of such attacks in the real world are significant, particularly in the context of autonomous vehicles. If deployed on actual roads, these adversarial stickers could cause self-driving cars to misinterpret stop signs as speed limit signs, leading to dangerous situations, as shown in Figure 17.21. Researchers warned that this could result in rolling stops or unintended acceleration into intersections, endangering public safety.

The case study of the adversarial stickers on stop signs provides a concrete illustration of how adversarial examples exploit the way ML models recognize patterns. By subtly manipulating the input in ways that look innocuous to humans, attackers can induce incorrect predictions and create serious risks, especially in safety-critical applications like autonomous vehicles. The attack’s simplicity highlights the vulnerability of ML models to even minor changes in the input, emphasizing the need for robust defenses against such threats.

The impact of adversarial attacks extends beyond the degradation of model performance. These attacks raise significant security and safety concerns, particularly in domains where ML models are relied upon for critical decision-making. In healthcare applications, adversarial attacks on medical imaging models could lead to misdiagnosis or incorrect treatment recommendations, jeopardizing patient well-being (M.-J. Tsai, Lin, and Lee 2023). In financial systems, adversarial attacks could enable fraud or manipulation of trading algorithms, resulting in substantial economic losses.

Moreover, adversarial vulnerabilities undermine the trustworthiness and interpretability of ML models. If carefully crafted perturbations can easily fool models, confidence in their predictions and decisions erodes. Adversarial examples expose the models’ reliance on superficial patterns and the inability to capture the true underlying concepts, challenging the reliability of ML systems (Fursov et al. 2021).

Defending against adversarial attacks often requires additional computational resources and can impact the overall system performance. Techniques like adversarial training, where models are trained on adversarial examples to improve robustness, can significantly increase training time and computational requirements (Bai et al. 2021). Runtime detection and mitigation mechanisms, such as input preprocessing (Addepalli et al. 2020) or prediction consistency checks, introduce latency and affect the real-time performance of ML systems.
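One simple form of prediction consistency check compares the model's output on the raw input with its output on a coarsely quantized copy of that input, an idea often called feature squeezing. The sketch below is a toy version under stated assumptions: the `model` function, its weights, the threshold, and the example inputs are all hypothetical, and features are assumed to lie in the range [0, 1].

```python
import math

def model(x):
    """Hypothetical classifier: logistic regression with fixed weights."""
    w, b = [3.0, -3.0, 3.0, -3.0], -3.0
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))

def squeeze(x, levels=2):
    """Quantize each feature in [0, 1] to `levels` evenly spaced values."""
    steps = levels - 1
    return [round(v * steps) / steps for v in x]

def is_suspicious(x, threshold=0.5):
    """Flag inputs whose prediction changes sharply after squeezing."""
    return abs(model(x) - model(squeeze(x))) > threshold

x_clean = [1.0, 0.0, 1.0, 0.0]
x_perturbed = [0.6, 0.4, 0.6, 0.4]  # stands in for an adversarial input

print(is_suspicious(x_clean), is_suspicious(x_perturbed))
```

The check requires a second forward pass for every input, which is one concrete source of the added latency discussed above. A fixed threshold like the one used here would need to be tuned on real data to balance missed attacks against false alarms.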

The presence of adversarial vulnerabilities also complicates the deployment and maintenance of ML systems. System designers and operators must consider the potential for adversarial attacks and incorporate appropriate defenses and monitoring mechanisms. Regular updates and retraining of models become necessary to adapt to new adversarial techniques and maintain system security and performance over time.

The impact of adversarial attacks on ML systems is significant and multifaceted. These attacks expose ML models’ vulnerabilities, from degrading model performance and raising security and safety concerns to challenging model trustworthiness and interpretability. Developers and researchers must prioritize the development of robust defenses and countermeasures to mitigate the risks posed by adversarial attacks. By addressing these challenges, we can build more secure, reliable, and trustworthy ML systems that can withstand the ever-evolving landscape of adversarial threats.

Get ready to become an AI adversary! In this Colab, you’ll take the role of a white-box attacker and learn to craft attacks that deceive image classification models. We’ll focus on the Fast Gradient Sign Method (FGSM), where you’ll turn a model’s own gradients against it! You’ll deliberately distort images with tiny perturbations and observe how the model is fooled more and more as the distortion grows. This hands-on exercise highlights the importance of building secure AI, a critical skill as AI is integrated into cars and healthcare. The Colab ties directly into the Robust AI chapter of your book, moving adversarial attacks from theory into hands-on experience.

Think you can outsmart an AI? In this Colab, learn how to trick image classification models with adversarial attacks. We’ll use methods like FGSM to change images and subtly fool the AI. Discover how to design deceptive image patches and witness the surprising vulnerability of these powerful models. This is crucial knowledge for building truly robust AI systems!

17.4.2 Data Poisoning

Definition and Characteristics

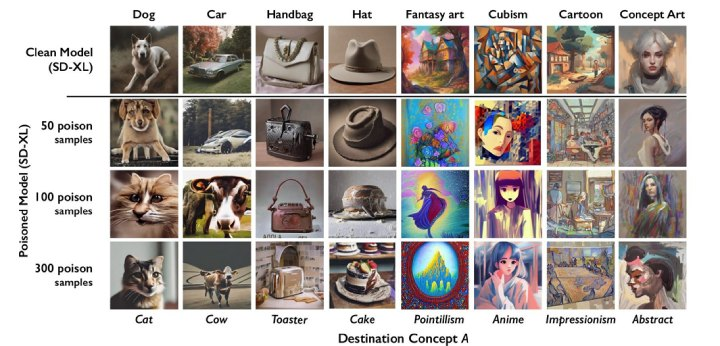

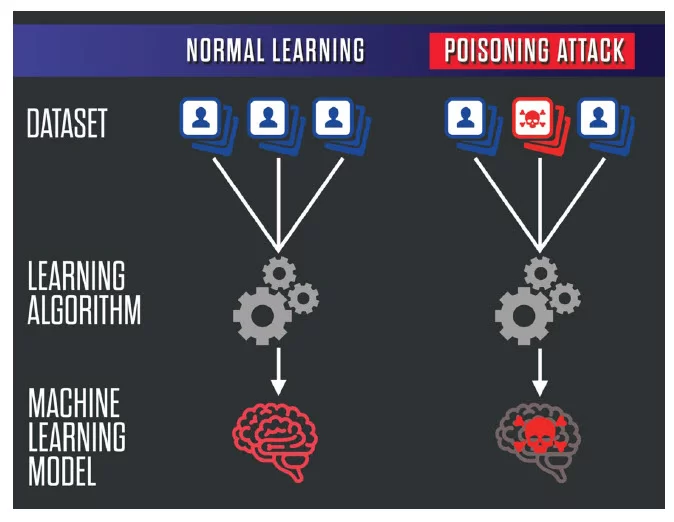

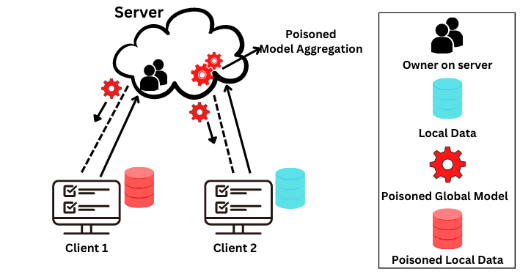

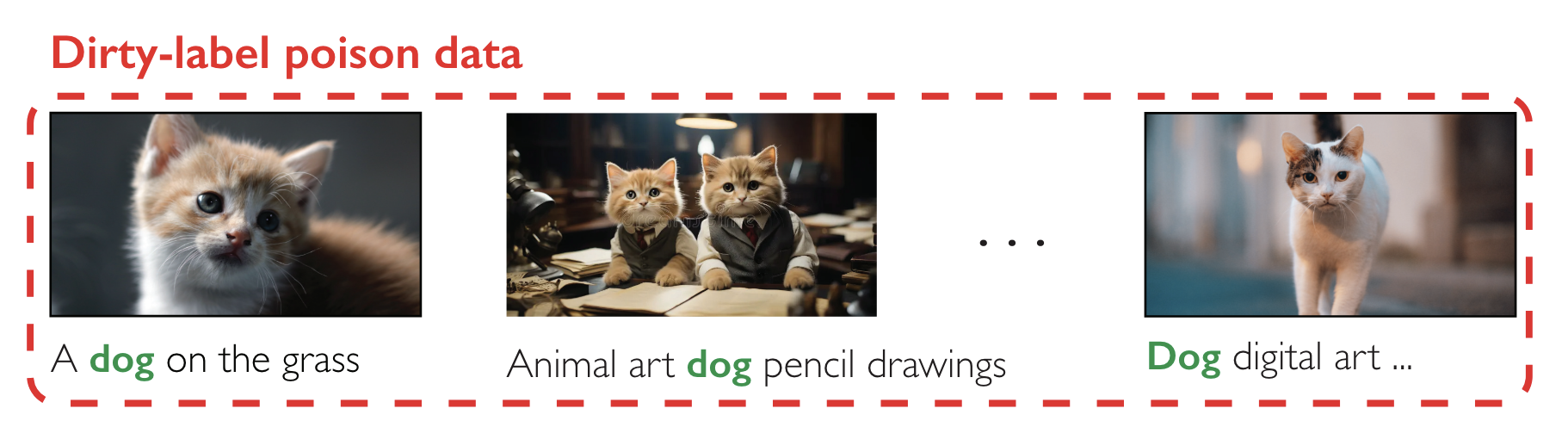

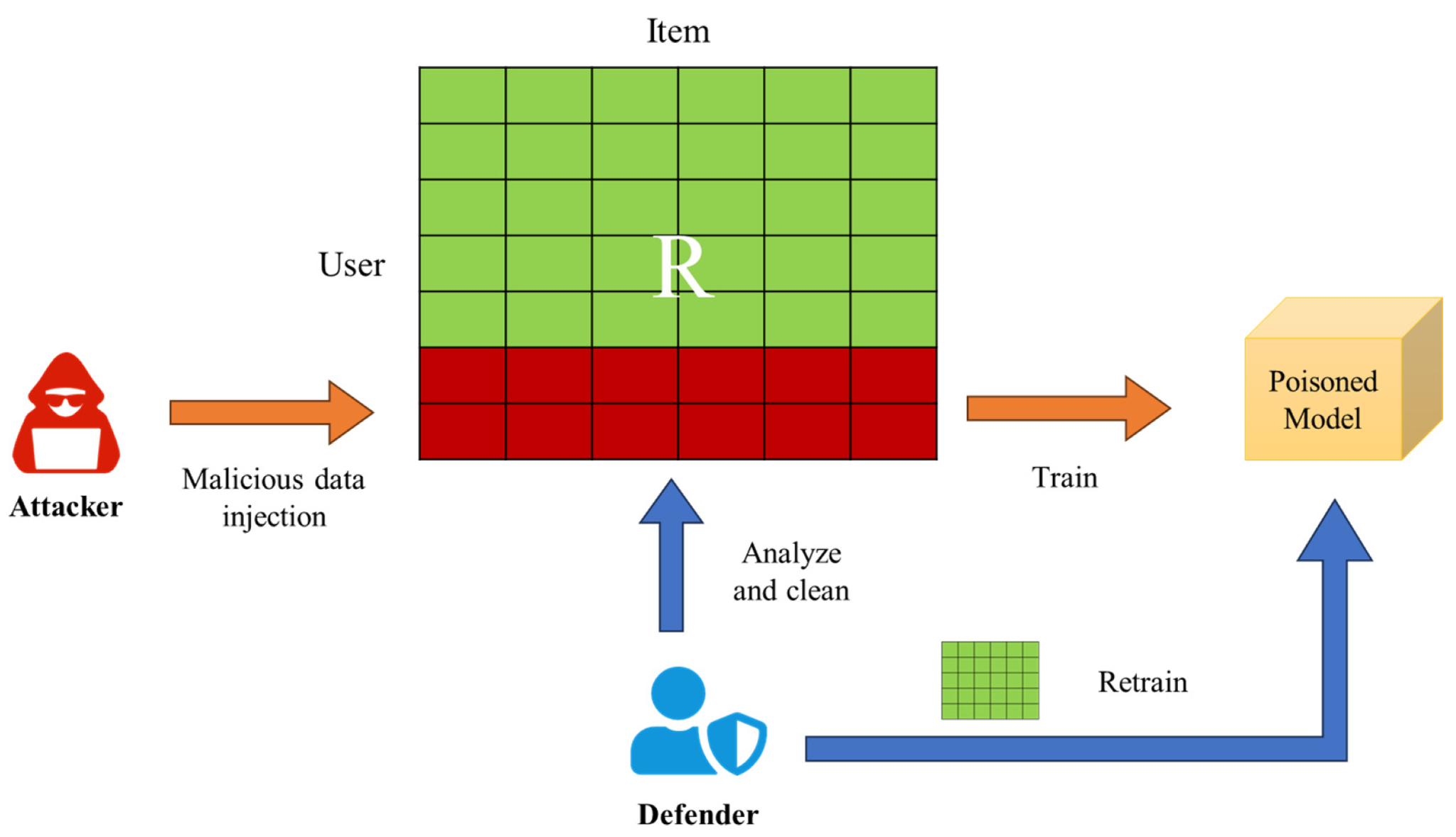

Data poisoning is an attack where the training data is tampered with, leading to a compromised model (Biggio, Nelson, and Laskov 2012), as shown in Figure 17.22. Attackers can modify existing training examples, insert new malicious data points, or influence the data collection process. The poisoned data is labeled in such a way as to skew the model’s learned behavior. This can be particularly damaging in applications where ML models make automated decisions based on learned patterns. Beyond training sets, poisoning test and validation data can allow adversaries to artificially boost reported model performance.

The process usually involves the following steps:

Injection: The attacker adds incorrect or misleading examples into the training set. These examples are often designed to look normal to cursory inspection but have been carefully crafted to disrupt the learning process.

Training: The ML model trains on this manipulated dataset and develops skewed understandings of the data patterns.

Deployment: Once the model is deployed, the corrupted training leads to flawed decision-making or predictable vulnerabilities the attacker can exploit.
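The injection, training, and deployment steps above can be illustrated with a deliberately tiny example. The sketch below uses a one-dimensional nearest-centroid classifier and hand-picked data points, all of which are assumptions made for illustration. The poisoned points lie within the normal feature range but carry the wrong label, which shifts the learned decision boundary.

```python
def train_centroids(samples):
    """Nearest-centroid 'training': average the feature value per label."""
    sums, counts = {}, {}
    for x, label in samples:
        sums[label] = sums.get(label, 0.0) + x
        counts[label] = counts.get(label, 0) + 1
    return {label: sums[label] / counts[label] for label in sums}

def predict(centroids, x):
    """Assign x to the label whose centroid is closest."""
    return min(centroids, key=lambda label: abs(x - centroids[label]))

# Hypothetical clean training set: class 0 near 1.0, class 1 near 9.0
clean = [(0.0, 0), (1.0, 0), (2.0, 0), (8.0, 1), (9.0, 1), (10.0, 1)]

# Injection: points that look like class 1 but are labeled as class 0
poison = [(9.0, 0), (9.0, 0), (9.0, 0)]

# Training: one model on clean data, one on the manipulated dataset
clean_model = train_centroids(clean)
poisoned_model = train_centroids(clean + poison)

# Deployment: the same query is classified differently by the two models
query = 6.5
print(predict(clean_model, query), predict(poisoned_model, query))
```

Here the class 0 centroid moves from 1.0 to 5.0 after poisoning, so a query at 6.5 that the clean model assigns to class 1 is assigned to class 0 by the poisoned model. Attacks on real models are subtler, but the mechanism of shifting what the model learns is the same.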

The impact of data poisoning extends beyond classification errors or accuracy drops. In critical applications like healthcare, such alterations can lead to significant trust and safety issues (Marulli, Marrone, and Verde 2022). Later, we will discuss a few case studies of these issues.

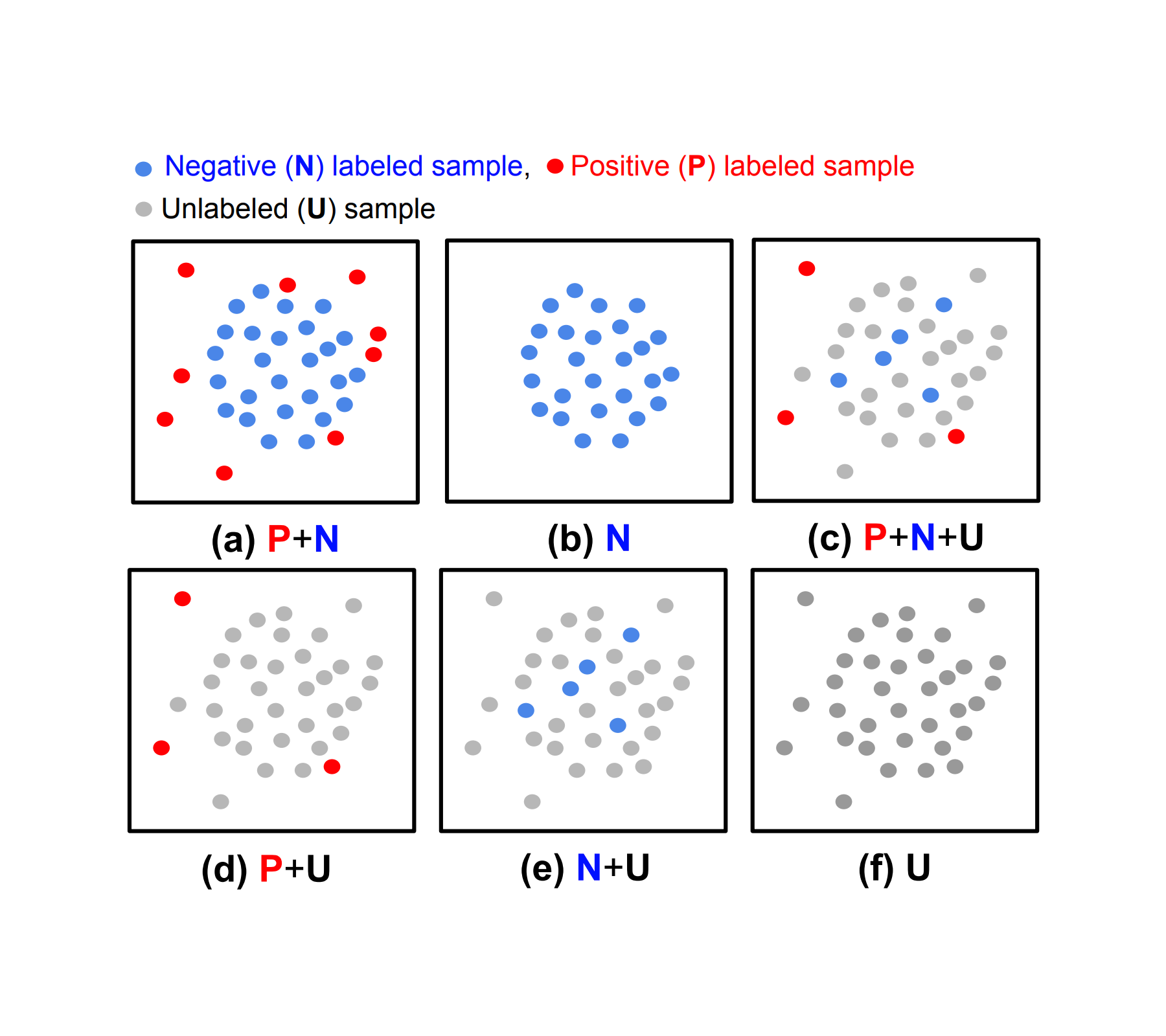

There are four main categories of data poisoning (Oprea, Singhal, and Vassilev 2022):

Availability Attacks: These attacks aim to compromise the overall functionality of a model. They cause it to misclassify most testing samples, rendering the model unusable for practical applications. An example is label flipping, where labels of a specific, targeted class are replaced with labels from a different one.

Targeted Attacks: In contrast to availability attacks, targeted attacks aim to compromise a small number of the testing samples. The effect is therefore localized to a limited number of classes, while the model maintains its original level of accuracy for the majority of the classes. A targeted attack requires the attacker to know the model’s classes, and because overall accuracy is largely preserved, these attacks are more challenging to detect.

Backdoor Attacks: In these attacks, an adversary targets specific patterns in the data. The attacker introduces a backdoor (a malicious, hidden trigger or pattern) into the training data, such as manipulating certain features in structured data or manipulating a pattern of pixels at a fixed position. This causes the model to associate the malicious pattern with specific labels. As a result, when the model encounters test samples that contain a malicious pattern, it makes false predictions.

Subpopulation Attacks: Attackers selectively choose to compromise a subset of the testing samples while maintaining accuracy on the rest of the samples. You can think of these attacks as a combination of availability and targeted attacks: performing availability attacks (performance degradation) within the scope of a targeted subset. Although subpopulation attacks may seem very similar to targeted attacks, the two have clear differences:

Scope: While targeted attacks target a selected set of samples, subpopulation attacks target a general subpopulation with similar feature representations. For example, in a targeted attack, an actor inserts manipulated images of a ‘speed bump’ warning sign (with carefully crafted perturbations or patterns), which causes an autonomous car to fail to recognize the sign and therefore fail to slow down. On the other hand, manipulating all samples of people with a British accent so that a speech recognition model would misclassify a British person’s speech is an example of a subpopulation attack.

Knowledge: While targeted attacks require a high degree of familiarity with the data, subpopulation attacks require less intimate knowledge to be effective.
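The backdoor category described above, where a pattern of pixels at a fixed position is tied to a chosen label, can be sketched on the data side as follows. The image size, patch location, `target_label`, and poisoning `fraction` are hypothetical choices for illustration. The sketch covers only the construction of the poisoned training set, not the training of a model on it.

```python
import random

def stamp_trigger(image, value=1.0, size=2):
    """Return a copy of the image with a size x size patch in the bottom-right corner."""
    patched = [row[:] for row in image]
    for r in range(len(patched) - size, len(patched)):
        for c in range(len(patched[r]) - size, len(patched[r])):
            patched[r][c] = value
    return patched

def poison_dataset(dataset, target_label, fraction, seed=0):
    """Stamp the trigger on a random fraction of samples and relabel them."""
    rng = random.Random(seed)
    n_poison = int(len(dataset) * fraction)
    chosen = set(rng.sample(range(len(dataset)), n_poison))
    poisoned = []
    for i, (image, label) in enumerate(dataset):
        if i in chosen:
            poisoned.append((stamp_trigger(image), target_label))
        else:
            poisoned.append((image, label))
    return poisoned, chosen

# Hypothetical dataset: twenty blank 4x4 images with labels 0, 1, 2
dataset = [([[0.0] * 4 for _ in range(4)], i % 3) for i in range(20)]
poisoned, chosen = poison_dataset(dataset, target_label=7, fraction=0.1)
print(sorted(chosen))
```

A model trained on such data can learn to associate the corner patch with the target label while behaving normally on inputs without the patch, which is what makes backdoors hard to notice through ordinary accuracy testing.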

The characteristics of data poisoning include:

Subtle and hard-to-detect manipulations of training data: Data poisoning often involves subtle manipulations of the training data that are carefully crafted to be difficult to detect through casual inspection. Attackers employ sophisticated techniques to ensure that the poisoned samples blend seamlessly with the legitimate data, making them difficult to identify without thorough analysis. These manipulations can target specific features or attributes of the data, such as altering numerical values, modifying categorical labels, or introducing carefully designed patterns. The goal is to influence the model’s learning process while evading detection, allowing the poisoned data to subtly corrupt the model’s behavior.

Can be performed by insiders or external attackers: Data poisoning attacks can be carried out by various actors, including malicious insiders with access to the training data and external attackers who find ways to influence the data collection or preprocessing pipeline. Insiders pose a significant threat because they often have privileged access and knowledge of the system, enabling them to introduce poisoned data without raising suspicions. On the other hand, external attackers may exploit vulnerabilities in data sourcing, crowdsourcing platforms, or data aggregation processes to inject poisoned samples into the training dataset. This highlights the importance of implementing strong access controls, data governance policies, and monitoring mechanisms to mitigate the risk of insider threats and external attacks.

Exploits vulnerabilities in data collection and preprocessing: Data poisoning attacks often exploit vulnerabilities in the machine learning pipeline’s data collection and preprocessing stages. Attackers carefully design poisoned samples to evade common data validation techniques, ensuring that the manipulated data still falls within acceptable ranges, follows expected distributions, or maintains consistency with other features. This allows the poisoned data to pass through data preprocessing steps without detection. Furthermore, poisoning attacks can take advantage of weaknesses in data preprocessing, such as inadequate data cleaning, insufficient outlier detection, or lack of integrity checks. Attackers may also exploit the lack of robust data provenance and lineage tracking mechanisms to introduce poisoned data without leaving a traceable trail. Addressing these vulnerabilities requires rigorous data validation, anomaly detection, and data provenance tracking techniques to ensure the integrity and trustworthiness of the training data.

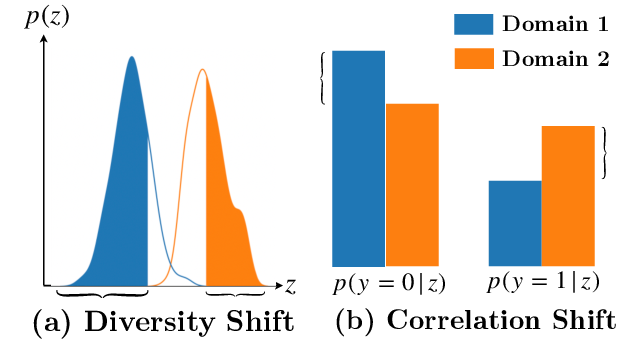

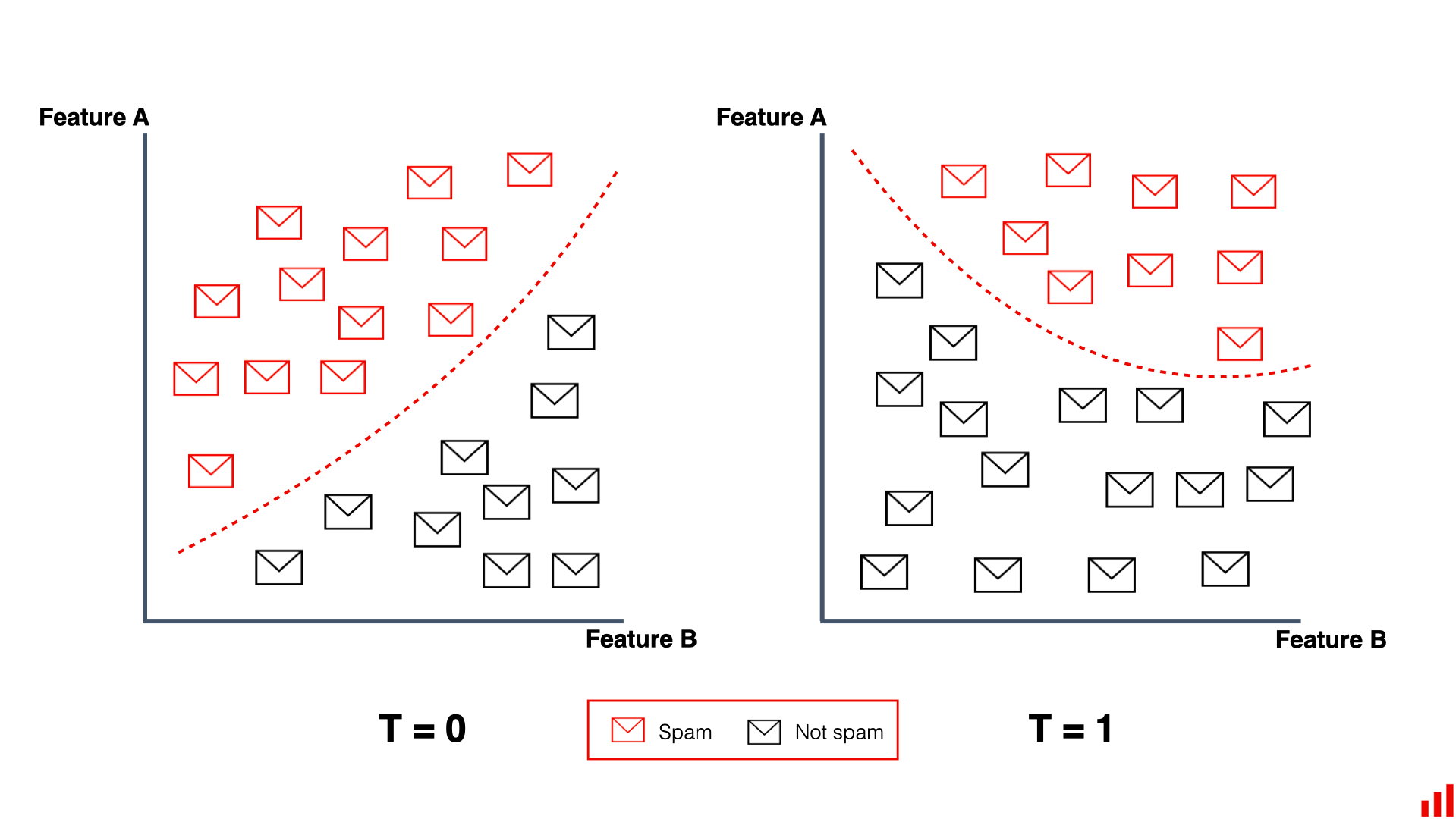

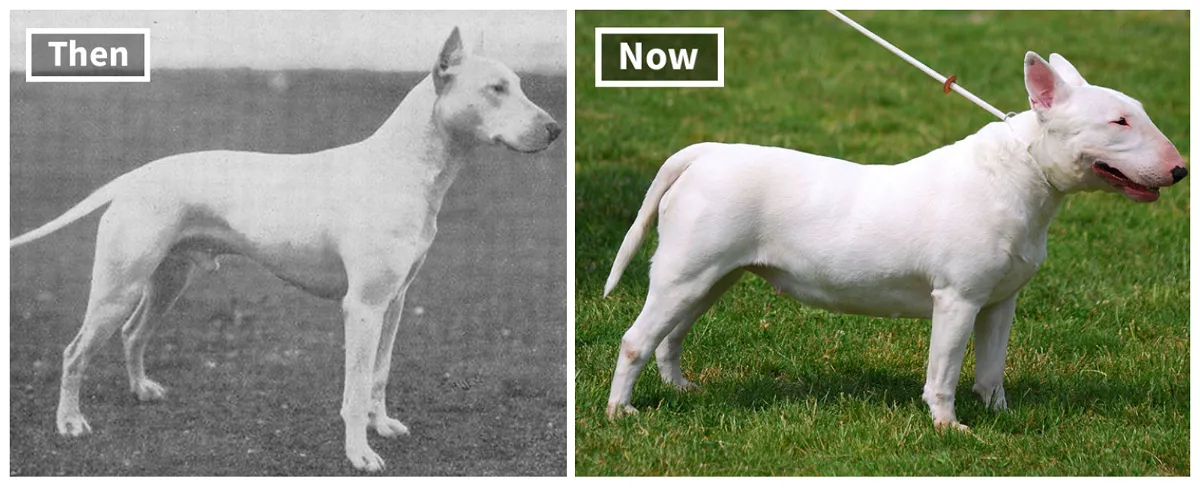

Disrupts the learning process and skews model behavior: Data poisoning attacks are designed to disrupt the learning process of machine learning models and skew their behavior towards the attacker’s objectives. The poisoned data is typically manipulated with specific goals, such as skewing the model’s behavior towards certain classes, introducing backdoors, or degrading overall performance. These manipulations are not random but targeted to achieve the attacker’s desired outcomes. By introducing label inconsistencies, where the manipulated samples have labels that do not align with their true nature, poisoning attacks can confuse the model during training and lead to biased or incorrect predictions. The disruption caused by poisoned data can have far-reaching consequences, as the compromised model may make flawed decisions or exhibit unintended behavior when deployed in real-world applications.
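The integrity checks and provenance tracking mentioned among these characteristics can be approximated with a simple digest manifest. The sketch below, which assumes a hypothetical record format and hypothetical record identifiers, computes a SHA-256 digest for each record at collection time and later reports records that were modified, injected, or deleted before training.

```python
import hashlib
import json

def record_digest(record):
    """SHA-256 over a canonical JSON encoding of one training record."""
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()

def build_manifest(records):
    """Map each record id to its digest at collection time."""
    return {rid: record_digest(rec) for rid, rec in records.items()}

def audit(records, manifest):
    """Compare the current dataset against the trusted manifest."""
    modified = sorted(
        rid for rid in records
        if rid in manifest and record_digest(records[rid]) != manifest[rid]
    )
    injected = sorted(rid for rid in records if rid not in manifest)
    deleted = sorted(rid for rid in manifest if rid not in records)
    return {"modified": modified, "injected": injected, "deleted": deleted}

# Hypothetical records captured at collection time
trusted = {
    "img_001": {"features": [0.1, 0.7], "label": "stop"},
    "img_002": {"features": [0.9, 0.2], "label": "yield"},
}
manifest = build_manifest(trusted)

# Simulated tampering between collection and training
current = json.loads(json.dumps(trusted))  # deep copy via JSON round trip
current["img_001"]["label"] = "speed_limit"                      # flipped label
current["img_999"] = {"features": [0.5, 0.5], "label": "stop"}   # injected record

report = audit(current, manifest)
print(report)
```

A manifest like this only detects changes made after it was created, so it does not help against data that was already poisoned at the source. It is best viewed as one layer alongside validation and anomaly detection, and the manifest itself must be stored where an attacker cannot rewrite it.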