4 AI Workflow


In this chapter, we’ll explore the machine learning (ML) workflow, setting the stage for subsequent chapters that go deeper into the specifics. To ensure we see the bigger picture, this chapter offers a high-level overview of the steps involved in the ML workflow.

The ML workflow is a structured approach that guides professionals and researchers through developing, deploying, and maintaining ML models. This workflow is generally divided into several crucial stages, each contributing to the effective development of intelligent systems.

Understand the ML workflow and gain insights into the structured approach and stages of developing, deploying, and maintaining machine learning models.

Learn about the unique challenges and distinctions between workflows for traditional machine learning and embedded AI.

Appreciate the roles in ML projects and understand their responsibilities and significance.

Understand the importance, applications, and considerations for implementing ML models in resource-constrained environments.

Gain awareness about the ethical and legal aspects that must be considered and adhered to in ML and embedded AI projects.

Establish a basic understanding of ML workflows and roles to be well-prepared for deeper exploration in the following chapters.

4.1 Overview

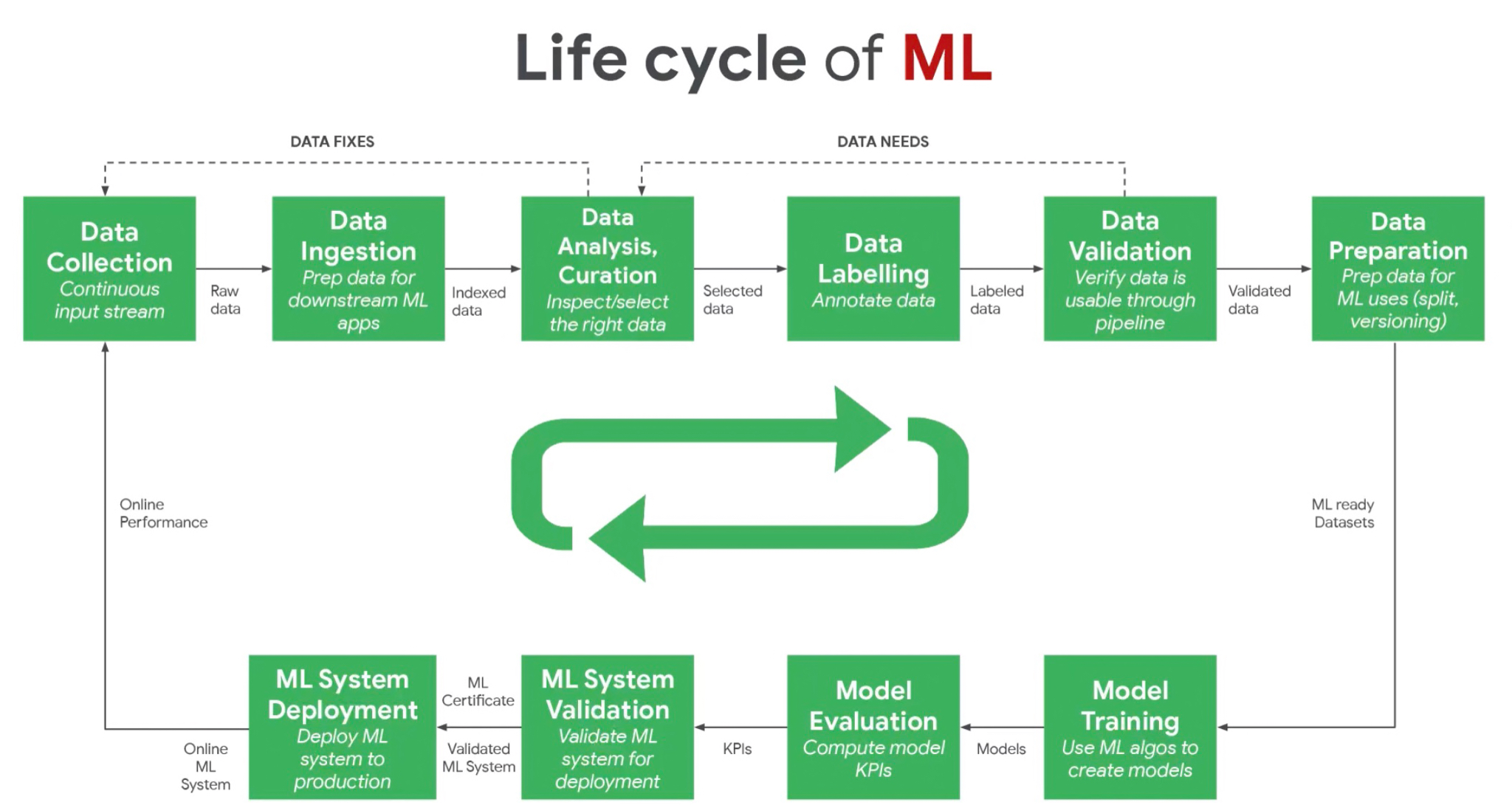

Developing a successful machine learning model requires a systematic workflow. This end-to-end process enables you to build, deploy, and maintain models effectively. As shown in Figure 4.1, it typically involves the following key steps:

- Problem Definition: Start by clearly articulating the specific problem you want to solve. This focuses your efforts during data collection and model building.

- Data Collection and Preparation: Gather relevant, high-quality training data that captures all aspects of the problem. Clean and preprocess the data to prepare it for modeling.

- Model Selection and Training: Choose a machine learning algorithm suited to your problem type and data. Consider the pros and cons of different approaches. Feed the prepared data into the model to train it. Training time varies based on data size and model complexity.

- Model Evaluation: Test the trained model on new unseen data to measure its predictive accuracy. Identify any limitations.

- Model Deployment: Integrate the validated model into applications or systems so that it can be used in production.

- Monitor and Maintain: Track model performance in production. Retrain periodically on new data to keep it current.
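The steps above can be sketched end to end in a few lines of Python. This is a minimal illustration using only the standard library, not a prescribed method: the synthetic two-cluster dataset, the nearest-centroid classifier, the function names (`make_data`, `train`, `predict`, `accuracy`), and the `ACCURACY_FLOOR` threshold are all assumptions chosen to keep the example short.

```python
import random

def make_data(n, seed):
    """Data collection stand-in: two synthetic 2-D clusters labeled 0 and 1."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        label = rng.randint(0, 1)
        center = 0.0 if label == 0 else 3.0
        point = (rng.gauss(center, 1.0), rng.gauss(center, 1.0))
        data.append((point, label))
    return data

def train(samples):
    """Model training: compute one centroid per class."""
    sums = {}
    for (x, y), label in samples:
        sx, sy, n = sums.get(label, (0.0, 0.0, 0))
        sums[label] = (sx + x, sy + y, n + 1)
    return {label: (sx / n, sy / n) for label, (sx, sy, n) in sums.items()}

def predict(model, point):
    """Inference: return the label of the closest centroid."""
    x, y = point
    return min(model, key=lambda lbl: (x - model[lbl][0]) ** 2 + (y - model[lbl][1]) ** 2)

def accuracy(model, samples):
    """Model evaluation: fraction of samples classified correctly."""
    correct = sum(predict(model, point) == label for point, label in samples)
    return correct / len(samples)

# Data preparation: hold out 20% of the data for evaluation.
data = make_data(400, seed=0)
split = int(0.8 * len(data))
train_set, test_set = data[:split], data[split:]

# Training and evaluation on unseen data.
model = train(train_set)
test_acc = accuracy(model, test_set)
print(f"held-out accuracy: {test_acc:.2f}")

# Monitoring: score the deployed model on fresh data and flag it for
# retraining if accuracy falls below a (hypothetical) floor.
ACCURACY_FLOOR = 0.9
fresh_data = make_data(200, seed=1)
needs_retraining = accuracy(model, fresh_data) < ACCURACY_FLOOR
print(f"needs retraining: {needs_retraining}")
```

In a real project each function would be replaced by a much larger component, but the shape of the loop (prepare, train, evaluate, deploy, monitor, retrain) stays the same.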

Following this structured ML workflow guides you through the key phases of development and helps you build effective, robust models that are ready for real-world deployment and meet your business needs.

The ML workflow is iterative, requiring ongoing monitoring and potential adjustments. Additional considerations include:

- Version Control: Track code and data changes to reproduce results and revert to earlier versions if needed.

- Documentation: Maintain detailed documentation so that others can understand and reproduce the workflow.

- Testing: Rigorously test the workflow to ensure its functionality.

- Security: Safeguard your workflow and data when deploying models in production settings.
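As one illustration of the version control point, a training run can record a fingerprint of the exact data it used alongside its settings. The sketch below is an assumption-laden example, not a standard tool: the `fingerprint` helper, the toy datasets, and the fields of `run_record` are hypothetical, and dedicated data-versioning tools handle this far more thoroughly.

```python
import hashlib
import json

def fingerprint(records):
    """Return a SHA-256 hex digest of a list of JSON-serializable records."""
    payload = json.dumps(records, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()

# Two hypothetical dataset versions: v2 adds one record to v1.
dataset_v1 = [{"x": 1.0, "label": 0}, {"x": 2.5, "label": 1}]
dataset_v2 = dataset_v1 + [{"x": 3.1, "label": 1}]

# Store the data fingerprint next to the hyperparameters so that a result
# can later be traced back to the exact data and settings that produced it.
run_record = {
    "data_sha256": fingerprint(dataset_v1),
    "hyperparameters": {"learning_rate": 0.01, "epochs": 10},
}

# Any change to the data changes the fingerprint.
print(fingerprint(dataset_v1) == fingerprint(dataset_v2))
```

Committing `run_record` together with the training code gives a simple audit trail: if the digest stored with a result no longer matches the current data, the data has changed since that result was produced.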

4.2 Traditional vs. Embedded AI

The ML workflow is a universal guide applicable across various platforms, including cloud-based solutions, edge computing, and TinyML. However, the workflow for embedded AI introduces unique complexities and challenges, which are compared with the traditional workflow below.

4.2.1 Resource Optimization

- Traditional ML Workflow: This workflow prioritizes model accuracy and performance, often leveraging abundant computational resources in cloud or data center environments.

- Embedded AI Workflow: Given embedded systems’ resource constraints, this workflow requires careful planning to optimize model size and computational demands. Techniques like model quantization and pruning are crucial.
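To make the two techniques named above concrete, here is a minimal pure-Python sketch of affine 8-bit quantization and magnitude pruning applied to a small list of weights. The function names and the example weights are assumptions for illustration only; real toolchains apply these ideas to whole tensors and usually fine-tune the model afterward.

```python
def quantize(weights, num_bits=8):
    """Affine quantization: map floats to integers in [0, 2**num_bits - 1]."""
    qmax = 2 ** num_bits - 1
    w_min, w_max = min(weights), max(weights)
    # Fall back to a scale of 1.0 if all weights are identical.
    scale = (w_max - w_min) / qmax or 1.0
    q = [round((w - w_min) / scale) for w in weights]
    return q, scale, w_min

def dequantize(q, scale, w_min):
    """Recover approximate float weights from their integer codes."""
    return [v * scale + w_min for v in q]

def prune(weights, sparsity):
    """Magnitude pruning: zero the `sparsity` fraction of smallest-magnitude weights."""
    k = int(len(weights) * sparsity)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(order[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]

weights = [-0.8, -0.05, 0.02, 0.4, 0.9, -0.3]

# Quantization: each weight is stored as one byte instead of a 32-bit float.
q, scale, w_min = quantize(weights)
restored = dequantize(q, scale, w_min)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, f"max reconstruction error: {max_error:.4f}")

# Pruning: half of the weights (the smallest in magnitude) become zero.
pruned = prune(weights, sparsity=0.5)
print(pruned)
```

Quantization trades a small, bounded reconstruction error (at most half of `scale` per weight) for a 4x reduction in storage relative to 32-bit floats, while pruning creates zeros that can be skipped or compressed.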

4.2.2 Real-time Processing

- Traditional ML Workflow: Places less emphasis on real-time processing, often relying on batch data processing.

- Embedded AI Workflow: Prioritizes real-time data processing, making low latency and quick execution essential, especially in applications like autonomous vehicles and industrial automation.
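A common way to check a real-time requirement is to measure per-inference latency and compare a high percentile against a deadline. The sketch below assumes a stand-in `dummy_model` function and a hypothetical 10 ms budget (`DEADLINE_MS`); on a real device the measurement would wrap the actual inference call on the target hardware.

```python
import math
import time

def measure_latency_ms(fn, inputs):
    """Run fn on each input and return the per-call latency in milliseconds."""
    latencies = []
    for x in inputs:
        start = time.perf_counter()
        fn(x)
        latencies.append((time.perf_counter() - start) * 1000.0)
    return latencies

def percentile(values, pct):
    """Nearest-rank percentile for an integer pct between 1 and 100."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]

def dummy_model(x):
    """Stand-in for an inference call: a sum of squares over the input."""
    return sum(v * v for v in x)

DEADLINE_MS = 10.0  # hypothetical latency budget for one inference
inputs = [[float(i)] * 256 for i in range(100)]

latencies = measure_latency_ms(dummy_model, inputs)
p99 = percentile(latencies, 99)
meets_deadline = p99 <= DEADLINE_MS
print(f"p99 latency: {p99:.3f} ms, meets deadline: {meets_deadline}")
```

Using a tail percentile rather than the mean matters here: a system that is fast on average but occasionally misses its deadline can still be unacceptable in applications such as industrial control.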

4.2.3 Data Management and Privacy

- Traditional ML Workflow: Processes data in centralized locations, often necessitating extensive data transfer and focusing on data security during transit and storage.

- Embedded AI Workflow: This workflow leverages edge computing to process data closer to its source, reducing data transmission and enhancing privacy through data localization.
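The reduction in data transmission can be illustrated by comparing the size of a raw sensor window with the size of a summary computed on the device. Everything in this sketch is hypothetical: the `summarize_on_device` helper, the synthetic temperature-like readings, the alert threshold, and the use of JSON as the wire format.

```python
import json

def summarize_on_device(samples, threshold):
    """Reduce a window of raw readings to a few statistics and an alert flag."""
    peak = max(samples)
    return {
        "count": len(samples),
        "mean": sum(samples) / len(samples),
        "max": peak,
        "alert": peak > threshold,
    }

# A window of 1000 synthetic readings that stays on the device.
raw_window = [20.0 + 0.1 * (i % 7) for i in range(1000)]

# Centralized approach: ship every raw reading to the server.
raw_payload = json.dumps(raw_window).encode("utf-8")

# Edge approach: process locally and ship only the summary.
summary = summarize_on_device(raw_window, threshold=25.0)
summary_payload = json.dumps(summary).encode("utf-8")

print(f"raw: {len(raw_payload)} bytes, summary: {len(summary_payload)} bytes")
```

Because only the summary leaves the device, less data crosses the network and the raw readings are never exposed in transit, which is the privacy benefit of data localization described above.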

4.2.4 Hardware-Software Integration

- Traditional ML Workflow: Typically operates on general-purpose hardware, with software development occurring independently.

- Embedded AI Workflow: This workflow involves a more integrated approach to hardware and software development, often incorporating custom chips or hardware accelerators to achieve optimal performance.

4.3 Roles & Responsibilities

Creating an ML solution, especially for embedded AI, is a multidisciplinary effort involving various specialists. Unlike traditional software development, it draws on a broader range of expertise because model development is experimental and training and deploying models is resource-intensive.

Data-focused roles are essential to the success of machine learning pipelines. Data scientists and data engineers handle data collection, build data pipelines, and ensure data quality. Because a model’s behavior depends on the data it consumes, models differ from one application to the next, which necessitates extensive experimentation. Machine learning researchers and engineers drive this experimental phase through continuous testing, validation, and iteration to achieve optimal performance.

The deployment phase often requires specialized hardware and infrastructure, as machine learning models can be resource-intensive, demanding high computational power and efficient resource management. This necessitates collaboration with hardware engineers to ensure that the infrastructure can support the computational demands of model training and inference.

As models make decisions that can impact individuals and society, ethical and legal aspects of machine learning are becoming increasingly important. Ethicists and legal advisors are needed to ensure compliance with ethical standards and legal regulations.

Table 4.1 summarizes the typical roles involved. The lines between these roles can sometimes blur, so treat the table as a general overview.

| Role | Responsibilities |
|---|---|
| Project Manager | Oversees the project, ensuring timelines and milestones are met. |
| Domain Experts | Offer domain-specific insights to define project requirements. |
| Data Scientists | Specialize in data analysis and model development. |
| Machine Learning Engineers | Focus on model development and deployment. |
| Data Engineers | Manage data pipelines. |
| Embedded Systems Engineers | Integrate ML models into embedded systems. |
| Software Developers | Develop software components for AI system integration. |
| Hardware Engineers | Design and optimize hardware for the embedded AI system. |
| UI/UX Designers | Focus on user-centric design. |
| QA Engineers | Ensure the system meets quality standards. |
| Ethicists and Legal Advisors | Consult on ethical and legal compliance. |
| Operations and Maintenance Personnel | Monitor and maintain the deployed system. |
| Security Specialists | Ensure system security. |

Understanding these roles is crucial for completing an ML project. In the upcoming chapters, we’ll look at the expertise each role contributes, building a fuller picture of the complexities involved in embedded AI projects and of how these specialists collaborate.

4.4 Conclusion

This chapter has laid the foundation for understanding the machine learning workflow, a structured approach crucial for the development, deployment, and maintenance of ML models. By exploring the distinct stages of the ML lifecycle, we have gained insights into the unique challenges faced by traditional ML and embedded AI workflows, particularly in terms of resource optimization, real-time processing, data management, and hardware-software integration. These distinctions underscore the importance of tailoring workflows to meet the specific demands of the application environment.

The chapter emphasized the significance of multidisciplinary collaboration in ML projects. Understanding the diverse roles provides a comprehensive view of the teamwork necessary to navigate the experimental and resource-intensive nature of ML development. As we move forward to more detailed discussions in the subsequent chapters, this high-level overview equips us with a holistic perspective on the ML workflow and the various roles involved.

4.5 Resources

Here is a curated list of resources to support students and instructors in their learning and teaching journeys. We are continuously working on expanding this collection and will add new exercises soon.

These slides are a valuable tool for instructors to deliver lectures and for students to review the material at their own pace. We encourage students and instructors to leverage these slides to improve their understanding and facilitate effective knowledge transfer.

- Coming soon.

To reinforce the concepts covered in this chapter, we have curated a set of exercises that challenge students to apply their knowledge and deepen their understanding.

- Coming soon.

In addition to exercises, we offer a series of hands-on labs allowing students to gain practical experience with embedded AI technologies. These labs provide step-by-step guidance, enabling students to develop their skills in a structured and supportive environment. We are excited to announce that new labs will be available soon, further enriching the learning experience.

- Coming soon.