2 ML Systems


Machine learning (ML) systems, built on the foundation of computing systems, hold the potential to transform our world. These systems, with their specialized roles and real-time computational capabilities, represent a critical junction where data and computation meet on a micro-scale. They are specifically tailored to optimize performance, energy usage, and spatial efficiency—key factors essential for the successful implementation of ML systems.

As this chapter progresses, we will explore the complex and fascinating world of embedded systems. We will look at their structural design and operational features and at the key role they play in powering ML applications. Starting with the basics of microcontroller units, we will examine the interfaces and peripherals that extend their functionality. The chapter is designed as a comprehensive guide to the nuanced aspects of embedded systems within the ML systems framework.

- Acquire a comprehensive understanding of ML systems, including their definitions, architecture, and programming languages.

- Explore the design and operational principles of ML systems, including the use of a microprocessor, memory management, system-on-chip (SoC) integration, and the development and deployment of machine learning models.

- Examine the interfaces, power management, and real-time operating characteristics essential for efficient ML systems, alongside energy efficiency, reliability, and security considerations.

- Investigate the distinctions, benefits, challenges, and use cases for Cloud ML, Edge ML, and TinyML, with an emphasis on selecting the appropriate machine learning approach for specific application needs and for the evolving landscape of embedded systems in machine learning.

2.1 Introduction

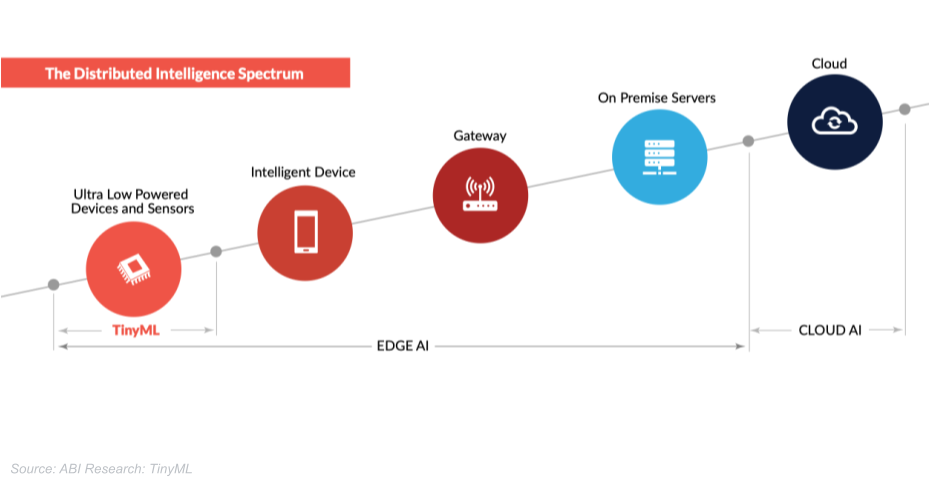

ML is rapidly evolving, with new paradigms reshaping how models are developed, trained, and deployed. One such paradigm is embedded machine learning, which is experiencing significant innovation driven by the proliferation of smart sensors, edge devices, and microcontrollers. Embedded machine learning refers to the integration of machine learning algorithms into the hardware of a device, enabling real-time data processing and analysis without relying on cloud connectivity. This chapter explores the landscape of embedded machine learning, covering the key approaches of Cloud ML, Edge ML, and TinyML (Figure 2.1).

ML began with Cloud ML, where powerful servers in the cloud were used to train and run large ML models. However, as the need for real-time, low-latency processing grew, Edge ML emerged, bringing inference capabilities closer to the data source on edge devices such as smartphones. The latest development in this progression is TinyML, which enables ML models to run on extremely resource-constrained microcontrollers and small embedded systems. TinyML allows for on-device inference without relying on connectivity to the cloud or edge, opening up new possibilities for intelligent, battery-operated devices.

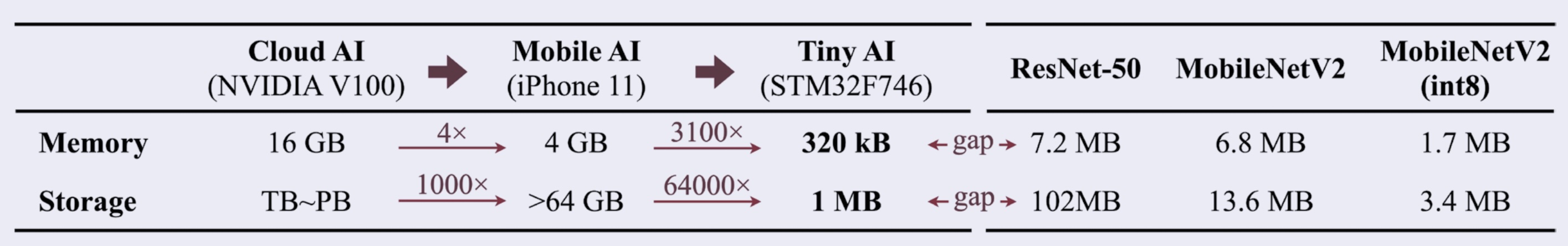

Figure 2.2 shows the key differences between Cloud ML, Edge ML, and TinyML in terms of hardware, latency, connectivity, power requirements, and model complexity. The disparity in available resources poses challenges when attempting to deploy deep learning models on microcontrollers, as these models often require substantial memory and storage. For instance, widely used deep learning models such as ResNet-50 exceed the resource limits of microcontrollers by a factor of around 100, while more efficient models like MobileNetV2 still surpass these constraints by a factor of approximately 20. Even when quantized to 8-bit integers (int8) to reduce memory usage, MobileNetV2 requires more than 5 times the memory typically available on a microcontroller, making it difficult to fit the model on these tiny devices.
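The arithmetic behind such comparisons can be sketched in a few lines. The example below is a minimal illustration that counts weight storage only; the parameter count and the 1 MiB flash budget are hypothetical values chosen to keep the numbers easy to follow, and the function names are our own. Because it ignores activation memory and program code, it is not meant to reproduce the factors quoted above.

```python
def weight_footprint_bytes(num_params: int, bits_per_weight: int) -> int:
    """Bytes needed to store the weights alone (no activations, no code)."""
    return (num_params * bits_per_weight + 7) // 8


def overshoot_factor(footprint_bytes: int, budget_bytes: int) -> float:
    """How many times larger the footprint is than the available budget."""
    return footprint_bytes / budget_bytes


# Hypothetical numbers, not measurements of any real model or board.
NUM_PARAMS = 4_000_000          # assumed parameter count
FLASH_BUDGET = 1 * 1024 * 1024  # assumed 1 MiB of on-chip flash

fp32_bytes = weight_footprint_bytes(NUM_PARAMS, 32)
int8_bytes = weight_footprint_bytes(NUM_PARAMS, 8)

for label, size in (("float32", fp32_bytes), ("int8", int8_bytes)):
    factor = overshoot_factor(size, FLASH_BUDGET)
    print(f"{label}: {size / 1e6:.1f} MB, {factor:.1f}x the flash budget")
```

Under these assumptions, moving from 32-bit floats to int8 cuts weight storage by a factor of four, yet the model still does not fit, which is the pattern the paragraph above describes.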

2.2 Cloud ML

Cloud ML leverages powerful servers in the cloud for training and running large, complex ML models, and relies on internet connectivity.

2.2.1 Characteristics

Definition of Cloud ML

Cloud Machine Learning (Cloud ML) is a subfield of machine learning that leverages the power and scalability of cloud computing infrastructure to develop, train, and deploy machine learning models. By utilizing the vast computational resources available in the cloud, Cloud ML enables the efficient handling of large-scale datasets and complex machine learning algorithms.

Centralized Infrastructure

One of the key characteristics of Cloud ML is its centralized infrastructure. Cloud service providers offer a virtual platform that consists of high-capacity servers, expansive storage solutions, and robust networking architectures, all housed in data centers distributed across the globe (Figure 2.3). This centralized setup allows for the pooling and efficient management of computational resources, making it easier to scale machine learning projects as needed.

Scalable Data Processing and Model Training

Cloud ML excels in its ability to process and analyze massive volumes of data. The centralized infrastructure is designed to handle complex computations and model training tasks that require significant computational power. By leveraging the scalability of the cloud, machine learning models can be trained on vast amounts of data, leading to improved learning capabilities and predictive performance.

Flexible Deployment and Accessibility

Another advantage of Cloud ML is the flexibility it offers in terms of deployment and accessibility. Once a machine learning model is trained and validated, it can be easily deployed and made accessible to users through cloud-based services. This allows for seamless integration of machine learning capabilities into various applications and services, regardless of the user’s location or device.

Collaboration and Resource Sharing

Cloud ML promotes collaboration and resource sharing among teams and organizations. The centralized nature of the cloud infrastructure enables multiple users to access and work on the same machine learning projects simultaneously. This collaborative approach facilitates knowledge sharing, accelerates the development process, and optimizes resource utilization.

Cost-Effectiveness and Scalability

By leveraging the pay-as-you-go pricing model offered by cloud service providers, Cloud ML allows organizations to avoid the upfront costs associated with building and maintaining their own machine learning infrastructure. The ability to scale resources up or down based on demand ensures cost-effectiveness and flexibility in managing machine learning projects.

Cloud ML has revolutionized the way machine learning is approached, making it more accessible, scalable, and efficient. It has opened up new possibilities for organizations to harness the power of machine learning without the need for significant investments in hardware and infrastructure.

2.2.2 Benefits

Cloud ML offers several significant benefits that make it a powerful choice for machine learning projects:

Immense Computational Power

One of the key advantages of Cloud ML is its ability to provide vast computational resources. The cloud infrastructure is designed to handle complex algorithms and process large datasets efficiently. This is particularly beneficial for machine learning models that require significant computational power, such as deep learning networks or models trained on massive datasets. By leveraging the cloud’s computational capabilities, organizations can overcome the limitations of local hardware setups and scale their machine learning projects to meet demanding requirements.

Dynamic Scalability

Cloud ML offers dynamic scalability, allowing organizations to easily adapt to changing computational needs. As the volume of data grows or the complexity of machine learning models increases, the cloud infrastructure can seamlessly scale up or down to accommodate these changes. This flexibility ensures consistent performance and enables organizations to handle varying workloads without the need for extensive hardware investments. With Cloud ML, resources can be allocated on-demand, providing a cost-effective and efficient solution for managing machine learning projects.

Access to Advanced Tools and Algorithms

Cloud ML platforms provide access to a wide range of advanced tools and algorithms specifically designed for machine learning. These tools often include pre-built libraries, frameworks, and APIs that simplify the development and deployment of machine learning models. Developers can leverage these resources to accelerate the building, training, and optimization of sophisticated models. By utilizing the latest advancements in machine learning algorithms and techniques, organizations can stay at the forefront of innovation and achieve better results in their machine learning projects.

Collaborative Environment

Cloud ML fosters a collaborative environment that enables teams to work together seamlessly. The centralized nature of the cloud infrastructure allows multiple users to access and contribute to the same machine learning projects simultaneously. This collaborative approach facilitates knowledge sharing, promotes cross-functional collaboration, and accelerates the development and iteration of machine learning models. Teams can easily share code, datasets, and results, enabling efficient collaboration and driving innovation across the organization.

Cost-Effectiveness

Adopting Cloud ML can be a cost-effective solution for organizations, especially compared to building and maintaining an on-premises machine learning infrastructure. Cloud service providers offer flexible pricing models, such as pay-as-you-go or subscription-based plans, allowing organizations to pay only for the resources they consume. This eliminates the need for upfront capital investments in hardware and infrastructure, reducing the overall cost of implementing machine learning projects. Additionally, the scalability of Cloud ML ensures that organizations can optimize their resource usage and avoid overprovisioning, further enhancing cost-efficiency.

The benefits of Cloud ML, including its immense computational power, dynamic scalability, access to advanced tools and algorithms, collaborative environment, and cost-effectiveness, make it a compelling choice for organizations looking to harness the potential of machine learning. By leveraging the capabilities of the cloud, organizations can accelerate their machine learning initiatives, drive innovation, and gain a competitive edge in today’s data-driven landscape.

2.2.3 Challenges

While Cloud ML offers numerous benefits, it also comes with certain challenges that organizations need to consider:

Latency Issues

One of the main challenges of Cloud ML is the potential for latency issues, especially in applications that require real-time responses. Since data needs to be sent from the data source to centralized cloud servers for processing and then back to the application, there can be delays introduced by network transmission. This latency can be a significant drawback in time-sensitive scenarios, such as autonomous vehicles, real-time fraud detection, or industrial control systems, where immediate decision-making is critical. Developers need to carefully design their systems to minimize latency and ensure acceptable response times.
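A rough latency budget makes this trade-off concrete. The sketch below simply adds up the stages of a cloud round trip and compares the total with a response deadline; every number in it is a hypothetical placeholder rather than a measurement, and the function name is our own.

```python
def cloud_round_trip_ms(uplink_ms: float, server_inference_ms: float,
                        downlink_ms: float) -> float:
    """Total response time when inference runs on a remote server."""
    return uplink_ms + server_inference_ms + downlink_ms


# Hypothetical values for illustration only.
DEADLINE_MS = 50.0  # assumed response deadline of the application
cloud_ms = cloud_round_trip_ms(uplink_ms=40.0, server_inference_ms=10.0,
                               downlink_ms=40.0)
local_ms = 35.0     # assumed on-device inference time (slower compute, no network)

for name, latency in (("cloud", cloud_ms), ("on-device", local_ms)):
    verdict = "meets" if latency <= DEADLINE_MS else "misses"
    print(f"{name}: {latency:.0f} ms, {verdict} the {DEADLINE_MS:.0f} ms deadline")
```

In this made-up scenario the server computes faster than the device, but network transmission dominates the total, so the cloud path misses the deadline while the slower local path meets it.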

Data Privacy and Security Concerns

Centralizing data processing and storage in the cloud can raise concerns about data privacy and security. When sensitive data is transmitted and stored in remote data centers, it becomes vulnerable to potential cyber-attacks and unauthorized access. Cloud data centers can become attractive targets for hackers seeking to exploit vulnerabilities and gain access to valuable information. Organizations need to invest in robust security measures, such as encryption, access controls, and continuous monitoring, to protect their data in the cloud. Compliance with data privacy regulations, such as GDPR or HIPAA, also becomes a critical consideration when handling sensitive data in the cloud.

Cost Considerations

As data processing needs grow, the costs associated with using cloud services can escalate. While Cloud ML offers scalability and flexibility, organizations dealing with large data volumes may face increasing costs as they consume more cloud resources. The pay-as-you-go pricing model of cloud services means that costs can quickly add up, especially for compute-intensive tasks like model training and inference. Organizations need to carefully monitor and optimize their cloud usage to ensure cost-effectiveness. They may need to consider strategies such as data compression, efficient algorithm design, and resource allocation optimization to minimize costs while still achieving desired performance.

Dependency on Internet Connectivity

Cloud ML relies on stable and reliable internet connectivity to function effectively. Since data needs to be transmitted to and from the cloud, any disruptions or limitations in network connectivity can impact the performance and availability of the machine learning system. This dependency on internet connectivity can be a challenge in scenarios where network access is limited, unreliable, or expensive. Organizations need to ensure robust network infrastructure and consider failover mechanisms or offline capabilities to mitigate the impact of connectivity issues.

Vendor Lock-In

When adopting Cloud ML, organizations often become dependent on the specific tools, APIs, and services provided by their chosen cloud vendor. This vendor lock-in can make it difficult to switch providers or migrate to different platforms in the future. Organizations may face challenges in terms of portability, interoperability, and cost when considering a change in their cloud ML provider. It is important to carefully evaluate vendor offerings, consider long-term strategic goals, and plan for potential migration scenarios to minimize the risks associated with vendor lock-in.

Addressing these challenges requires careful planning, architectural design, and risk mitigation strategies. Organizations need to weigh the benefits of Cloud ML against the potential challenges and make informed decisions based on their specific requirements, data sensitivity, and business objectives. By proactively addressing these challenges, organizations can effectively leverage the power of Cloud ML while ensuring data privacy, security, cost-effectiveness, and overall system reliability.

2.2.4 Example Use Cases

Cloud ML has found widespread adoption across various domains, revolutionizing the way businesses operate and users interact with technology. Let’s explore some notable examples of Cloud ML in action:

Virtual Assistants

Cloud ML plays a crucial role in powering virtual assistants like Siri and Alexa. These systems leverage the immense computational capabilities of the cloud to process and analyze voice inputs in real-time. By harnessing the power of natural language processing and machine learning algorithms, virtual assistants can understand user queries, extract relevant information, and generate intelligent and personalized responses. The cloud’s scalability and processing power enable these assistants to handle a vast number of user interactions simultaneously, providing a seamless and responsive user experience.

Recommendation Systems

Cloud ML forms the backbone of advanced recommendation systems used by platforms like Netflix and Amazon. These systems use the cloud’s ability to process and analyze massive datasets to uncover patterns, preferences, and user behavior. By leveraging collaborative filtering and other machine learning techniques, recommendation systems can offer personalized content or product suggestions tailored to each user’s interests. The cloud’s scalability allows these systems to continuously update and refine their recommendations based on the ever-growing amount of user data, enhancing user engagement and satisfaction.

Fraud Detection

In the financial industry, Cloud ML has revolutionized fraud detection systems. By leveraging the cloud’s computational power, these systems can analyze vast amounts of transactional data in real-time to identify potential fraudulent activities. Machine learning algorithms trained on historical fraud patterns can detect anomalies and suspicious behavior, enabling financial institutions to take proactive measures to prevent fraud and minimize financial losses. The cloud’s ability to process and store large volumes of data makes it an ideal platform for implementing robust and scalable fraud detection systems.

Personalized User Experiences

Cloud ML is deeply integrated into our online experiences, shaping the way we interact with digital platforms. From personalized ads on social media feeds to predictive text features in email services, Cloud ML powers smart algorithms that enhance user engagement and convenience. It enables e-commerce sites to recommend products based on a user’s browsing and purchase history, fine-tunes search engines to deliver accurate and relevant results, and automates the tagging and categorization of photos on platforms like Facebook. By leveraging the cloud’s computational resources, these systems can continuously learn and adapt to user preferences, providing a more intuitive and personalized user experience.

Security and Anomaly Detection

Cloud ML plays a role in bolstering user security by powering anomaly detection systems. These systems continuously monitor user activities and system logs to identify unusual patterns or suspicious behavior. By analyzing vast amounts of data in real-time, Cloud ML algorithms can detect potential cyber threats, such as unauthorized access attempts, malware infections, or data breaches. The cloud’s scalability and processing power enable these systems to handle the increasing complexity and volume of security data, providing a proactive approach to protecting users and systems from potential threats.

2.3 Edge ML

2.3.1 Characteristics

Definition of Edge ML

Edge Machine Learning (Edge ML) runs machine learning algorithms directly on endpoint devices or closer to where the data is generated rather than relying on centralized cloud servers. This approach brings computation closer to the data source, reducing the need to send large volumes of data over networks, often resulting in lower latency and improved data privacy.

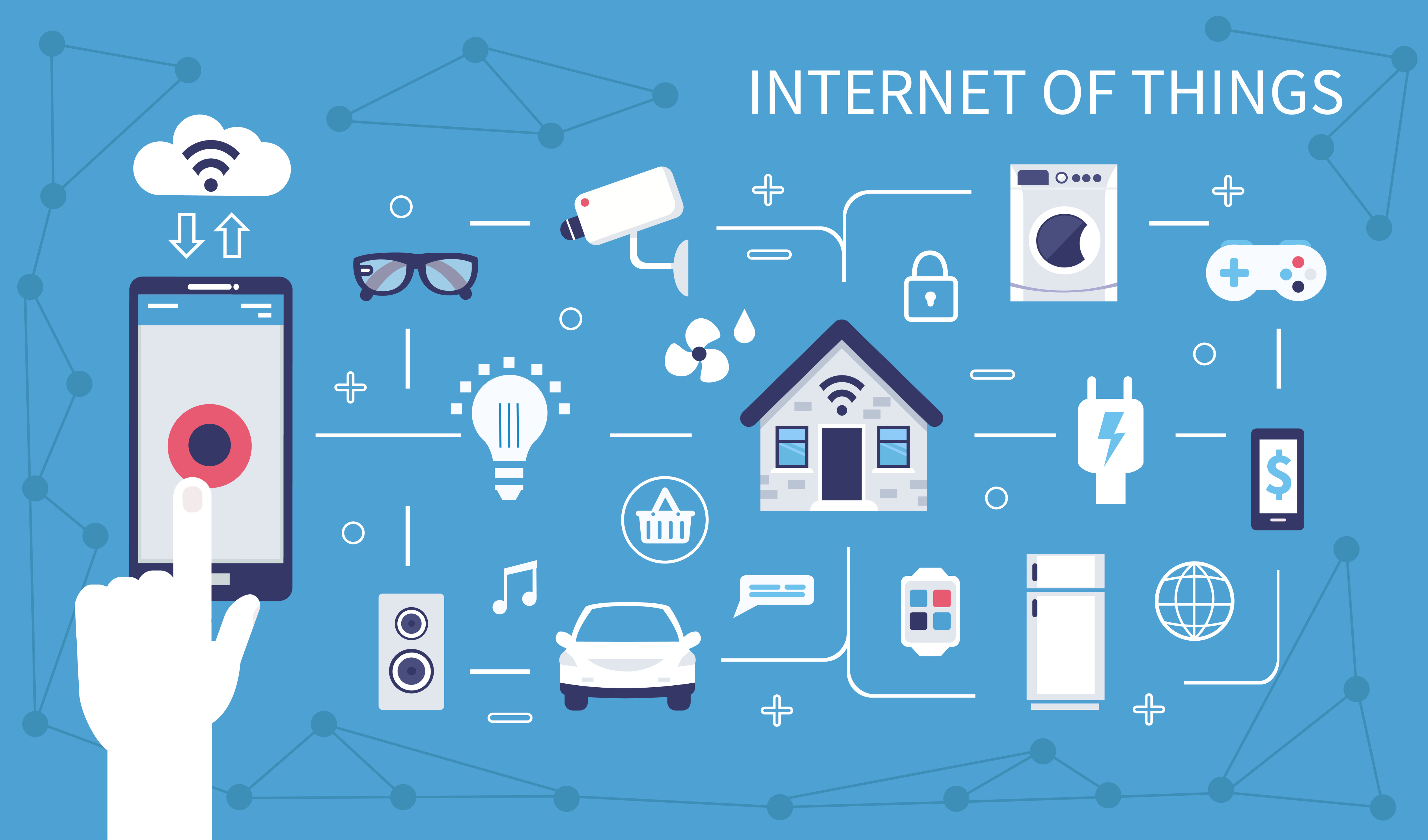

Decentralized Data Processing

In Edge ML, data processing happens in a decentralized fashion. Instead of sending data to remote servers, the data is processed locally on devices like smartphones, tablets, or Internet of Things (IoT) devices (Figure 2.4). This local processing allows devices to make quick decisions based on the data they collect without relying heavily on a central server’s resources. This decentralization is particularly important in real-time applications where even a slight delay can have significant consequences.

Local Data Storage and Computation

Local data storage and computation are key features of Edge ML. This setup ensures that data can be stored and analyzed directly on the devices, thereby maintaining the privacy of the data and reducing the need for constant internet connectivity. Moreover, this often leads to more efficient computation, as data doesn’t have to travel long distances, and computations are performed with a more nuanced understanding of the local context, which can sometimes result in more insightful analyses.

2.3.2 Benefits

Reduced Latency

One of Edge ML’s main advantages is the significant latency reduction compared to Cloud ML. This reduced latency can be a critical benefit in situations where milliseconds count, such as in autonomous vehicles, where quick decision-making can mean the difference between safety and an accident.

Enhanced Data Privacy

Edge ML also offers improved data privacy, as data is primarily stored and processed locally. This minimizes the risk of data breaches that are more common in centralized data storage solutions. Sensitive information can be kept more secure, as it’s not sent over networks that could be intercepted.

Lower Bandwidth Usage

Operating closer to the data source means less data must be sent over networks, reducing bandwidth usage. This can result in cost savings and efficiency gains, especially in environments where bandwidth is limited or costly.
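To get a feel for the scale of the savings, the sketch below compares streaming raw sensor data to a server with sending only the results of local inference. The audio format, event count, and message size are hypothetical assumptions, and the helper names are our own.

```python
def raw_stream_bytes(sample_rate_hz: int, bytes_per_sample: int, seconds: int) -> int:
    """Bytes sent if every raw sample is uploaded."""
    return sample_rate_hz * bytes_per_sample * seconds


def result_bytes(num_events: int, bytes_per_event: int) -> int:
    """Bytes sent if only inference results (events) are uploaded."""
    return num_events * bytes_per_event


SECONDS_PER_DAY = 24 * 60 * 60

# Hypothetical workload: 16 kHz, 16-bit audio versus 100 small event messages per day.
raw = raw_stream_bytes(sample_rate_hz=16_000, bytes_per_sample=2, seconds=SECONDS_PER_DAY)
summaries = result_bytes(num_events=100, bytes_per_event=32)

print(f"raw stream: {raw / 1e9:.2f} GB per day")
print(f"results only: {summaries / 1e3:.1f} kB per day")
print(f"reduction: {raw // summaries:,}x")
```

The exact ratio depends entirely on the assumed workload; the point is only that transmitting decisions instead of data can shrink traffic by several orders of magnitude.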

2.3.3 Challenges

Limited Computational Resources Compared to Cloud ML

However, Edge ML has its challenges. One of the main concerns is the limited computational resources compared to cloud-based solutions. Endpoint devices typically have far less processing power and storage capacity than cloud servers, which limits the complexity of the machine learning models that can be deployed.

Complexity in Managing Edge Nodes

Managing a network of edge nodes can introduce complexity, especially regarding coordination, updates, and maintenance. Ensuring all nodes operate seamlessly and are up-to-date with the latest algorithms and security protocols can be a logistical challenge.

Security Concerns at the Edge Nodes

While Edge ML offers enhanced data privacy, edge nodes can sometimes be more vulnerable to physical and cyber-attacks. Developing robust security protocols that protect data at each node without compromising the system’s efficiency remains a significant challenge in deploying Edge ML solutions.

2.3.4 Example Use Cases

Edge ML has many applications, from autonomous vehicles and smart homes to industrial Internet of Things (IoT). These examples were chosen to highlight scenarios where real-time data processing, reduced latency, and enhanced privacy are not just beneficial but often critical to the operation and success of these technologies. They demonstrate the role that Edge ML can play in driving advancements in various sectors, fostering innovation, and paving the way for more intelligent, responsive, and adaptive systems.

Autonomous Vehicles

Autonomous vehicles stand as a prime example of Edge ML’s potential. These vehicles rely heavily on real-time data processing to navigate and make decisions. Localized machine learning models assist in quickly analyzing data from various sensors to make immediate driving decisions, ensuring safety and smooth operation.

Smart Homes and Buildings

Edge ML plays a crucial role in efficiently managing various systems in smart homes and buildings, from lighting and heating to security. By processing data locally, these systems can operate more responsively and harmoniously with the occupants’ habits and preferences, creating a more comfortable living environment.

Industrial IoT

The Industrial IoT leverages Edge ML to monitor and control complex industrial processes. Here, machine learning models can analyze data from numerous sensors in real-time, enabling predictive maintenance, optimizing operations, and enhancing safety measures. This revolution in industrial automation and efficiency is transforming manufacturing and production across various sectors.

The applicability of Edge ML is vast and not limited to these examples. Various other sectors, including healthcare, agriculture, and urban planning, are exploring and integrating Edge ML to develop innovative solutions responsive to real-world needs and challenges, heralding a new era of smart, interconnected systems.

2.4 TinyML

2.4.1 Characteristics

Definition of TinyML

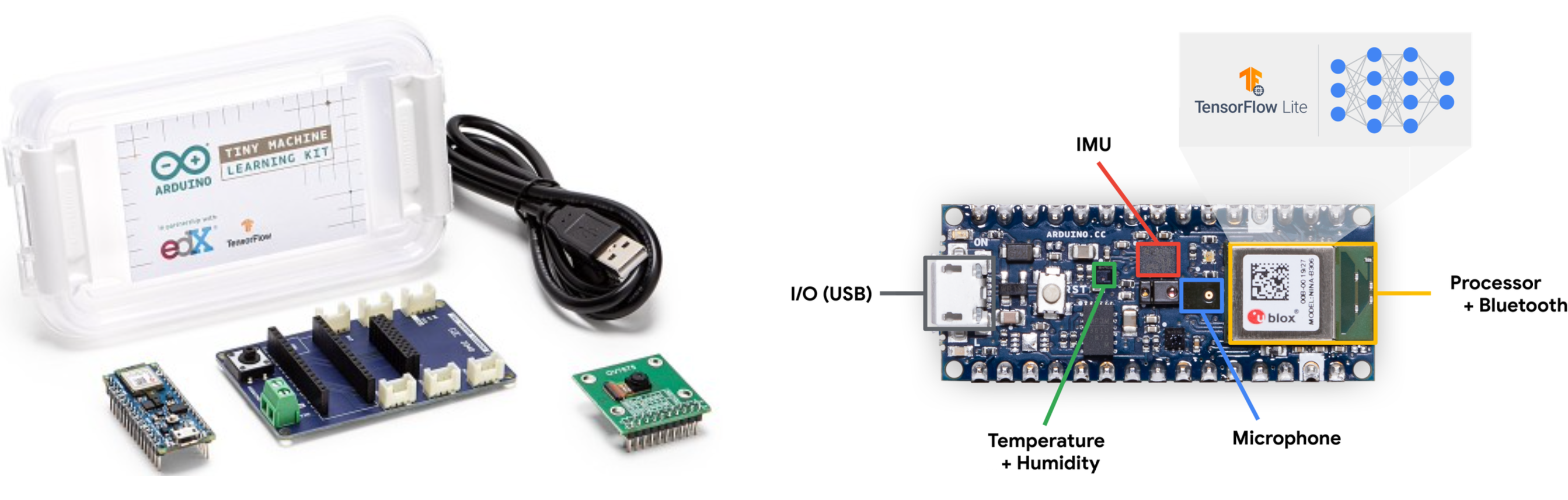

TinyML sits at the crossroads of embedded systems and machine learning, representing a burgeoning field that brings smart algorithms directly to tiny microcontrollers and sensors. These microcontrollers operate under severe resource constraints, particularly regarding memory, storage, and computational power (see a TinyML kit example in Figure 2.5).

On-Device Machine Learning

In TinyML, the focus is on on-device machine learning. This means that machine learning models are deployed and executed directly on the device, so inference does not depend on external servers or cloud infrastructure. This allows TinyML to enable intelligent decision-making right where the data is generated, making real-time insights and actions possible, even in settings where connectivity is limited or unavailable.

Low Power and Resource-Constrained Environments

TinyML excels in low-power and resource-constrained settings. These environments require highly optimized solutions that function within the available resources. TinyML meets this need through specialized algorithms and models designed to deliver decent performance while consuming minimal energy, thus ensuring extended operational periods, even in battery-powered devices.

Get ready to bring machine learning to the smallest of devices! In the embedded machine learning world, TinyML is where resource constraints meet ingenuity. This Colab notebook will walk you through building a gesture recognition model designed for an Arduino board. You’ll learn how to train a small but effective neural network, optimize it for minimal memory usage, and deploy it to your microcontroller. If you’re excited about making everyday objects smarter, this is where it begins!

2.4.2 Benefits

Extremely Low Latency

One of the standout benefits of TinyML is its ability to offer ultra-low latency. Since computation occurs directly on the device, the time required to send data to external servers and receive a response is eliminated. This is crucial in applications requiring immediate decision-making, enabling quick responses to changing conditions.

High Data Security

TinyML inherently enhances data security. Because data processing and analysis happen on the device, the risk of data interception during transmission is virtually eliminated. This localized approach to data management ensures that sensitive information stays on the device, strengthening user data security.

Energy Efficiency

TinyML operates within an energy-efficient framework, a necessity given its resource-constrained environments. By employing lean algorithms and optimized computational methods, TinyML ensures that devices can execute complex tasks without rapidly depleting battery life, making it a sustainable option for long-term deployments.
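One common way to reason about battery life is to average the current drawn across active and sleep phases. The sketch below does this for a duty-cycled device; the current draws, duty cycle, and battery capacity are hypothetical values, and the estimate ignores effects such as battery self-discharge and regulator losses.

```python
def average_current_ma(active_ma: float, sleep_ma: float, duty_cycle: float) -> float:
    """Time-weighted average current for a device active `duty_cycle` of the time."""
    return duty_cycle * active_ma + (1.0 - duty_cycle) * sleep_ma


def battery_life_hours(capacity_mah: float, current_ma: float) -> float:
    """Idealized runtime: capacity divided by average current."""
    return capacity_mah / current_ma


# Hypothetical device: coin-cell-sized battery, awake 1% of the time.
CAPACITY_MAH = 220.0
ACTIVE_MA = 10.0   # assumed draw while sampling and running inference
SLEEP_MA = 0.01    # assumed draw while asleep

avg_ma = average_current_ma(ACTIVE_MA, SLEEP_MA, duty_cycle=0.01)
duty_cycled_hours = battery_life_hours(CAPACITY_MAH, avg_ma)
always_on_hours = battery_life_hours(CAPACITY_MAH, ACTIVE_MA)

print(f"always on:   {always_on_hours:.0f} hours")
print(f"duty-cycled: {duty_cycled_hours / 24:.0f} days")
```

With these assumed figures, waking up only briefly to run inference stretches the runtime from under a day to a couple of months, which is why lean models that finish quickly matter so much on battery-powered devices.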

2.4.3 Challenges

Limited Computational Capabilities

However, the shift to TinyML comes with its own set of hurdles. The primary limitation is the devices’ constrained computational capabilities. Operating within such limits means that deployed models must be simplified, which can reduce the accuracy and sophistication of the solutions.

Complex Development Cycle

TinyML also introduces a complicated development cycle. Crafting lightweight and effective models demands a deep understanding of machine learning principles and expertise in embedded systems. This complexity calls for a collaborative development approach, where multi-domain expertise is essential for success.

Model Optimization and Compression

A central challenge in TinyML is model optimization and compression. Creating machine learning models that can operate effectively within the limited memory and computational power of microcontrollers requires innovative approaches to model design. Developers often face the challenge of striking a delicate balance and optimizing models to maintain effectiveness while fitting within stringent resource constraints.
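One widely used compression step is quantization, which stores values as 8-bit integers instead of 32-bit floats. The following is a minimal pure-Python sketch of affine (asymmetric) int8 quantization applied to a short list of made-up weights. It illustrates the idea only; production toolchains add details such as per-channel scales and calibration data that are omitted here, and the function names are our own.

```python
def quantize_int8(values):
    """Map floats to int8 using a single scale and zero point (affine quantization)."""
    lo = min(min(values), 0.0)  # widen the range so 0.0 is exactly representable
    hi = max(max(values), 0.0)
    scale = (hi - lo) / 255 or 1.0  # fall back to 1.0 if all values are zero
    zero_point = round(-128 - lo / scale)
    quantized = [max(-128, min(127, round(v / scale) + zero_point)) for v in values]
    return quantized, scale, zero_point


def dequantize(quantized, scale, zero_point):
    """Recover approximate floats from the int8 codes."""
    return [(q - zero_point) * scale for q in quantized]


# Made-up weights for illustration.
weights = [-1.0, -0.5, 0.0, 0.25, 1.0]
codes, scale, zero_point = quantize_int8(weights)
restored = dequantize(codes, scale, zero_point)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print("int8 codes:", codes)
print(f"scale={scale:.5f}, zero_point={zero_point}, max error={max_error:.5f}")
```

Each weight now occupies one byte instead of four, at the cost of a reconstruction error of at most about one quantization step. Whether that error is acceptable depends on the model, which is the balance between effectiveness and resource constraints described above.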

2.4.4 Example Use Cases

Wearable Devices

In wearables, TinyML opens the door to smarter, more responsive gadgets. From fitness trackers offering real-time workout feedback to smart glasses processing visual data on the fly, TinyML transforms how we engage with wearable tech, delivering personalized experiences directly from the device.

Predictive Maintenance

In industrial settings, TinyML plays a significant role in predictive maintenance. By deploying TinyML algorithms on sensors that monitor equipment health, companies can preemptively identify potential issues, reducing downtime and preventing costly breakdowns. On-site data analysis ensures quick responses, potentially stopping minor issues from becoming major problems.

Anomaly Detection

TinyML can be employed to create anomaly detection models that identify unusual data patterns. For instance, a smart factory could use TinyML to monitor industrial processes and spot anomalies, helping prevent accidents and improve product quality. Similarly, a security company could use TinyML to monitor network traffic for unusual patterns, aiding in detecting and preventing cyber-attacks. In healthcare, TinyML could monitor patient data for anomalies, aiding early disease detection and better patient treatment.
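As a rough illustration of on-device anomaly detection, the sketch below flags sensor readings that deviate strongly from a running mean. It uses a simple statistical rule (a z-score threshold with Welford's online variance update) rather than a trained neural network, chosen here because it needs only three numbers of state. The class name, threshold, warm-up length, and readings are all hypothetical.

```python
import math


class StreamingAnomalyDetector:
    """Flags readings far from the running mean, using constant memory."""

    def __init__(self, threshold: float = 3.0, warmup: int = 10):
        self.threshold = threshold  # allowed deviation, in standard deviations
        self.warmup = warmup        # readings to observe before flagging anything
        self.count = 0
        self.mean = 0.0
        self.m2 = 0.0               # running sum of squared deviations

    def update(self, x: float) -> bool:
        """Return True if x looks anomalous; otherwise fold it into the statistics."""
        if self.count >= self.warmup:
            std = math.sqrt(self.m2 / (self.count - 1))
            if std > 0 and abs(x - self.mean) > self.threshold * std:
                return True  # anomalous readings are not added to the statistics
        # Welford's online update of mean and variance
        self.count += 1
        delta = x - self.mean
        self.mean += delta / self.count
        self.m2 += delta * (x - self.mean)
        return False


# Made-up temperature readings with one obvious outlier at the end.
readings = [20.0, 20.1, 19.9, 20.2, 19.8] * 4 + [35.0]
detector = StreamingAnomalyDetector()
flags = [detector.update(r) for r in readings]
print("anomalous readings:", [r for r, f in zip(readings, flags) if f])
```

A deployed system would more likely pair a learned model with application-specific thresholds, but the constant-memory structure shown here reflects the kind of constraint a microcontroller imposes.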

Environmental Monitoring

In environmental monitoring, TinyML enables real-time data analysis from various field-deployed sensors. These could range from city air quality monitoring to wildlife tracking in protected areas. Through TinyML, data can be processed locally, allowing for quick responses to changing conditions and providing a nuanced understanding of environmental patterns, crucial for informed decision-making.

In summary, TinyML serves as a trailblazer in the evolution of machine learning, fostering innovation across various fields by bringing intelligence directly to the edge. Its potential to transform our interaction with technology and the world is immense, promising a future where devices are connected, intelligent, and capable of making real-time decisions and responses.

2.5 Comparison

Up to this point, we’ve explored each of the different ML variants individually. Now, let’s bring them all together for a comprehensive view. Table 2.1 offers a comparative analysis of Cloud ML, Edge ML, and TinyML based on various features and aspects. This comparison provides a clear perspective on the unique advantages and distinguishing factors, aiding in making informed decisions based on the specific needs and constraints of a given application or project.

| Aspect | Cloud ML | Edge ML | TinyML |
|---|---|---|---|
| Processing Location | Centralized servers (Data Centers) | Local devices (closer to data sources) | On-device (microcontrollers, embedded systems) |
| Latency | High (Depends on internet connectivity) | Moderate (Reduced latency compared to Cloud ML) | Low (Immediate processing without network delay) |
| Data Privacy | Moderate (Data transmitted over networks) | High (Data remains on local networks) | Very High (Data processed on-device, not transmitted) |
| Computational Power | High (Utilizes powerful data center infrastructure) | Moderate (Utilizes local device capabilities) | Low (Limited to the power of the embedded system) |
| Energy Consumption | High (Data centers consume significant energy) | Moderate (Less than data centers, more than TinyML) | Low (Highly energy-efficient, designed for low power) |
| Scalability | High (Easy to scale with additional server resources) | Moderate (Depends on local device capabilities) | Low (Limited by the hardware resources of the device) |
| Cost | High (Recurring costs for server usage, maintenance) | Variable (Depends on the complexity of local setup) | Low (Primarily upfront costs for hardware components) |
| Connectivity Dependence | High (Requires stable internet connectivity) | Low (Can operate with intermittent connectivity) | Very Low (Can operate without any network connectivity) |
| Real-time Processing | Moderate (Can be affected by network latency) | High (Capable of real-time processing locally) | Very High (Immediate processing with minimal latency) |
| Application Examples | Big Data Analysis, Virtual Assistants | Autonomous Vehicles, Smart Homes | Wearables, Sensor Networks |
| Complexity | Moderate to High (Requires knowledge in cloud computing) | Moderate (Requires knowledge in local network setup) | Moderate to High (Requires expertise in embedded systems) |
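The comparison above can be turned into a first-pass screening rule. The sketch below is a toy heuristic, not a recommended procedure: the `Requirements` fields, the `suggest_paradigm` function, and every threshold are hypothetical and would need to be replaced with figures from the actual application and hardware.

```python
from dataclasses import dataclass


@dataclass
class Requirements:
    needs_offline: bool      # must the system work without a network connection?
    max_latency_ms: float    # response deadline
    power_budget_mw: float   # power available to the device running inference
    model_size_mb: float     # size of the model to deploy


def suggest_paradigm(req: Requirements) -> str:
    """Very rough screening based on the comparison table; thresholds are made up."""
    # Tiny power budget and a model small enough for a microcontroller
    if req.power_budget_mw < 100 and req.model_size_mb <= 1:
        return "TinyML"
    # Offline operation or tight deadlines favor local processing
    if req.needs_offline or req.max_latency_ms < 100:
        return "Edge ML"
    # Otherwise the cloud's compute and scalability are available
    return "Cloud ML"


examples = {
    "wearable sensor": Requirements(True, 20, 5, 0.2),
    "smart camera": Requirements(True, 30, 5_000, 20),
    "batch analytics": Requirements(False, 2_000, 1_000_000, 500),
}
for name, req in examples.items():
    print(f"{name}: {suggest_paradigm(req)}")
```

In practice the choice involves more dimensions than this, including cost, privacy requirements, and team expertise, and many systems combine paradigms rather than picking one.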

2.6 Conclusion

In this chapter, we’ve offered a panoramic view of the evolving landscape of machine learning, covering cloud, edge, and tiny ML paradigms. Cloud-based machine learning leverages the immense computational resources of cloud platforms to enable powerful and accurate models but comes with limitations, including latency and privacy concerns. Edge ML mitigates these limitations by bringing inference directly to edge devices, offering lower latency and reduced connectivity needs. TinyML takes this further by miniaturizing ML models to run directly on highly resource-constrained devices, opening up a new category of intelligent applications.

Each approach has its tradeoffs, including model complexity, latency, privacy, and hardware costs. Over time, we anticipate that these embedded ML approaches will converge, with cloud pre-training facilitating more sophisticated edge and tiny ML implementations. Advances like federated learning and on-device learning will enable embedded devices to refine their models by learning from real-world data.

The embedded ML landscape is rapidly evolving and poised to enable intelligent applications across a broad spectrum of devices and use cases. This chapter serves as a snapshot of the current state of embedded ML. As algorithms, hardware, and connectivity continue to improve, we can expect embedded devices of all sizes to become increasingly capable, unlocking transformative new applications for artificial intelligence.

2.7 Resources

Here is a curated list of resources to support students and instructors in their learning and teaching journeys. We are continuously working on expanding this collection and will be adding new exercises soon.

These slides are a valuable tool for instructors to deliver lectures and for students to review the material at their own pace. We encourage students and instructors to leverage these slides to improve their understanding and facilitate effective knowledge transfer.

- Coming soon.

To reinforce the concepts covered in this chapter, we have curated a set of exercises that challenge students to apply their knowledge and deepen their understanding.

- Coming soon.

In addition to exercises, we offer a series of hands-on labs allowing students to gain practical experience with embedded AI technologies. These labs provide step-by-step guidance, enabling students to develop their skills in a structured and supportive environment. We are excited to announce that new labs will be available soon, further enriching the learning experience.

- Coming soon.